Established container solutions in Linux

Old Hands

When talk turns to Linux and container virtualization, the name you nearly always hear is Docker. Docker has managed to plant its name firmly in administrators' field of view and in IT strategies. In fact, you might gain the impression that Docker invented container virtualization for Linux systems. However, container virtualization has been around for about 15 years and has been in production use for a long time. In this article, I reveal why containers are so attractive to administrators and describe the fundamental approaches that vie for their attention.

Containers vs. Full Virtualization

Virtualization comes in different flavors. In one camp are full virtualizers, in the form of virtual computers, that give you a complete system with a virtual BIOS that looks like a physical server to the software running on it. Under normal circumstances, the software on the VM doesn't even notice that it is not running on metal. Today, administrators typically choose this approach. Paravirtualization in particular has helped popularize full virtualization. In paravirtualization, the virtualizer makes better use of the host's hardware, thus resulting in better performance.

Full virtualization has one major disadvantage, however: Because this approach always simulates a complete computer, it generates more overhead. To put it another way, by running multiple virtual machines (e.g., with KVM and Qemu or Xen), the host spends resources on simulation that would otherwise be available to the virtualized computers.

On a small scale, this effect is not particularly tragic, but the problem scales with the environment. Cloud service providers in particular are affected: The more virtual machines they have running in a setup (and need to manage), the greater the overhead. Therefore, the density of virtual machines per host or rack is limited, which affects the planning of large-scale data centers and explains why administrators are so grateful for container virtualization.

With containers, the host does not emulate a complete computer; a container has no virtual BIOS and no kernel of its own. All the containers on the system share the same operating system kernel, access the hardware directly, and are managed by the host kernel. This eliminates almost entirely the overhead caused by full virtualization.

Kernel Dependence

Critics maintain that containers cannot represent genuine virtualization. After all, only the filesystem is virtual in the case of a container. Everything else relies on the host kernel functions to ensure that the various containers do not battle each other for resources and work as desired. In the past, these functions regularly gave rise to heated debate in the kernel developer camp.

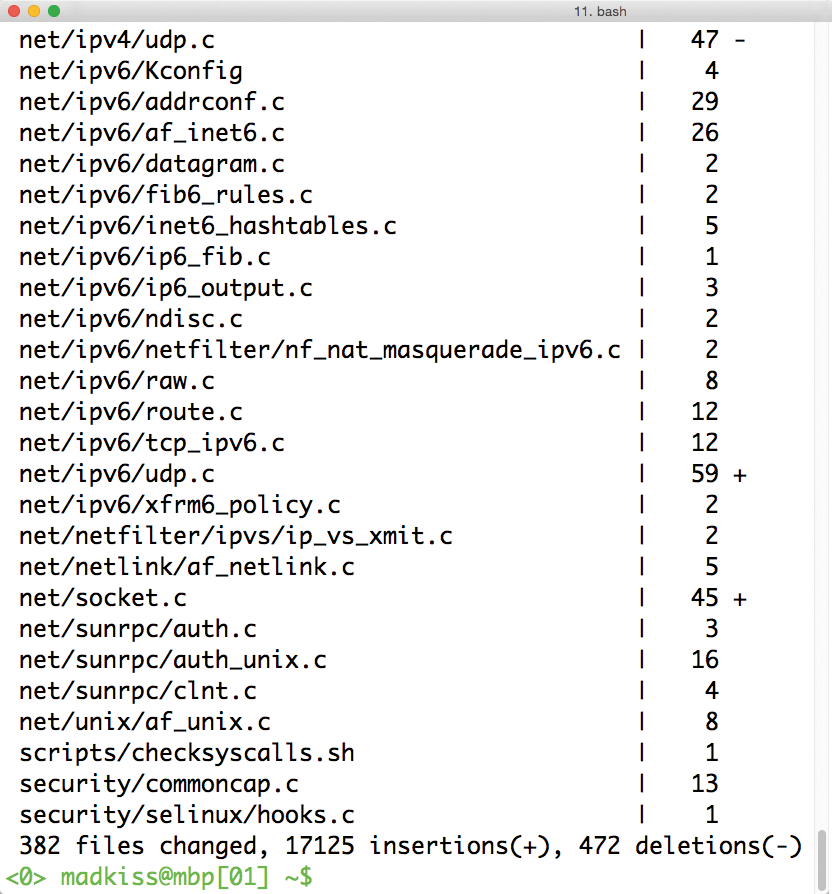

Several years ago, OpenVZ, whose commercial variant was later dubbed Virtuozzo, was launched as one of the first container solutions for Linux. At the time, container virtualization was not a big topic for the Linux kernel, which completely lacked the functionality to support it.

OpenVZ overcame this deficiency with its own patched Frankenstein kernel, which was absolutely necessary if you wanted to use OpenVZ containers on the host. Many attempts to integrate the OpenVZ patches into the kernel failed for technical reasons. Finally, parts of OpenVZ became the basis for cgroups and network namespaces, which today provide a framework for containers on Linux. To this day, OpenVZ still needs its own patched kernel if admins want to use the full functionality provided by the software.

The free Linux operating system was pretty much a latecomer in terms of a framework to implement containers in the kernel; in particular, FreeBSD offered its own container implementations at an early stage. (See "The Bigger Picture" for information about containers on other Unix-style operating systems.) LXC was the first solution to offer containers based on cgroups, an approach that is generally regarded as reliable, and practically all of today's container solutions for Linux are based on cgroups and the kernel's namespaces for networks and processes.

From the outset, Docker set out to leverage the kernel's functionality for its containers. Other solutions, such as Linux-VServer, still do very much their own thing, which tends to make using them quite complicated.

Linux-VServer

The prize for the first container-style solution for Linux goes to the Linux-VServer project: As early as 2001, the project went public with kernel patches (Figure 2). At the time, the idea was revolutionary in numerous respects – even full virtualizers like Xen or KVM were miles away from the success that they currently enjoy.

For most users at the time, a server was simply metal that lived in a server cabinet at the data center. Although the hardware then was far less powerful than today's servers, the requirements imposed on the server were also considerably lower: Web 1.0 was just ramping up to speed, with no sign of Web 2.0. Websites with Flash were spectacular and the exception rather than the rule.

At the time, not everybody needed their own website, and especially not their own (virtual) server. People who wanted to run a website or a server quite frequently relied on free hosting offerings that existed at the time.

It was precisely these web hosting providers that first had the idea of using containers, even though they didn't use this term at the time. Driven by the need to host multiple customers on a physical server at the same time (instead of buying a separate server for each customer), they started creating containers.

The idea of Linux-VServer was born. The intent was for individual customers to have their own filesystems while sharing the hardware with other customers. Come what may, no user was to be able to escape from their own Linux-VServer and see what other customers were doing on the same hardware.

The result of the development efforts was a fairly large kernel patch that is still needed today to use Linux-VServer meaningfully. Remember that when Linux-VServer started to get up to speed, the Linux kernel showed no sign of any appropriate functionality. Although that's no longer true, the Linux-VServer developers have not yet made any attempt to modify their project to reflect the changed circumstances. If you want a Linux-VServer-capable kernel, you typically have no alternative but to build it yourself. That said, the developers do provide a patch for Linux 3.18 on their website [2].

Although Linux-VServer setups are still in use and actively maintained today, there is no concealing the fact that the project website looks fairly dated, and the last posts in the news section or wiki are a couple of years old.

At the end of the day, Linux-VServer seems to be somewhat behind the times. The overhead of patching the kernel does not pay dividends, and administrators would do better to choose one of the solutions that is based on kernel functionality.

Virtuozzo

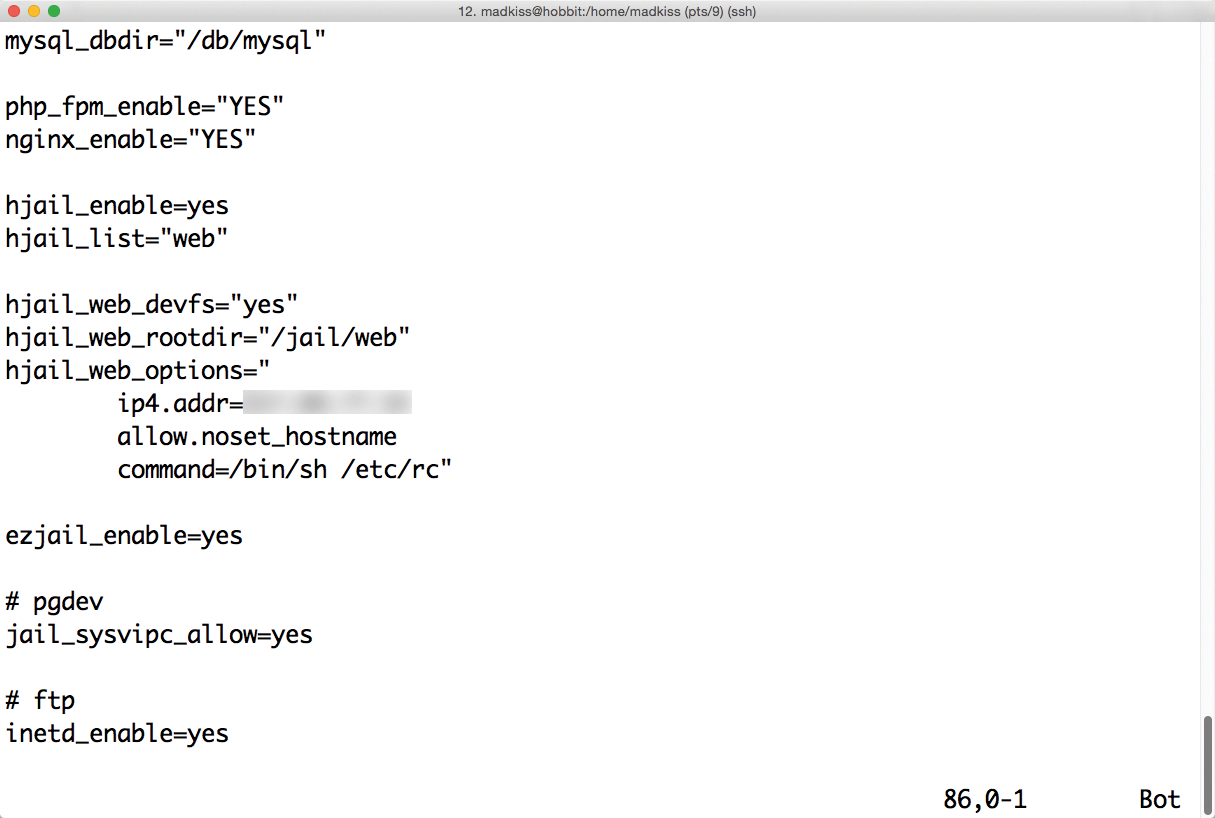

Virtuozzo (previously known as OpenVZ; Figure 3) was also a pioneer in terms of containers for Linux. Although it took far longer than Linux-VServer to establish its market niche, the approach worked well once it did: OpenVZ, which later gained commercial branches, soon established a large community following. SWsoft (which later acquired Parallels and changed its company name accordingly) was able to offer users a complete virtualization solution based on Linux for the first time, including tools and kernel patches.

If you do set up Virtuozzo in your data center, you can rely on a package that leaves nothing to be desired. Immediately after the install, the distribution asks you if you want to create containers – virtual private servers (VPS) or virtual environments (VEs).

Virtuozzo, like Linux-VServer, originated in an age when the Linux kernel did not have the required virtualization infrastructure in place, so a patched kernel is still required for Virtuozzo releases even now.

From a technical point of view, the developers have done some amazing work. CPU and I/O quotas can be defined for containers so that an application running wild in a container does not compromise the host and take down containers operated by other users. Each container is given its own network stack, for which you can define separate firewall rules; however, you do have to configure this explicitly.

Users in a container cannot access any other system resources. Process IDs are virtualized; each container has an init process with an ID of 1. Users in the container do not see which processes are running in VEs operated by other users. The same thing applies to accessing system libraries or the files in /dev, /proc, and /sys.

The Never-Ending Kernel Story

Virtuozzo was a commercial top seller for many years, and the open source version of Virtuozzo and Parallels Cloud Server (as the project was renamed later) shared parts of the same code. The commercial Parallels offering contained several components that were missing from the open source solution.

In 2014, the powers that be decided to merge the free and commercial components and release them as a completely free product in the future. However, this step probably wasn't entirely motivated by management suddenly discovering their passion for free software. After all, maintaining OpenVZ was a labor of Sisyphus for many years.

The reason for all this work is the separate kernel patch, which Virtuozzo, like Linux-VServer, needs. Although the developers started rewriting parts of the required functions and merging them with the Linux kernel – for example, parts of the cgroups implementation were realized under their lead – Virtuozzo still has not fully broken with its custom functions to this day. You still need to patch the current kernel for everything to work.

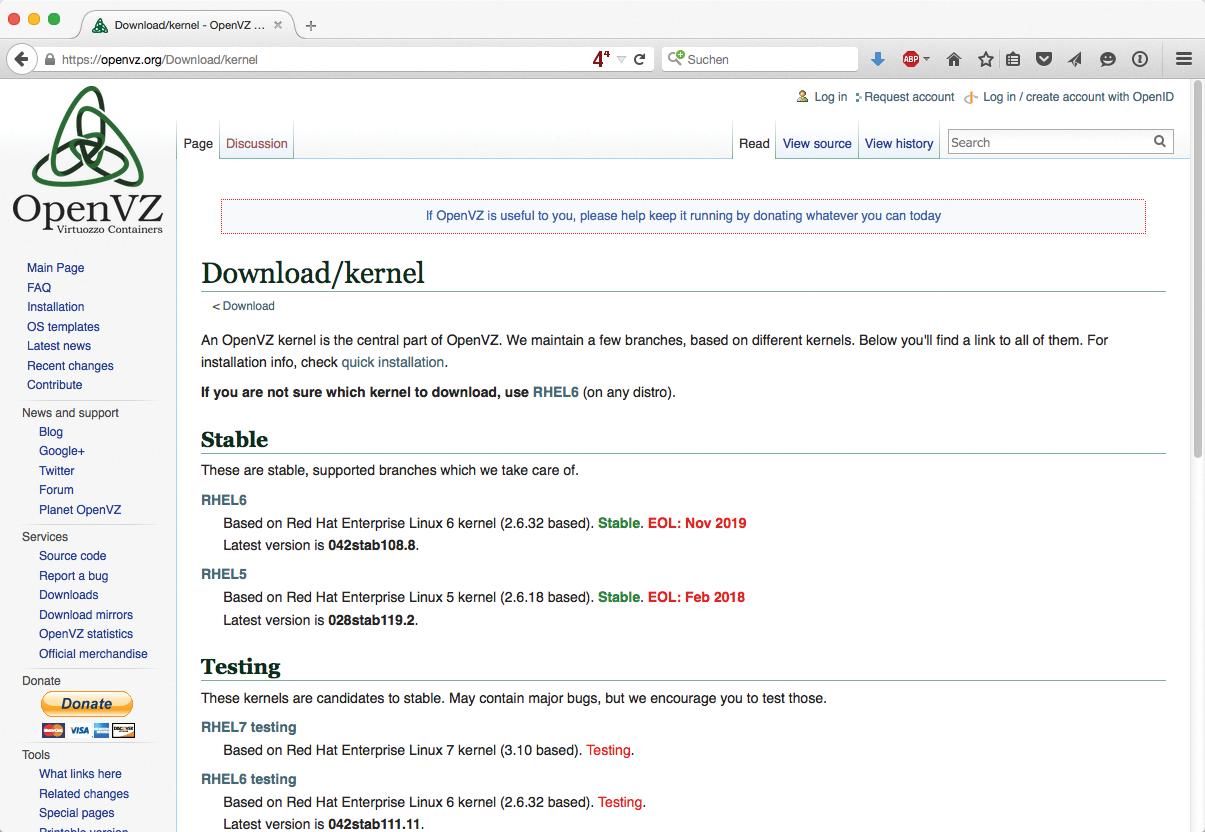

Parallels Cloud Server still relies on Red Hat Enterprise Linux (RHEL) 6 and comes with Linux 2.6.32, albeit in Red Hat's Frankenstein incarnation. An old kernel like this can fail on state-of-the-art hardware, so Virtuozzo is hardly in the running for new deployments. At least a beta version is now based on RHEL 7 and fully complies with FL/OSS rules. Whether today's vendor, Odin, will manage to regain lost trust in the product remains doubtful [3].

LXC

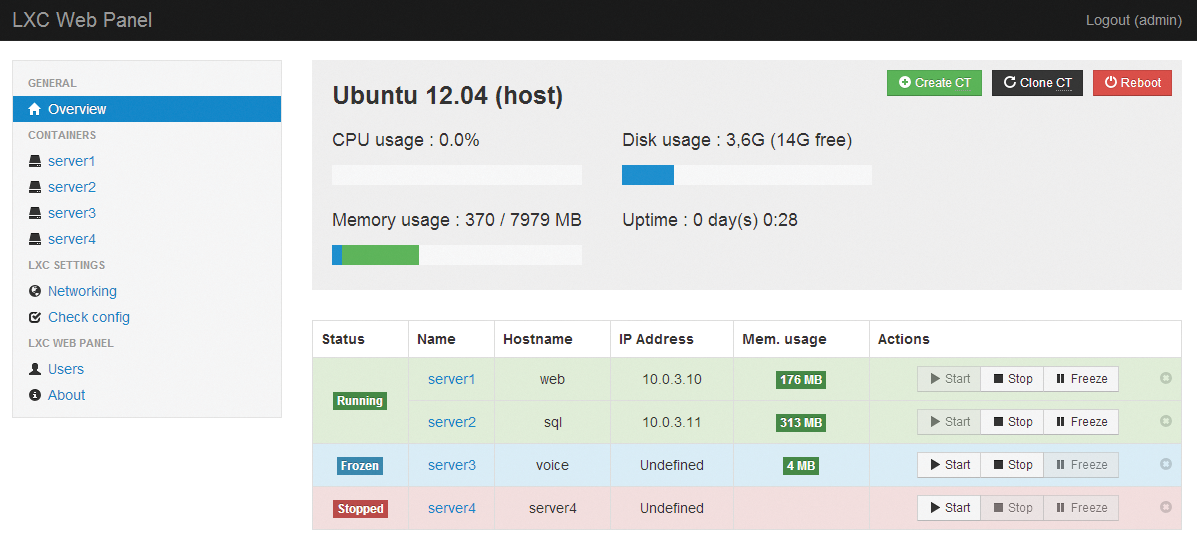

One of the reasons Virtuozzo has a hard row to hoe is that LXC [4] (Figure 4) already provides a complete container solution that can be deployed immediately with any plain vanilla kernel. That it was the OpenVZ developers, with their various patches, who made LXC in its original form possible is possibly one of the greatest ironies of history.

LXC containers officially saw the light of day in 2008. At the time, Linux 2.6.24 had just been released. A few kernel releases before this, the functions required for LXC containers had already made their way into the kernel. Cgroups (control groups) is a kernel interface that makes it possible to limit and account for the resources (e.g., CPU time, memory, and I/O) that groups of processes on a Linux system may use. Cgroups originally went by the name "process containers" because they supported operations with individual processes in virtual environments that were screened off from the rest of the system.
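To make the idea of a resource limit more concrete, the following minimal Python sketch builds and parses the text that a CPU limit takes in the kernel's cgroup filesystem. It assumes the newer cgroup v2 interface and its `cpu.max` file, which postdates the kernel versions discussed here; the helper names `cpu_max_line` and `parse_cpu_max` are made up for this illustration and are not part of LXC or any other tool.

```python
from typing import Optional

# Assumption: 100 ms is the default scheduling period used by cgroup v2.
DEFAULT_PERIOD_US = 100_000


def cpu_max_line(cpus: Optional[float], period_us: int = DEFAULT_PERIOD_US) -> str:
    """Build the text for a cgroup v2 cpu.max file: '<quota> <period>'.

    cpus is the limit in CPU cores (0.5 = half a core); None means no limit.
    """
    if cpus is None:
        return f"max {period_us}"
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    quota_us = int(round(cpus * period_us))
    return f"{quota_us} {period_us}"


def parse_cpu_max(text: str) -> Optional[float]:
    """Return the CPU limit in cores, or None if the group is unlimited."""
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


if __name__ == "__main__":
    # Half a core: 50,000 microseconds of CPU time per 100,000-microsecond period.
    print(cpu_max_line(0.5))
    print(parse_cpu_max("max 100000"))
```

On a host with cgroup v2 mounted, root would write such a line to the `cpu.max` file of a group below `/sys/fs/cgroup`; the sketch deliberately stops short of touching the filesystem.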

The main initiator was Google, but support for the new technology was soon coming in from various directions. Shortly after the release of the first LXC version, the new technology attracted some initial hype. For the first time, admins had a tool for virtualizing processes on a system without needing a complete virtual machine. In test setups, LXC-based solutions were regularly deployed at the time.

However, they rarely found their way from planning into production, because LXC posed a couple of unanswered questions relating to the security of the individual containers. If you had root privileges in one container, you were able, for a long time, to issue arbitrary commands with root privileges on the host. This did not change until Linux kernel 3.8 was released, which made user namespaces usable for the first time.
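The kernel feature that separates root inside a container from root on the host is the user namespace, which translates user IDs through a mapping table exposed in `/proc/<pid>/uid_map`. The following Python sketch shows how such a table can be read; the function names and the example range starting at 100000 are assumptions chosen for illustration, not values taken from LXC.

```python
from typing import Optional


def map_uid(uid_map_text: str, inside_uid: int) -> Optional[int]:
    """Translate a UID inside a user namespace to the UID outside it.

    Each line of uid_map reads: <first inside ID> <first outside ID> <count>.
    Returns None when the UID has no mapping.
    """
    for line in uid_map_text.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue  # skip blank or malformed lines
        inside_start, outside_start, count = (int(f) for f in fields)
        if inside_start <= inside_uid < inside_start + count:
            return outside_start + (inside_uid - inside_start)
    return None


def read_uid_map(pid: str = "self") -> str:
    """Read /proc/<pid>/uid_map; returns '' where the file does not exist."""
    try:
        with open(f"/proc/{pid}/uid_map") as handle:
            return handle.read()
    except OSError:
        return ""


if __name__ == "__main__":
    # Hypothetical container mapping: UIDs 0-65535 inside map to 100000-165535 outside.
    example = "0 100000 65536"
    print(map_uid(example, 0))  # root inside is an unprivileged user outside
    print(read_uid_map() or "no uid_map available on this system")
```

With a mapping like the hypothetical one above, a process that is root inside the container acts as an ordinary, unprivileged user as far as the host is concerned.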

Kernel 3.8, released in 2013, rounded out the kernel's namespace support, which complements cgroups. Namespaces create virtual network stacks or PID spaces that are strictly segregated from other namespaces and from the main system. If a process is running in one network namespace, it only sees the (completely virtual) network interfaces in that namespace.

Users can only indirectly access the network cards the host uses, and they cannot see them at all. Likewise, users do not see processes that belong to other PID namespaces on the system. Namespaces in combination with cgroups thus offer far stricter segregation of individual containers than was previously the case.
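On a running Linux system, the namespaces a process belongs to are visible as symbolic links below `/proc/<pid>/ns`, with targets such as `net:[4026531992]`. The following Python sketch reads these links and checks whether two processes share a namespace; the function names are made up for this illustration, and the code simply returns empty results on systems without `/proc`.

```python
import os
import re
from typing import Dict, Tuple

# Link targets look like 'net:[4026531992]': namespace type plus an identifier.
_NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> Tuple[str, int]:
    """Split a link target such as 'net:[4026531992]' into (type, identifier)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def namespaces_of(pid: str = "self") -> Dict[str, int]:
    """Map each entry in /proc/<pid>/ns to its identifier; {} if unreadable."""
    ns_dir = f"/proc/{pid}/ns"
    result: Dict[str, int] = {}
    try:
        entries = os.listdir(ns_dir)
    except OSError:
        return result
    for entry in entries:
        try:
            _, identifier = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # entry vanished, is not readable, or has an unknown format
        result[entry] = identifier
    return result


def shares_namespace(pid_a: str, pid_b: str, ns_type: str) -> bool:
    """True if both processes are in the same namespace of the given type."""
    id_a = namespaces_of(pid_a).get(ns_type)
    id_b = namespaces_of(pid_b).get(ns_type)
    return id_a is not None and id_a == id_b


if __name__ == "__main__":
    for name, identifier in sorted(namespaces_of().items()):
        print(f"{name}: {identifier}")
```

Two processes in the same container report the same identifiers, whereas a process in a different container reports different values for, say, `net` and `pid`. Reading the links of another user's process typically requires root privileges.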

From version 3.8 on, Linux had all the tools needed to run containers in a meaningful way. However, LXC never fully recovered from poor marketing. Shortly before it became interesting for corporate users, another tool had entered the stage and conquered the hearts of administrators and hipsters alike: Docker [5].

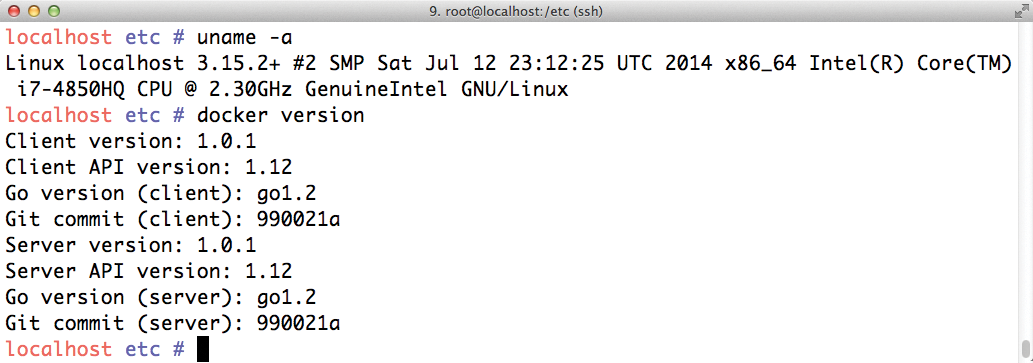

Docker

Docker (Figure 5) stands among the ranks of established solutions because it has already been working the market for several years, and it has built up its own microcosm of third-party software vendors. Thanks to Google's Kubernetes, for example, Docker can now also run in a cluster. The single Docker container on a single host is no longer important; instead, the focus has shifted to whole swarms of containers that can be rolled out to any number of hosts in an automated process.

Seen from a technical point of view, Docker's success is impressive, because it is very similar to LXC: Like LXC, Docker relies on cgroups and namespaces in the Linux kernel, and Docker containers can use a plain vanilla kernel just as easily as LXC containers. Although Docker bolsters each kernel function with many features, such as version management for containers, these features are not crucial when deciding between Docker and LXC. The developers behind Docker, however, did not just get the technology right; they also consistently pushed forward with targeted marketing from the outset.

Docker seems to offer administrators a plethora of new options. For example, PaaS (Platform as a Service) is easy with Docker: A new container that houses the desired application can be created in the shortest possible time, and this is precisely the capability that Docker consistently and successfully promotes.

Dark Clouds

In the meantime, the hype surrounding Docker seems to be ebbing slightly, given the critical opinions being voiced about Docker of late. CoreOS recently departed from Docker and is now developing its own container format named Rocket.

The way in which Docker handles containers is not universally lauded. For example, admins criticize the substantial security risk they take in their daily routines with Docker containers. The principle behind the development of many Docker containers is that "it works for me," but that is not exactly a quality metric. For example, something that works for a developer in a container on their laptop will probably also work on a server, but any administrator hosting that container could be taking an incalculable risk – a fact that is often ignored. The CoreOS developers cited a similar view as their motivation for migrating from Docker to their own format.

Conclusions

When you compare the established container solutions for Linux, the future belongs to cgroups and namespaces. Both tools are an integral part of the Linux kernel. Popular solutions such as LXC and Docker already make intensive use of them, and other solutions that previously followed their own approaches (e.g., Virtuozzo) are preparing to migrate to the kernel functions or have completed a migration in part.

Linux-VServer is a special case among the candidates introduced in this article; the project made a conscious decision to continue with its own special approach. For the developers, this is a burden for the future and a factor of uncertainty for administrators; the solution can only hope to survive if someone can be found to keep providing the required kernel patches in the future.

In comparison, it is unlikely that cgroups and namespaces, and along with them LXC and Docker, will simply disappear. Too many corporations have invested time and money in developing the required interfaces, with no sign of an alternative. LXC and Docker are the Linux containers of the present day and are not alone in their dependence on cgroups and namespaces.

Linux has thus officially and finally acquired the functionality that containers (zones) in Solaris or jails in FreeBSD have had for years – a standardized interface right at the heart of the operating system that third-party vendors can use as needed.