New container solutions for Linux

Young and Wild

Ever since Docker started dominating daily conversations, more than a few observers have predicted that virtualization with KVM or Xen would suffer declines. Only time will tell whether these prophecies prove true, but containers certainly have a lot going for them. In a direct comparison with full-fledged virtualizers, containers have the advantage of allowing a higher density of virtual environments on the virtualization host.

As commercial interest in containers grows, so does the number of solutions available on the market. While LXC and Docker have been vying for users' attention, VMware has introduced its own container approach (Photon OS), as has CoreOS, which has departed from Docker. Meanwhile, Canonical has launched a third product, LXD.

For administrators, it is difficult to tell at first glance which of these solutions is the correct choice for a given application. In this article, I provide an overview of the latest container approaches and clarify which is the best fit for different application scenarios.

The King of the Hill: Docker

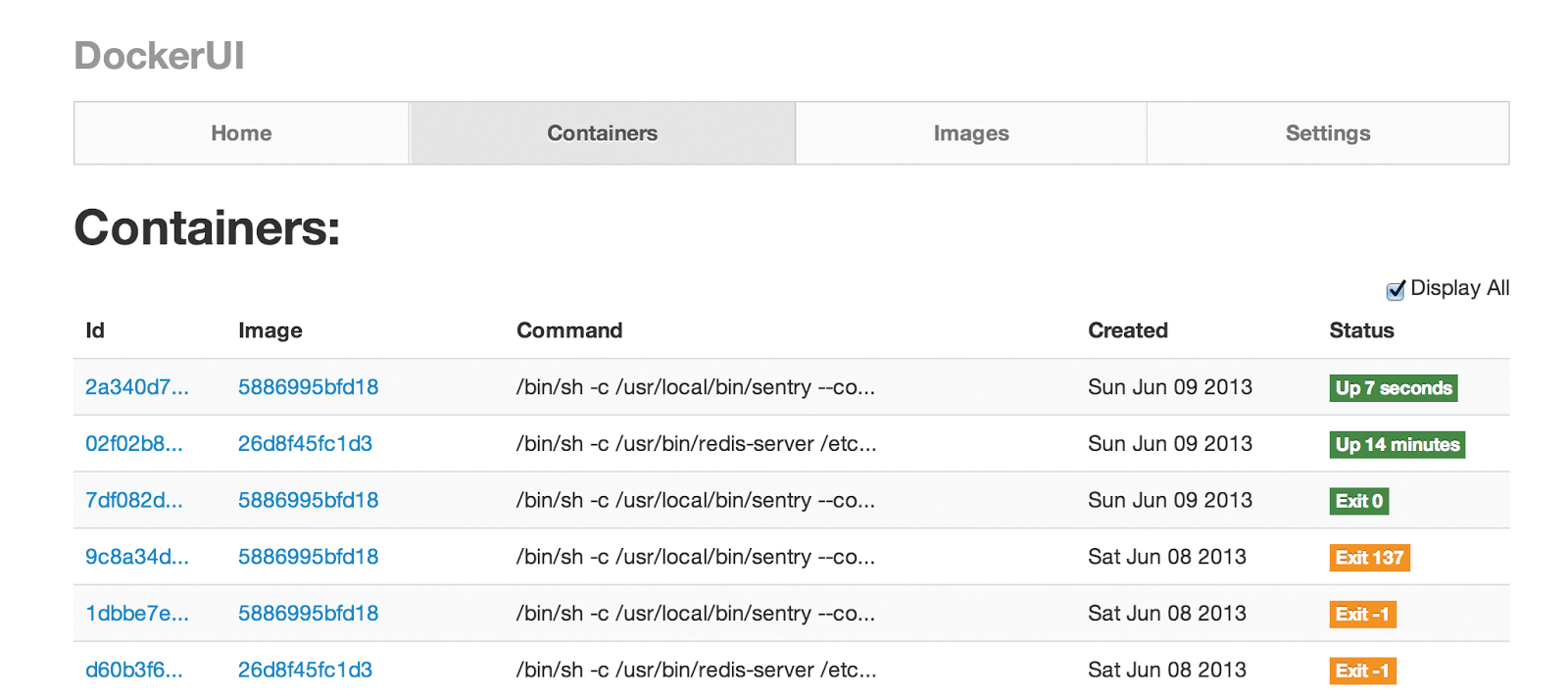

I still rank Docker [1] (Figure 1) as "young and wild," even though it is an established solution, because no other container solution has attracted so much attention, seen such massive growth in terms of features, and impressed the critics.

From a technical standpoint, one or two details are worthy of note in the case of Docker. First, Docker now supports container operations with its own library, libcontainer. This was not part of the solution right from the start; up until version 0.9, Docker drew directly on LXC to start and manage containers. However, LXC lacked several features, so Docker decided to create its own container platform. The ambition of the libcontainer project is to establish a universal container format for Linux that other solutions can follow.

The second important point in Docker's case is that it strives to achieve exclusive mastery over the containers. Each host runs a separate Docker service, and its child processes are the containers. If you want to launch a Docker container, you issue a matching command at the command line (CLI), and the client talks to the Docker daemon on the host in question. The Docker daemon then starts a container and makes sure it runs as desired; it is also responsible for deleting the container when told to do so by the administrator at the end of the day.
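The client/daemon split described here can be sketched as the sequence of HTTP requests a client would send to the daemon over its local socket. This is a minimal sketch, not Docker's own client code: the paths follow the Docker Engine API as I understand it, the socket path is the common default, and the helper name `lifecycle_requests` as well as the image and container names are hypothetical. Nothing is actually sent.

```python
import json

# Assumption: the usual default Unix socket of a local Docker daemon.
DOCKER_SOCKET = "/var/run/docker.sock"


def lifecycle_requests(image, name):
    """Return the (method, path, body) steps for one container's life cycle.

    Hypothetical helper: it only describes the requests, it does not send them.
    """
    create_body = json.dumps({"Image": image})
    return [
        ("POST", f"/containers/create?name={name}", create_body),  # create
        ("POST", f"/containers/{name}/start", None),               # start
        ("POST", f"/containers/{name}/stop", None),                # stop
        ("DELETE", f"/containers/{name}", None),                   # delete
    ]


if __name__ == "__main__":
    # Illustrative image and container names.
    for method, path, body in lifecycle_requests("nginx", "web1"):
        print(method, path, body or "")
```

Every step goes through the daemon, which is why the daemon ends up owning the whole life cycle of the container.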

Docker fans consider this comprehensive life cycle management to be key. Once the Docker binaries are installed on the host, you can get started, and because a large number of Docker containers are available on the Internet, it doesn't take long to set up your first working container.

Docker is therefore now mainly used in the style of platform as a service (PaaS), wherein prefabricated containers with preinstalled software are deployed (i.e., the software developer offers an appropriate container that the user downloads and starts). The individual Docker components are so tightly meshed that it is more or less impossible for external solutions to intervene meaningfully in the process. In the search for reasons to migrate to Docker alternatives, this is the one point that really stood out, and it provided a justification for the first candidate: Rocket.

CoreOS and Rocket

That CoreOS would enter the ranks of container providers seemed virtually impossible just a year ago. It contradicts how CoreOS sees itself: as a tool for organizing container operations en masse. By design, a container is tied to the host on which the user started it.

This scenario basically ignores the cloud as a factor: Tools that support conveniently starting multiple containers at the same time and then distributing them to multiple hosts were not originally envisaged in the Linux kernel. To this day, even Docker itself does not contain a function that supports something like this.

The CoreOS project is an example of retrofitted clustering capability for containers. You can create a cloud that offers the customer virtually unlimited capacity that is available on demand. CoreOS is a minimal operating system that, out of the box, cannot do much more than start containers and then manage them in swarms.

Each CoreOS host has sufficient intelligence to know on which host its container is running. If you want to launch a swarm of containers (e.g., to fire up a complete infrastructure as a service (IaaS) webserver farm at the push of a button), CoreOS steps in with the tools that let you do so. These tools (e.g., etcd, fleetd, and many others) then set up the containers in the cloud.

From the outset, CoreOS relied on Docker as its default container format. It was thus possible to say that a container running on host 1 would very likely run on host n. Because Docker supplied the necessary userland integration, the solution was the ideal tool for CoreOS or Kubernetes.

Much of the work done by CoreOS was fed back to Docker, with Brandon Philips, one of the founders of CoreOS, being a top committer to the project. With the increasing use of Docker as a PaaS tool, its focus shifted, however, and cracks started to appear in the relations between CoreOS and Docker.

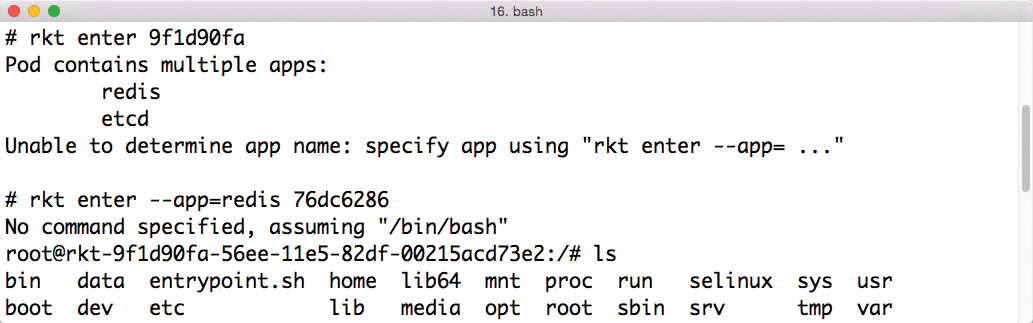

The battle culminated in an announcement by CoreOS that the project would be looking to develop its own container format, and this format already had a name: Rocket [2] (Figure 2). CoreOS was not sparing with its criticism of the people at Docker: The original intent of being a container standard for Linux had been left behind, they said. In the meantime, the container manifesto, once a technical guideline for Docker, had completely disappeared, and, said the CoreOS developers, talk had turned more or less to a Docker platform rather than simple containers.

According to the CoreOS developers, Docker no longer wants to be a container format, but more of a universal platform for distributing and starting operating system images, including matching software for PaaS purposes. This is viewed as a problem in many respects. The CoreOS developers particularly dislike that a typical Docker container could easily be a Trojan running with root privileges. Moreover, Docker has moved so far away from its original goals that it has become virtually unusable in CoreOS.

Rocket to the Rescue

Rocket is the attempt by CoreOS to create just a container format that does not aspire to be much more. The intent is for Rocket primarily to offer the functionality that exists in simple Docker setups. This includes, on the one hand, the format in which the containers are distributed; that is, it answers the questions as to whether an image is available and the kind of filesystem it uses. On the other hand, a container solution also needs matching programs that support container operations. After all, administrators need to be able to type something at the command line to launch a container.

At the same time, the Rocket format sets out to solve some of the major problems with Docker. In the CoreOS developers' opinion, this includes the topic of security, which they seek to manage through more granular isolation and through the image format itself. The idea is for the community to specify and maintain the format, instead of being guided by the commercial interests of a single company.

From a technology point of view, the differences between the early Rocket versions and the current Docker are already very much apparent. Rocket hosts no longer run a daemon, which the administrator talks to via the CLI and which then finally starts the containers. Instead, the rkt program starts the containers directly. The practical thing about this is that Rocket containers can thus also interact well with other control mechanisms, such as systemd-nspawn, which allows Rocket to offer a more universal interface than the older and more established Docker.
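The practical effect of the daemonless model can be illustrated with an ordinary process launch. The sketch below is an analogy, not rkt itself: a stand-in workload (a Python one-liner) is started directly and reports its parent PID, which is the PID of the caller. The helper name `launch_directly` is hypothetical, and the sketch assumes a Linux host.

```python
import os
import subprocess
import sys


def launch_directly(argv):
    """Run argv as a direct child and return (parent PID seen by child, exit code)."""
    proc = subprocess.run(argv, capture_output=True, text=True, check=True)
    return int(proc.stdout.strip()), proc.returncode


if __name__ == "__main__":
    # Stand-in workload: prints the PID of whichever process started it.
    child = [sys.executable, "-c", "import os; print(os.getppid())"]
    parent_pid, code = launch_directly(child)
    print("child of caller:", parent_pid == os.getpid(), "exit code:", code)
```

Because the workload is a child of whoever invoked it, an init system or other supervisor that starts the launcher can track and restart the workload itself, without having to ask an intermediate daemon.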

At the end of the day, Rocket is an alternative to the Docker tools that pursues goals similar to those originally sought by Docker. Under the hood, Rocket builds on the same kernel functionality that Docker uses. Rocket can already be used in the current CoreOS versions, but you will search in vain for large numbers of publicly available container images. You can't expect Rocket images to start appearing until the Rocket format becomes more widespread.

The project itself seems to have understood that a cold migration from Docker to Rocket in CoreOS does not make much sense right now. Thus far, the developers are sticking to the statement that Docker will continue to be supported in CoreOS in the future; plans to remove Docker support from CoreOS completely do not currently exist. For Rocket, this means it is only usefully deployable in the framework of orchestration solutions like CoreOS.

LXD

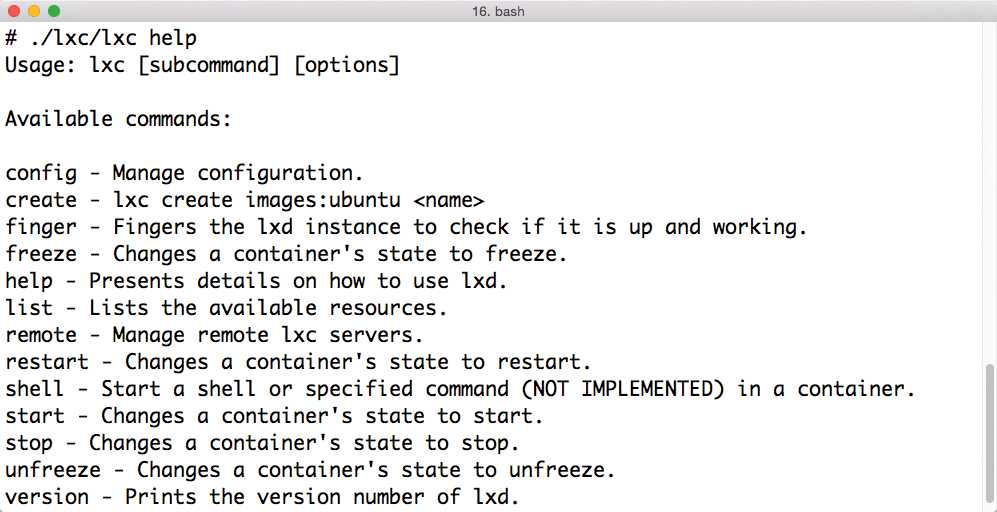

The next candidate in the overview is LXD [3] (Figure 3). Again, I need to detour into the cloud to explain the motivation behind the solution. Much like Docker, OpenStack cloud software also is experiencing a great deal of hype, so what would make more sense than to combine the two? At first glance, an OpenStack cloud with Docker as the virtualizer running in it seems to be the ultimate combination.

However, if you start taking a closer look at Docker and OpenStack, you will quickly notice that the two solutions are not that easy to combine. For example, Docker comes with its own image management and relies on each host having a copy of the container image for which it is to launch a container. In contrast, OpenStack has its own image service, Glance.

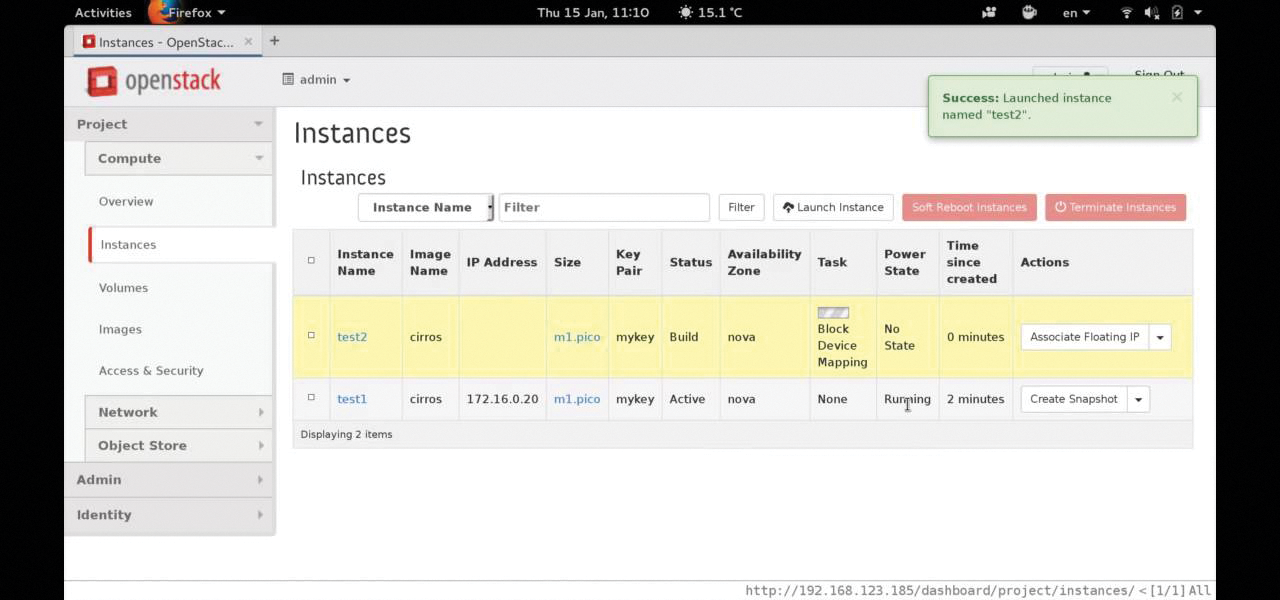

Similar problems crop up when it comes to networking and storage. The dilemma is comparable to the Docker problems that the CoreOS developers saw in their own project: Docker is much more than a container standard; it is a separate platform for container operations. Teaming up with OpenStack thus does not work very well, either (Figure 4).

A driver that teaches OpenStack Nova (the OpenStack virtualization manager) how to handle Docker does exist. It was once an integral part of Nova, but some releases ago, the Nova Docker driver was removed from the platform. The official message at the time was that the developers wanted to improve the driver without having to take the OpenStack release cycles into consideration and then put it back into OpenStack. However, it remains unclear if and when this will happen.

Canonical must be frustrated about this: After all, the corporation invested a large amount of money in becoming the vanguard of the FLOSS world in terms of OpenStack. Most OpenStack clouds currently run on Ubuntu, but the containers are still missing. In contrast, KVM, QEMU, and Xen can be operated more or less painlessly as hypervisors in OpenStack installations. To this day, no approach to container virtualization with Docker is suitable for production operations; the tinkering that is necessary today hardly qualifies.

This prompted Canonical to build a new container framework specially designed for operations in OpenStack. The company dubbed the project LXD. The similarity of this name to LXC is no coincidence: LXD sees itself as a supplement to LXC that handles the distributed operation of LXC containers within an OpenStack installation.

LXD to the Rescue

Under the hood, LXD uses LXC, which makes it clear that LXD relies on the typical Linux container interfaces: cgroups and namespaces. In the media, Canonical's announcement hit like a bombshell: Canonical had been more or less committed to Docker, after all. Did the announcement that Canonical was building its own container solution mean a departure from Docker?

Canonical hurried to deny such allegations. Canonical stated that it was still convinced that Docker was the best container solution for various applications, but in the OpenStack field, they thought that LXD offered a faster track. In the meantime, Canonical underpinned the announcement of LXD at the OpenStack developer meeting at the end of 2014 with some usable code, and LXD can now be operated on Ubuntu 14.04.

Canonical delivers OpenStack integration into the bargain, with a hypervisor driver for Nova in the form of nova-compute-lxd, which can manage LXD containers on OpenStack hosts. This is made possible by the API component in LXD: In typical OpenStack style, LXD extends LXC by adding a RESTful API, which can be controlled and managed from the outside using HTTP-based commands. Viewed in the light of day, LXD is thus a collection of tools, written in Go, that remotely controls LXC containers.
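To give an impression of what an HTTP-based control command might look like, the sketch below builds (but does not send) a request that asks an LXD server to start a container. Treat the details as assumptions: the path is modeled on LXD's versioned `/1.0` REST API as I understand it and may differ between releases, the host name and container name are invented, and the helper `state_change_request` is hypothetical.

```python
import json
import urllib.request


def state_change_request(base_url, container, action, timeout=30):
    """Build, without sending, a PUT request that changes a container's state."""
    payload = json.dumps({"action": action, "timeout": timeout}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/1.0/containers/{container}/state",  # assumed path
        data=payload,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    # Hypothetical host; a real server would also require TLS authentication.
    req = state_change_request("https://lxd.example.com:8443", "web1", "start")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Because the command is nothing more than an HTTP request with a JSON body, any external tool that can speak HTTP, such as a Nova driver, can issue it.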

Similarities to Rocket definitely exist: LXD also lacks a daemon that handles the control of all the containers centrally on a host. API calls instruct the matching binaries to install the containers directly on the hosts without needing a daemon. As with Rocket, this makes it easier for external solutions to dock onto LXD – and not just for OpenStack.

A contributing factor is that LXD does not try to manage the complete life cycle of a container. Although the LXD programs take care of starting and stopping, what happens to the container in the meantime is entirely the responsibility of the management solution in the background.

Whether or not Canonical really has started to rock the Docker boat remains to be seen. For example, Mirantis now has a solution that installs Docker in a way that makes it usable with OpenStack; however, not without some technical contortions. The screenshots published by Mirantis do not really lend themselves to drawing conclusions on how meaningfully Docker can be run in combination with OpenStack.

If you want containers with OpenStack, LXD is the right choice. If you do not want to integrate containers with a cloud framework like OpenStack, you will probably find it easier to go for the standard Docker and solutions such as Kubernetes or CoreOS.

Photon by VMware

VMware launching its own container solution seems a little strange at first glance. After all, VMware still generates most of its turnover with classical full virtualization. Containers do not have a reason to exist in the ESXi sphere, which is precisely why VMware's sortie into the container market makes sense on closer inspection. If customers really do want to move from full virtualization to containers, then VMware has a vested interest in earning money by providing containers, if need be.

Officially, the reasons are different. VMware has announced that Photon OS [4] (Figure 5) has been placed under a free license to ensure that an efficient system is available for operating containers in the data center. From a technical point of view, Photon by VMware is thus more than LXD or Rocket. VMware's work is more of an alternative to solutions such as Kubernetes or CoreOS: It is a minimal operating system that also runs the administrator's containers.

Great Functionality

The company from Palo Alto offers a full feature set: Although Photon OS does not use Docker itself, it does support its container format, and it can also handle containers that use the Rocket format. Of course, perfect integration with existing VMware environments is guaranteed. If you already use a VMware virtualization platform, you can seamlessly connect Photon OS instances to this management software.

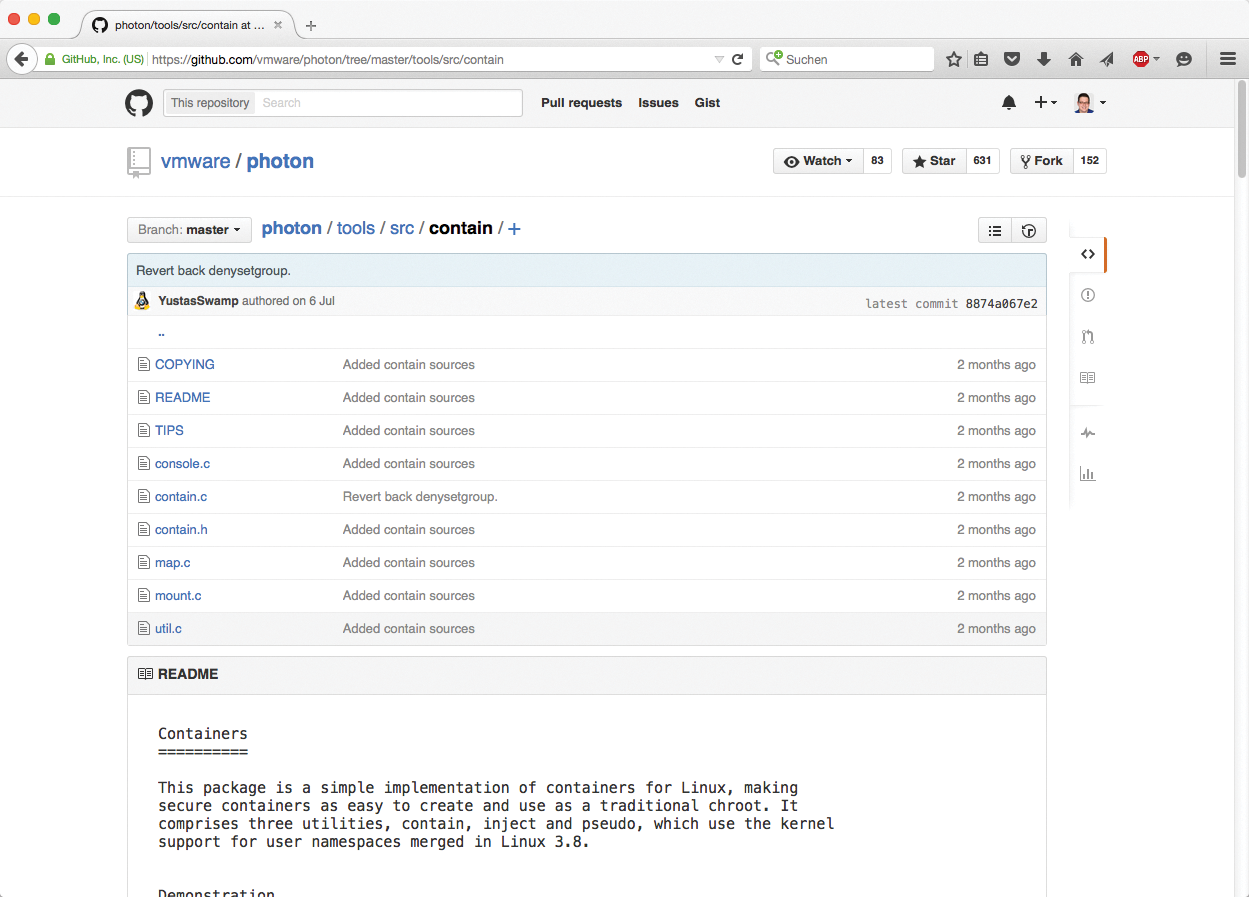

Surprisingly, VMware is developing the product openly, right from the outset. In this way, the company is seeking – according to its own statements [5] – to establish a community around the product and hopes that patches will be contributed from the outside that might fix bugs or extend the functionality. The open source code allows for some rare insights: Photon OS implements containers using its own tool, container, which is based on cgroups and namespaces.
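The two kernel interfaces named here, cgroups and namespaces, are the same ones the other candidates rely on, and any Linux process can inspect its own membership through `/proc`. The following is a minimal sketch under that assumption; the helper names are hypothetical, and it only reads kernel state rather than creating containers.

```python
import os
import re

# Namespace links look like "pid:[4026531836]".
NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(link):
    """Split a /proc/<pid>/ns symlink target into (kind, inode)."""
    match = NS_LINK.match(link)
    if match is None:
        raise ValueError(f"not a namespace link: {link!r}")
    return match.group("kind"), int(match.group("inode"))


def parse_cgroup_line(line):
    """Split a /proc/<pid>/cgroup line into (hierarchy ID, controllers, path)."""
    hierarchy, controllers, path = line.rstrip("\n").split(":", 2)
    return int(hierarchy), [c for c in controllers.split(",") if c], path


def current_namespaces(pid="self"):
    """Map each namespace entry to its inode; empty if /proc is unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    if not os.path.isdir(ns_dir):
        return result
    for entry in os.listdir(ns_dir):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # skip entries that cannot be read or parsed
        result[entry] = inode
    return result


if __name__ == "__main__":
    for name, inode in sorted(current_namespaces().items()):
        print(f"{name:20} {inode}")
```

Two processes that report the same inode for a namespace entry share that namespace; processes inside a container typically show different inodes than processes on the host.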

It is at least questionable whether the product will meet with the success that VMware hopes for within the community. Even if VMware is looking to establish a reputation for universally deployable functionality, the first point on the feature list is the previously mentioned integration with other VMware products. Integration with third-party environments looks less promising: For example, OpenStack integration has not been mentioned thus far, and no code exists for this purpose.

VMware still has an ace up its sleeve, though: Lightwave is being bundled with Photon OS and will, in the future, take care of container security management. VMware is looking to provide an option for handling various aspects, such as user management or X.509 certificates, centrally from the cloud controller. Ideally, the cloud controller should be a VMware product; however, compatibility with protocols such as LDAP or Kerberos is intended.

Compared with the other candidates, Photon OS leaves one in two minds. On the one hand, VMware's intent is clearly identifiable – opening up an option for creating a business driver well away from its own classical product portfolio. That the company is relying on open source software to do so is worthy of praise, at least if the motivation behind it is more than just bagging free patches. Tight integration with existing VMware products is undeniably visible. Whether the niche market of VMware users who are prepared to contribute to the codebase is big enough remains to be seen.

Conclusions

The market for new container solutions remains pretty confusing. However, the era of adopting a universal approach looks to be coming to an end: Docker has exhausted this market already, and in a cloud context, operating individual containers does not make sense anyway. The focus is now on container swarms.

If you need such a universal approach, you will probably look to one of the many available alternatives: Google's Kubernetes is based on Docker, and CoreOS (still) has its own Docker interface. If you do not rely on specific Docker functionality, Rocket provides a leaner container format within CoreOS, and development is not exclusively driven by the interests of a single enterprise.

When it comes to integration and cloud computing solutions, LXD in combination with OpenStack seems to be the most promising approach right now. You will not find alternatives that work well either in the cloud camp or in the container camp: CloudStack, for example, which has survived as perhaps the only genuine alternative to OpenStack, does not currently have support for Docker or for any of the other container technologies I looked at here.

With Photon OS, VMware gives the impression that it is looking to establish a position in a new market. However, Photon OS is, at least currently, so tightly meshed with the typical VMware products that independent operations with Photon OS do not seem to make much sense. VMware's solution, viewed in this light, would be competing with offerings such as CoreOS or Kubernetes.

At the end of the day, the clear-cut options for production operations with containers depend heavily on your use case, but the number of options for each use case is very limited, mostly to just one.