An introduction to Intel QuickAssist Technology

Fast Help

Big Data, IoT, and storage (cloud and enterprise) solutions are very compute-intensive. These technologies move large amounts of data in and out of storage and require secure data transmission across the network. To help this evolving market, Intel is introducing QuickAssist technology.

Intel QuickAssist Technology [1] provides security and compression acceleration capabilities to improve performance and efficiency on Intel Architecture platforms. Server, networking, big data, and storage applications use Intel QuickAssist to offload compute-intensive operations, such as:

- Symmetric cryptography functions, including cipher operations and authentication operations

- Public key functions, including RSA, Diffie-Hellman, and elliptic curve cryptography

- Compression and decompression functions, including DEFLATE

QuickAssist enables users to meet the demands of ever-increasing amounts of data, especially data with the need for encryption and compression. QuickAssist helps users ensure applications are fast, secure, and available.

What is QuickAssist?

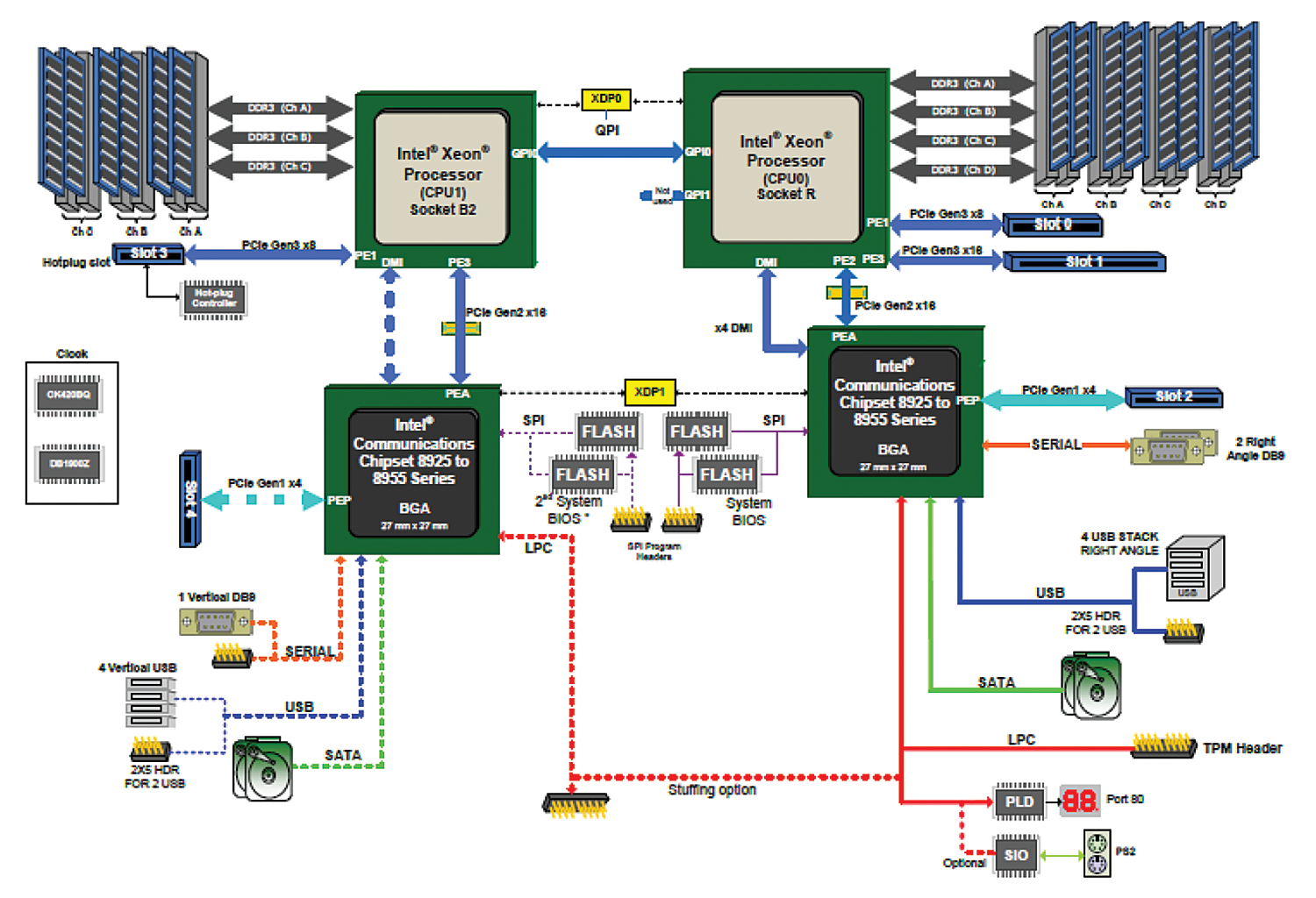

Intel QuickAssist Technology runs on Intel architecture. At a high level, the platform pairs one or more Intel architecture processors with the Intel Communications Chipset 8925 to 8955 Series.

QuickAssist offloads cryptography and compression tasks from the CPU to the 89xx communications chipset. Functionally, the Intel Communications Chipset 8925 to 8955 Series is most easily described as a Platform Controller Hub (PCH) that combines standard PC interfaces (such as PCI Express, SATA, and USB) with the Intel QuickAssist Technology accelerator and I/O interfaces.

Figure 1 shows an Intel communications platform that features the Intel Xeon Processor E5-2658 and E5-2448L with the Intel Communications Chipset 89xx Development Kit.

The Intel QuickAssist accelerator on the 89XX chip acts as a companion chip to the CPU. Its purpose is to relieve the CPU from compute-intensive operations such as cryptography and compression operations. The acceleration capabilities provide security and compression services that improve performance and efficiency in storage, networking, big data, and security applications.

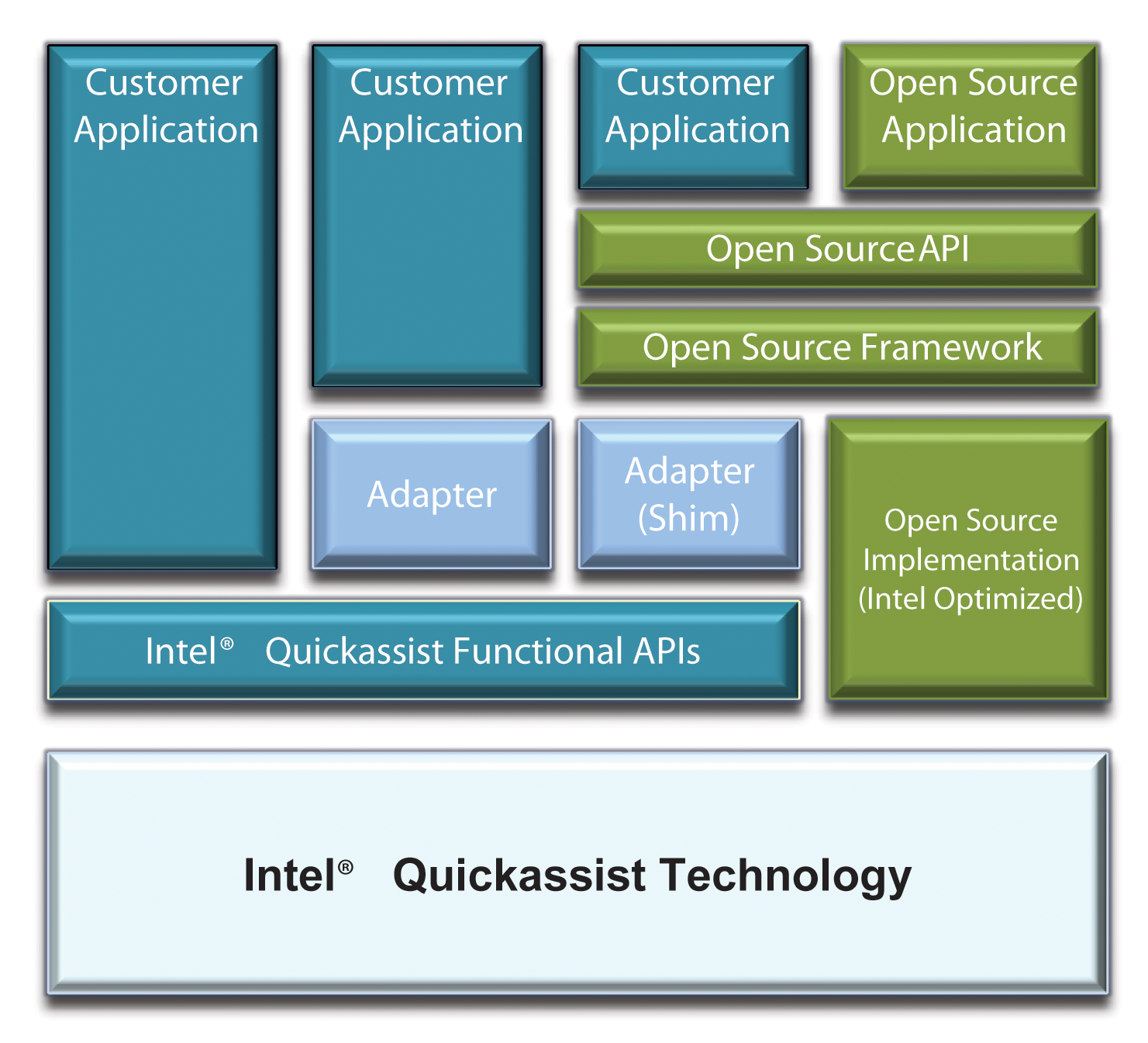

Intel QuickAssist in the Software Stack

Application developers can access QuickAssist features through the Intel QuickAssist API. The API enables easy interfacing between the customer application and the QuickAssist acceleration driver.

It is also possible to use QuickAssist with open source software frameworks via a SHIM layer. The SHIM layer acts as an adapter between the Intel QuickAssist API and the interface expected by industry standard frameworks. Figure 2 shows how you can integrate Intel QuickAssist Technology at multiple levels.

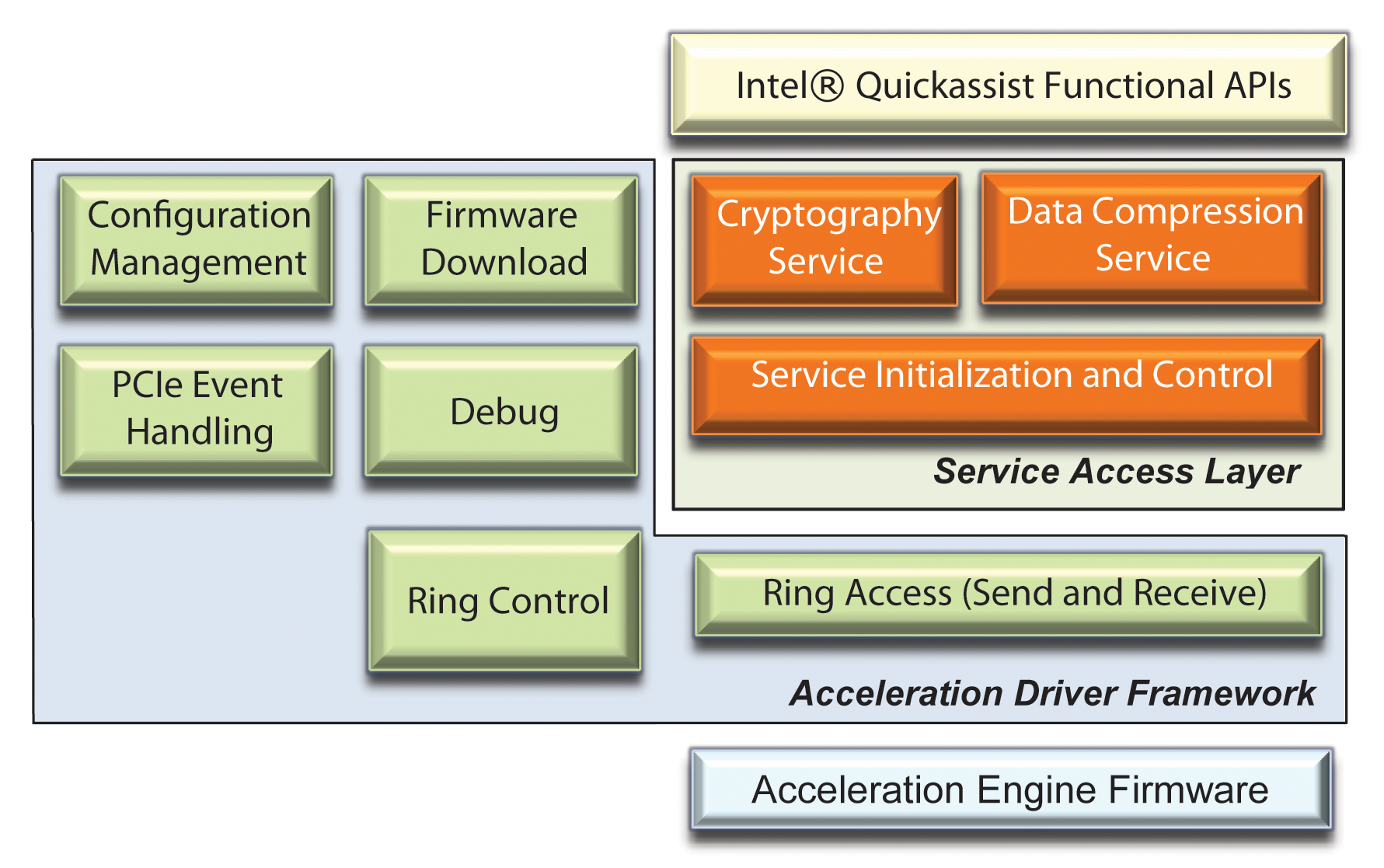

The QuickAssist API accesses the Intel QuickAssist driver, which is responsible for exposing the acceleration services to the application software. The driver consists of two layers:

- The Acceleration Driver Framework (ADF)

- The Service Access Layer (SAL)

The Acceleration Driver Framework has multiple roles, including:

- Downloading the firmware that will control the hardware performing the acceleration operations. The firmware will convert request messages created by SAL into commands that control the acceleration engines. When the acceleration operation completes, it will send a response message back.

- Creating the Transmit and Receive rings. The Acceleration Driver Framework communicates with the firmware through host DRAM implemented rings. The ring system is used to pass request or response messages between the SAL and the firmware.

- Listening and responding to PCIe events.

- Adding debugging capability via the `proc` filesystem. The configuration and the ring traffic activity can be viewed through an entry in the `proc` filesystem. The following console command shows the configuration of the device: `cat /proc/icp_dh895xcc_dev0/cfg_debug`

- Handling the user configuration defined in the configuration file.
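The transmit and receive rings described above can be pictured as a fixed-size circular queue in host memory. The following is a minimal sketch of that idea only, not the driver's implementation: the message layout, the slot count, and every name in it (`ring_t`, `ring_put`, `ring_get`, and so on) are assumptions made for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RING_SLOTS 8u /* hypothetical size; must be a power of two */

/* Hypothetical fixed-size message; real QuickAssist messages differ. */
typedef struct {
    uint32_t opcode;
    uint64_t payload_addr;
} ring_msg_t;

/* Single-producer, single-consumer ring held in host memory. */
typedef struct {
    ring_msg_t slots[RING_SLOTS];
    uint32_t head; /* count of messages consumed so far */
    uint32_t tail; /* count of messages produced so far */
} ring_t;

static void ring_init(ring_t *r) { r->head = 0; r->tail = 0; }

static bool ring_is_empty(const ring_t *r) { return r->head == r->tail; }

static bool ring_is_full(const ring_t *r)
{
    /* Unsigned subtraction stays correct when the counters wrap. */
    return (r->tail - r->head) == RING_SLOTS;
}

/* Producer side: returns false when the ring has no free slot. */
static bool ring_put(ring_t *r, ring_msg_t msg)
{
    assert(r != NULL);
    if (ring_is_full(r))
        return false;
    r->slots[r->tail % RING_SLOTS] = msg;
    r->tail++;
    return true;
}

/* Consumer side: returns false when there is nothing to read. */
static bool ring_get(ring_t *r, ring_msg_t *out)
{
    assert(r != NULL && out != NULL);
    if (ring_is_empty(r))
        return false;
    *out = r->slots[r->head % RING_SLOTS];
    r->head++;
    return true;
}
```

In this picture, the SAL would be the producer on a transmit ring and the consumer on a receive ring, with the firmware playing the opposite roles.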

The Service Access Layer (SAL) in turn creates and starts the acceleration services. Two services are currently available:

- The cryptographic service

- The compression service

Once a service is enabled, the SAL will enable the service-associated APIs. The SAL will also create the messages that will be put on the transmit rings. When a transmit message is processed, the firmware will put a response message on the receive rings. When a response message is present on the receive ring, SAL will decode the response message in a callback function and update the API output parameters with statistics and processed payload. Figure 3 shows the QuickAssist driver architecture.

Intel QuickAssist API

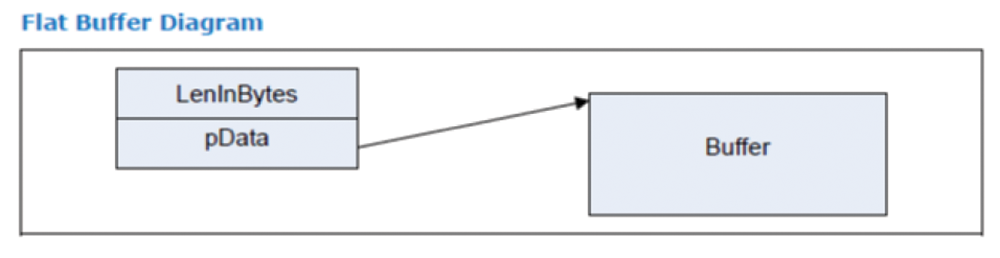

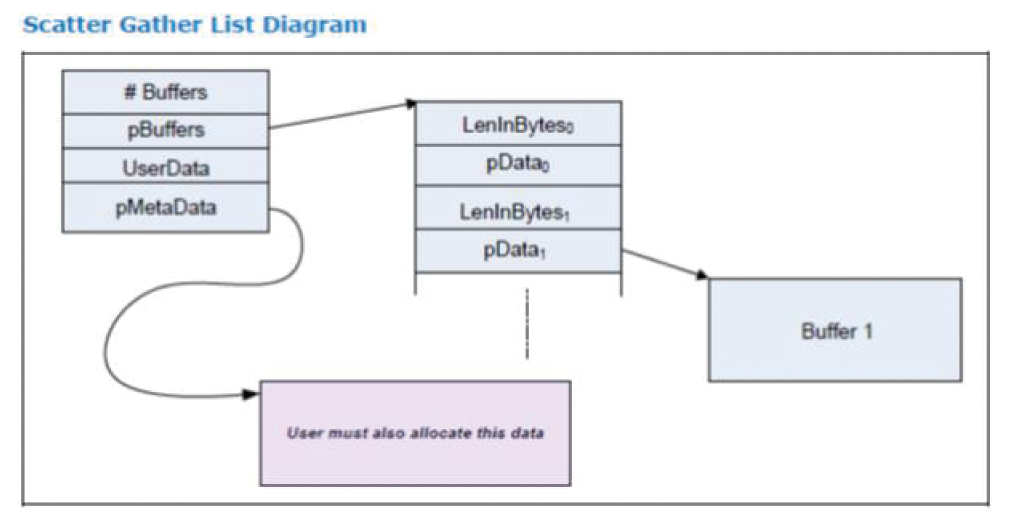

The Intel QuickAssist API is the top-level API for QuickAssist technology. The API can operate in both kernel space and user space, and it contains structures, data types, and definitions that are common across the interface. The application must format the payload data as either a flat buffer or a scatter-gather buffer list. The two data structures are described in Table 1:

Table 1: QuickAssist API Buffer Format

| Buffer Option | Example | Diagram |
|---|---|---|
| Flat buffer format | `typedef struct _CpaFlatBuffer { Cpa32U dataLenInBytes; Cpa8U *pData; } CpaFlatBuffer;` | |
| Scatter-gather buffer list format | `typedef struct _CpaBufferList { Cpa32U numBuffers; CpaFlatBuffer *pBuffers; void *pUserData; void *pPrivateMetaData; } CpaBufferList;` | |
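As an illustration of how the two buffer formats fit together, the sketch below describes two caller-owned memory fragments as one scatter-gather list. It redeclares the structures locally so that it compiles without the QuickAssist headers; the `Cpa32U` and `Cpa8U` stand-ins and the two helper functions are assumptions for this example and are not part of the API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Local stand-ins so the sketch compiles without the QuickAssist headers;
 * a real application would include the API headers instead. */
typedef uint32_t Cpa32U;
typedef uint8_t  Cpa8U;

typedef struct _CpaFlatBuffer {
    Cpa32U dataLenInBytes;
    Cpa8U *pData;
} CpaFlatBuffer;

typedef struct _CpaBufferList {
    Cpa32U numBuffers;
    CpaFlatBuffer *pBuffers;
    void *pUserData;
    void *pPrivateMetaData;
} CpaBufferList;

/* Hypothetical helper: describe `count` caller-owned fragments as one list.
 * Nothing is allocated here; the caller owns every buffer. */
static void buffer_list_init(CpaBufferList *list, CpaFlatBuffer *flats,
                             Cpa32U count)
{
    assert(list != NULL && flats != NULL);
    list->numBuffers = count;
    list->pBuffers = flats;
    list->pUserData = NULL;
    list->pPrivateMetaData = NULL; /* left unset in this sketch */
}

/* Hypothetical helper: total payload size across all fragments. */
static uint64_t buffer_list_total_bytes(const CpaBufferList *list)
{
    assert(list != NULL);
    uint64_t total = 0;
    for (Cpa32U i = 0; i < list->numBuffers; i++)
        total += list->pBuffers[i].dataLenInBytes;
    return total;
}
```

A flat buffer is simply the one-fragment case; the list form lets a payload that is spread across non-contiguous memory be submitted as a single request.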

Execution Flow

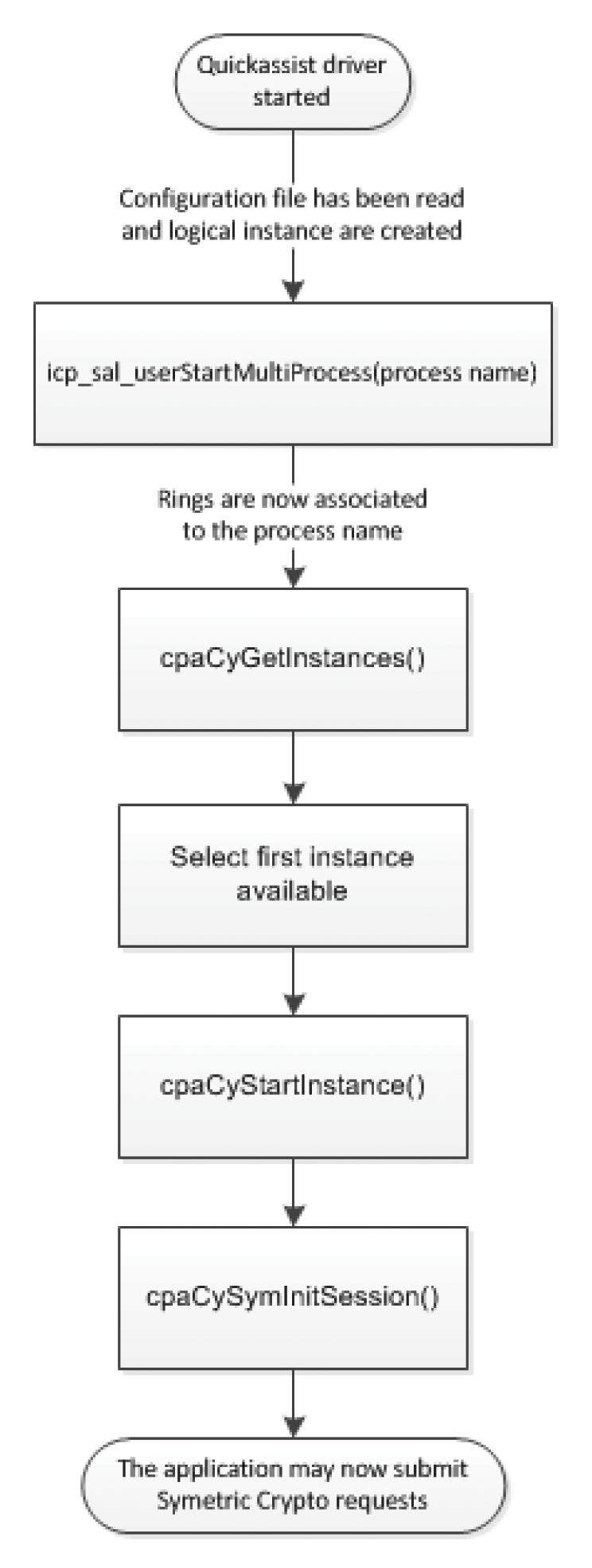

In the case of a userspace application, the accelerator rings must be exposed to the process. The SAL must be configured to expose the logical instance to the userspace process. The instances are declared in the QuickAssist driver configuration file. The QuickAssist driver enables the userspace application to register with QuickAssist via icp_sal_userStartMultiProcess(). Similarly, when the process exits, it needs to call icp_sal_userStop().

Figure 4 shows an example sequence in which the user has configured two compression logical instances for a process called SSL. The userspace process can then access these logical instances by calling cpaDcGetInstances(). The application can then initiate a session with these logical instances and perform, in this example, a compression or decompression operation.

The driver does not allocate any memory. The application is entirely responsible for allocating the necessary resources for the Intel QuickAssist APIs to execute successfully. The application must provide instance allocation, session allocation, and payload buffers.

QuickAssist Compression Service

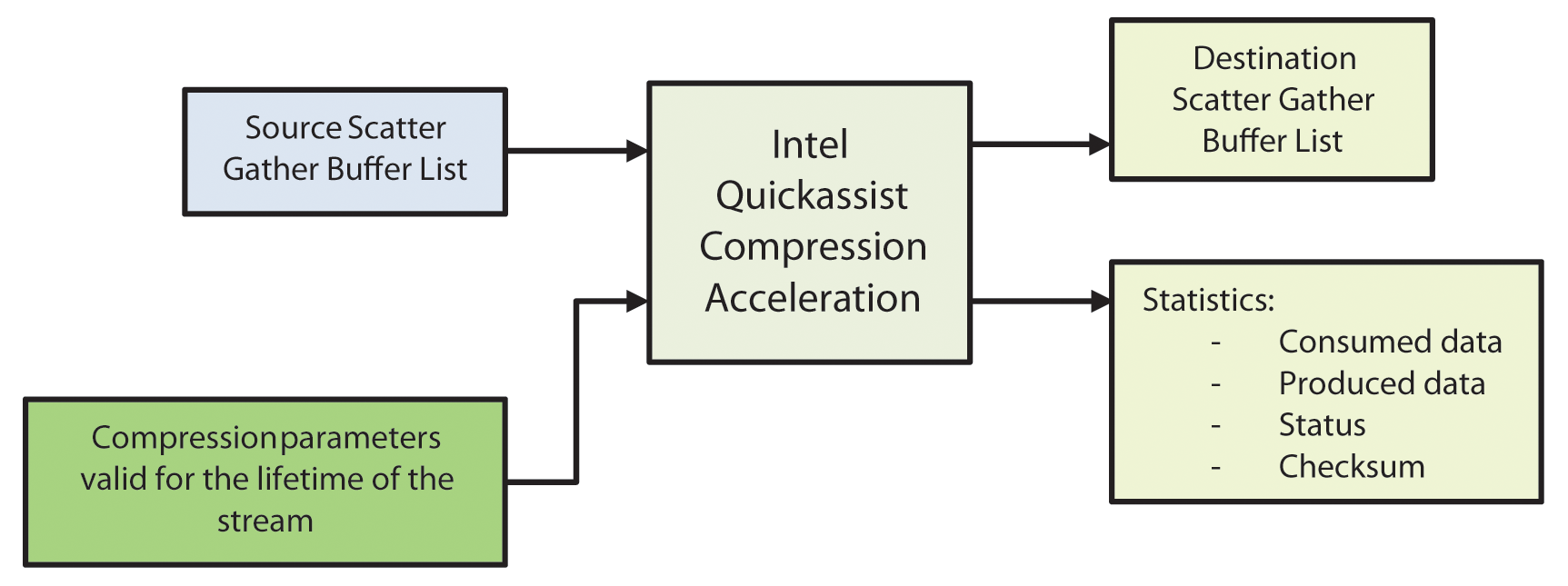

Bringing down storage cost is the key to any data storage solution. Support for stateful dynamic compression in the Intel QuickAssist compression service improves the compression ratio and provides checksum information that is useful for data integrity checking.

QuickAssist also offers a Compress and a Decompress API. These APIs take both the source and destination data as Scatter-Gather List buffers. After execution, the APIs return the statistics of the request.

The Intel QuickAssist compression service (Figure 5) offers two modes of operation:

- Stateless operation mode: This mode can be defined as compress and forget mode. In this mode, the current request has no dependency on the previous request.

- Stateful operation mode: The current request has a dependency on a previous buffer of the same stream. When a request completes, a portion of the source data is saved in a history buffer. The history buffer is then restored on the following request. This mode offers a better compression ratio than the Stateless mode for compression operations. For decompression, the stateful mode enables the application to decompress partial fragments of data.

The checksum output is computed over the lifetime of the stream. The checksum is stored in a data structure that is saved and restored between requests, just like the history buffer.
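To make the idea of a checksum carried across requests concrete, here is a minimal sketch in which a small state structure is updated by each request. It uses Adler-32 (the checksum defined for zlib streams) purely as an assumption for illustration; the text does not say which checksum the service computes, and `stream_state_t`, `stream_init`, and `stream_update` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ADLER_MOD 65521u

/* Hypothetical per-stream state that survives between requests. */
typedef struct {
    uint32_t checksum; /* running Adler-32; a new stream starts at 1 */
} stream_state_t;

static void stream_init(stream_state_t *s)
{
    assert(s != NULL);
    s->checksum = 1u;
}

/* Fold one request's source bytes into the stream checksum. */
static void stream_update(stream_state_t *s, const uint8_t *data, size_t len)
{
    assert(s != NULL && (data != NULL || len == 0));
    uint32_t a = s->checksum & 0xFFFFu;         /* restore low half */
    uint32_t b = (s->checksum >> 16) & 0xFFFFu; /* restore high half */
    for (size_t i = 0; i < len; i++) {
        a = (a + data[i]) % ADLER_MOD;
        b = (b + a) % ADLER_MOD;
    }
    s->checksum = (b << 16) | a;                /* save for next request */
}
```

Because the state is restored at the start of each update and saved at the end, feeding a stream in several requests yields the same checksum as feeding it in one.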

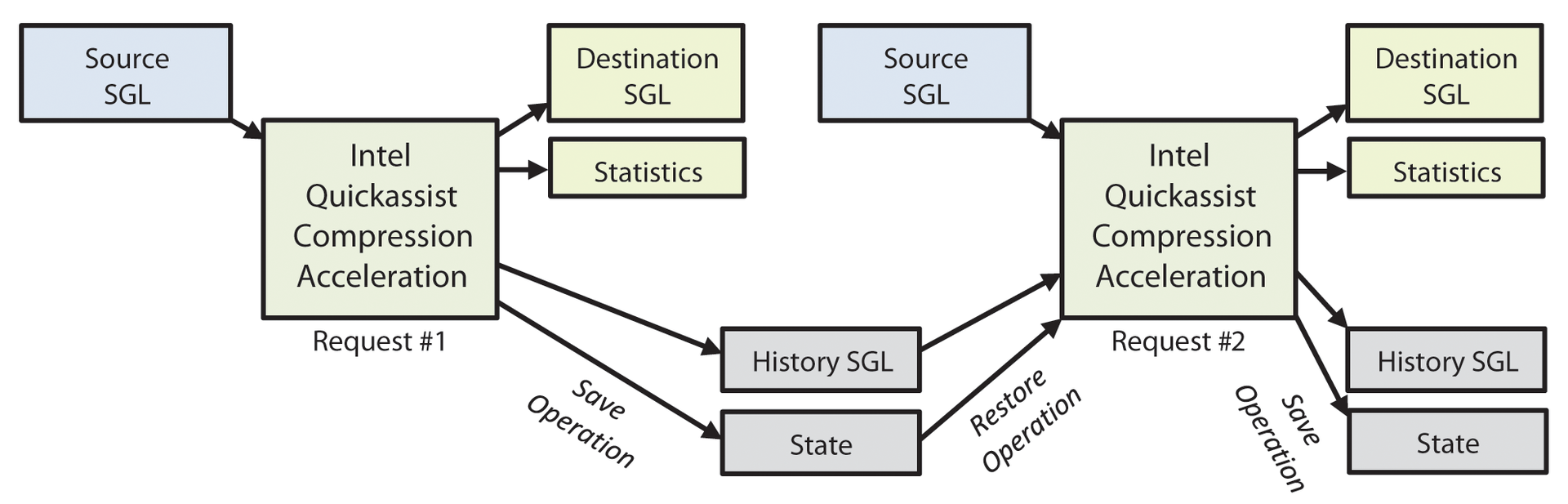

The diagram in Figure 6 shows the dependency between requests during stateful operation mode. With the history updated and transferred from one request to another, matching patterns are searched across the source and the history buffers, which contributes to improving the compression ratio.
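The save step of stateful mode can be sketched as a sliding window that always holds the most recent bytes of the stream. This is an illustrative model under stated assumptions, not the accelerator's actual mechanism: the window is shrunk to 16 bytes for readability, and `history_t` and `history_save` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define HISTORY_MAX 16u /* tiny for illustration only */

/* Hypothetical history window carried from one request to the next. */
typedef struct {
    uint8_t bytes[HISTORY_MAX];
    size_t  len;
} history_t;

/* Save step: after a request over `src`, keep the most recent
 * HISTORY_MAX bytes of the stream (old history followed by src). */
static void history_save(history_t *h, const uint8_t *src, size_t src_len)
{
    assert(h != NULL && (src != NULL || src_len == 0));
    if (src_len >= HISTORY_MAX) {
        /* The new data alone fills the window. */
        memcpy(h->bytes, src + (src_len - HISTORY_MAX), HISTORY_MAX);
        h->len = HISTORY_MAX;
        return;
    }
    /* Keep as much of the old tail as still fits in front of src. */
    size_t keep = h->len;
    if (keep + src_len > HISTORY_MAX)
        keep = HISTORY_MAX - src_len;
    memmove(h->bytes, h->bytes + (h->len - keep), keep);
    memcpy(h->bytes + keep, src, src_len);
    h->len = keep + src_len;
}
```

On the next request, a compressor would search for matches in this window as well as in the new source data, which is where the ratio improvement comes from.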

Batch and Pack

The save and restore operations associated with stateful mode affect bandwidth and I/O performance. The history can be up to 48 KB for some compression levels. Saving and restoring the history for each request means transferring 96 KB (2 x 48 KB) of data to and from DRAM per request. This overhead consumes host interface bandwidth and reduces the throughput of the compression/decompression engine because of the time spent saving and restoring state.
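The cost can be put in numbers with a small calculation. The sketch below simply restates the arithmetic from the paragraph above; the function names are hypothetical, and the request size is a parameter chosen by the caller.

```c
#include <assert.h>
#include <stdint.h>

#define KB 1024u

/* Bytes moved to and from DRAM for state handling when every request
 * saves and then restores a history of `history_bytes`. */
static uint64_t history_traffic_bytes(uint32_t history_bytes,
                                      uint32_t requests)
{
    return 2ull * history_bytes * requests; /* one save + one restore each */
}

/* State traffic per request as a whole multiple of the request payload. */
static uint64_t overhead_factor(uint32_t history_bytes,
                                uint32_t payload_bytes)
{
    assert(payload_bytes > 0);
    return history_traffic_bytes(history_bytes, 1) / payload_bytes;
}
```

With a 48 KB history, `history_traffic_bytes(48 * KB, 1)` is 96 KB, so for a request carrying 8 KB of payload the state traffic is 12 times the payload itself.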

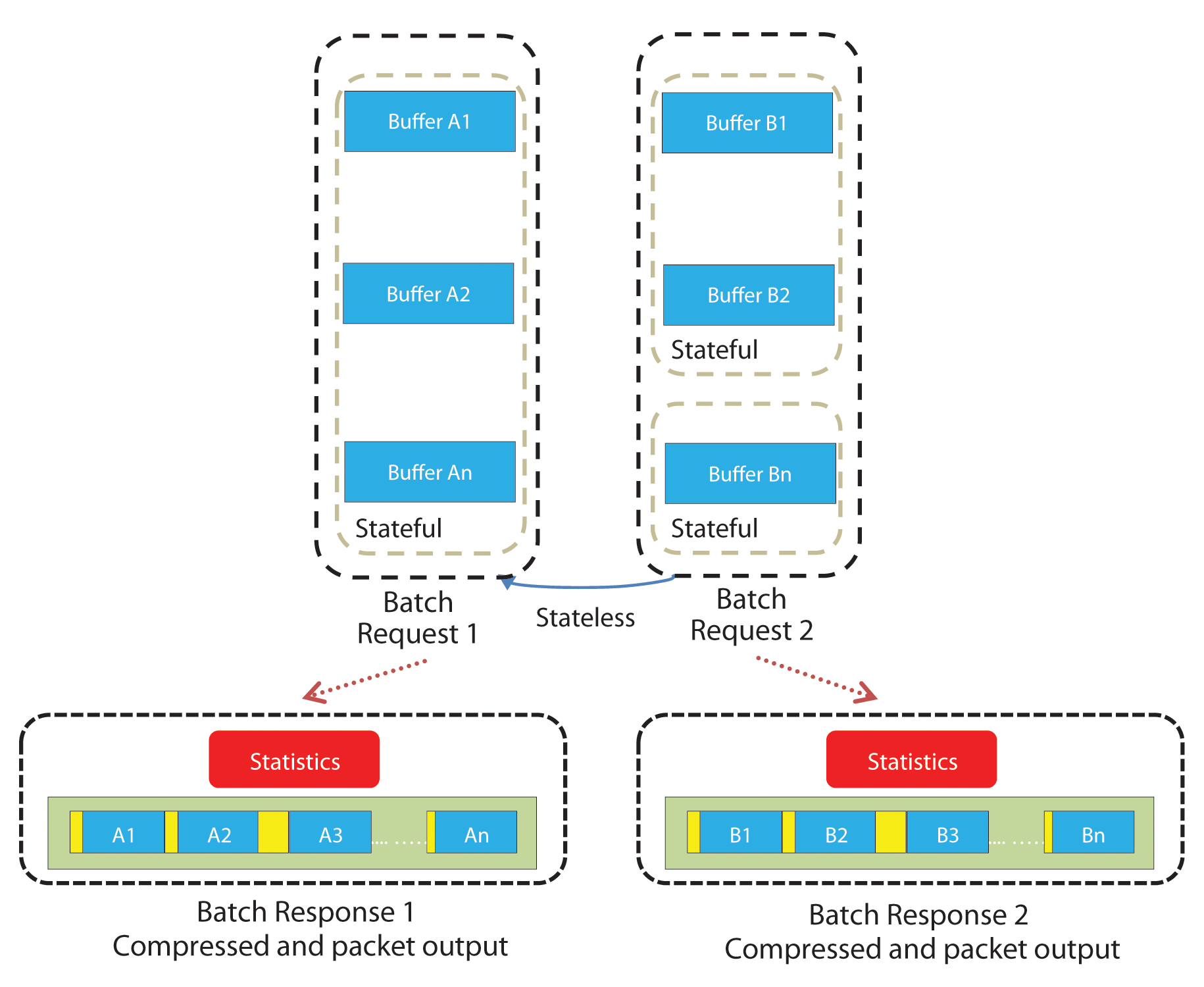

However, if all the requests could be batched into one and submitted at once, history could remain local to the group of requests.

In storage usage models, the client sends a buffer (usually 4 to 8KB) to the storage controller. The controller takes several of these user IOs and packs them together in a single output buffer. Depending upon the storage controller architecture, the controller can decide that each user IO is either stateful or stateless compared to the previous one in the buffer list. For example, if multiple user IOs happen to be contiguous, it is feasible to make them stateful, thereby improving the overall compression ratio (compared with the use case where each user IO was individually compressed).

On a user IO write, the controller batches multiple user IOs, compresses them, and packs them before writing back to the storage media. Finally, when the client reads the user IO data, the controller seeks the buffer of interest, decompresses the data, and returns the inflated data back to the user.

Thanks to a smart software algorithm and a flexible hardware accelerator, the Batch and Pack QuickAssist feature improves the compression ratio, system resource utilization, and read performance, while keeping I/O operations to a minimum.

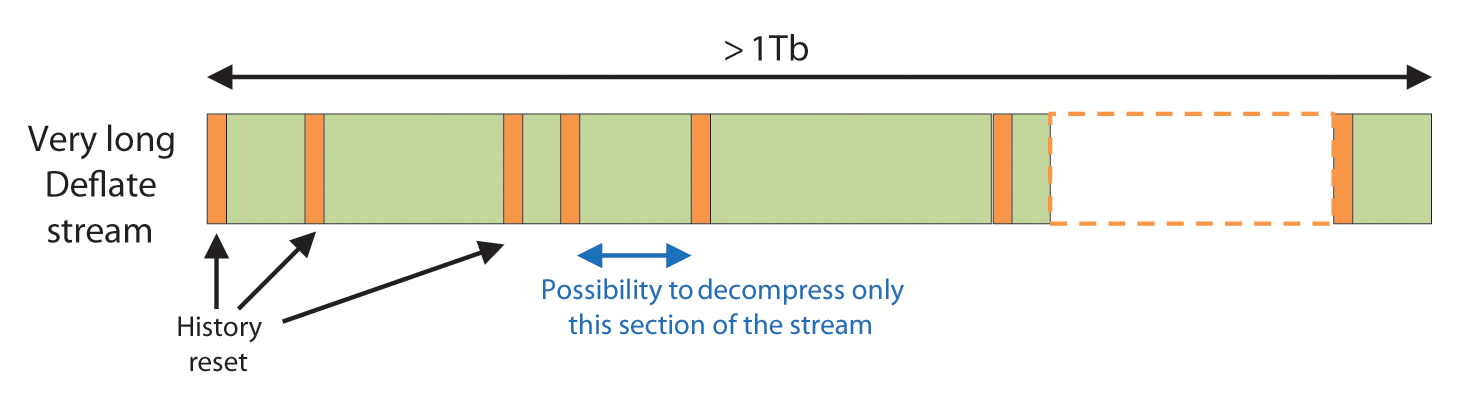

The Batch and Pack feature is intended to optimize the implementation of seekable compression. Seekable compression involves compressing multiple streams into one deflate stream, providing the facility to decompress only a portion of the deflate stream. The Batch and Pack feature allows grouping multiple compression jobs into a single request (batch). The deflated output data is concatenated in one buffer (pack). The Batch and Pack feature is exposed via a QuickAssist API.

The user has the option to select between stateful and stateless operation mode within the API. With stateful, the history is shared across all the jobs within the batch. As a consequence, the current job does not take advantage of the history from just the previous job (as with standard stateful requests), but from all the previous jobs instead. This behavior is the key to improving the compression ratio. However, it imposes a restriction: the entire "packed" buffer must be decompressed even if a single stream within the batch is needed by the application. No data dependency exists between multiple Batch and Pack requests; the Batch and Pack feature behaves in stateless mode.

In case the application would like to insert its own metadata, the Batch and Pack API provides a mechanism allowing the application to insert its metadata in the deflate output. Metadata is stored in a skip region added either at the end or at the beginning of a job.

If a skip region is included in the job source data, the Batch and Pack API will skip it. Data inside a skip region is not processed and is not available in the deflate bit stream. Figure 7 shows the behavior of the Batch and Pack feature across two requests in stateful mode. As you can see, the statefulness is kept within the jobs inside the request but not between requests. Table 2 lists the QuickAssist Batch and Pack API features.

Table 2: Batch and Pack API Feature List

| Input Parameters | Output Parameters |
|---|---|
| Unlimited number of jobs | Deflated output available in one single buffer for all the jobs within the batch |
| Each job's data is stored in a scatter-gather buffer list | Statistics available for each job |
| Support for skip regions at the beginning or at the end of each job | |
| Support to reset history | |

The Batch and Pack feature makes it possible to decompress a specific portion of a long stream without having to decompress the entire stream. The skip region allows inserting user metadata, which can easily be accommodated to store checksums, deflate block lengths, and offsets. With the history reset capability, it is possible to decompress only a portion of the deflate stream to retrieve the data segment of interest.

Figure 8 shows an example of how seekable decompression is achieved with Batch and Pack. Even if the deflate stream is very long, it is possible to decompress only a section of it. When the QuickAssist Batch and Pack API is called with the history reset on a particular job, you can decompress the deflate output from this job on.
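One way an application might use this is to keep a small index of the jobs in a packed buffer and, for a given job of interest, walk back to the nearest job that was compressed with a history reset. The sketch below shows only that lookup. The index layout, the byte-aligned offsets, and the names `job_index_t`, `seek_start_job`, and `seek_span_bytes` are assumptions for illustration and are not part of the QuickAssist API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical index entry the application could keep as metadata,
 * assuming each job's output starts on a byte boundary. */
typedef struct {
    uint32_t packed_offset; /* where this job's deflate output starts */
    uint32_t packed_length; /* deflate bytes produced for this job */
    bool     history_reset; /* true if the job was submitted with reset */
} job_index_t;

/* Return the job where decompression must begin in order to recover
 * `target`: the nearest job at or before it with a history reset.
 * Job 0 has no earlier history to depend on, so it is the fallback. */
static size_t seek_start_job(const job_index_t *jobs, size_t count,
                             size_t target)
{
    assert(jobs != NULL && target < count);
    size_t start = target;
    while (start > 0 && !jobs[start].history_reset)
        start--;
    return start;
}

/* Packed bytes that must be read to decompress jobs start..target. */
static uint32_t seek_span_bytes(const job_index_t *jobs, size_t start,
                                size_t target)
{
    assert(jobs != NULL && start <= target);
    uint32_t end = jobs[target].packed_offset + jobs[target].packed_length;
    return end - jobs[start].packed_offset;
}
```

The more often history is reset, the shorter the span that has to be decompressed for a read, at the cost of some compression ratio; an index like this could be stored in a skip region alongside the data.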

Conclusion

Intel QuickAssist technology allows the application to take advantage of the hardware acceleration. With features such as stateful compression and Batch and Pack, QuickAssist lets storage providers take advantage of leading-edge encryption and data compression capabilities.