OpenShift 3: Platform as a Service

Pain-Free Support

Most cloud providers try to attract customers with Infrastructure as a Service (IaaS), which gives them access to configurable virtual machines (VMs) in the cloud. Because almost every application is able to operate in an IaaS environment, it offers the greatest flexibility of all service models – accompanied, however, by high administrative effort. Platform as a Service (PaaS), on the other hand, appeals to developers who need a suitable environment to build and test an application but are not in the least interested in setting it up themselves.

IaaS, then, cannot be accomplished on the fly, and certainly not without in-depth knowledge of system administration. The customer is not merely free to configure it – they must. Because the basic images used in clouds are mostly minimal versions of the various distributions, the entire overhead of setting up the system rests on the admin. Developers, however, only need to know whether their programs function, and the admin just wants to be in a position to manage the VM. The concept of PaaS brings admins and developers together as a helpful building block for DevOps. Red Hat presents OpenShift as a platform, providing PaaS as a finished product for businesses; however, does it deliver what the red-hatted ones promise?

The PaaS Alternative

A pre-made PaaS environment allows you to start new development environments at lightning speed with all the important tools (e.g., Git and Jenkins). Continuous integration is the goal: Virtual systems, in which the application under test is available and executable, are created immediately from the source code repositories into which developers check their modifications. This principle does require suitable tools and processes that work together.

The final step of the process is rolling out the application into production. PaaS considerably reduces the effort required for the admin because, for a complete LAMP stack with an executable application, you essentially have to do nothing more than start the relevant container.

OpenShift's Variants

Red Hat offers interested parties several options for using OpenShift. The simplest variant is to use "gears" (Red Hat-speak for containers) in the public OpenShift cloud operated by Red Hat itself. All functions mentioned so far in this article are integrated in this public cloud. If you only want to look at the container side of OpenShift, you are well served by this option: The barriers to entry are very low, and your first platform will be up and running within just a few minutes.

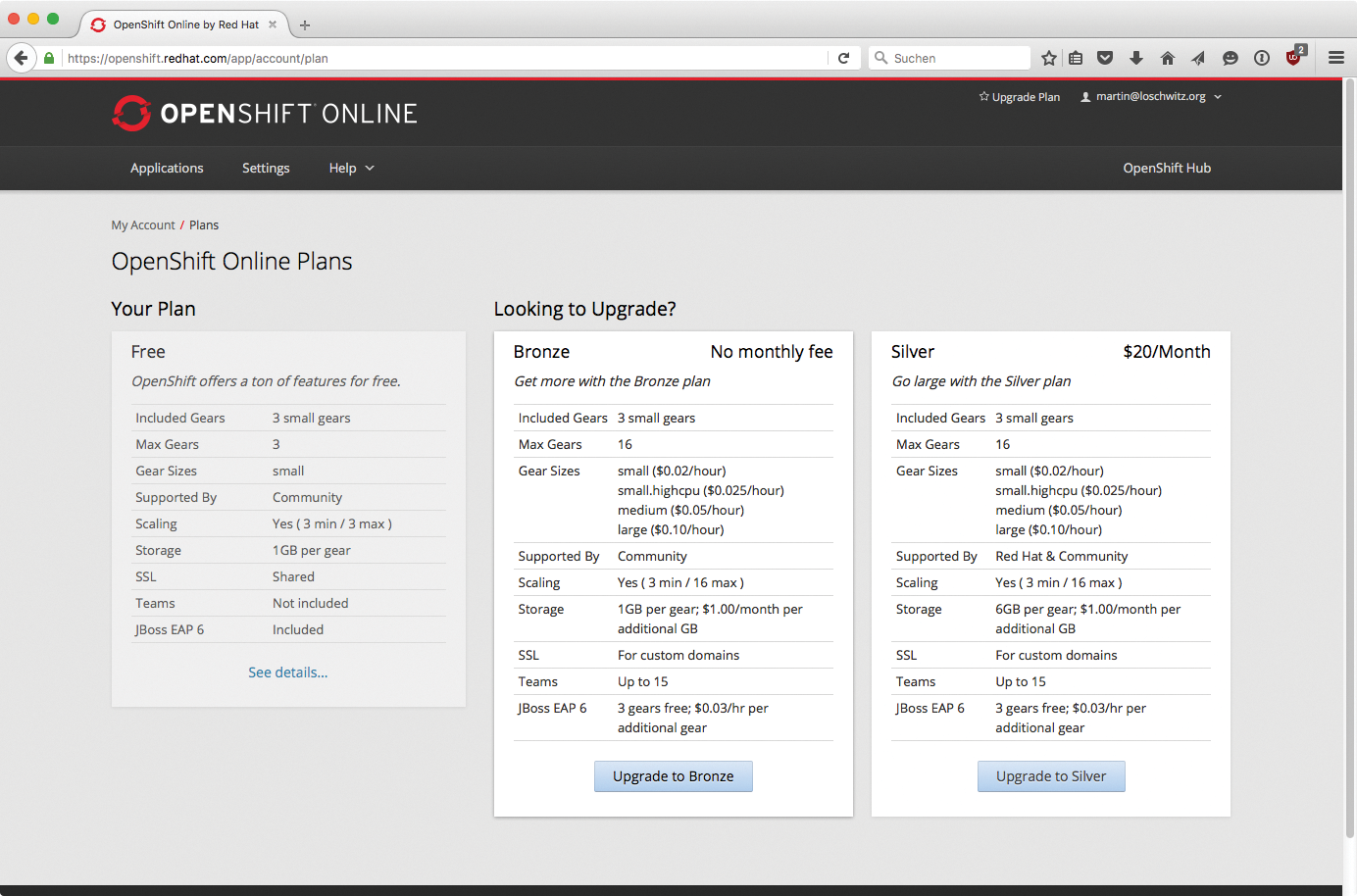

In the Free plan, up to three gears can operate free of charge; those needing more resources can switch to the Bronze or Silver plan. The Bronze plan is still basically free, but it does allow you to subscribe to further services for an additional charge. In the Silver plan, support is also part of the bargain (Figure 1).

Those unable to warm to a public cloud and who instead need the certainty that OpenShift will run on their own hardware, and only for their own purposes, might prefer the OpenShift Dedicated variant as an alternative to public installation. The Dedicated model is based on a platform hosted by Red Hat, but exclusively available to the respective customer. The provider takes care of both the hardware and the software; the responsibility of the user starts with operating the groups of containers (pods) that form the PaaS.

That sort of setup costs at least $48,000 a year, with the possible addition of further fees for more traffic or the provision of more application nodes [1]. However, compared with the costs of hardware alone for such a setup, that is a thoroughly reasonable price.

If, for whatever reason, you not only need exclusive hardware but also want to operate the platform yourself, you can turn to the OpenShift Enterprise version.

With the Enterprise version, you install OpenShift in your own data center, so you can retain full control, while Red Hat offers support from the sidelines. The Enterprise edition is not available without support. Red Hat maintains a refined silence on the OpenShift website [2] about the prices for the Enterprise license; as usual, the price to be paid could vary according to discounts granted. If you contact Red Hat sales, they will send an evaluation license for the product on request.

Shift Toward Docker

The first version of the OpenShift environment appeared in 2011; in the meantime, it has reached version 3. Under the hood, it has changed substantially. The producer conducted various experiments, especially in the area of virtualization, before settling on Docker [3] and Kubernetes [4] in the current version.

The solution provided is sensible. Docker is effectively the standard when it comes to container virtualization, whereas Kubernetes is a management framework that facilitates the administration of Docker containers on a large number of servers. OpenShift is thus a complete solution: The host's operating system is part of the environment, along with all components that run on it, which allows you to operate application containers.

The user interfaces, such as APIs or GUIs, are also expressly included in the package. The admin can control the roll-out of containers so that the desired services run within OpenShift. Development is also relevant: OpenShift promises developers a complete and simple environment, in which container development and the use of applications already running are closely linked. The full path from idea to application in a production operation is thus covered.

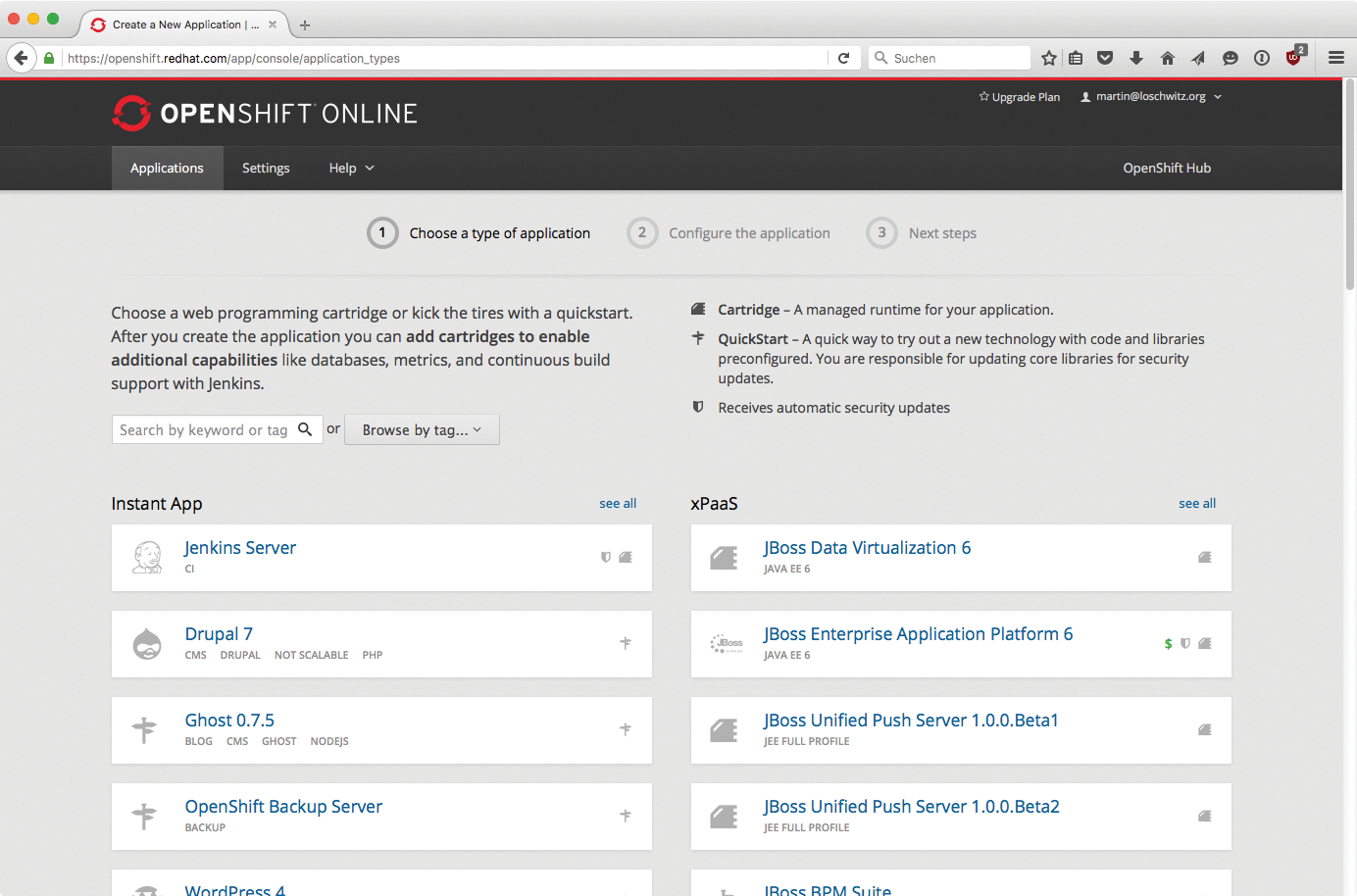

In concrete terms, this means that during the first stage in an environment already prepared via OpenShift, the developer writes code that is then checked into a version control system (Git in OpenShift's case). With the click of a mouse, a complete virtual environment is automatically generated from a container image (cartridge) and the developer's code. All important components are included within this environment (Figure 2). If the application and the obligatory web server need other services (e.g., a database), they can also be started from OpenShift along with the application.

As with all current Red Hat products, OpenShift is based on Red Hat Enterprise Linux (RHEL) 7, at least for the classic approach. As of version 3.1, OpenShift also supports Red Hat's Atomic Platform, which works with a system derived from RHEL that is specially optimized for the operation of containers. In some respects, the Atomic variant differs markedly from a normal RHEL. For instance, it does not run various system services from RPM packages, but as containers of their own.

The classic RHEL is clearly the more sensible solution if infrastructure to operate RHEL (e.g., functioning automation) is available at the data center. Atomic, however, is better suited for new, greenfield installations in which the overhead of a complete RHEL is unwelcome.

OpenShift Origin

The core component of Red Hat OpenShift is OpenShift Origin. Although the names are similar, they have two different purposes. On Origin, all threads run together; it is the product's technical foundation. Red Hat OpenShift, on the other hand, is the complete PaaS platform, of which Origin is the most important component. In OpenShift, Origin acts as the control center that performs all tasks directly related to the development and rollout of PaaS stacks. The management of sources is a part of that, as is creating containers on the basis of PaaS applications.

Between OpenShift versions 2 and 3, Red Hat practically turned the software upside down. Version 2 still worked on the basis of brokers and gears, which was Red Hat's wording for distributing containers on hosts. The whole technology was developed in-house. OpenShift 3, on the other hand, is completely customized to Docker: Image management is based on Docker and can also use Docker Hub to obtain basic images. Because Docker itself is not cluster-aware, Red Hat adds Google's Kubernetes. How that works and how admins are involved requires a closer look.

Kubernetes Under the Hood

The Kubernetes project, invented and since developed by Google, is a cloud environment based on container virtualization with Docker (Figure 3). In principle, it is therefore comparable to OpenStack or CloudStack, although when it comes to the specifics, large differences can be seen.

![OpenShift is a PaaS solution based on Docker and the Kubernetes cloud environment and extended by various functions. (Image from Red Hat openshift.com [5])](images/F03-openshift_1.png)

One of these differences is the overall complexity: The Kubernetes architecture is significantly less complicated than that of other clouds. Moreover, Kubernetes offers no support for any virtualization methods other than Docker containers, because the Docker concept is an integral component of Kubernetes' design.

A Kubernetes cloud comprises two types of nodes. The master servers are the controllers of the setup that coordinate the operation of containers on simple nodes. The master servers operate different services, such as an API for client access that also takes over management of users within the Kubernetes cloud [6].

The scheduler selects nodes on which new containers land according to the data available. The replication controller also plays a role, facilitating horizontal scaling. If a certain container can no longer cope with its burden, the replication controller starts new containers. That occurs automatically if the relevant environment is configured accordingly.
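The interplay of scheduler and replication controller can be pictured with a toy model. The following Python sketch is not Kubernetes code: the names `Node`, `schedule`, and `reconcile`, as well as the single "free slots" metric, are assumptions made purely for illustration. It places each new container on the node with the most free capacity and keeps starting containers until a desired number of replicas is running.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A worker node with a fixed number of container slots (illustrative)."""
    name: str
    capacity: int
    containers: list = field(default_factory=list)

    @property
    def free(self) -> int:
        return self.capacity - len(self.containers)


def schedule(nodes, container):
    """Place a container on the node that currently has the most free slots."""
    target = max(nodes, key=lambda n: n.free)
    if target.free <= 0:
        raise RuntimeError("no node has free capacity")
    target.containers.append(container)
    return target


def reconcile(nodes, app, desired):
    """Start containers for `app` until `desired` replicas are running.

    Returns the number of containers that had to be started.
    """
    running = sum(n.containers.count(app) for n in nodes)
    started = 0
    while running + started < desired:
        schedule(nodes, app)
        started += 1
    return started


nodes = [Node("node1", capacity=2), Node("node2", capacity=3)]
reconcile(nodes, "web", desired=3)
for n in nodes:
    print(n.name, n.containers)
```

Calling `reconcile` again with a higher `desired` value mimics horizontal scaling; a real scheduler weighs far more data than a simple slot count.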

Kubernetes clearly documents how it understands a container: Containers are always specific to an application. Ideally, only one application will run within a container. Because setups generally contain more than one service, Google has implemented pods in Kubernetes; a pod is a group of containers belonging to the same setup. All of a pod's containers start together and run on the same node of the setup.
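To make the pod idea concrete, the following sketch builds a pod description as a Python dictionary and prints it as JSON, a format Kubernetes accepts for manifests. The field names follow the Kubernetes v1 Pod schema; the helper `pod_manifest`, the pod name, and the image names are placeholders invented for this example and do not come from the article.

```python
import json


def pod_manifest(name, containers):
    """Build a Kubernetes v1 Pod manifest from (name, image, port) tuples."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": cname,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
                for cname, image, port in containers
            ]
        },
    }


# Image names are placeholders, not images referenced by the article.
manifest = pod_manifest(
    "shop",
    [
        ("web", "example/php-app:latest", 8080),
        ("cache", "example/cache:latest", 11211),
    ],
)
print(json.dumps(manifest, indent=2))
```

Both containers are listed in a single `spec`, which is what lets them start together and land on the same node.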

This principle is ideally suited for PaaS. Even when an environment needs several services, they can be started together in OpenShift, with OpenShift forwarding the request to Kubernetes, which then processes the necessary tasks in the background. Ultimately, with these facts in mind, it is only logical that Red Hat discarded its own underpinnings in OpenShift 2 and latched onto Kubernetes in version 3.

Network and Persistent Storage

Kubernetes relieves OpenShift of two further problems on which cloud environments put special demands: network and storage. For example, the network must be configurable from the environment and cannot depend on on-site network hardware; yet, the containers of two customers running on the same bare metal still must not be able to see each other.

For this problem, Kubernetes offers software-defined networking (SDN) that is even allowed to harness external solutions (e.g., Open vSwitch). Kubernetes also processes traffic with the outside world automatically, so admins can cross that item off their list. The customer can be sure that their active containers have a network connection and can communicate with one another.

Storage is an ongoing theme in clouds. Kubernetes assumes that a container can roll itself out afresh at any time. Persistent storage is therefore not envisaged for the average container. Nonetheless, everyday experience shows that it is sometimes necessary; a database with customer data is the perfect example.

Kubernetes can manage persistent storage and attach it to containers, although the user has to request this specifically. OpenShift loops this option through its API so that containers can be provided with persistent storage for PaaS.
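What such an explicit request might look like is sketched below, again as Python dictionaries that serialize to JSON. The field names follow the Kubernetes v1 PersistentVolumeClaim and Pod schemas; the helpers `volume_claim` and `mount_claim`, the claim name, the image name, and the mount path are assumptions for illustration only.

```python
import copy
import json


def volume_claim(name, size):
    """Build a v1 PersistentVolumeClaim that requests `size` of storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def mount_claim(pod, claim_name, container_name, mount_path):
    """Return a copy of `pod` with the claim mounted into one container."""
    pod = copy.deepcopy(pod)
    pod["spec"].setdefault("volumes", []).append(
        {"name": claim_name, "persistentVolumeClaim": {"claimName": claim_name}}
    )
    for container in pod["spec"]["containers"]:
        if container["name"] == container_name:
            container.setdefault("volumeMounts", []).append(
                {"name": claim_name, "mountPath": mount_path}
            )
            break
    else:
        raise KeyError(f"no container named {container_name!r} in pod")
    return pod


# A minimal database pod; the image name is a placeholder.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "db"},
    "spec": {
        "containers": [{"name": "postgresql", "image": "example/postgresql:latest"}]
    },
}

claim = volume_claim("db-data", "1Gi")
pod_with_storage = mount_claim(pod, "db-data", "postgresql", "/var/lib/pgsql/data")
print(json.dumps(claim, indent=2))
print(json.dumps(pod_with_storage, indent=2))
```

The claim is a separate object from the pod, which reflects the point made above: storage outlives any single container and has to be asked for deliberately.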

This hymn of praise to Kubernetes raises the question of whether administrators should deal directly with Kubernetes instead of taking the long route via OpenShift. In fact, Kubernetes is very well suited for PaaS, but the environment in the version offered by Google is in a rough state. Administrators have to take care of setting it up and equipping the environment with suitable applications. Similarly, everything that eases deployment for developers, such as direct accessibility to Git, is lacking in Kubernetes.

In this area, Red Hat has found the added value that should make OpenShift appetizing to the customer: Red Hat is not only expanding Kubernetes with an installation routine and fancy graphical tools, but also rolling in with a truckload of containers optimized for OpenShift. The integration into development processes, realized via Git, is also attractive.

Installation

If you want to run OpenShift's Enterprise edition in your own data center, you first have to deal with the installation. Again, Red Hat has done its homework. The atomic-openshift-installer utility puts the program on the individual nodes of a setup if they are pre-installed with RHEL 7. The program requires a configuration file with values like the participating nodes and their IP addresses. In this file, the admin defines who is the Kubernetes master and which nodes can later become regular Kubernetes nodes so that even unsupervised installations pose no problems.
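The kind of information such a file carries, and the checks worth running before an unattended installation, can be sketched in a few lines of Python. Note that the data layout below is hypothetical and is not the actual file format of atomic-openshift-installer; the function `validate_inventory`, the `ip` and `role` keys, and the example addresses are assumptions for illustration.

```python
import ipaddress


def validate_inventory(hosts):
    """Check a list of {'ip': ..., 'role': ...} entries; return error strings."""
    errors = []
    seen = set()
    for host in hosts:
        try:
            ip = ipaddress.ip_address(host["ip"])
        except ValueError:
            errors.append(f"invalid IP address: {host['ip']}")
            continue
        if ip in seen:
            errors.append(f"duplicate IP address: {ip}")
        seen.add(ip)
        if host["role"] not in ("master", "node"):
            errors.append(f"unknown role for {ip}: {host['role']}")
    if not any(h["role"] == "master" for h in hosts):
        errors.append("no master defined")
    return errors


# Hypothetical inventory: one master and two regular nodes.
inventory = [
    {"ip": "192.168.10.10", "role": "master"},
    {"ip": "192.168.10.11", "role": "node"},
    {"ip": "192.168.10.12", "role": "node"},
]
print(validate_inventory(inventory) or "inventory looks consistent")
```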

If that is too much effort, a "containerized" installation is available that involves several containers that start locally and hold the components relevant for OpenShift. It could hardly be easier than that.

Scripting Languages, Databases, Web

Once OpenShift is set up, the potential PaaS applications occupy center stage. Red Hat still refers to these as gears – think container when you hear "gear." Red Hat is noticeably going flat out when it comes to images. The company has created a clone of the Docker Hub in the form of the OpenShift Hub. On it are various cartridges with which environments (e.g., for PHP, Ruby, or Java) and many other technologies can be started in OpenShift with a mouse click. Cartridges are available for all relevant scripting and programming languages. Virtual machines can start directly from the Hub [7] in Red Hat's public OpenShift Cloud (Figure 4).

If you instead want to operate a container in your own OpenShift instance, you will find the sources on GitHub. If neither Red Hat nor the community offers a cartridge for the framework you need, you can write one yourself. You will find various instructions for how to go about this online. Because a separate API service executes cartridges in OpenShift, its documentation [8] is also recommended reading.

Alongside containers for development environments, Red Hat provides containers for databases, such as MySQL 5.6 or PostgreSQL 9.4. On the basis of these templates, the admin can roll out the database along with the application containers so that all the required services work immediately after startup.

The gold standard is a container that has been certified by Red Hat [9]. This means that Red Hat has checked not only that it meets technical standards, but also that the provider will supply support and security updates. Red Hat also provides its own certified containers; the same promises apply.

Through its Marketplace, where suppliers sign up to sell their products, OpenShift incorporates services that cannot be rolled out as gears in an OpenShift installation. These services will typically be of interest to businesses backing other "as a service" offers; for example, ElephantSQL offers PostgreSQL as a service, and Clear DB has a similar offering for MySQL.

Both providers operate the services themselves and individually release the credentials through which the service can ultimately be reached.

If a Marketplace module is available for a service, the service can be incorporated seamlessly into your own PaaS directly through the OpenShift tools.

The Marketplace is therefore a sensible addition to OpenShift, not least because plenty of exotic services are available, such as RabbitMQ as a Service (CloudAMQP) or Keen IO, which offers Analytics as a Service for the cloud.

High Availability for Controllers

A typical Kubernetes setup has obvious weaknesses, particularly when it comes to the master node, which is a single point of failure. That said, its failure does not automatically lead to all containers crashing or losing their connection to the Internet. However, it might well be impossible to manage existing containers or create new ones with a failed master node.

Red Hat has therefore extended Kubernetes in OpenShift to include a high-availability solution. Because the company employs, or used to employ, all the developers who designed the Linux-HA stack, choosing it was only logical: Pacemaker and Corosync in tandem ensure that the failure of a master node in OpenShift is compensated for within a few seconds by launching the required services on another host.
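The principle behind such a failover can be reduced to a very small decision rule. The Python sketch below is neither Pacemaker nor Corosync configuration, and it does not use their APIs; the function `fail_over` and the host names are hypothetical and only illustrate the idea of moving the master services to a healthy standby.

```python
def fail_over(hosts, active, healthy):
    """Return the host that should run the master services.

    Keeps the current host while it is healthy; otherwise picks the first
    healthy standby in list order.
    """
    if active in healthy:
        return active
    for host in hosts:
        if host in healthy:
            return host
    raise RuntimeError("no healthy host left to run the master services")


hosts = ["master1", "master2", "master3"]

# master1 has failed; master2 and master3 still respond.
new_active = fail_over(hosts, active="master1", healthy={"master2", "master3"})
print("master services now run on", new_active)
```

A real cluster manager additionally has to agree on membership and fence the failed host, which this sketch leaves out entirely.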

OpenShift offers its own API and thereby fulfills the minimum requirement for an application in the context of clouds. If you want to use the platform's services, you have the option of using its own web interface, the web console, or the command-line client rhc. The functional range of both approaches is practically identical, making personal preference the sole decider.

Familiar Sight for Developers

The aspects of OpenShift I have looked at so far mostly relate to the installation and operation of clusters, as well as the containers within. However, OpenShift is not an operations tool: DevOps is its hunting ground, and developers will find plenty of attractive features in the solution.

In the context of OpenShift, DevOps means that the platform does not just start gears and groups of gears with specific environments and services on request: It is additionally in a position to roll out applications automatically. The application is a distinct administrative unit in OpenShift, and gears are consolidated into logical groups that bear the name of the application.

A Git server belongs directly to OpenShift: Developers create an application in OpenShift and select which features it has (e.g., "needs MySQL" and "uses PHP"). Afterward, they can check out the (empty) Git directory that pertains to this application locally and make their changes. All aspects of this application can be configured via the Git directory. Once the local changes are complete, you commit them via Git and push them back to OpenShift. The push ensures that, in the final step, the Git folder's changes are activated in the container.

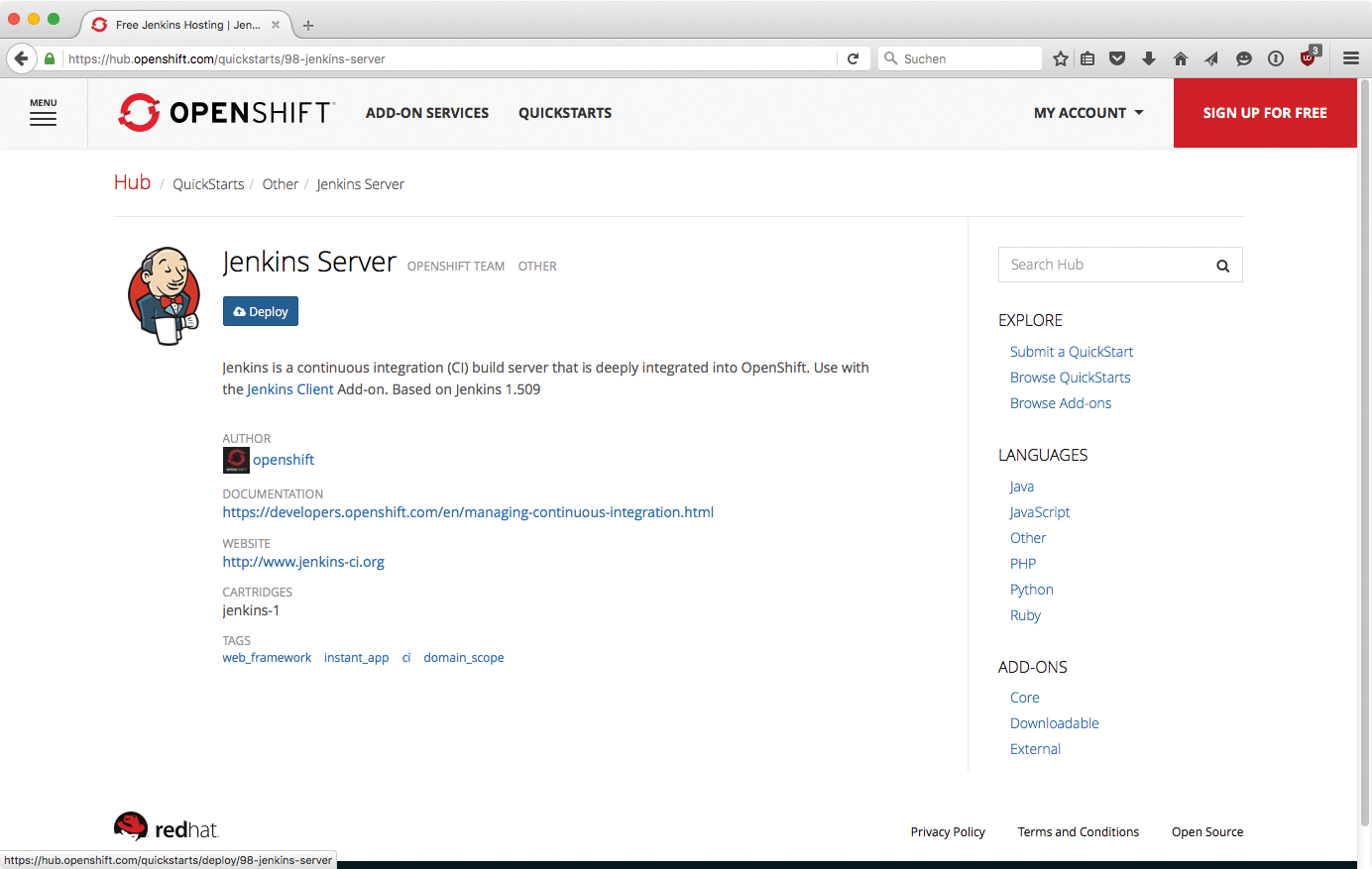

One indispensable tool for continuous development is Jenkins, which puts new code through its paces by checking it with a myriad of previously confirmed test cases. Those who do not want to push their changes directly from development into a container can run them through the tests defined in Jenkins for reinforcement before the code is rolled out in a container.

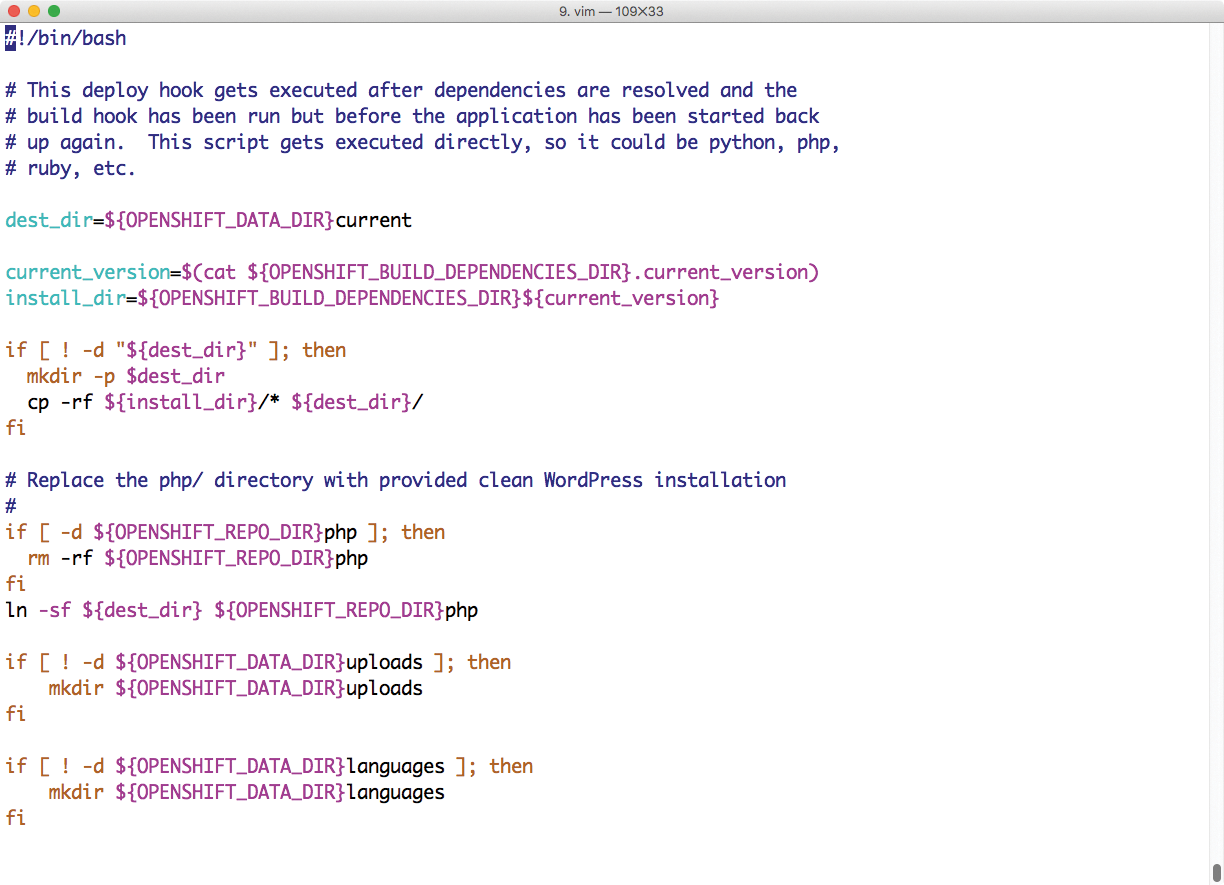

If you want another tool for code checking besides Jenkins, you can use hooks (see Figure 5) that let you specify actions directly in the Git directory, which OpenShift will then execute after a change to the application.
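The sequence of push, test run, hooks, and rollout described in this section can be modeled in a few lines. The following Python sketch is a simplified stand-in, not OpenShift's or Jenkins' actual mechanism; `handle_push`, the test names, and the hook functions are hypothetical and exist only to show the ordering and the gatekeeping role of the tests.

```python
def handle_push(commit, tests, hooks, deploy):
    """Run all tests; run hooks and deploy only if every test passes."""
    failed = [name for name, test in tests.items() if not test(commit)]
    if failed:
        return {"deployed": False, "failed_tests": failed}
    for hook in hooks:
        hook(commit)
    deploy(commit)
    return {"deployed": True, "failed_tests": []}


log = []

# Hypothetical checks and actions; a commit is modeled as a plain dict.
tests = {
    "has_message": lambda c: bool(c["message"]),
    "touches_files": lambda c: len(c["files"]) > 0,
}
hooks = [lambda c: log.append(f"hook: notify team about {c['id']}")]


def deploy(commit):
    log.append(f"deploy: {commit['id']}")


good = {"id": "abc123", "message": "Fix login form", "files": ["index.php"]}
bad = {"id": "def456", "message": "", "files": ["index.php"]}

print(handle_push(good, tests, hooks, deploy))
print(handle_push(bad, tests, hooks, deploy))
print(log)
```

Because the hooks and the rollout run only after the tests, a failing commit never reaches the container in this model.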

Conclusion

OpenShift impresses with its solid functionality and simplicity. The product achieves what the manufacturer promises: If you want PaaS, you will definitely make your daily routine easier with the use of OpenShift. OpenShift has a clear advantage over pure Kubernetes; complete and even supplier-certified containers for specific environments are especially important in this regard.

However, the product is also very attractive because it integrates well with development processes and considerably shortens the route that the source code takes from developer to final roll-out into production. If you have already thought about using Kubernetes in your own company, by all means take a closer look at OpenShift.