Live migration of virtual machines with KVM

Movers

The KVM hypervisor has been a powerful alternative to Xen and VMware in the Linux world for several years. To make the virtualization solution suitable for enterprise use, the developers are continually integrating new and useful features. An example of this is live migration of virtual machines (VMs).

On Linux systems, the Kernel-based Virtual Machine (KVM) hypervisor, which is built into the kernel, gained the upper hand long ago. KVM is available on all common Linux distributions without additional configuration overhead, and major distributors such as Red Hat and SUSE are continuously improving it. For most users, the upper performance limits in terms of memory usage and number of VMs are probably less interesting than the bread-and-butter features that competitor VMware already offered while KVM was still in its infancy.

KVM has since caught up and has offered live migration of virtual machines [1] for several years; this feature is a prerequisite for reliably virtualizing services. It lets admins move VMs, along with their services, from one server to another, which is useful for load balancing or hardware maintenance. Live migration also makes high-availability services easier to build. Throughout the process, the virtual machines keep running, with interruptions kept to a minimum. Ideally, clients using the VM as a server do not even notice the migration.

Live migration relies on some sophisticated techniques intended to ensure, on one hand, that the interruption to service is as short as possible and, on the other, that the state of the migrated machine corresponds exactly to that of the original. To achieve this, the hypervisors involved begin transferring the RAM while the source VM keeps running and track which memory pages the VM modifies in the meantime; those pages are sent again in further rounds. Once the remaining changes are small enough to be transferred within the allotted time, the source VM is paused, the last modified memory pages are copied to the target, and the VM resumes on the new host.
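To make the convergence condition concrete, here is a toy Python model of this iterative pre-copy scheme. It assumes a constant dirty-page rate and a constant network bandwidth, which real guests rarely have; the function name, its parameters, and the numbers in the example are illustrative assumptions, not KVM or QEMU internals. In practice, libvirt exposes the tolerated pause as a tunable (virsh migrate-setmaxdowntime).

```python
# Sketch only: simulate_precopy, MigrationResult, and all numbers are
# hypothetical illustrations of the pre-copy idea, not QEMU's implementation.
from dataclasses import dataclass


@dataclass
class MigrationResult:
    rounds: int          # copy rounds completed while the guest kept running
    downtime_s: float    # duration of the final stop-and-copy pause
    converged: bool      # True if that pause fit within max_downtime_s


def simulate_precopy(ram_mb: float, dirty_rate_mb_s: float,
                     bandwidth_mb_s: float, max_downtime_s: float,
                     max_rounds: int = 30) -> MigrationResult:
    """Toy pre-copy model: assumes constant dirty rate and bandwidth."""
    remaining_mb = ram_mb  # the first round has to send all guest RAM
    rounds = 0
    # Keep copying while the rest would not fit into the allowed pause.
    while remaining_mb / bandwidth_mb_s > max_downtime_s:
        if rounds == max_rounds:
            # No convergence: report the pause a forced stop would cause.
            return MigrationResult(rounds, remaining_mb / bandwidth_mb_s, False)
        copy_time_s = remaining_mb / bandwidth_mb_s
        # Pages dirtied by the running guest during this round must be resent;
        # at most the whole RAM can be dirty.
        remaining_mb = min(ram_mb, dirty_rate_mb_s * copy_time_s)
        rounds += 1
    # Small enough: pause the source VM, copy the rest, resume on the target.
    return MigrationResult(rounds, remaining_mb / bandwidth_mb_s, True)


if __name__ == "__main__":
    # Assumed values: 4096 MB guest, 50 MB/s dirtied, ~1000 MB/s link, 300 ms pause.
    print(simulate_precopy(4096, 50, 1000, 0.3))
```

With these assumed values, the model needs one pre-copy round and then pauses the guest for roughly 0.2 seconds. If the guest dirties memory faster than the link can carry it, the remaining amount never shrinks and the migration does not converge.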

Shared Storage Required

A prerequisite for the live migration of KVM machines is that the disks involved reside on shared storage; that is, they use a data repository that is shared between the source and target hosts. This can be, for example, NFS, iSCSI, or Fibre Channel, but it can also be a distributed or clustered filesystem such as GlusterFS or GFS2. Libvirt, the abstraction layer for managing various hypervisors on Linux, organizes data storage in storage pools. In addition to the technologies mentioned above, these pools can be based on conventional disks, ZFS pools, or Sheepdog clusters.

The different shared storage technologies offer various benefits and require more or less configuration overhead. If you use storage appliances from a well-known manufacturer, you can use the network storage protocols they support (e.g., iSCSI, Fibre Channel), or you can stick with NFS. NetApp Clustered ONTAP, for example, also supports pNFS, which newer Linux distributions such as Red Hat Enterprise Linux or CentOS 6.4 and later support on the client side.

iSCSI, on the other hand, can be used redundantly thanks to multipathing, but again the network infrastructure to support this must be available. Fibre Channel is the most complex in this respect, because it requires a dedicated storage area network (SAN). There is also an Ethernet-based alternative in the form of FCoE (Fibre Channel over Ethernet). An even more exotic solution is SCSI RDMA over InfiniBand or iWARP.

Setting Up the Test Environment with NFS

For initial tests, you can scale things down a notch and use NFS; after all, this kind of storage can be set up with on-board tools. On a Linux server running CentOS 7, for example, you can set up an NFS server very quickly. Because the NFS server is implemented in the Linux kernel, only the rpcbind daemon and the NFS management tools are missing, and they can be installed via the nfs-utils package. The /etc/exports file lists the directories "exported" by the server. The following command starts the NFS service:

systemctl start nfs

A call to journalctl -xn shows whether the command succeeded or failed, and showmount -e lists the currently exported directories. In principle, the syntax of the exports file is simple: The exported directory is followed by the IP address or hostname of the machines allowed to mount it, immediately followed in parentheses by options that determine, for example, whether the share is read-only or writable. Many more options are available, as a glance at the exports man page will tell you.

To write to the network share with root privileges from other hosts, you also need to set the no_root_squash option. A line for this purpose in /etc/exports would look like this:

/nfs 192.168.1.0/24(rw,no_root_squash)

It allows all the computers on the 192.168.1.0/24 network to mount the /nfs directory and write to it with root privileges. You can then restart the NFS server or type exportfs -r to tell the server about the changes. If you now try to mount the directory from a machine on this network, it may still fail, because you might need to adjust the firewall on the server (i.e., open TCP port 2049).
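The exports syntax is easy to get subtly wrong: A space between the client and the opening parenthesis changes the meaning, because an option list without a client name applies to every host. The following minimal Python parser makes this visible. It is a sketch that handles only the simple "directory client(options)" form, none of the quoting or continuation rules of the real file format, and parse_exports_line is a hypothetical helper, not part of nfs-utils.

```python
# Sketch only: parse_exports_line is a hypothetical helper for the simple
# "directory client(options) ..." form; it is not the nfs-utils parser.
import re

# A client spec optionally followed, with no space in between, by "(options)".
CLIENT_RE = re.compile(r"^(?P<client>[^()\s]*)(?:\((?P<opts>[^)]*)\))?$")


def parse_exports_line(line: str) -> tuple[str, dict[str, list[str]]]:
    """Return (directory, {client: [options]}) for one simple exports line."""
    line = line.split("#", 1)[0].strip()  # ignore trailing comments
    if not line:
        raise ValueError("empty exports line")
    directory, *specs = line.split()
    clients: dict[str, list[str]] = {}
    for spec in specs:
        match = CLIENT_RE.match(spec)
        if match is None:
            raise ValueError(f"cannot parse client spec {spec!r}")
        # An option list without a client name applies to every host.
        client = match.group("client") or "*"
        opts = match.group("opts")
        clients[client] = opts.split(",") if opts else []
    return directory, clients


if __name__ == "__main__":
    print(parse_exports_line("/nfs 192.168.1.0/24(rw,no_root_squash)"))
    print(parse_exports_line("/nfs 192.168.1.0/24 (rw,no_root_squash)"))
```

The first line yields one client with both options. With the stray space in the second line, the same options are granted to every host (*), while the intended network only gets the defaults.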

Storage Pool for libvirt

If you prepare two KVM hosts, you need to define a libvirt storage pool with NFS on each of them. If configured appropriately, libvirt handles mounting the share itself. You first need to define a storage pool, for example, using the virsh command-line tool. This in turn requires an XML file that specifies the location and format of the storage. An example is shown in Listing 1.

Listing 1: Storage Pool with NFS

<pool type='netfs'>
  <name>virtstore</name>
  <source>
    <host name='192.168.1.1'/>
    <dir path='/virtstore'/>
    <format type='nfs'/>
  </source>
  <target>
    <path>/virtstore</path>
    <permissions>
      <mode>0755</mode>
      <owner>-1</owner>
      <group>-1</group>
    </permissions>
  </target>
</pool>

Save this XML file as virtstore.xml to define a storage pool named virtstore:

virsh pool-define virtstore.xml

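The following command configures the storage pool to start automatically:

virsh pool-autostart virtstore

To activate the pool, and thus mount the share, right away instead of waiting for the next libvirtd start, use virsh pool-start virtstore.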
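Because both KVM hosts need an identical pool definition, generating the XML from a script can help avoid typos. The following Python sketch rebuilds Listing 1 with the standard library's xml.etree.ElementTree module. The function name netfs_pool_xml and its parameters are hypothetical, and the output still has to be loaded with virsh pool-define on each host as described above.

```python
# Sketch only: netfs_pool_xml is a hypothetical helper that reproduces the
# element layout of Listing 1; it does not talk to libvirt itself.
import xml.etree.ElementTree as ET


def netfs_pool_xml(name: str, nfs_host: str, export_dir: str,
                   mount_point: str, mode: str = "0755") -> str:
    """Return libvirt netfs pool XML with the same layout as Listing 1."""
    pool = ET.Element("pool", type="netfs")
    ET.SubElement(pool, "name").text = name
    source = ET.SubElement(pool, "source")
    ET.SubElement(source, "host", name=nfs_host)      # NFS server
    ET.SubElement(source, "dir", path=export_dir)     # exported directory
    ET.SubElement(source, "format", type="nfs")
    target = ET.SubElement(pool, "target")
    ET.SubElement(target, "path").text = mount_point  # local mount point
    perms = ET.SubElement(target, "permissions")
    ET.SubElement(perms, "mode").text = mode
    ET.SubElement(perms, "owner").text = "-1"         # same values as Listing 1
    ET.SubElement(perms, "group").text = "-1"
    ET.indent(pool)  # pretty-print; requires Python 3.9 or later
    return ET.tostring(pool, encoding="unicode")


if __name__ == "__main__":
    print(netfs_pool_xml("virtstore", "192.168.1.1", "/virtstore", "/virtstore"))
```

Redirecting the output to a file (e.g., python3 make_pool.py > virtstore.xml, with an assumed script name) produces a definition equivalent to Listing 1.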

You still need to adjust the owner and permissions of the files depending on your Linux distribution. If SELinux is enabled, as on Red Hat Enterprise Linux and its derivatives, a special SELinux boolean allows access; the -P option makes the setting persist across reboots:

setsebool -P virt_use_nfs 1

The VM hosts involved communicate via the Secure Shell (SSH), so you need to configure it accordingly. The easiest approach is to generate a new key with ssh-keygen and do without a passphrase, which is only advisable on a well-secured network, of course. Then, copy the public key to the other host with ssh-copy-id and test whether an SSH login without a password works.

When you create a new virtual machine on one of the two VM hosts, make sure it uses the previously created NFS storage pool. You must also disable the cache of the virtual block device; otherwise, virsh refuses to migrate with this error:

error: Unsafe migration: Migration may lead to data corruption if disks use cache != none

You will want to turn off the cache anyway because the VM host also has a buffer cache that is used before the VM data reaches a physical disk via the simulated disk. To do this, you can either use a graphical front end such as virt-manager or continue to do everything at the console by editing the configuration with virsh edit VM. In the XML file that defines the configuration of a virtual machine, look for the lines with the disk settings and add the cache = 'none' option to the driver attributes (Listing 2). Now you can start migrating a VM manually as follows:

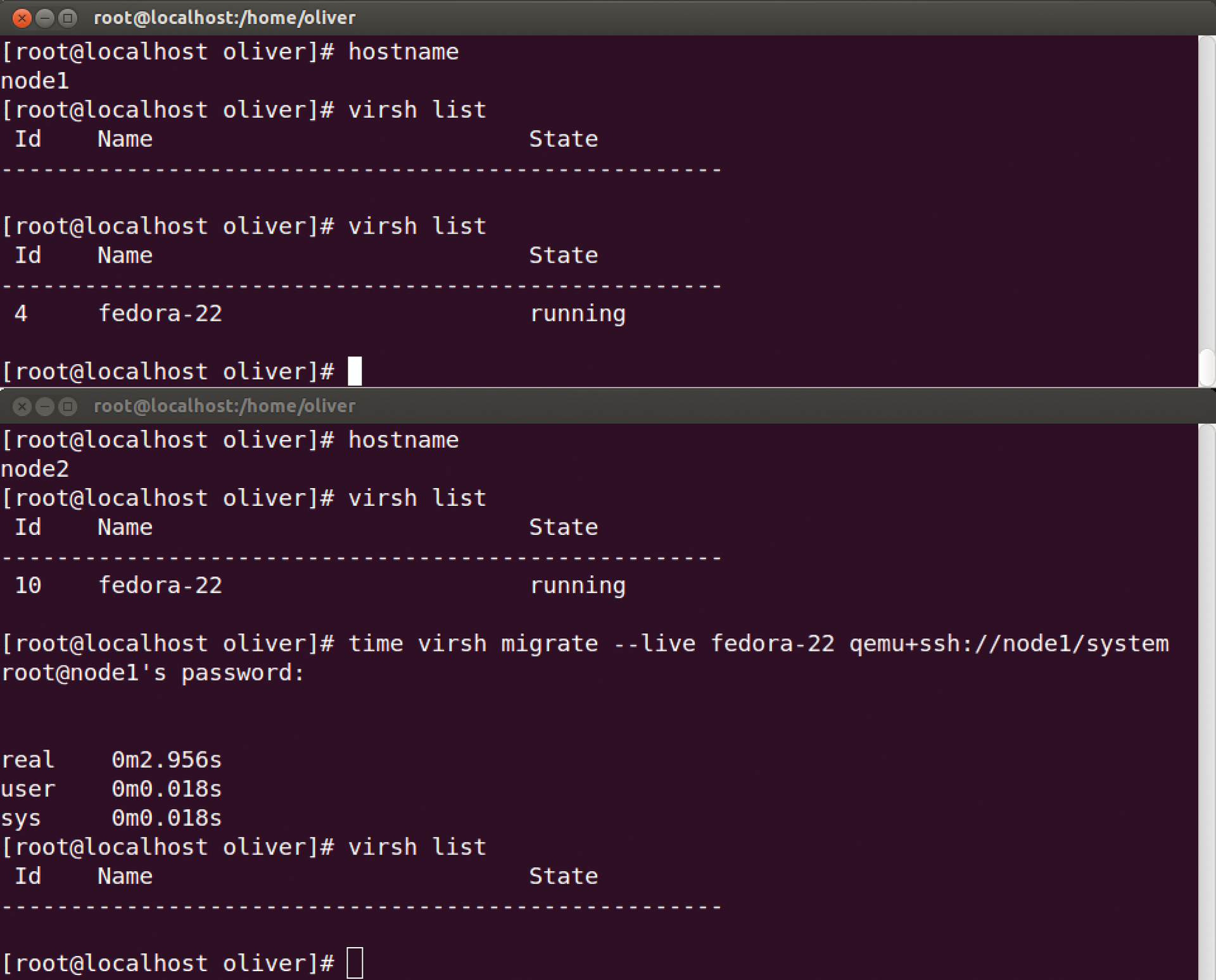

virsh migrate --live fedora-22 qemu+ssh://node2/system

Listing 2: XML File Disk Settings

01 <devices> 02 <emulator>/usr/bin/kvm</emulator> 03 <disk type='file' device='disk'> 04 <driver name='qemu' type='raw' cache='none'/> 05 ...

The virtual machine fedora-22 was transferred from computer node1 to computer node2 in about three seconds (Figure 1).
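Before migrating, you can check a domain's configuration for disks that still lack cache='none'. The Python sketch below parses domain XML, such as the output of virsh dumpxml fedora-22, and lists the offending disks by target device. The helper disks_without_cache_none is hypothetical, and libvirt's own safety check considers more cases than this simplified rule.

```python
# Sketch only: disks_without_cache_none is a hypothetical pre-flight check
# that approximates, but does not replicate, libvirt's migration safety test.
import xml.etree.ElementTree as ET


def disks_without_cache_none(domain_xml: str) -> list[str]:
    """Return target names (e.g. 'vda') of writable disks lacking cache='none'."""
    root = ET.fromstring(domain_xml)
    offenders = []
    for disk in root.iter("disk"):
        if disk.find("readonly") is not None:
            continue  # a read-only disk has no unflushed guest writes
        driver = disk.find("driver")
        cache = driver.get("cache") if driver is not None else None
        if cache != "none":
            target = disk.find("target")
            offenders.append(target.get("dev", "?") if target is not None else "?")
    return offenders
```

An empty result means every writable disk uses cache='none'; otherwise, the returned device names point to the disk sections to fix with virsh edit.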

Management Tools for KVM

Instead of virsh, you will want to use more sophisticated management tools for a production setup, such as oVirt, the open source project on which the Red Hat Enterprise Virtualization Manager (RHEV-M) is based.

In production environments, the management instance of oVirt or RHEV-M should reside on a separate computer with at least 4GB of memory and 20GB of disk space. You also need one or more virtualization hosts, on which both the VMs and a software component named VDSM (Virtual Desktop and Server Manager) run; VDSM communicates with the central server.

The easiest way is to install on CentOS or Red Hat Enterprise Linux, but the oVirt website also has some tips for installing on Ubuntu and Debian. On CentOS and RHEL, first add the oVirt package repository:

yum localinstall http://resources.ovirt.org/pub/yum-repo/ovirt-release35.rpm

Then, install the software using yum -y install ovirt-engine. Next, configure it with engine-setup; in most cases, you can accept the defaults.

oVirt also requires some thought about the firewall configuration, because numerous ports need to be opened; the best place to find out which ones is the oVirt FAQ [2]. Alternatively, oVirt offers to handle firewall management during the installation, but only on the management server.

Once oVirt is running, you can reach the web interface at https://oVirt-Server/ovirt-engine, where oVirt-Server stands for the name of your management server. Log in to the Administration Portal to complete the configuration of the data center. In addition to the virtualization hosts, you also need a storage domain (e.g., based on NFS). If the NFS server is a Linux machine, you need to set special export options for it to work with oVirt:

/virtstore 192.168.100.0/24(rw,anonuid=36,anongid=36,all_squash)

These options map all access to user and group ID 36, which belong to the vdsm user and the kvm group on oVirt hosts. If the installation of a VM host fails, check the firewall settings and make sure the oVirt repository is configured on the host. Once the oVirt Manager has installed the required VDSM software on the host, it switches the host's status to "active." Together with the storage domain, you now have a functioning data center.

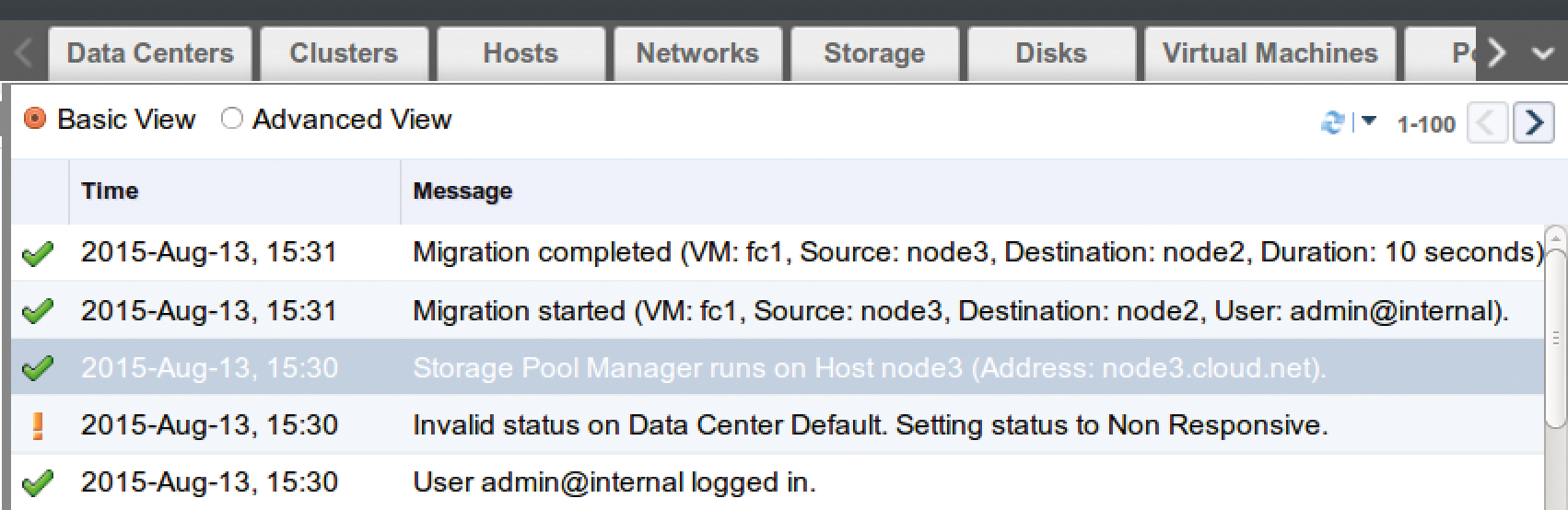

To install a virtual machine, you need a template; the easiest approach is to take one from the existing Glance repository. To do this, first click on Data Centers | Default in the left column and select the Storage tab at the top. Right-click on the ovirt-image-repository domain to import the available images as templates. Once VMs are running, you can migrate them between two hosts at the push of a button. oVirt also offers a number of ways to migrate VMs automatically, for example, when a node is put into maintenance mode, and you can set up a migration policy for load balancing. Figure 2 shows what a successful migration looks like in the oVirt event log.
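To illustrate what a load-balancing policy does conceptually, the following Python sketch picks a single VM to move from the busiest to the least busy host when their load gap exceeds a threshold. It is a deliberately simplified, hypothetical heuristic, not oVirt's scheduler; propose_migration, the host and VM names, and the abstract load units are all assumptions.

```python
# Sketch only: a hypothetical "even distribution" heuristic, not oVirt's
# scheduling code. Loads are abstract units (e.g., percent of one host's CPU).
from typing import Optional


def propose_migration(hosts: dict[str, dict[str, float]],
                      max_spread: float) -> Optional[tuple[str, str, str]]:
    """Return (vm, source_host, target_host), or None if no move is needed."""
    totals = {host: sum(vms.values()) for host, vms in hosts.items()}
    src = max(totals, key=totals.get)   # busiest host
    dst = min(totals, key=totals.get)   # least busy host
    gap = totals[src] - totals[dst]
    if gap <= max_spread:
        return None  # within tolerance: leave the cluster alone
    best_vm, best_gap = None, gap
    for vm, load in hosts[src].items():
        # Moving load x lowers src by x and raises dst by x: new gap |gap - 2x|.
        new_gap = abs(gap - 2 * load)
        if new_gap < best_gap:
            best_vm, best_gap = vm, new_gap
    return None if best_vm is None else (best_vm, src, dst)
```

Proposing only one move per call is a design choice: it keeps the sketch from triggering a burst of simultaneous live migrations, and a VM too large to improve the balance is simply left where it is.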

Like RHEV-M, oVirt is a comprehensive software tool that takes some time to learn. For smaller setups, there are several more or less sophisticated alternatives, which the KVM project lists on its own page [3]. One highlight here is Proxmox VE; openQRM [4] takes a further step in the direction of cloud management.

One interesting and well-regarded, but not widely known, alternative is Ganeti, a project started at Google. Although it only offers a command-line interface, it can be controlled with just a few commands. The only obstacle is the non-trivial installation, which the documentation describes for Ubuntu and Debian machines. Nevertheless, I managed to get Ganeti up and running on CentOS in the lab without major problems.

The biggest potential hurdle is inconsistent hostnames. Your best bet is a working DNS in which the names of all participating computers are configured. Alternatively, you can enter the names in the /etc/hosts file on each node, but then you need to keep these files consistent. In addition to an IP address and name for each node, you need another IP/name combination for the cluster itself.
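If you go the /etc/hosts route, a small script can compare the files from all nodes. This Python sketch takes the contents of each node's hosts file (collected however you like, e.g., via SSH) and reports required names that are missing or resolve to different addresses. The helper names and the example.com hostnames in the usage example are hypothetical.

```python
# Sketch only: parse_hosts and hosts_problems are hypothetical helpers for
# comparing /etc/hosts contents gathered from several Ganeti nodes.


def parse_hosts(text: str) -> dict[str, str]:
    """Map every hostname and alias in a hosts file to its IP (first entry wins)."""
    mapping: dict[str, str] = {}
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()   # strip comments, split columns
        if len(fields) < 2:
            continue                             # blank or malformed line
        ip, *names = fields
        for name in names:
            mapping.setdefault(name, ip)
    return mapping


def hosts_problems(files: dict[str, str], required: list[str]) -> list[str]:
    """Report required names that are missing or inconsistent across nodes.

    files maps a node name to the text of that node's /etc/hosts.
    """
    parsed = {node: parse_hosts(text) for node, text in files.items()}
    problems = []
    for name in required:
        ips = {node: entries.get(name) for node, entries in parsed.items()}
        missing = sorted(node for node, ip in ips.items() if ip is None)
        if missing:
            problems.append(f"{name}: missing on {', '.join(missing)}")
        distinct = sorted({ip for ip in ips.values() if ip is not None})
        if len(distinct) > 1:
            problems.append(f"{name}: conflicting addresses {distinct}")
    return problems
```

The required list should contain every node name plus the extra cluster name; an empty result means all nodes agree.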

Shared storage for Ganeti is provided by the Distributed Replicated Block Device (DRBD), which you need to configure as described in the Ganeti documentation. Alternatively, Ganeti also supports GlusterFS and Ceph/RADOS, but I did not test these setups. As of version 2.7, Ganeti can also use external shared storage, which allows, for example, the use of NFS.

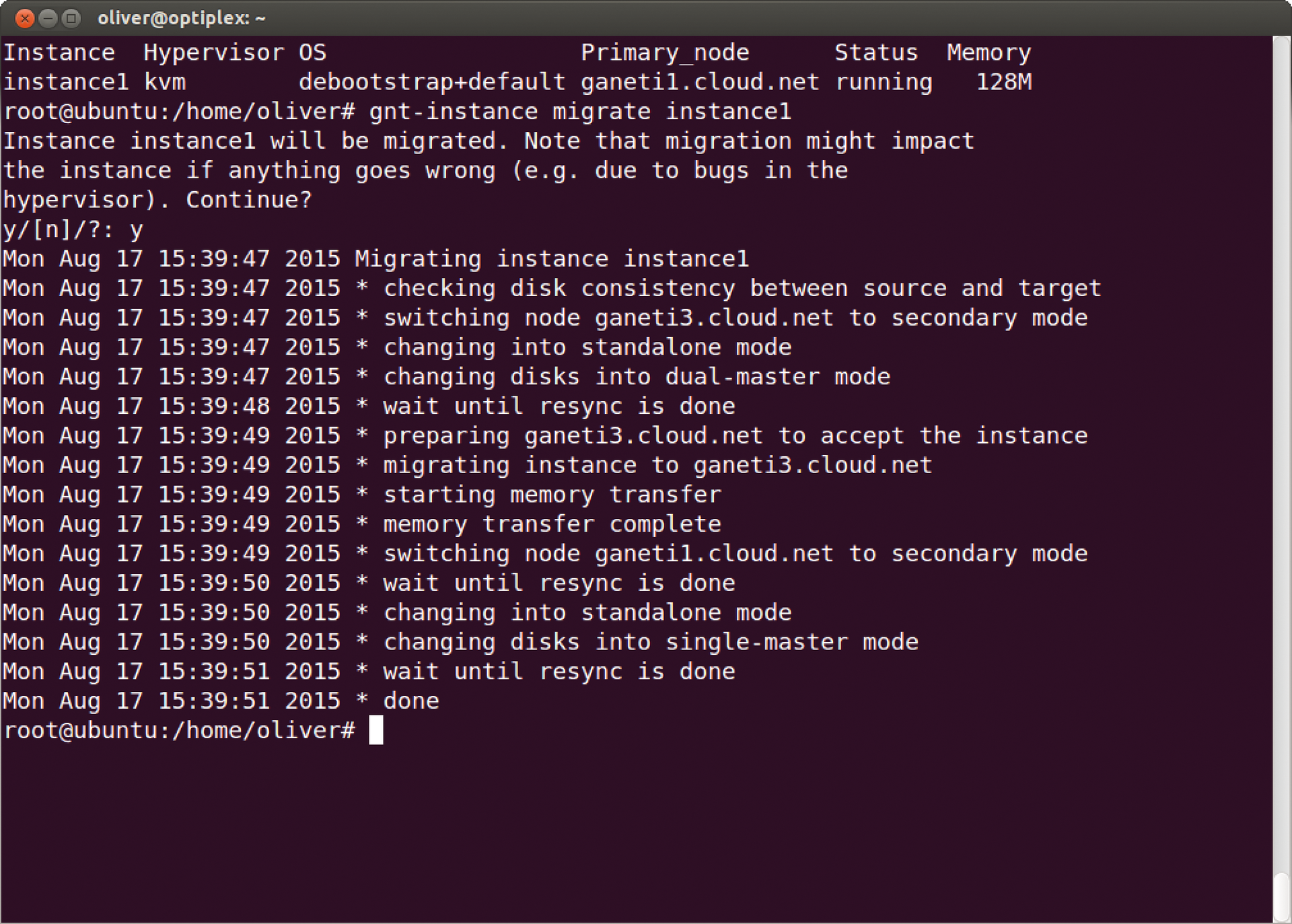

After installing the software, you initialize the cluster with a call to gnt-cluster init and specify that you are using the KVM hypervisor; alternatively, Ganeti also works with Xen. Once the cluster is running as required, the following call starts the migration of a virtual machine (Figure 3):

gnt-instance migrate vm1

If, against all expectations, a migration goes wrong, gnt-instance migrate also offers a cleanup option (--cleanup) to eliminate the remnants of the failed migration. If you would prefer something more convenient than the command line, take a look at Synnefo [5], a graphical front end that was financed by the Greek government and the EU. Ganeti itself offers high availability and failover and is designed for setups with up to 150 physical hosts.

Conclusions

Virtualization only unfolds its full potential with the ability to live-migrate virtual machines, which is the basis for high-availability setups and load distribution. With the KVM hypervisor on Linux, such setups can be implemented today without problems, provided the matching storage and network infrastructure is in place. Management tools such as oVirt or Ganeti simplify administration and let you migrate VMs without manual intervention, for example, in load balancing scenarios.