Opportunities and risks: Containers for DevOps

Logistics

Like the term "cloud," the phrase "DevOps" is used so widely these days that people can no longer agree on a single definition. A common definition states that DevOps is a collaboration between developers and operations professionals that optimizes IT and development processes and thereby automatically leads to better-coordinated delivery of software and the appropriate infrastructure. Most definitions no longer lay down specific requirements regarding the tools or programs used.

Containers have become widely accepted, and the meteoric rise of Docker has fixed the technology in the minds of developers, administrators, and planners alike. On the one hand, containers quickly provide developers with a clean environment in which to experiment freely. On the other hand, containers significantly reduce the overhead required for deployment. You would be forgiven for thinking that a container is the technical implementation of the DevOps principle.

However, anyone dealing with containers could be taking a big risk in everyday operations, because a container that behaves as a black box (Figure 1) is a frightening scenario for administrators.

In this article, I explain the potential problems of containers and present alternatives, because if you use containers correctly, you can profit from their benefits and avoid the pitfalls.

Inventory

A look at the initial state when using Docker will help in understanding the problems associated with containers: Developers work on containers and, in the end, have a finished product in which the desired application runs smoothly. They usually start their work with a ready-made image: Anyone wanting, for example, to develop a web application based on Ubuntu 16.04 will typically start with a container in which Ubuntu and Apache are already installed and then add their own application. Once the application runs after a few adjustments to the container, the developer creates an image from it and hands it over to the administrator.

The administrator is responsible for the IT infrastructure operations: This individual loads the container onto a Docker host and puts it into operation. Immediately afterward, the container service is available on the network; the developer and administrator can give themselves a pat on the back (if they're not the same person) and remove it from their to-do list. It only becomes apparent later that the container could be a problem.

Update Problems

The problem becomes evident when software needs to be brought up to date, usually because of a security update. Plenty of examples from the past, such as the various flaws in the OpenSSL library and the bug in the resolver of the GNU C library (glibc), illustrate this problem.

When push comes to shove, the admin has to update many systems at the same time. The solution is simple for physical machines: As soon as the distribution vendor provides a suitable update, the system's update function or an automated solution installs the appropriate packages.

The process becomes more complicated with Docker-style containers. Admins can hardly say anything about how such containers can be updated, because they are often based on a third-party provider's base image. In the best case, the container has automatic updates enabled and was built in line with common standards, so that updates work. In some cases, an application that ships its own libraries within a container, such as a locally installed C library, can also be updated directly in the container. Alternatively, the developer can rebuild the container so that it contains the necessary security fix from the beginning. To do this, however, the developer must be able to recreate the container, including the application. Depending on the number of rolled-out containers, this would also need to be applied to many different containers at the same time; anyone running hundreds or thousands of containers therefore faces a mammoth project.
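Before tackling such a project, it helps to know how many containers share each image, because all containers built from the same image can be fixed with one rebuild. The following minimal Python sketch assumes the docker CLI is installed and the caller is allowed to talk to the Docker daemon; the function names are hypothetical, and only the `docker ps --format` call with its `{{.Names}}` and `{{.Image}}` placeholders comes from Docker itself:

```python
import subprocess
from collections import defaultdict


def containers_by_image(ps_output: str) -> dict[str, list[str]]:
    """Group container names by image, given `docker ps --format '{{.Names}} {{.Image}}'` output."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in ps_output.splitlines():
        line = line.strip()
        if not line:
            continue
        name, image = line.split(maxsplit=1)
        groups[image].append(name)
    return dict(groups)


def running_containers_by_image() -> dict[str, list[str]]:
    """Ask the local Docker daemon which image each running container uses."""
    out = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}} {{.Image}}"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return containers_by_image(out)
```

On a Docker host, `running_containers_by_image()` returns a dictionary that maps each image to the names of its running containers, which shows at a glance how many rebuilds a security update actually requires.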

Development vs. Operations

Containers like the example cited here illustrate the most important difference between development and operations. Development usually involves the task of improving existing applications, including adding new features or adapting the container to different conditions. Administration, on the other hand, concerns itself with stability, security, and maintainability.

For example, if an existing component of a container application provides new features, the developer will want to use them in the application. However, it isn't the developer's job to worry about how the application operates. Thus, it is usually enough that a new feature performs within the development environment as desired.

On the other hand, admins have completely different interests with regard to the operation of a platform. New features might be nice, but the new version of the software must operate within the framework of the existing container. Admittedly, it is often rather cumbersome to roll out an application so that it fits into the existing infrastructure. Although deploying the original development environment as a container is tempting and seems feasible at first, for the reasons discussed here, admins should resist this temptation.

Companies don't need to forgo containers completely, though, because they certainly provide benefits. The dilemma isn't caused by the use of containers but by the problematic way in which they are used, and administrators have ways to employ containers without the concomitant frustration.

1. OverlayFS

One of the great disadvantages of classic container deployment is that a complete Linux system is attached to each container. Although a container might not be a standalone system per se, with a complete Linux distribution in the background, it becomes another element in need of maintenance. Disk space overhead can be ignored, because storage is one of the cheapest items; however, the maintenance overhead hurts when you consider that the system that houses (usually) multiple containers is itself a full Linux installation.

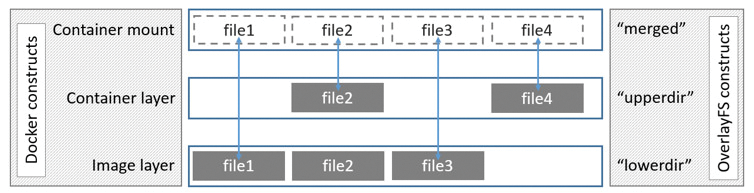

The problem can be solved elegantly by using OverlayFS (a competitor of AuFS, which you might recognize from its use in Knoppix). Programs access the original host system files through OverlayFS. If the admin creates new files or modifies existing ones on an OverlayFS mount, the changes aren't written to the host filesystem. Instead, OverlayFS stores the differences in a separate directory, the upper layer, which sits on top of the host's files, the lower layer. The result is a union mount: The administrator sees both the host content and the changes applied to the OverlayFS mount (Figure 2).
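On the command line, such a union mount consists of the host directory as the read-only lower layer, an upper directory that receives the changes, and a work directory that OverlayFS needs internally. The following Python sketch only assembles the standard `mount -t overlay` call; the function names and any paths you pass in are hypothetical, and the work directory must be an empty directory on the same filesystem as the upper directory:

```python
import subprocess


def overlay_mount_argv(lower: str, upper: str, work: str, merged: str) -> list[str]:
    """Build a mount(8) command line for an OverlayFS union mount.

    lower  -- read-only lower layer (here: files provided by the host)
    upper  -- writable layer that receives all new and modified files
    work   -- empty scratch directory on the same filesystem as `upper`
    merged -- mount point where the union of both layers appears
    """
    options = f"lowerdir={lower},upperdir={upper},workdir={work}"
    return ["mount", "-t", "overlay", "overlay", "-o", options, merged]


def mount_overlay(lower: str, upper: str, work: str, merged: str) -> None:
    """Perform the mount; needs root privileges and a kernel with OverlayFS."""
    subprocess.run(overlay_mount_argv(lower, upper, work, merged), check=True)
```

For example, `overlay_mount_argv("/srv/base", "/srv/c1/upper", "/srv/c1/work", "/srv/c1/rootfs")` yields the argument list for mounting a container root at `/srv/c1/rootfs`; keeping the upper and work directories outside the lower layer avoids overlapping paths.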

Anyone who opts for using containers on OverlayFS therefore needs to invest quite a bit of work in the operating system installed on the host. The first step is to create a solid basis: In addition to the essential elements, the host system for OverlayFS must include the components the containers will need later. If, for example, the containers will mainly run PHP applications, the required PHP modules should be available on the host. Also note that the more components the host system provides, the smaller the respective containers need to be.
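Whether the host really provides what the containers will need can be checked before the first container starts. For the PHP example, this minimal Python sketch compares the output of `php -m` against a list of required modules; it assumes the PHP command-line interpreter is installed on the host, and both the function names and the module list are hypothetical:

```python
import subprocess


def missing_php_modules(php_m_output: str, required: set[str]) -> set[str]:
    """Return the required modules that do not appear in `php -m` output.

    `php -m` prints section headers such as "[PHP Modules]" followed by one
    module name per line; headers and blank lines are skipped here.
    """
    present = {
        line.strip().lower()
        for line in php_m_output.splitlines()
        if line.strip() and not line.startswith("[")
    }
    return {module for module in required if module.lower() not in present}


def check_host_php(required: set[str]) -> set[str]:
    """Run the host's PHP CLI and report which required modules are missing."""
    out = subprocess.run(["php", "-m"], check=True, capture_output=True, text=True).stdout
    return missing_php_modules(out, required)
```

`check_host_php({"gd", "mysqli", "intl"})` returns the modules still missing on the host; an empty set means the host is ready for the PHP containers.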

The maintenance overhead is considerably reduced because every file a container has not modified itself is read from the host: If the administrator installs a new SSL or C library on the host, thanks to the union mount principle, all OverlayFS instances see the new version. Files that a container has modified, on the other hand, live in its upper layer and hide the host's version. Because the kernel documentation considers changes to the lower layer of a mounted overlay to be undefined behavior, the respective containers should be restarted after the update so that the changes take effect reliably.
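The update behavior, including its one important exception, can be illustrated with a toy model. The hypothetical Python class below is not how the kernel implements OverlayFS; it only mimics the lookup rules: Reads fall through to the shared host layer unless the container has its own copy, writes always land in the container's private upper layer, and deletions are recorded as whiteouts:

```python
from typing import Optional


class UnionView:
    """Toy model of a union mount: one shared lower layer plus a private upper layer.

    Only the lookup rules are modeled; directories, permissions, and the
    kernel's real data structures are ignored.
    """

    def __init__(self, lower: dict[str, str]):
        self.lower = lower                    # shared host files: path -> content
        self.upper: dict[str, str] = {}       # files this instance created or modified
        self.whiteouts: set[str] = set()      # host files this instance deleted

    def read(self, path: str) -> Optional[str]:
        if path in self.whiteouts:
            return None                       # deleted in this instance only
        if path in self.upper:
            return self.upper[path]           # own copy hides the host version
        return self.lower.get(path)           # otherwise fall through to the host

    def write(self, path: str, content: str) -> None:
        self.whiteouts.discard(path)
        self.upper[path] = content            # the lower layer is never modified

    def delete(self, path: str) -> None:
        self.upper.pop(path, None)
        if path in self.lower:
            self.whiteouts.add(path)
```

If two instances share the same host dictionary and one of them has written its own copy of `/usr/lib/libssl.so`, replacing the host's entry changes what the other instance reads, but not what the first one reads. That first instance is exactly the kind of container a host update cannot fix.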

Use of a current kernel is recommended for OverlayFS. Anyone using a long-term support (LTS) version of Ubuntu has a good start, because Canonical also provides backports from later versions of the Ubuntu kernel to the current LTS version. For example, new kernel versions were available starting with Ubuntu 14.04.2 [1], and newer kernels continued to ship by default through kernel v4.4 to LTS 14.04.5; then, the newest 16.04 LTS was released with the same kernel version. When Ubuntu reaches 16.04.2, it too will receive kernel updates by default through its lifetime. Note that other distributions with long-term support implement different strategies in terms of kernel updates.
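OverlayFS in its mainline form has been part of the Linux kernel since version 3.18, so a quick look at the running kernel tells the admin whether a host qualifies. This Python sketch uses that version as its threshold and additionally looks for `overlay` in `/proc/filesystems`; the function names are hypothetical, and a host whose `overlay` module has not been loaded yet reports `False` until `modprobe overlay` has run:

```python
import platform
import re
from pathlib import Path

OVERLAYFS_MIN_KERNEL = (3, 18)  # OverlayFS was merged into the mainline kernel in 3.18


def parse_kernel_version(release: str) -> tuple[int, int]:
    """Extract (major, minor) from a release string such as '4.4.0-59-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def overlay_registered(proc_filesystems: str) -> bool:
    """True if 'overlay' appears as a filesystem name in /proc/filesystems content."""
    return any(
        line.split()[-1] == "overlay"
        for line in proc_filesystems.splitlines()
        if line.strip()
    )


def host_supports_overlayfs() -> bool:
    """Check the running kernel version and the currently registered filesystems."""
    if parse_kernel_version(platform.release()) < OVERLAYFS_MIN_KERNEL:
        return False
    return overlay_registered(Path("/proc/filesystems").read_text())
```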

OverlayFS on the host side is only half the battle when it comes to managing containers efficiently: The container solution needs to take care of the other half. Docker has been able to work with OverlayFS for several versions, but there is a catch: Anyone who becomes entangled in the morass of Docker functionality won't be able to reap the benefits that OverlayFS offers. Therefore, a look at alternatives couldn't hurt.
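For admins who stay with Docker, the OverlayFS-based storage driver is selected in the daemon configuration file, usually `/etc/docker/daemon.json`. This minimal Python sketch sets the documented `storage-driver` key to `overlay2` while keeping any other settings; the function name is hypothetical:

```python
import json
from pathlib import Path


def set_storage_driver(config_path: Path, driver: str = "overlay2") -> dict:
    """Set "storage-driver" in Docker's daemon.json, preserving all other keys."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    config["storage-driver"] = driver
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

The Docker daemon must be restarted afterward. Images and containers created under the previous storage driver are no longer accessible after the switch, so this step belongs at the start of a host's life rather than in the middle of production.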

OverlayFS and LXC

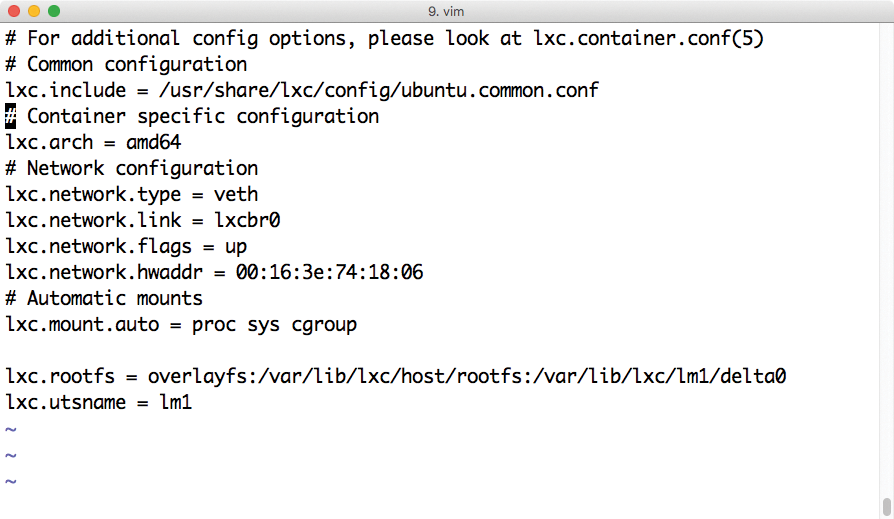

Linux Containers (LXC) also has an OverlayFS driver (Figure 3) and is hardly less powerful than Docker in terms of functionality. Docker and LXC use the same functions in the Linux kernel (e.g., cgroups and namespaces). LXC is the older of the two solutions and served as a model for Docker in many respects. Anyone who doesn't want to follow the Docker path can turn to LXC instead.

Admins aren't spared at least one task with LXC: They need to prepare the required containers so that developers can use them. In Docker, this function is part of the Docker tools. Anyone who uses LXC can either create the required containers manually or automate their provisioning. Larger-scale container setups need to do this anyway, because it must always be possible to expand the setup with more hypervisor hosts for new containers if the existing setup runs out of resources.
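Automated provisioning can start small: Clone a prepared base container as an OverlayFS snapshot and start the clone. The Python sketch below merely assembles and runs the LXC command-line calls. The `-s` (snapshot) and `-B` (backing store) options of `lxc-copy` are assumed to follow the LXC 2.x manual page; older releases may name the backing store `overlayfs` instead of `overlay`, so check `man lxc-copy` before use. The container and function names are hypothetical:

```python
import subprocess


def clone_commands(base: str, names: list[str]) -> list[list[str]]:
    """Build lxc-copy/lxc-start calls that clone `base` as overlay snapshots and start them."""
    commands = []
    for name in names:
        # -s: snapshot clone; -B overlay: OverlayFS backing store (assumed LXC 2.x naming)
        commands.append(["lxc-copy", "-n", base, "-N", name, "-s", "-B", "overlay"])
        # -d: run the new container in the background
        commands.append(["lxc-start", "-n", name, "-d"])
    return commands


def provision(base: str, names: list[str], dry_run: bool = True) -> None:
    """Print the commands (default) or execute them one after another."""
    for argv in clone_commands(base, names):
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)
```

`provision("base-xenial", ["web01", "web02"])` only prints the four commands; passing `dry_run=False` executes them, which requires the LXC tools and the appropriate privileges on the host.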

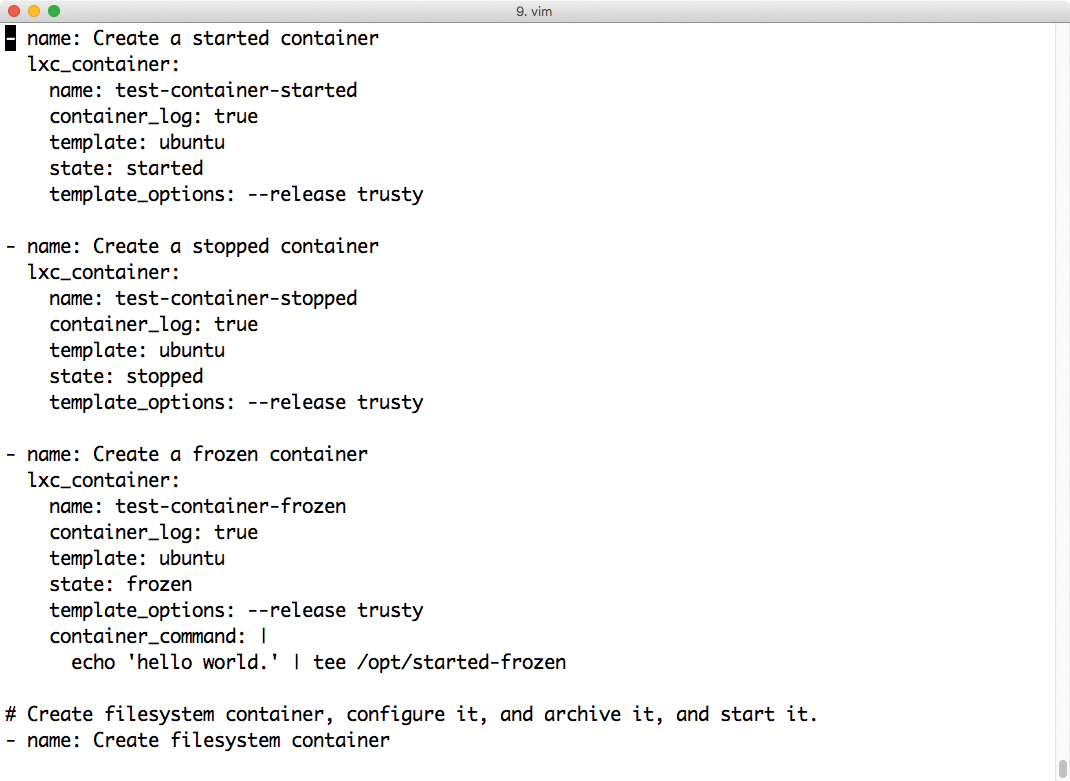

Ansible is the automation solution of the moment and has very well-developed LXC support. Complete provisioning of LXC containers can be accomplished quickly with Ansible (Figure 4). On request, dozens of containers can be started at the same time, even from a single Ansible playbook.

Transparent

A container setup based on LXC meets the needs of both administrators and developers. As with a typical deployment approach, developers remain free to make changes inside the container at their own discretion. Because each container is strictly separated from the main system, changes made inside a container are not reflected on the host. Unlike the typical scenario, however, administrators know what the container is based on and can even push changes into its filesystem by way of OverlayFS. The container is thus no longer a black box; instead, it is based on defined standards.

Admittedly, this scenario doesn't prevent developers from opening a security hole in a container that a general update of the host system can't close, for example, by installing their own version of a library. However, the vast majority of developers will make do with what OverlayFS provides in their container, so if manual updates are still required, the number of affected installations will be small.
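Those few installations can be found systematically: Any regular file in a container's upper layer that also exists in the lower layer hides the host's version and will not be refreshed by a host update. This minimal Python sketch assumes the admin knows the upper and lower directories of each container (their location depends on the LXC version and configuration); it skips the character-device whiteouts OverlayFS uses to mark deleted files, and the function name is hypothetical:

```python
import os
from pathlib import Path


def shadowed_host_files(upper: Path, lower: Path) -> list[str]:
    """Return files in a container's upper layer that hide a host file with the same path.

    Such copied-up files are not refreshed when the admin updates the host.
    """
    shadowed = []
    for dirpath, _dirnames, filenames in os.walk(upper):
        for filename in filenames:
            path = Path(dirpath) / filename
            if path.is_char_device():
                continue  # OverlayFS whiteout: marks a deleted file, nothing to update
            rel = path.relative_to(upper)
            if (lower / rel).is_file():
                shadowed.append(rel.as_posix())
    return sorted(shadowed)
```

Run against every container's upper directory after a security update, the function yields a short list of candidates for manual attention, such as a privately installed copy of a library.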

2. Reproducible Containers

As elegant as the OverlayFS solution may be, it is a poor fit for situations that call for more than a single standard setup. Anyone who has to support different versions of Ubuntu or add CentOS, for example, has two options: procure enough hardware to provide separate OverlayFS hosts for the different distributions, or deploy fully fledged containers.

The good news is: Docker has various strengths here, and anyone who uses Docker sensibly can get around the black box problem. The magic word is reproducibility: Containers that can be recovered easily at any time cause few problems in everyday admin life.

The discussion is reminiscent of the comparison used extensively in the cloud context between kittens (often called pets) and herd animals (cattle): Kittens are classic old-school systems that are barely automated, if at all, and require a lot of manual work. The herd animal symbolizes virtual machines that are rolled out automatically in the same way and can be replaced at any time by a modified version.

The reproducibility factor solves the problem of the sheer number of containers administrators have to deal with when problems arise: If many containers can be recreated automatically at the push of a button, time-consuming repairs of individual instances are no longer needed.

Docker Tools

Docker cannot be faulted for the huge numbers of kittens built by admins. In fact, it is possible to create clean containers with Docker. A simple example shows this:

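```dockerfile
# Pin the release so that every build starts from the same base image
FROM ubuntu:16.04

RUN apt-get update && apt-get install -y x11vnc xvfb firefox

# Store the VNC password ("1234") in root's home directory, where x11vnc -usepw looks for it
RUN mkdir ~/.vnc
RUN x11vnc -storepasswd 1234 ~/.vnc/passwd

# Launch Firefox whenever a bash shell starts for root
RUN bash -c 'echo "firefox" >> ~/.bashrc'

EXPOSE 5900
CMD ["x11vnc", "-forever", "-usepw", "-create"]
```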

This short Dockerfile defines an image whose containers run both X11 and Firefox and allow external access via VNC. On the basis of this Dockerfile, identical containers can be created reproducibly on any host. The basis of the process is the Ubuntu image from Docker Hub, which is one of the official images and is maintained by the provider.

Admittedly, complex web applications cannot be set up with so few commands, and Dockerfiles that stretch over several screens quickly become unreadable, so the second basic component of reproducible containers is the use of an automation solution.

Puppet, Chef, Ansible, …

Whether Puppet, Chef, Ansible, or another solution is used for automation is of secondary importance from an administrator's perspective. The only important point is that a fresh container based on an official image supplies the desired function after the tool is called.

For a change, the developer, not the admin, is responsible for the bulk of the work. In many cases, admins aren't in a position to deploy the container, because they simply aren't familiar enough with all the potential applications to be rolled out.

The developer's mantra, "works on my machine in the container," isn't enough here, because the setup must be reproducible. Of course, nothing stops admins from helping developers implement the automation, and cooperation would save both sides a lot of work that might otherwise be required later. The admin's task is to ensure compliance: If a developer asks for a live container deployment, there's no harm in taking a quick look first. Using a modified image of the chosen distribution instead of the bare official image is a no-go.
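Part of that quick look can be automated. The Python sketch below reads a Dockerfile and reports every FROM line whose image is neither on an allowlist of official base images nor an earlier build stage of the same file; the allowlist and the function names are hypothetical examples of a local policy, not a Docker feature:

```python
# Hypothetical local policy: only unmodified official distribution images are allowed.
APPROVED_BASES = {"ubuntu", "debian", "centos"}


def image_name(ref: str) -> str:
    """Strip tag and digest: 'ubuntu:16.04' -> 'ubuntu', 'me/app@sha256:...' -> 'me/app'."""
    repo, _, last = ref.rpartition("/")
    last = last.split("@")[0].split(":")[0]
    return f"{repo}/{last}" if repo else last


def unapproved_bases(dockerfile: str, approved: set[str] = APPROVED_BASES) -> list[str]:
    """Return base images in FROM lines that are neither approved nor earlier build stages."""
    stages: set[str] = set()
    bad: list[str] = []
    for line in dockerfile.splitlines():
        tokens = line.split()
        if not tokens or tokens[0].upper() != "FROM":
            continue
        args = [t for t in tokens[1:] if not t.startswith("--")]  # drop --platform=...
        if not args:
            continue
        ref = args[0]
        if ref.lower() not in stages and image_name(ref) not in approved:
            bad.append(ref)
        if len(args) >= 3 and args[1].upper() == "AS":
            stages.add(args[2].lower())  # later FROM lines may refer to this stage
    return bad
```

A Dockerfile that starts with `FROM someuser/ubuntu-php:latest` would be flagged, whereas one that starts with `FROM ubuntu:16.04` passes.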

This mode of operation might require patience and the will to cooperate on all sides, but ultimately, it establishes ground rules from which administrators and developers can benefit: Admins get maintainable containers, and developers get development environments off the ground much more quickly than they could starting from scratch.

3. Build Base Images

The third mode of operation for handling containers is not a separate concept, but rather a more radical variation on alternative two. The third alternative assumes that base images for containers do not come from the Internet; instead, creating them is the first step of the development process. When developers and administrators build containers together for production use, they practically start from scratch and use a toolchain to produce a container for a specific purpose.

The concept seems unnecessarily complex at first. After all, it would be much more convenient to use an Ubuntu image from Docker Hub as a basis. Indeed, preparing the environment in which the containers are built takes some time, and creating containers this way also usually takes longer than just downloading a base image. This approach is therefore less suitable for setups with just a few containers.

The advantages of this approach come to fruition in large environments with lots of containers. Because the developers and the operations team have complete control over the content of the containers, they can build them from the outset to include all the required components. If, for example, specific packages are required to operate an application, they are already installed in the container from the start. An automated solution that installs packages after the container launches thus becomes unnecessary.

Anyone who controls the toolchain that builds the containers can also sidestep the update problem elegantly. Instead of installing updates in running containers, the development or operations team can simply generate new containers that already contain all the required updates. In the best case, all this requires is the push of a button: Administrators trigger a rebuild of all containers and then replace the running containers with the new versions. In this scenario, administrators can also be certain that their container images won't contain any undesirable components.
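The push of a button can be a short script. The following Python sketch first rebuilds every image and only then replaces the containers, which keeps the period without a running service short. The `Service` record, the image names, and the port mappings are hypothetical, while `docker build --pull --no-cache`, `docker rm -f`, and `docker run` with `-d`, `--name`, and `-p` are standard Docker CLI calls:

```python
import subprocess
from dataclasses import dataclass


@dataclass
class Service:
    """Hypothetical description of one container and where its build context lives."""
    name: str                      # container name
    image: str                     # image tag, e.g. "intranet/web:latest"
    context: str                   # directory containing the Dockerfile
    ports: tuple[str, ...] = ()    # port mappings, e.g. ("8080:80",)


def rebuild_plan(services: list[Service]) -> list[list[str]]:
    """Commands that rebuild every image from scratch, then replace each container."""
    plan = []
    for svc in services:
        # --pull fetches the newest base image; --no-cache re-runs every build step,
        # so package installations pick up the current package versions
        plan.append(["docker", "build", "--pull", "--no-cache", "-t", svc.image, svc.context])
    for svc in services:
        plan.append(["docker", "rm", "-f", svc.name])  # stop and remove the old container
        run = ["docker", "run", "-d", "--name", svc.name]
        for mapping in svc.ports:
            run += ["-p", mapping]
        plan.append(run + [svc.image])
    return plan


def execute(plan: list[list[str]]) -> None:
    """Run the plan; stops at the first failing command."""
    for argv in plan:
        subprocess.run(argv, check=True)
```

Because the old containers are discarded, this only works if application data lives outside the containers, for example, in volumes.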

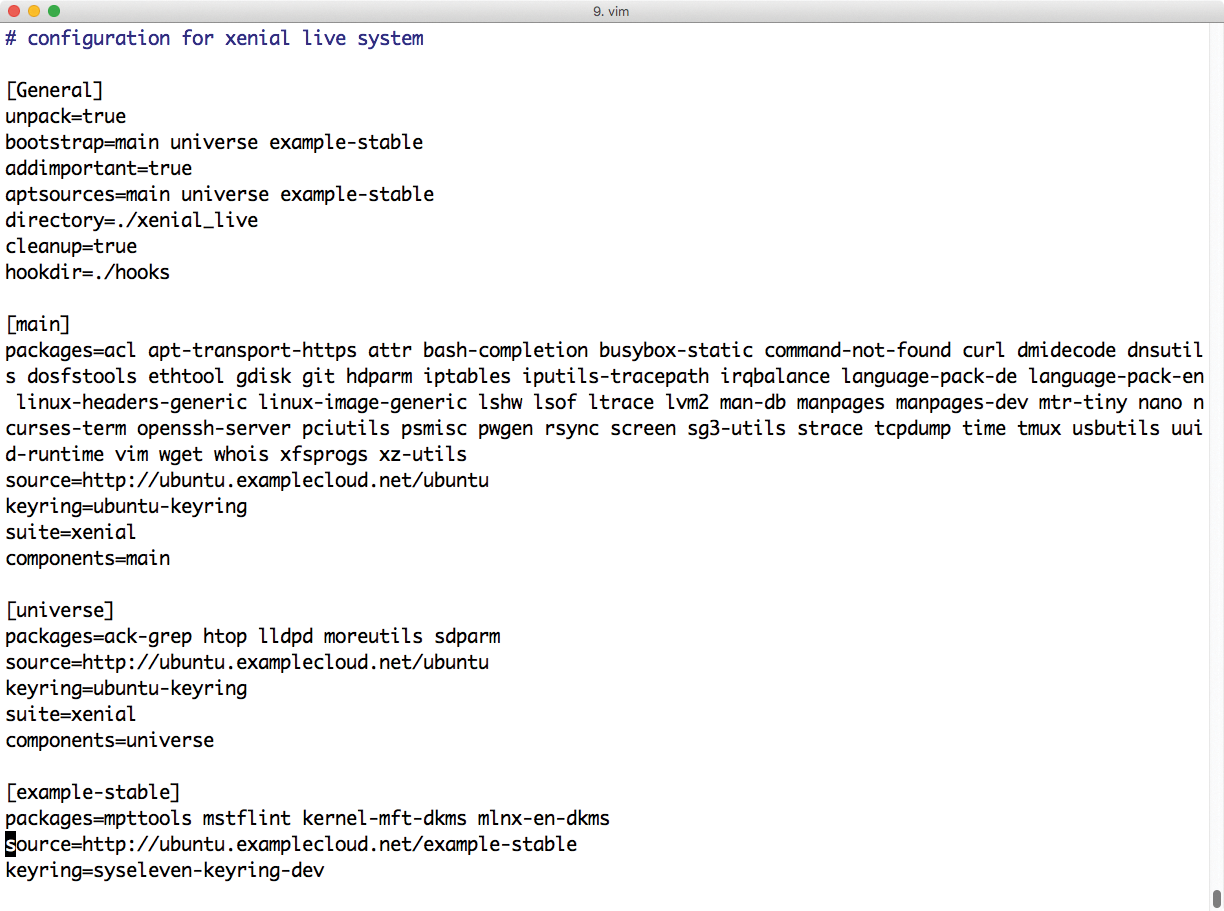

Multistrap Aid

Those who opt for the third approach will find many tools on the web that help create Docker containers. The multistrap tool, which is aimed at fans of Debian or Debian-based distributions, is a good example (Figure 5). A minimal Ubuntu image and configuration files for Docker containers are available online [2]. To create a Docker image for the current LTS release of Ubuntu, just call multistrap with a configuration file.
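Such a configuration file can also be generated. The Python sketch below renders a minimal multistrap configuration for an Ubuntu root filesystem and then packs the result into a Docker image with `docker import`. The key names are assumed to match the multistrap manual page, and the package list, mirror, keyring, and suite are assumptions as well; compare them with the configuration files from [2] before use. The function names are hypothetical:

```python
import configparser
import io
import subprocess


def multistrap_config(target: str, packages: list[str], suite: str = "xenial") -> str:
    """Render a minimal multistrap configuration (key=value INI style)."""
    cfg = configparser.ConfigParser()
    cfg["General"] = {
        "arch": "amd64",
        "directory": target,          # where the root filesystem is unpacked
        "cleanup": "true",
        "unpack": "true",
        "bootstrap": "Ubuntu",        # sections used to build the filesystem
        "aptsources": "Ubuntu",       # sections written to sources.list
    }
    cfg["Ubuntu"] = {
        "packages": " ".join(packages),
        "source": "http://archive.ubuntu.com/ubuntu",
        "keyring": "ubuntu-keyring",
        "suite": suite,
        "components": "main universe",
    }
    buf = io.StringIO()
    cfg.write(buf, space_around_delimiters=False)
    return buf.getvalue()


def build_image(config_file: str, rootfs: str, tag: str) -> None:
    """Run multistrap (needs root), then stream the root filesystem into `docker import`."""
    subprocess.run(["multistrap", "-f", config_file], check=True)
    tar = subprocess.Popen(["tar", "-C", rootfs, "-c", "."], stdout=subprocess.PIPE)
    subprocess.run(["docker", "import", "-", tag], stdin=tar.stdout, check=True)
    tar.stdout.close()
    if tar.wait() != 0:
        raise RuntimeError("tar failed while packing the root filesystem")
```

The `directory` value in the generated configuration and the `rootfs` argument of `build_image` must point to the same place.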

Multistrap starts customize.sh at the end of the process, which allows any number of commands to be run in the filesystem of the future container before the image for the container is built. Anyone who doesn't want to delve too much into Bash magic can use one of the automation tools.
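The hook does not have to be written in Bash. As an illustration, the hypothetical Python function below performs two typical clean-up steps on the unpacked filesystem: It installs a `policy-rc.d` script that exits with status 101, which Debian's `invoke-rc.d` treats as "action forbidden," so that package installation doesn't try to start daemons, and it empties the downloaded package lists. These tweaks are illustrative and not taken from the scripts in [2]:

```python
import shutil
import stat
from pathlib import Path


def customize_rootfs(rootfs: Path) -> None:
    """Example tweaks applied to the unpacked filesystem before it becomes an image."""
    # Forbid service starts during package installation inside the image build.
    policy = rootfs / "usr/sbin/policy-rc.d"
    policy.parent.mkdir(parents=True, exist_ok=True)
    policy.write_text("#!/bin/sh\nexit 101\n")
    policy.chmod(policy.stat().st_mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)

    # Remove downloaded package indexes; they only enlarge the image.
    lists = rootfs / "var/lib/apt/lists"
    if lists.is_dir():
        for entry in lists.iterdir():
            if entry.is_dir() and not entry.is_symlink():
                shutil.rmtree(entry)
            else:
                entry.unlink()
```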

Conclusions

Containers are useful and a real asset in DevOps, as long as you observe a few rules. If containers are reproducible, they also can be managed efficiently. However, black boxes cause problems. Anyone using containers should keep this fact in mind.