SDN and the future of networking

New Networks

Generally speaking, IT infrastructure is responsible for three tasks: processing, storing, and transporting data. Before the virtualization of x86 computers, the IT landscape was fairly static. Planning and implementing changes or upgrades took months or even years, from ordering and delivery to the physical installation of new hardware. This arduous process changed radically with the arrival of VMware [1], KVM [2], and Hyper-V [3], because virtualization makes it easy to spin up new computers.

New computers nearly always require an IP address and network integration. In the past, configuration, along with physically removing, installing, and wiring hardware, took hours at best and more often days. Equivalent tasks take a matter of minutes or even seconds in the virtual world.

The network infrastructure has to keep pace with this dynamic, but traditional approaches and concepts are much too slow. In addition, other developments, such as the emergence of smartphones and Bring Your Own Device (BYOD) [4] policies, have introduced further complications, requiring the integration and management of devices that appear as if out of nowhere and then disappear again.

At the same time, certain connections need to work immediately, securely, and reliably (see the "But of course!" box). The list of examples that call for new methods of managing networks could go on and on. A key factor in all solutions is the abstraction of the network hardware, which has led to the development of software-defined networking (SDN) [5].

The Story So Far

The focus of this article is on IP-based networks containing a number of autonomous systems, typically switches, routers, and firewalls. These devices receive, process, and distribute data packets; they analyze the origin and destination and consult tables and rules before forwarding.

For this arrangement to work, these systems must be familiar with the network topology, at least to a certain extent. The role of a device and its position on the network are directly related: a change in one has a direct impact on the other. Computer virtualization means you can reposition a device on the network in next to no time, and you can modify both the position and the role of the device.

But that's not all. The network has evolved over time. The Internet Engineering Task Force (IETF) [6] makes sure this evolution happens in a controlled manner, developing numerous protocols that extend the originally simple control logic. These extensions often address only one specific task and operate in isolation.

The growing number of these standards, coupled with vendor-specific optimizations and software dependencies, has significantly increased the complexity of today's networks. Networks have also lost almost all flexibility: structural changes are risky and amount to a medium-sized project.

March Separately, Network Together

The basic idea of SDN is nearly ten years old and hails from Stanford University. At the time, Martin Casado described the idea of separating data flow and the associated control logic in his doctoral thesis [7]. He and his supervisors, Nick McKeown and Scott Shenker, are seen as the fathers of SDN. Casado, McKeown, and Shenker founded a company called Nicira Networks, which now belongs to VMware and is the basis of NSX [8].

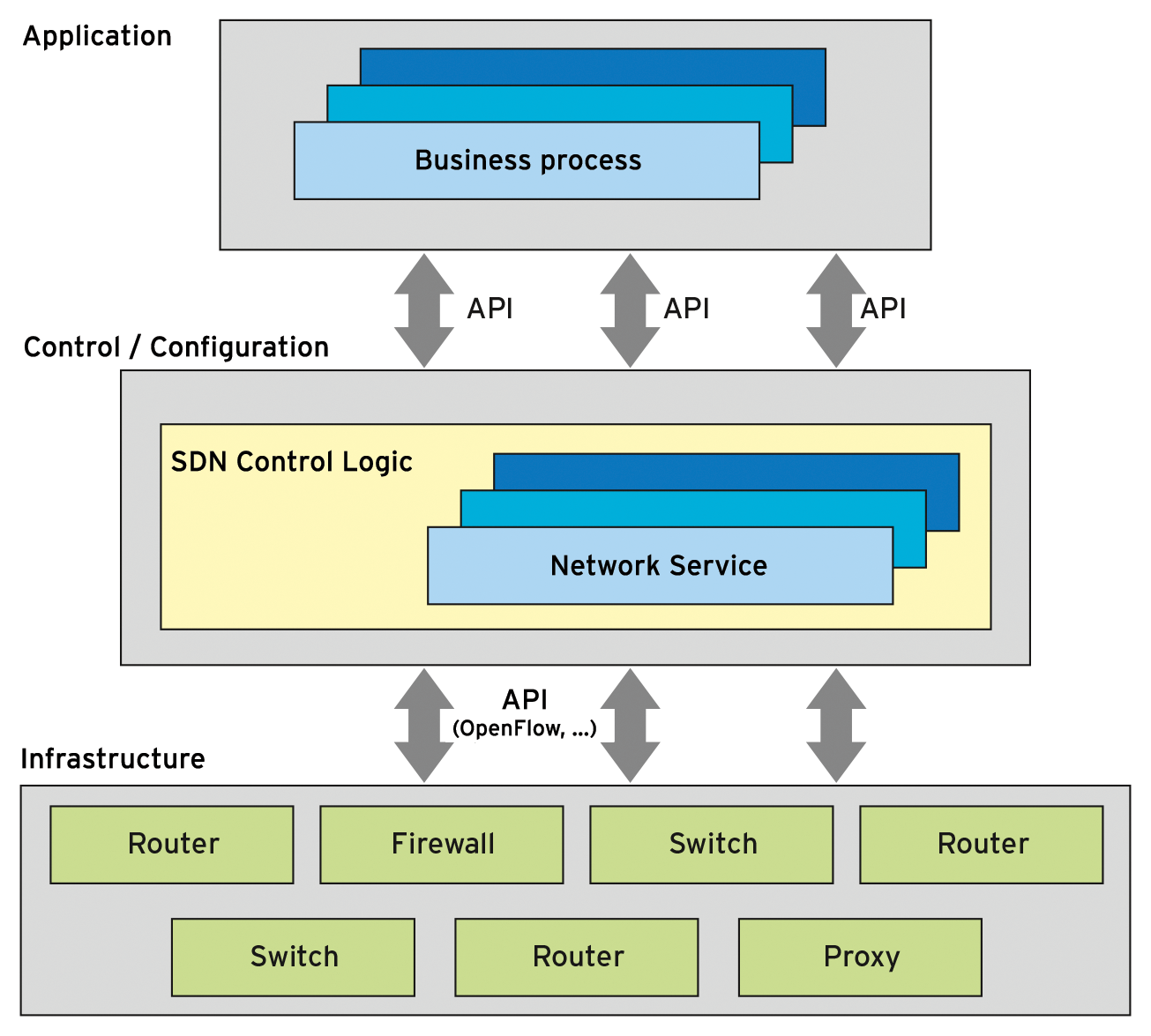

Put more generally, the idea of SDN is to separate configuration and infrastructure (Figure 1). The literature uses the terms control plane and data plane for these layers. The data plane, also called the infrastructure plane, includes devices such as firewalls, switches, and routers. In an SDN, however, the control logic in these devices is significantly reduced or, in extreme cases, non-existent. This logic is instead the responsibility of the control plane. The "dumb" network devices make do with much simpler firmware. Freed from thinking, they focus on forwarding data packets.

The intelligence is in the control plane, which you can imagine as a suitably powerful computer running control software. This is where you specify the guidelines for receiving, processing, and forwarding data packets. For this system to work, the control logic and the network devices need to communicate with each other. The interfaces should be well known and standardized so that any manufacturer can easily implement them. OpenFlow [9] plays a pioneering role in standardizing this interface.
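The split between the two planes can be illustrated with a minimal Python sketch. It is not OpenFlow and does not model any real controller; the class names `FlowRule`, `Switch`, and `Controller`, as well as the addresses and port numbers, are assumptions made purely for illustration. The switch only matches packets against a table, while the controller decides what goes into that table.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class FlowRule:
    """Hypothetical match/action pair: traffic for dst_network leaves via out_port."""
    dst_network: str
    out_port: int


@dataclass
class Switch:
    """Data plane: stores rules and forwards, but never decides anything itself."""
    name: str
    flow_table: list = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        self.flow_table.append(rule)

    def forward(self, dst_ip: str) -> Optional[int]:
        addr = ipaddress.ip_address(dst_ip)
        # First matching rule wins; None means there is no rule for this packet.
        for rule in self.flow_table:
            if addr in ipaddress.ip_network(rule.dst_network):
                return rule.out_port
        return None


class Controller:
    """Control plane: holds the logic and pushes rules down to the switches."""

    def __init__(self) -> None:
        self.switches = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def push_rule(self, switch_name: str, rule: FlowRule) -> None:
        self.switches[switch_name].install(rule)


controller = Controller()
s1 = Switch("s1")
controller.register(s1)
controller.push_rule("s1", FlowRule(dst_network="10.0.1.0/24", out_port=2))

print(s1.forward("10.0.1.7"))     # 2
print(s1.forward("192.168.0.1"))  # None
```

In a real deployment, the `push_rule` call would be a message on a standardized interface rather than a direct method call; the sketch only shows which side owns the decision.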

Experience has shown that a kind of supervisor is also needed, one that supports the development of interfaces but also intervenes if things are going in the wrong direction. The Open Networking Foundation (ONF) [10] has performed this task for SDN for over five years. Its founding members include real heavyweights: Google, Facebook, Microsoft, Deutsche Telekom, Verizon, and Yahoo.

On the Plus Side

In addition to the previously mentioned benefits, SDN significantly reduces dependence on hardware manufacturers; after all, the brain is in the control plane. Decoupling the life cycle of the network hardware from the logical topology is also advantageous. Standardized interfaces open up new hardware-agnostic automation possibilities.

You simply need to define a new policy in the control plane, which then transmits the necessary information to the end devices. Separating the control logic also allows it to be centralized, so you can easily get an overall picture of your network. In the best case, the information that was distributed over perhaps hundreds of devices in the pre-SDN era is now available at a single point.
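As a rough illustration of defining a policy once and letting the control plane work out the per-device details, consider the following Python sketch. Everything in it is hypothetical: the `Policy` fields, the group names, the host addresses, the device names `fw-a` and `fw-b`, and the choice to enforce each rule on the device in front of the destination are assumptions for the example, not part of any SDN product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical high-level policy: who may talk to whom on which port."""
    src_group: str
    dst_group: str
    port: int
    action: str  # "allow" or "deny"


# Assumed central inventory: group membership and the device in front of each host.
GROUPS = {"web": ["10.0.1.10", "10.0.1.11"], "db": ["10.0.2.20"]}
ATTACHED_TO = {"10.0.1.10": "fw-a", "10.0.1.11": "fw-a", "10.0.2.20": "fw-b"}


def compile_policy(policy: Policy) -> dict:
    """Expand one policy into concrete rules, keyed by the enforcing device."""
    rules = {}
    for src in GROUPS[policy.src_group]:
        for dst in GROUPS[policy.dst_group]:
            # Assumption: the device in front of the destination enforces the rule.
            device = ATTACHED_TO[dst]
            rules.setdefault(device, []).append(
                (src, dst, policy.port, policy.action)
            )
    return rules


rules = compile_policy(Policy("web", "db", 5432, "allow"))
for device, device_rules in rules.items():
    for rule in device_rules:
        print(device, rule)
```

Because the inventory and the compiled rules live in one place, the same data structure that drives the devices also serves as the overall picture of the network.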

The actual highlight is yet to come: SDN introduces an abstraction that makes the network easier to understand. In the past, IT departments had to break down a business process into routing tables and firewall rules. Network information was distributed across all sorts of devices. Consumers (business processes) and service providers (network administrators) spoke completely different languages.

However, with SDN, the application understands the topology (Figure 2) and can react to changes or even initiate them. Recent descriptions of SDN introduce the term application plane for this feature.

Operating Models

Are there any downsides? Of course! A well-known challenge with centralization is protecting it against failure. What happens if the control plane fails? Which high-availability concepts are necessary? If multiple control instances are involved, this immediately raises the question of how many are needed. What about scalability? Very critical voices also ask about the additional overhead of the communication between control logic and infrastructure. But one thing at a time: There are different approaches to operating SDN.

In the symmetric model, the control logic is centralized as much as possible. This article has already described the advantages; the disadvantage is that the central instance becomes a single point of failure. The asymmetric model, on the other hand, gives the elements of the data plane enough information for immediate operation. The individual systems know the configurations relevant to them and can therefore keep working even if the control logic fails.

This approach often works well with the work methods of administrators who organize the network into autonomous cells. However, the greater independence from the central control plane also has its price. The asymmetric method leads to redundant information and more decentralized management.
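The difference in failure behavior between the two models can be sketched in a few lines of Python. This is a deliberately simplified illustration under stated assumptions: the classes `SymmetricSwitch` and `AsymmetricSwitch`, the `alive` flag, and the route table are invented for the example, and a real symmetric deployment would not necessarily consult the controller for every single packet.

```python
class ControllerDown(Exception):
    """Raised when the (hypothetical) control plane cannot be reached."""


class Controller:
    def __init__(self, routes):
        self.routes = dict(routes)
        self.alive = True

    def lookup(self, dst):
        if not self.alive:
            raise ControllerDown("control plane unreachable")
        return self.routes.get(dst)


class SymmetricSwitch:
    """Keeps no state of its own; every decision comes from the center."""

    def __init__(self, controller):
        self.controller = controller

    def forward(self, dst):
        return self.controller.lookup(dst)


class AsymmetricSwitch:
    """Holds a local copy of the configuration relevant to its own cell."""

    def __init__(self, controller, destinations):
        # Redundant information: these entries also exist in the controller.
        self.local = {dst: controller.lookup(dst) for dst in destinations}

    def forward(self, dst):
        return self.local.get(dst)


ctrl = Controller({"10.0.1.7": 1, "10.0.2.9": 2})
sym = SymmetricSwitch(ctrl)
asym = AsymmetricSwitch(ctrl, ["10.0.1.7"])

ctrl.alive = False  # simulate a control plane failure

print(asym.forward("10.0.1.7"))  # 1
try:
    sym.forward("10.0.1.7")
except ControllerDown as exc:
    print("symmetric switch cannot forward:", exc)
```

The price of the asymmetric variant is visible in the constructor: the local copy duplicates data held centrally and has to be kept in sync whenever the configuration changes.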

A second dimension concerns where the SDN work is done. In highly virtualized environments, it makes sense to let the hypervisor or the host do the necessary thinking. The other approach is network-centered, with dedicated network devices performing the SDN job. A combination of the two options is also conceivable but reduces the benefit.

The third dimension of the SDN operating models uses the distribution of information as a differentiator. This distribution can be either proactive or reactive. In a reactive environment, the network device receives a data packet and asks the control plane how to proceed. The advantage is that only the required information travels across the network. However, any problem in this exchange results in delivery delays.

Alternatively, the control logic can proactively supply the data plane with all the information. This technique typically relies on well-known broadcast and multicast mechanisms. The advantages and disadvantages are similar to those of the asymmetric operating model.
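The trade-off between reactive and proactive distribution can be made concrete with a small Python sketch. The class names, the `packet_in` method, and the route data are assumptions for illustration only, and the caching of answers in the reactive switch is an added simplification that the description above does not spell out.

```python
class Controller:
    """Hypothetical control plane that counts how often it is asked."""

    def __init__(self, routes):
        self.routes = dict(routes)
        self.queries = 0

    def packet_in(self, dst):
        self.queries += 1
        return self.routes.get(dst)


class ReactiveSwitch:
    """Asks the controller on a table miss and keeps the answer."""

    def __init__(self, controller):
        self.controller = controller
        self.flow_table = {}

    def forward(self, dst):
        if dst not in self.flow_table:
            # The first packet of a flow pays for a round trip to the controller.
            self.flow_table[dst] = self.controller.packet_in(dst)
        return self.flow_table[dst]


class ProactiveSwitch:
    """Receives the complete table up front and never asks again."""

    def __init__(self, controller):
        self.flow_table = dict(controller.routes)

    def forward(self, dst):
        return self.flow_table.get(dst)


ctrl = Controller({"10.0.1.7": 1, "10.0.2.9": 2, "10.0.3.3": 3})
reactive = ReactiveSwitch(ctrl)
proactive = ProactiveSwitch(ctrl)

for dst in ["10.0.1.7", "10.0.1.7", "10.0.2.9"]:
    reactive.forward(dst)
    proactive.forward(dst)

print(ctrl.queries)              # 2
print(len(reactive.flow_table))  # 2
print(len(proactive.flow_table)) # 3
```

The reactive switch ends up holding only the two entries it actually needed, at the cost of two controller round trips; the proactive switch holds all three entries but never had to wait.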

What Remains in the End

A lot has happened in the SDN environment over the past five years. The ONF product page [11] is now rather long and doesn't just list the usual suspects from the networking field. OpenFlow may have introduced standardization, but implementation by the manufacturers was, and unfortunately still is, a painful experience. SDN has appeared in tandem with Network Function Virtualization (NFV) [12] for some time. Both technologies are high on the list of topics for telecommunications giants. The OpenStack community has also shown great interest in recent polls.

SDN is here to stay. However, classic environments are still finding it difficult to part with the traditional approach. Technology is developing much faster than the necessary change in thinking. Anyone planning their network for the future will need to give some thought to SDN.