Software-defined networking in OpenStack with the Neutron module

Neutron Dance

If you have studied SDN, you probably know something about cloud computing. SDN would not be as widespread as it is today if cloud solutions such as OpenNebula or OpenStack did not include SDN as an integral component. This article takes a look at Neutron, the OpenStack module that handles SDN management.

SDN Basics

SDN means decoupling management functionality in the network from the switch hardware. Only dumb switches appear in SDN environments: Their task is merely to forward packets from one port to another. They do not split the network into logical segments.

The reason for this simplicity is the need for automation. The configuration of a conventional switch is mostly static; only an admin can change it. But clouds are supposed to work as automatically as possible, and it would be impractical in a cloud to have to change the switch configuration for a new virtual local area network (VLAN) manually once a new customer registers.

Rather than depending on switches, the VLAN function for clouds is provided by the individual computers of the installation. Whatever runs on the computers of the cloud can be configured and automated from the cloud.

The key to SDN is two cloud components: On the one hand, an API, typically following the REST principle, fields commands from the users; on the other hand, agents implement the desired configurations on the hosts.

Under the hood, Open vSwitch is almost always used to implement virtual switches on each host. The agents of the cloud solutions thus define a suitable Open vSwitch configuration on the hosts and empower customers to operate virtual networks.

What sounds complicated in theory is easily explained based on the example of OpenStack Neutron. Neutron is the OpenStack SDN component responsible for ensuring that virtual machines (VMs) have a functioning network, that is, a virtual customer network as well as a connection to the outside world.

Neutron As an Abstraction Layer

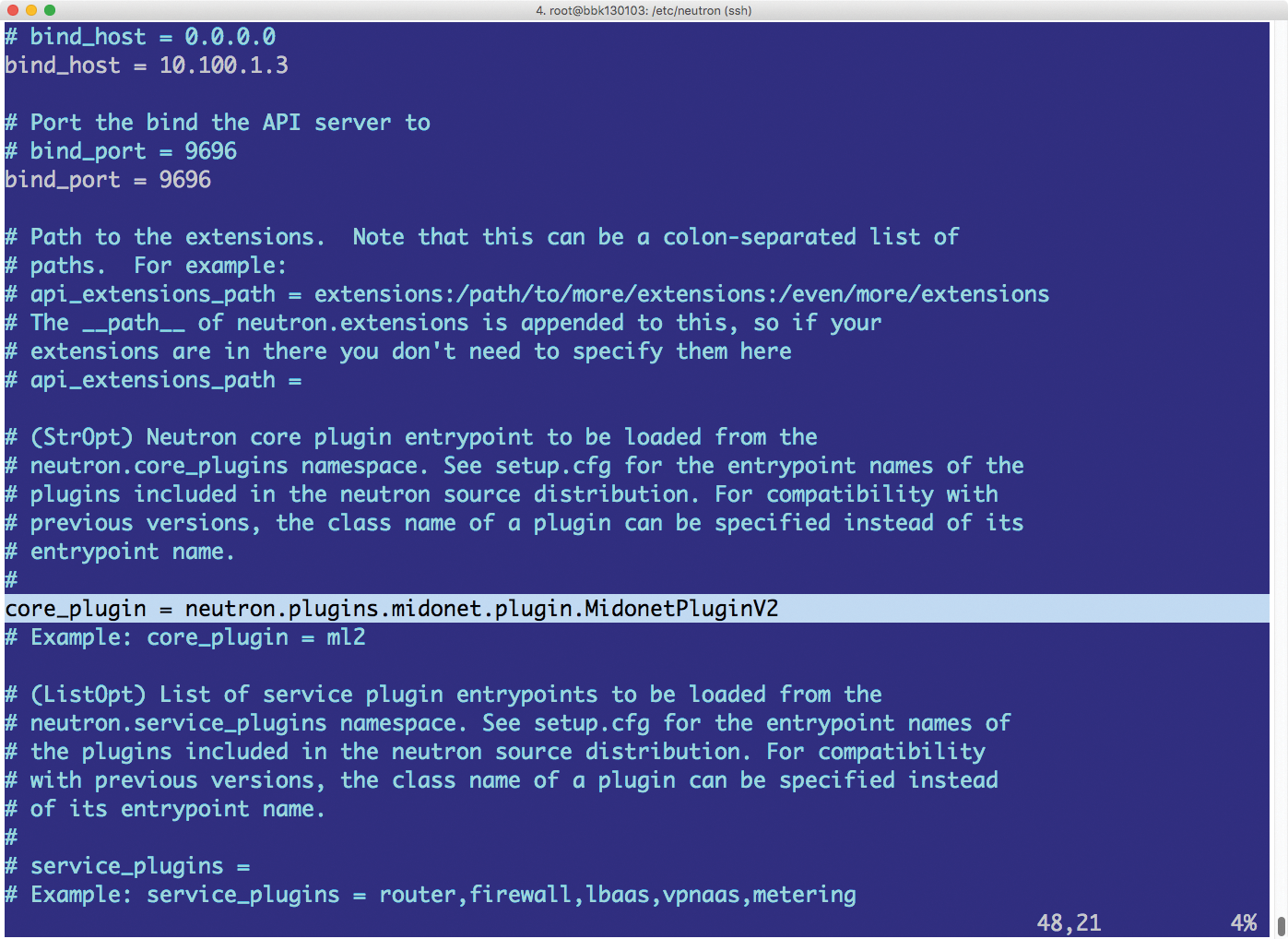

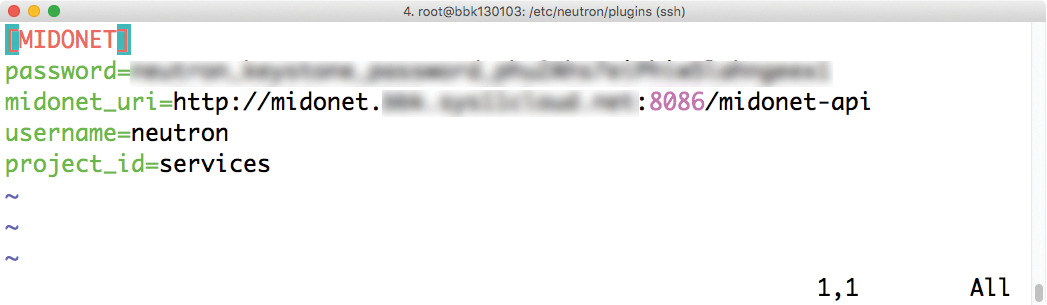

Neutron acts as an abstraction layer with its own plugin mechanism, which gives it the flexibility to incorporate Open vSwitch [1], MidoNet by Midokura [2], OpenContrail [3] by Juniper, and other SDN solutions – Neutron has a plugin for almost all SDN approaches on the market.

Variety also has disadvantages: In many cases, Neutron beginners do not know where to start to understand the solution. This article examines a standard setup with Open vSwitch. For completeness, I should mention that OpenStack developers do not unconditionally recommend the Open vSwitch version for production use.

SDN in OpenStack: The API

To understand the principle of SDN in OpenStack, you need to understand OpenStack Neutron. The Neutron server is a RESTful API in typical OpenStack style.

The Neutron API is the point of contact for any request relating to the SDN configuration in an OpenStack cloud: If a customer wants to create a virtual network via a web interface or Neutron command, the command ends up with the Neutron server. Nova, the OpenStack component that is responsible for managing VMs, talks to Neutron when a virtual port is needed for a new VM. Neutron also plays the role of assigning public IP addresses to VMs.
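To make the idea of "a command ends up with the Neutron server" more tangible, the following minimal sketch builds the HTTP request for creating a virtual network. The `/v2.0/networks` path and the `{"network": {...}}` body follow the Neutron v2.0 API; the endpoint host name, the token value, and the function name are assumptions made up for illustration, and nothing is actually sent.

```python
import json

# Hypothetical endpoint; a real cloud publishes its own URL in the service catalog.
NEUTRON_ENDPOINT = "http://controller.example.com:9696"


def build_create_network_request(name, token):
    """Return (method, url, headers, body) for a 'create network' call."""
    body = {"network": {"name": name, "admin_state_up": True}}
    headers = {
        "Content-Type": "application/json",
        "X-Auth-Token": token,  # token previously issued by the identity service
    }
    return "POST", f"{NEUTRON_ENDPOINT}/v2.0/networks", headers, json.dumps(body)


method, url, headers, body = build_create_network_request(
    "customer1-net-a", "example-token"
)
print(method, url)
print(body)
```

A web interface or command-line client ultimately produces a request of this shape; whether the network then becomes reality on the hosts is a matter for the plugin and the agents.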

The Neutron server actually does very little work by itself. Although the specification defines the API commands that clients need for certain tasks, the server does not even implement the commands in a specific configuration for specific servers. Instead, Neutron has a plugin interface (Figures 1 and 2), which you can use to retroactively load external functions.

Neutron itself has a number of plugins. For the standard scenario, you'll find a plugin that expands Neutron to include Open vSwitch features. Other plugins support communication between Neutron and components such as the MidoNet API or OpenContrail by Juniper. The current Neutron plugin interface, Modular Layer 2 (ML2), theoretically even allows several Neutron plugins to operate at the same time. In practice, such setups are rare.
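The division of labor between the API server and its plugins can be sketched in a few lines. This is a toy model of the pattern, not Neutron's actual plugin interface: all class and method names below are hypothetical.

```python
from abc import ABC, abstractmethod


class NetworkBackend(ABC):
    """Hypothetical plugin contract: what the server expects from any SDN backend."""

    @abstractmethod
    def create_network(self, name):
        ...


class OpenVSwitchBackend(NetworkBackend):
    def create_network(self, name):
        return f"ovs: network {name} scheduled for the L2 agents"


class ControllerBackend(NetworkBackend):
    """Stand-in for a backend that forwards to an external SDN controller."""

    def create_network(self, name):
        return f"controller: network {name} forwarded to external API"


class ApiServer:
    def __init__(self, backend):
        self.backend = backend

    def handle_create_network(self, name):
        # The server validates the request, then delegates the real work.
        if not name:
            raise ValueError("network name must not be empty")
        return self.backend.create_network(name)


server = ApiServer(OpenVSwitchBackend())
print(server.handle_create_network("net-a"))
```

Swapping `OpenVSwitchBackend` for `ControllerBackend` changes what happens behind the scenes without changing the API that clients see, which is the point of the abstraction layer.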

The Neutron Server, now extended to include an SDN plugin, acts as a central source of knowledge for all SDN-related information in OpenStack. But this is only half the job: On the hosts that ultimately run VMs, you somehow need to transfer the configuration stored in the Neutron database to the system configuration. To do this, the Neutron developers devised a solution where agents are available for the Neutron server on the hypervisor hosts: These agents receive their instructions from the Neutron server and then configure the target system as defined by the Neutron server.

The default setup with Open vSwitch relies on several agents in Neutron: The L2 agent implements the Open vSwitch configuration on the hypervisor hosts and ensures that VMs on the same customer networks can communicate across the nodes. The DHCP agent supplies local dynamic IP addresses to VMs. The L3 agent acts as an interface to the Internet gateway node.
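The way an agent brings a host in line with the configuration held by the server can be pictured as a comparison of desired and actual state. The sketch below is an assumption about the general pattern, not Neutron's agent code; the port names and the function name are invented for the example.

```python
def plan_changes(desired_ports, actual_ports):
    """Compare the server's view with the host's view and return the work to do.

    Both arguments map a port name to the ID of its virtual network.
    """
    to_add = {p: n for p, n in desired_ports.items() if p not in actual_ports}
    to_remove = sorted(p for p in actual_ports if p not in desired_ports)
    to_retag = {
        p: n
        for p, n in desired_ports.items()
        if p in actual_ports and actual_ports[p] != n
    }
    return to_add, to_remove, to_retag


desired = {"tap-db1": 5, "tap-web1": 5, "tap-app1": 7}  # from the server
actual = {"tap-db1": 5, "tap-old": 3, "tap-app1": 6}    # found on the host

to_add, to_remove, to_retag = plan_changes(desired, actual)
print(to_add)     # ports to plug in
print(to_remove)  # ports to unplug
print(to_retag)   # ports whose network ID must change
```

An agent built this way can be restarted at any time: It simply recomputes the difference and applies whatever is still missing.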

Inspecting the Agents

What exactly do the individual Neutron agents on the hosts do? How does a virtual switch work in concrete terms?

Clouds distinguish between two types of traffic. Local traffic is traffic flowing back and forth between two VMs. The big challenge is to implement virtual networks across node borders. If multiple VMs of a customer are running on nodes 1 and 2, they need to be able to talk. The other kind of traffic is Internet traffic: VMs want to talk to the outside world and receive incoming connections.

The two concepts of overlay and underlay are central in the SDN cloud context. They refer to two different levels of the network: Underlay means all network components that are required for communication between the physical computers. Overlay sits on top of the underlay; it is the virtual space where customers create their virtual networks. The overlay thus uses the network hardware of the underlay. In other words, the overlay uses the same physical hardware that the services on the computing node use to communicate with other nodes.

To avoid chaos, all SDN solutions on the market support at least some type of encapsulation: For example, you can use Open vSwitch with VxLAN or Generic Routing Encapsulation (GRE). VxLAN is an extension of the VLAN concept intended to improve scalability. GRE offers very similar functions.

Important: Neither of these solutions supports encryption; they are therefore not virtual private networks (VPNs). The only reason for encapsulation in the underlay of a cloud is to separate the traffic of virtual networks from the traffic of the tools on the physical host.
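What encapsulation adds to a packet can be shown with the VxLAN header, which is only eight bytes long. The sketch below follows the header layout from RFC 7348 (an 8-bit flags field, 24 reserved bits, a 24-bit network identifier, and 8 more reserved bits); the function names are made up for this example, and the outer UDP, IP, and Ethernet headers are left out.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348


def vxlan_header(vni):
    """Build the 8-byte VXLAN header for a 24-bit network identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit into 24 bits")
    # Layout: flags (8 bits), reserved (24 bits), VNI (24 bits), reserved (8 bits)
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header):
    """Extract the network identifier from the first 8 bytes of a VXLAN packet."""
    _, second_word = struct.unpack("!II", header[:8])
    return second_word >> 8


header = vxlan_header(5)
print(header.hex())
print(parse_vni(header))
print(2**12, 2**24)  # size of the VLAN ID space vs. the VXLAN identifier space
```

The last line shows where the scalability gain comes from: A 12-bit VLAN ID allows 4,096 values, whereas the 24-bit identifier allows more than 16 million. Note that the header contains nothing that would encrypt or authenticate the payload.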

ID Assignments in the Underlay

For local traffic to flow from the sender to the receiver, Neutron agents enable virtual switches on the computing nodes. The Open vSwitch kernel module makes these switches available. At the host level, virtual switches look like normal bridge interfaces; however, they have a handy extra feature: They let you add IDs to packets. A fixed ID is associated with each virtual network that exists in the cloud. The virtual network adapters of the VMs that are connected to the virtual switch during operation stamp each incoming packet with the ID of the associated network.

The process works much like tagged VLANs. But in the SDN cloud context, the switch that applies the tag is the bridge that is connected to the VM. And because agents within the cloud access the same configuration, the IDs used for specific networks are the same on all hosts.

On the other side of the bridging interface is encapsulation, that is, typically GRE or VxLAN. Although both protocols build tunnels, they are stateless: A full mesh network of active connections is not created. SDN solutions use the encapsulated connections to handle traffic with other hosts. Traffic for VMs on another node is always routed through the tunnel.

A concrete example will contribute to a better understanding: Assume a VM named db1 belongs to Network A of customer 1. The NIC is connected to the Open vSwitch bridge on the computing node. The port of the VM on that bridge has an ID of 5. All the packets that arrive at the virtual switch through this port are thus automatically tagged with this ID.

If the VM db1 now wants to talk to the server web1, the next step depends on where web1 is running: If it is running on the same computing node, the packet arrives at the db1 port on the virtual switch and leaves via the port of the VM web1, which has the same ID. If web1 is running on another system, the packet enters the GRE or VxLAN tunnel and reaches the virtual switch on the remote host. That virtual switch checks the ID and finally forwards the packet to the destination port.

Virtual switches do two things: They uphold strict separation between the underlay and overlay through encapsulation, and they use a dynamic virtual switch configuration to ensure that packets from a virtual network only reach VMs that are connected to the same network. The task of Neutron's SDN-specific agent (L2 agent) is to provide the virtual switches on the host with the appropriate IDs. Without these agents, virtual switches would be unconfigured and therefore unusable on all hosts.
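The db1 and web1 example can be traced with a small simulation. It is a deliberately simplified model under stated assumptions: Real virtual switches work with flow tables and MAC addresses, whereas the hypothetical `VirtualSwitch` class here only remembers which VM port carries which network ID.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualSwitch:
    host: str
    ports: dict = field(default_factory=dict)  # VM name -> network ID

    def plug(self, vm, network_id):
        self.ports[vm] = network_id

    def deliver(self, network_id, dst_vm):
        # Only hand the packet over if the destination port carries the same ID.
        return self.ports.get(dst_vm) == network_id


def send(src_vm, dst_vm, switches):
    """Trace one packet and return (path, delivered)."""
    src_switch = next(s for s in switches if src_vm in s.ports)
    network_id = src_switch.ports[src_vm]  # tag applied at the ingress port
    for switch in switches:
        if dst_vm in switch.ports:
            path = "local" if switch is src_switch else "tunnel"
            return path, switch.deliver(network_id, dst_vm)
    return "none", False


node1 = VirtualSwitch("node1")
node2 = VirtualSwitch("node2")
node1.plug("db1", 5)
node1.plug("cache1", 5)
node2.plug("web1", 5)
node2.plug("other-customer", 9)

print(send("db1", "cache1", [node1, node2]))
print(send("db1", "web1", [node1, node2]))
print(send("db1", "other-customer", [node1, node2]))
```

The third call shows the isolation property: The packet may travel through the tunnel, but the remote switch refuses to deliver it to a port with a different ID.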

The described system is sufficient for managing and using VLANs.

Similar Approach for Internet Traffic

The best VM is of little value if you can't access the Internet or if it is unreachable from the outside. Virtually all SDN approaches stipulate that flows of traffic from or to the Internet use a separate gateway, which is configured directly from the cloud. The gateway does not even need to be a separate host; the necessary software can run on any computing node as long as it is powerful enough.

"Software," in this case, means at least network namespaces and in most cases also a Border Gateway Protocol (BGP) daemon of its own. Basically, a typical OpenStack gateway is attached to the underlay quite normally; for those networks for which virtual gateways were configured at OpenStack level, a virtual network interface that is attached to a virtual switch in the overlay also exists on the gateway. Naturally, if you want to route packets into the Internet, this traffic must be separated in the overlay from the traffic of other virtual networks.

In the final step, network namespaces help to assert traffic separation between virtual networks en route into the Internet. Network namespaces are a feature of the Linux kernel, allowing virtual network stacks on the host that are separate from each other and from the main network stack of the system.
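How a separate network stack comes into being is easiest to see from the commands involved. The sketch below only assembles `ip` command lines (from the iproute2 suite) and prints them; nothing is executed. The `qrouter-` prefix mirrors the naming used by the Neutron L3 agent, while the router ID, interface name, and address are invented for the example.

```python
def namespace_commands(router_id, interface, cidr):
    """Return the iproute2 commands that would isolate one router's traffic.

    Nothing is executed here; a privileged process could pass each list
    to subprocess.run().
    """
    namespace = f"qrouter-{router_id}"
    in_namespace = ["ip", "netns", "exec", namespace]
    return [
        ["ip", "netns", "add", namespace],
        ["ip", "link", "set", interface, "netns", namespace],
        in_namespace + ["ip", "addr", "add", cidr, "dev", interface],
        in_namespace + ["ip", "link", "set", interface, "up"],
    ]


for command in namespace_commands("router-a", "tap-example", "192.0.2.10/24"):
    print(" ".join(command))
```

Because every namespace has its own interfaces and routing table, two customers can even use the same private address range without their traffic colliding on the gateway.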

Using Public IP Addresses

Public IPv4 addresses are typically used in two places in OpenStack clouds: On the one hand, IP addresses are necessary in the context of the gateway, because a network namespace needs a public IP address to act as a bridge between a virtual customer network and the real Internet. On the other hand, many customers want to allow access to their VMs from the Internet via a public IP address.

How IPv4 management works depends on the SDN solution: In the default setup of Open vSwitch, the L3 agent is controlled by Neutron on the gateway node. It only creates virtual tap interfaces in the namespaces and then assigns a public IP.

Advanced solutions such as MidoNet or OpenContrail by Juniper can even speak BGP (see the box entitled "Border Gateway").

Floating IPs

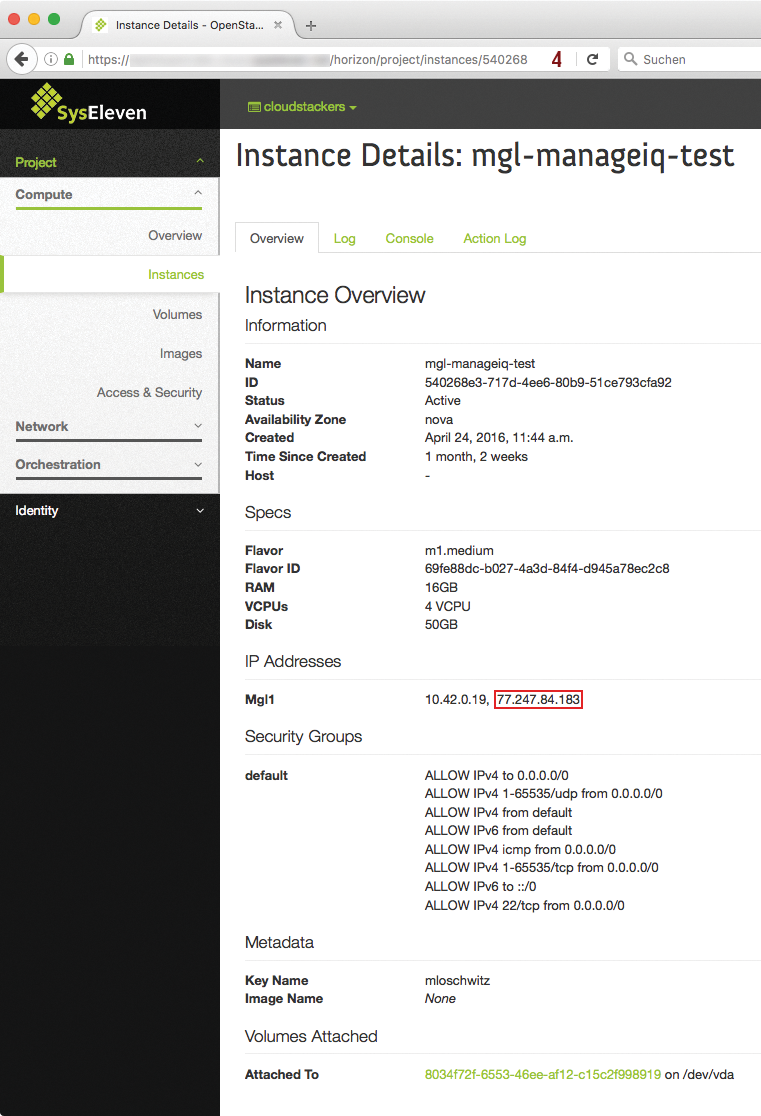

In the cloud context, IPv4 addresses that customers can dynamically allocate and return are called floating IPs. This concept regularly meets with incomprehension even from experienced sys admins: If you are accustomed to using the system configuration to give a machine a public IP address, you might feel slightly uncomfortable when you click on the OpenStack web interface.

However, there are good reasons for floating IPs: First, the floating IP concept lets you implement genuine on-demand payment; customers thus really only pay for the IP addresses they need (Figure 3). Second, floating IPs keep down the overhead caused by network segmentation: In OpenStack, a network can be configured with a /24 prefix as a complete block. The network address, gateway, and broadcast address only need to be specified once. The remaining IP addresses can be used individually. Typical segmentation would waste several addresses per segment for broadcast, network, and gateway addresses.
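The savings from avoiding segmentation are easy to quantify with Python's `ipaddress` module. The address block below comes from a documentation range, and the choice of /29 segments is an assumption made purely for comparison.

```python
import ipaddress

RESERVED_PER_SEGMENT = 3  # network, broadcast, and gateway address

block = ipaddress.ip_network("203.0.113.0/24")

# One flat /24: the three special addresses are reserved exactly once.
flat_usable = block.num_addresses - RESERVED_PER_SEGMENT

# Classic segmentation: one /29 per customer, each with its own overhead.
segments = list(block.subnets(new_prefix=29))
segmented_usable = sum(s.num_addresses - RESERVED_PER_SEGMENT for s in segments)

print(flat_usable)
print(len(segments), segmented_usable)
```

With a flat block, 253 of the 256 addresses can be handed out individually. Cutting the same block into 32 segments of eight addresses leaves only 160 for customers, because each segment pays the three-address overhead again.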

A third benefit of floating IPs is that dynamic IP addresses allow processes such as updates within a cloud. A database can be prepared and preconfigured. Commissioning then merely means redirecting an IP address from the old VM to the new one.
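The cutover described above boils down to changing one entry in a mapping table. The following toy model illustrates the idea; the class, its methods, and all addresses are hypothetical and do not reflect how any particular SDN backend stores the mapping.

```python
class FloatingIpTable:
    """Toy model: maps public (floating) addresses to private VM addresses."""

    def __init__(self, pool):
        self.free = list(pool)
        self.mapping = {}

    def allocate(self):
        # The customer takes an address from the pool and starts paying for it.
        return self.free.pop(0)

    def associate(self, floating_ip, fixed_ip):
        # Re-associating simply overwrites the previous target.
        self.mapping[floating_ip] = fixed_ip

    def release(self, floating_ip):
        # The address returns to the pool and can be used by someone else.
        self.mapping.pop(floating_ip, None)
        self.free.append(floating_ip)

    def translate(self, floating_ip):
        return self.mapping.get(floating_ip)


table = FloatingIpTable(["203.0.113.10", "203.0.113.11"])
public_ip = table.allocate()
table.associate(public_ip, "10.0.0.5")  # old database VM
table.associate(public_ip, "10.0.0.6")  # cut over to the new VM
print(public_ip, "->", table.translate(public_ip))
```

Neither VM needs to know its public address: Both keep their private addresses, and only the mapping changes.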

On the technical side, the implementation of floating IPs can be derived from the preceding observations: On a pure Open vSwitch, the Neutron L3 agent simply configures the floating IP on an interface within the network namespace, which is assigned to the virtual client network with the target VM. BGP-based solutions use a similar approach and ultimately ensure that the packets reach the right host.

The situation is quite similar with DHCP agents: A DHCP agent can only act on a virtual customer network if it has at least one foot on it. The hosts running the Neutron DHCP agent are therefore also part of the overlay. Network namespaces are used here as well: A network namespace with a virtual tap interface and a corresponding Open vSwitch configuration is created for each virtual network. A separate instance of the Neutron DHCP agent runs for each of these namespaces. Booting VMs issue a DHCP request in the normal way; this passes through the overlay and reaches the host running DHCP, where it receives a response.

Metadata Access

The fact that SDN sometimes does very strange things is best illustrated by metadata access in OpenStack. Following the model established by Amazon's cloud, a tool named cloud-init launches when a VM starts in the cloud and executes an HTTP request to the address 169.254.169.254 to retrieve information such as its hostname or the SSH keys that should allow access. The IP address stated here is not part of the IP space of the virtual network created by the customer, so the request is first routed to the gateway node.

The problem is that the Nova API service, which mostly runs on separate cloud controllers, provides the metadata, and these controllers have no connection to the cloud overlay unless they happen to run the DHCP or L3 agent. The Neutron developers ultimately resorted to a fairly primitive hack: The gateway node runs a metadata service that consists of two parts. The metadata proxy, which runs inside the network namespace, intercepts the HTTP request and hands it over a UNIX socket to the metadata agent, which finally passes it to the Nova API directly in the cloud underlay. On the way back, the packets take the reverse route.
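Why the metadata request ends up at the gateway follows from ordinary routing logic, as the short sketch below shows. The customer network 10.0.0.0/24 and its gateway address are assumptions chosen for the example, and `next_hop` is a simplified stand-in for a VM's routing decision.

```python
import ipaddress

METADATA_ADDRESS = ipaddress.ip_address("169.254.169.254")


def next_hop(destination, local_network, gateway):
    """Return where a VM sends a packet: directly on-link or to its gateway."""
    if destination in local_network:
        return destination
    return gateway


customer_net = ipaddress.ip_network("10.0.0.0/24")  # example customer network
gateway = ipaddress.ip_address("10.0.0.1")

print(METADATA_ADDRESS.is_link_local)
print(next_hop(METADATA_ADDRESS, customer_net, gateway))
print(next_hop(ipaddress.ip_address("10.0.0.7"), customer_net, gateway))
```

The metadata address lies in the link-local range and outside the customer network, so the VM hands the request to its default gateway, whereas a neighbor such as 10.0.0.7 is reached directly.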

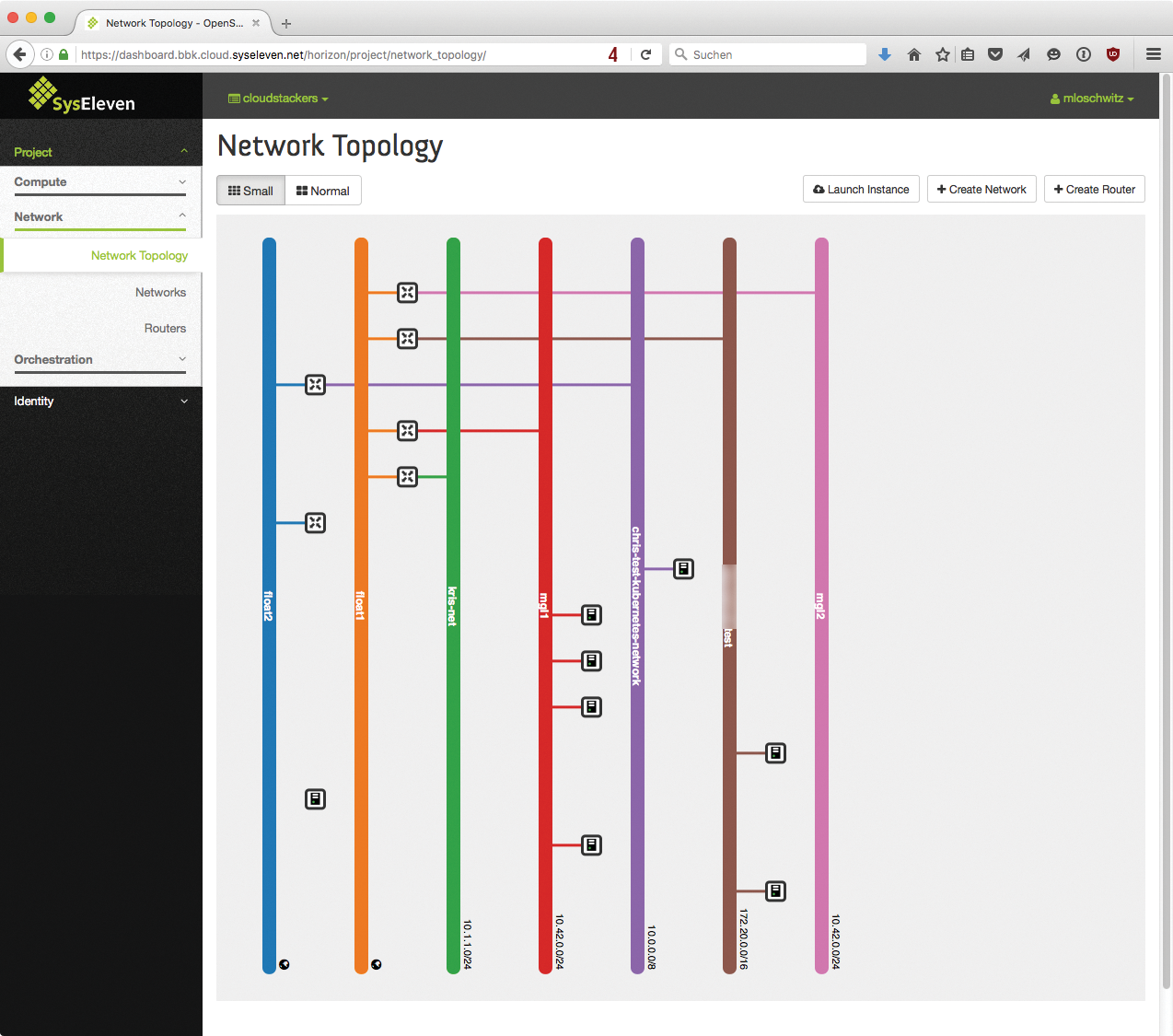

From the User's Point of View

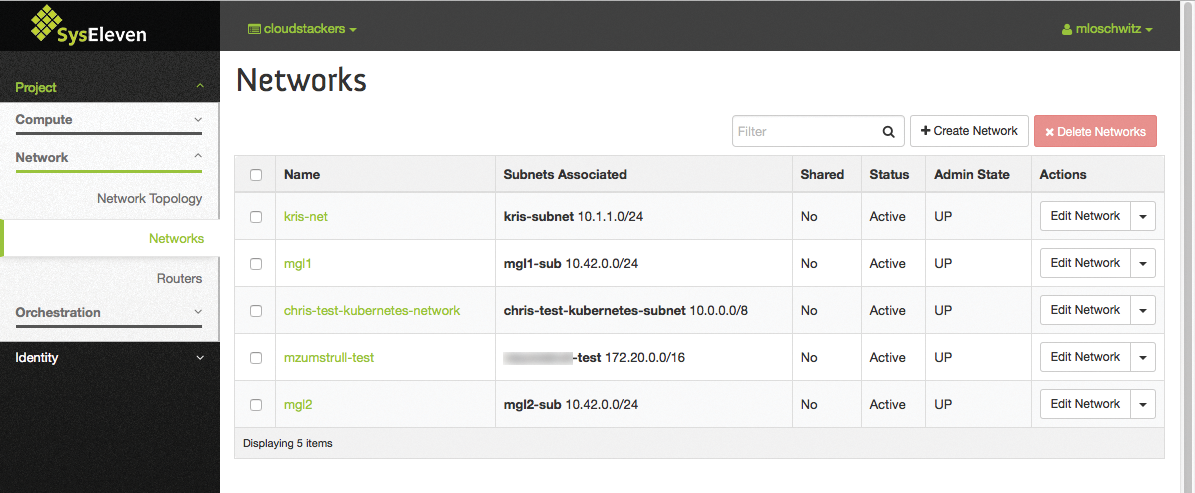

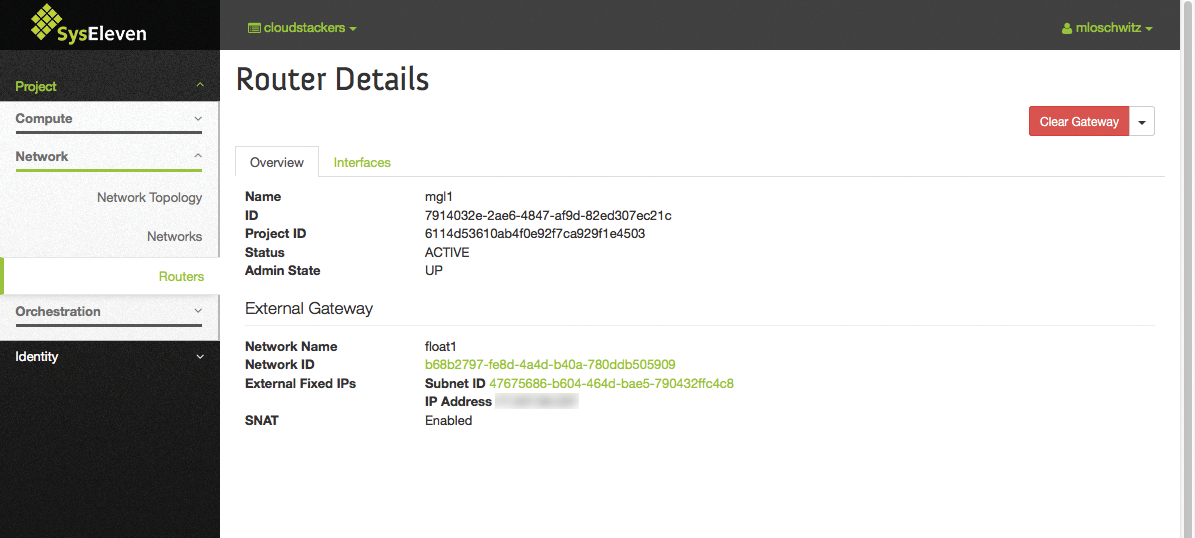

OpenStack combines many technologies in the background – and that seems to work. Users click their virtual networks together via a web interface (Figures 4 and 5) and do not need to worry about unauthorized third parties seeing the traffic from their systems. The most important aspects in terms of Internet access are covered by virtual routers (Figure 6) and floating IP addresses.

The solution is also fun from the provider's perspective: Although the learning curve is steep and it takes some time before the SDN works, once it does, the network causes admins very little effort. Because the whole physical infrastructure at switch level is a flat hierarchy and the entire network can be configured directly from within the cloud, configuring switches is a thing of the past.

Neutron's modular architecture allows providers to add new features with little overhead. X as a service (XaaS) in Neutron, for example, lets developers implement load balancers as a service via an agent that installs an HAProxy instance on a previously defined host. The load balancer is configured centrally with the Neutron CLI or via the OpenStack dashboard.

The same is true for firewall as a service (FWaaS) and VPN as a service (VPNaaS): Developers have no problem accessing the existing Neutron infrastructure and using agents to provide the desired services. In the example of VPNaaS, the customer can connect to their virtual cloud network directly via VPN.

Which SDN Solution?

Neutron does not make any decisions for the admin when it comes to choosing the right SDN technology. The Neutron developers consider Open vSwitch unsuitable for a variety of scenarios, especially for large environments that comprise hundreds of computing nodes.

If you want to roll out OpenStack on a large scale, you will need to consider solutions such as MidoNet, Plumgrid, or OpenContrail sooner or later. Each of these options has strengths and weaknesses. If you are in doubt, only extensive evaluation will help you decide the best solution for your network.