Storage innovations in Windows Server 2016

Employee of the Month

Windows Server 2016 offers many advances in network storage. To understand what is happening in Microsoft storage now, it is best to start with a recap of some innovations that arrived in Windows Server 2012.

With the Windows Server 2012 release, Microsoft first unveiled an option for setting up a file server for application data using on-board tools. This feature assumes two to eight servers that run a file server in a failover cluster and thus offer high availability. The storage can either be SAS disks in enclosures or logical unit numbers (LUNs) attached via a Fibre Channel storage area network (FC SAN) or Internet Small Computer System Interface (iSCSI). This storage is then provided to the application servers, such as Hyper-V or SQL Server, over the network. SMB version 3 is used as the protocol.

In Windows Server 2012 R2, Microsoft offered the ability to use SSDs and HDDs simultaneously in a storage pool for performance reasons. This technology, known as tiering, automatically moves frequently used data in 1MB chunks to fast disks (SSDs) during operation, while data that sees little or no use is stored on HDDs. This technique gives admins the ability to build high-performance, highly available, and economically attractive storage solutions.
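The idea behind tiering can be sketched in a few lines of Python. This is a simplified model, not Microsoft's actual algorithm: The function name `plan_tiers`, the ranking by raw access count, and the capacity parameter are assumptions made for illustration; only the 1MB chunk size comes from the description above.

```python
from collections import Counter

CHUNK_SIZE = 1024 * 1024  # 1MB chunks, as described in the text


def plan_tiers(access_offsets, ssd_chunk_capacity):
    """Split accessed chunks into an SSD set and an HDD set.

    Hypothetical model: chunks are ranked by how often they were
    accessed, and the hottest ones go to the SSD tier until the
    given number of SSD chunk slots is used up.
    """
    # Map each accessed byte offset to its 1MB chunk and count the hits.
    heat = Counter(offset // CHUNK_SIZE for offset in access_offsets)
    ranked = [chunk for chunk, _ in heat.most_common()]
    ssd = set(ranked[:ssd_chunk_capacity])
    hdd = set(ranked[ssd_chunk_capacity:])
    return ssd, hdd


# Chunk 0 is read three times, chunk 5 twice, chunk 2 once.
accesses = [0, 10, 20, 5 * CHUNK_SIZE, 5 * CHUNK_SIZE + 1, 2 * CHUNK_SIZE]
ssd_chunks, hdd_chunks = plan_tiers(accesses, ssd_chunk_capacity=2)
```

With room for two chunks on the SSD tier, the two most frequently accessed chunks end up on flash, and the remaining chunk stays on the hard drives.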

If you are using SSDs, 1GB of the available space is used as a write-back cache by default. This reduces the latency for write operations and the negative performance impact on other file operations. Other new features in Windows Server 2012 R2 were the support for parity disks in the failover cluster, the use of dual parity (similar to RAID 6), and the ability to automatically repair or recreate Storage Spaces given sufficient free space in the pool. This repair feature removed the need for "hot spare" media, because the free disk space on the functional disks is used to restore data integrity.

IOPS with Storage Spaces Direct

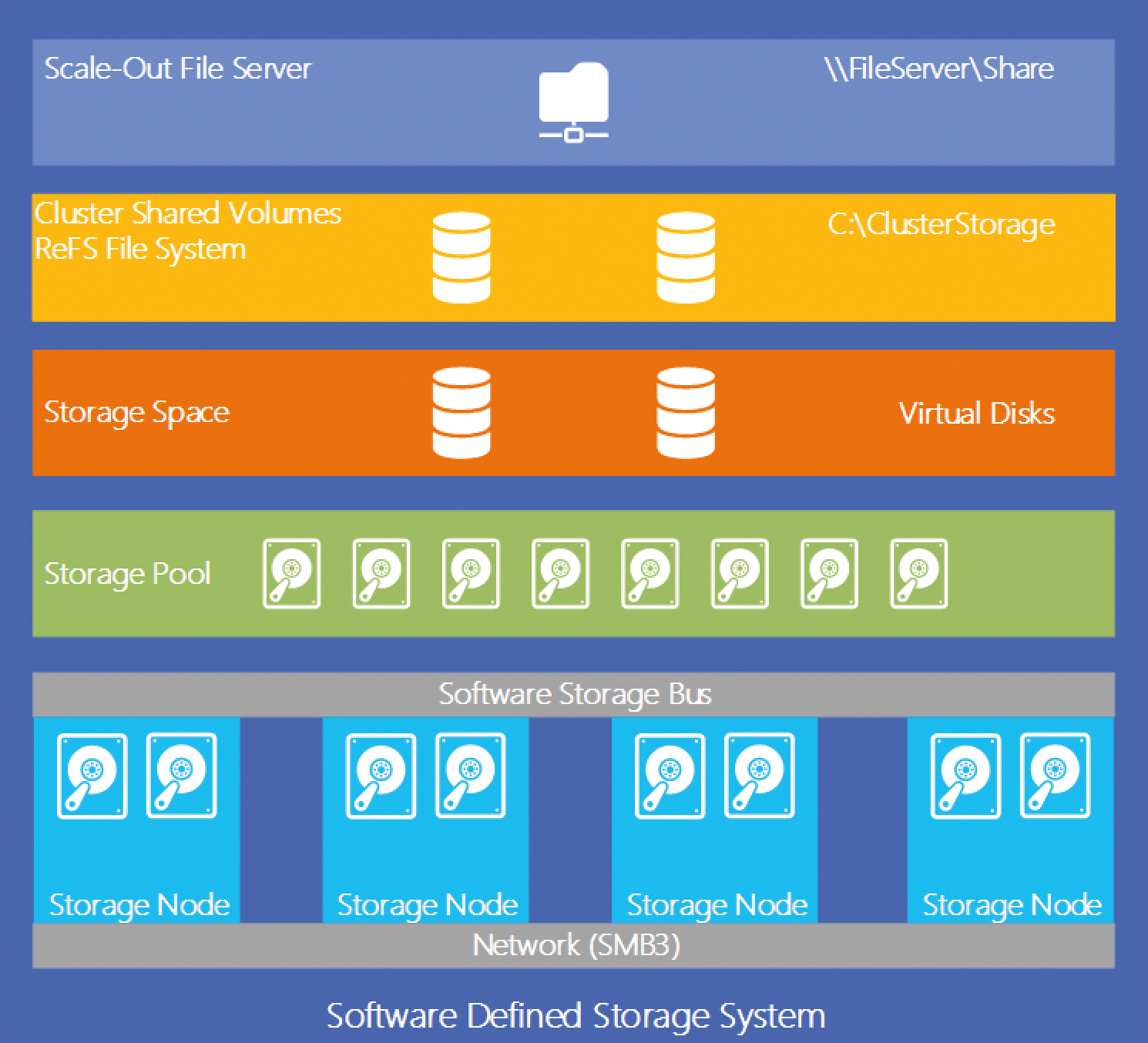

The upcoming Windows Server 2016 operating system adds some significant improvements in storage technology (Figure 1). Many companies are already using solutions that draw on local storage from multiple hosts that are logically grouped on a network. Microsoft enters this market with Storage Spaces Direct (S2D). The storage servers you use no longer need a common connection to one or multiple disk enclosures; you can now use locally installed media. The disks can be striped across multiple servers to create a pool that can serve as the basis for one or more virtual disks (vDisks). However, in building such an array, note that it can tolerate the loss of two servers at most (depending on the number of nodes).

Windows Server continues to rely on the SMB protocol – and has even improved it with the latest release, so you can also continue to use the core functionality of SMB3:

- SMB Multichannel: When using multiple network interface cards (NICs), the usable bandwidth increases, because data is transferred using all available NICs. The failure of one or more adapters is handled transparently; the link does not break down as long as at least one connection remains. Multichannel does not require teaming, although it is technically possible using multiple teams. The configuration and use of Multipath I/O (MPIO) is not supported; the use of multiple cards is defined in the SMB protocol.

- Remote Direct Memory Access (RDMA): The use of special NICs enables the use of SMB Direct, which allows servers to drop data directly into the RAM of partner servers. The CPU does not carry the load of the data transfer; the adapters handle it autonomously. RDMA allows for extremely high-performance data transfers with very low latency and a very low CPU load.

If you want to use S2D, there is already a fair amount of detail about the necessary preconditions: You can now also use SATA disks; the requirement for using devices with SAS ports has been dropped. Currently, SAS SSDs, in particular, are quite expensive. In the future, you will be able to go for the slightly cheaper SATA versions. But this does not mean that you should use cheap consumer-level components. Always go for enterprise hardware (see "Getting the Right Hardware" box).

If the performance of commercially available SSDs is insufficient, Windows Server 2016 lets you use Non-Volatile Memory Express (NVMe) memory. NVMe is flash memory connected directly via the PCI Express bus, which means it works around the limitations of the SAS/SATA bus. Microsoft presented a corresponding structure in collaboration with Intel at the Intel Developer Forum in San Francisco [2]. The minimum number of servers in such a cluster is four systems, each of which needs at least 64GB of RAM. As a minimum requirement, you need one SSD/flash memory device per node. However, installations where only hard drives are used are very rarely found in practice, so sufficient SSD memory exists in most cases. This is primarily because ordinary hard drives deliver between 100 and 200 IOPS, whereas an SSD achieves 20,000 to 100,000 IOPS depending on the model. This massive difference makes the use of tiering extremely attractive, even if the price of an SSD at first appears quite high.
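To put the IOPS figures quoted above into perspective, a quick back-of-the-envelope calculation helps. The helper name `hdds_needed` is made up for this example, and the calculation ignores RAID overhead, caching, and latency; it only divides the rough figures from the text.

```python
import math


def hdds_needed(target_iops, iops_per_hdd):
    """Number of hard drives needed to reach a target IOPS figure.

    Naive model: IOPS are assumed to add up linearly across drives.
    """
    return math.ceil(target_iops / iops_per_hdd)


# Best case for the hard drives: slow SSD (20,000 IOPS), fast HDD (200 IOPS).
best_case = hdds_needed(20_000, 200)    # 100 drives
# Worst case: fast SSD (100,000 IOPS), slow HDD (100 IOPS).
worst_case = hdds_needed(100_000, 100)  # 1000 drives
```

Even in the most favorable case for the hard drives, you would need around 100 spindles to match the raw IOPS of a single SSD.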

On the network, you will want to use RDMA cards with a rate of at least 10Gbps; cards with 40 or 56Gbps are better. In terms of price, investing in a 40Gbps infrastructure does not cost much more than buying a 10Gbps infrastructure.

Large and Small Scale

Two possibilities exist for using S2D. You can run a scale-out file server, which is used exclusively for provisioning the available storage; your Hyper-V virtual machines (VMs) then run on separate hosts that connect to the storage via SMB3. Alternatively, you could use a hyper-converged failover cluster, in which case your storage and your Hyper-V VMs run on the same hardware systems. This option was not previously supported with Windows on-board resources.

If you operate a very small environment that undergoes relatively little change in the course of operation, the hyper-converged model could be just the thing for you. At least four servers with local disks and sufficient RAM capacity, CPU performance, and network bandwidth will let you operate your entire, highly available VM infrastructure. This approach is not new; quite a few providers already offer such a solution. Technically, Microsoft is not offering a world premiere here, but as a user, you have the advantage that the technology is already included in the license, and you do not need to buy any commercial add-on software.

The second variant, separating storage and Hyper-V, is primarily suited for medium to large environments. Especially if you have a large number of VMs, the number of Hyper-V hosts in the failover cluster is significantly larger than the number of nodes in the storage cluster. In addition, if you need more Hyper-V hosts, you only need to extend this cluster without directly growing your storage space. If you use a hyper-converged cluster, you need to expand computing power and storage at the same time; just adding storage-only or compute-only nodes is not possible. Each additional server requires the same features as the existing systems, even if the additional space is not actually required.

Replication with On-Board Tools

Also new in Windows Server 2016 is the ability to replicate your data without the use of a hardware SAN or a third-party software solution. You can replicate your data synchronously or asynchronously. In synchronous replication, the data on the primary side is written directly to the secondary side. Technically, the process is as follows: When an application writes data to its storage space, this operation is written to a log. This log needs to reside in flash memory so that the process is completed as quickly as possible. At the same time, the data is transmitted to the remote side and also written to a log. Once the remote side confirms the successful write, the application that created the data receives confirmation that the write operation has been successfully completed. In the background, the changes are written out from the log to the actual disk. This background step does not delay the application, which has already received its confirmation. To ensure that this type of replication does not impact the performance of your VMs, the latency between the two sites needs to be minimized. Microsoft specifies a maximum round-trip time of five milliseconds; lower values are preferable, of course.

Asynchronous replication behaves a little differently. An application or VM on the primary side makes a change to its storage. As in the synchronous version, this data is first written to a log, which ideally resides on a flash disk. Once this write is complete, the application receives its confirmation. While the application is already generating new data, the information from the first process in the log is transmitted to the remote side and also written to a log. Once this process is complete, the remote side confirms successful storage. Now the data is also written out from the log to the disk.
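The difference between the two modes comes down to the point at which the application receives its confirmation. The following Python sketch models the order of events for one write as described above. It is a simplification: The step names are purely illustrative and do not correspond to any Storage Replica API, and the sketch lists the primary and remote log writes one after the other, although in synchronous mode they run in parallel.

```python
ACK = "application: receive confirmation"
REMOTE_OK = "remote: confirm write"


def replication_steps(mode):
    """Return the ordered steps of a single replicated write (illustrative)."""
    if mode == "synchronous":
        return [
            "primary: write to log",
            "remote: write to log",
            REMOTE_OK,
            ACK,  # the application waits for the remote side
            "background: write log out to disk",
        ]
    if mode == "asynchronous":
        return [
            "primary: write to log",
            ACK,  # the application continues immediately
            "remote: write to log",
            REMOTE_OK,
            "background: write log out to disk",
        ]
    raise ValueError(f"unknown mode: {mode}")


def acknowledged_before_remote(mode):
    """True if the application is confirmed before the remote side responds."""
    steps = replication_steps(mode)
    return steps.index(ACK) < steps.index(REMOTE_OK)
```

In this model, `acknowledged_before_remote("synchronous")` is false and `acknowledged_before_remote("asynchronous")` is true, which is why the round-trip time between the sites only affects application latency in the synchronous case.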

In both versions, you need to consider a number of conditions:

- The primary and secondary sides need to have the same data media, data media types, volumes, formatting, and block size.

- The log should be stored in flash memory, which stores the data very quickly.

- The latency for synchronous replication must not exceed five milliseconds. RDMA is an option but not mandatory.

- The bandwidth should be as high as possible. As a minimum, you should go for 10Gbps; higher bandwidths are always better.

Storage Replica can be used in several scenarios, regardless of whether you choose synchronous or asynchronous transmission. Technically, data replication occurs at the volume level. This means that it does not matter how the data is stored on the volume, what filesystem you use, or where the data is located. Unlike Distributed File System Replication (DFSR), Storage Replica does not replicate files. You can replicate data between two different servers, within a stretch cluster, or between two clusters. SMB3 is used to transfer the data. This approach allows for the use of various techniques: Multichannel, Kerberos support, encryption "over the wire," and signing.

The target drive is not accessible while replication is active. It would also be wrong to view the replication of your data as a backup. If a logical error in your data occurs on the primary side, for example, this error is also replicated, and you have no backup of the data and thus no option for reverting to the original and correct state.

Goodbye NTFS

New Technology File System (NTFS) has more or less ended its useful life as a filesystem for storing data on Windows Server 2016 systems. Microsoft has announced the Resilient File System (ReFS) as the new default filesystem for use with virtualization. By using and verifying checksums, ReFS is protected against logical errors or incorrect bits ("bit rot") even with very large volumes of data. The filesystem itself writes checksums, but you can also check and correct the data on the volume if necessary. Checks and repairs that previously required CHKDSK now happen on the fly; there is no need to schedule downtime.

When using ReFS as storage for Hyper-V VMs, you will find some additional improvements. Generating VHDX files with a fixed size now requires only a few seconds. Unlike previously, this operation does not fill the volume with zeros first; instead, a metadata operation occurs in the background that tags the entire space as occupied in a very short time. When resolving Hyper-V checkpoints (known as snapshots in Windows Server 2012), there is no longer any need to move data. Resolving checkpoints is now a metadata operation. Even checkpoints with a size of several hundred gigabytes can be merged within the shortest possible time. This reduces the burden dramatically for other VMs on the same volume.

Better Storage Management

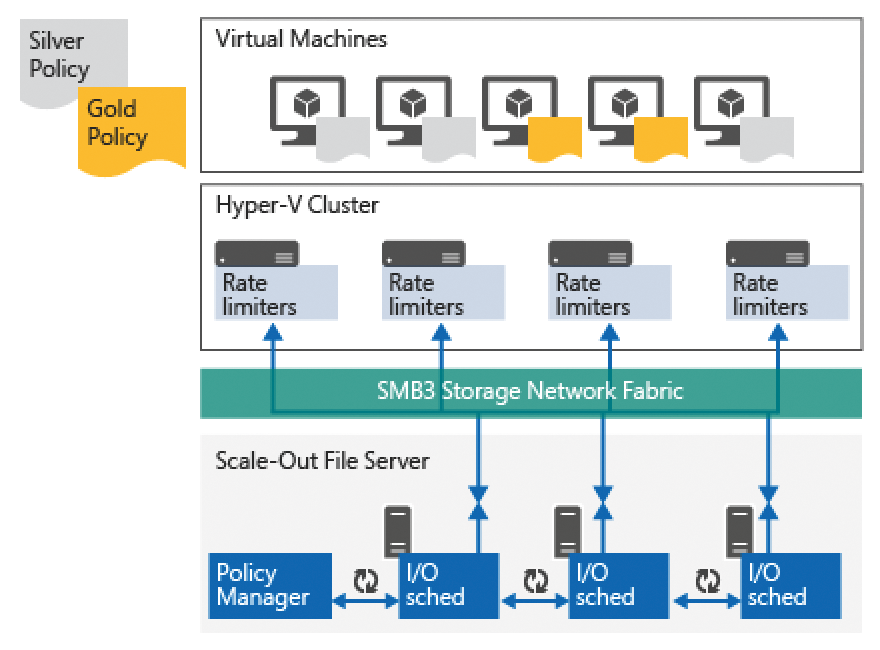

In Windows Server 2012 R2, IT managers can only restrict individual VHD or VHDX files. This changes fundamentally with Storage Quality of Service (QoS) in Windows Server 2016, which significantly expands the options for measuring and limiting the performance of an environment. Thanks to the use of Hyper-V (usually in the form of a Hyper-V failover cluster with multiple nodes) and a scale-out file server, the entire environment can be monitored and controlled.

By default, the system ensures that, for example, a VM cannot grab all resources for itself and thus paralyze all other VMs (the "noisy neighbor" problem). Once a VM is stored on the scale-out file server, logging of its performance begins. You can retrieve these values with the Get-StorageQosFlow PowerShell cmdlet, which lists all VMs with their measured values. These values can be used as a basis for adapting the environment, say, to restrict a VM.

In addition to listing the performance of your VMs, you can configure different rules that govern the use of resources. These rules regulate either individual VMs or groups of VMs by setting a limit or an IOPS guarantee. If you define, say, a limit of 1,000 IOPS for a group, all VMs together cannot exceed this limit. If five of six VMs are consuming virtually no resources, the sixth VM can claim the remaining IOPS for itself. Among others, this scenario targets hosters and large-scale environments that want to assign the same amount of compute power to each user or to control the compute power to reflect billing. Within a storage cluster, you can define up to 10,000 rules that ensure the best possible operations in the cluster, thus avoiding bottlenecks.
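The effect of a group limit is easy to work through with numbers. The following Python sketch computes how many IOPS remain for one VM once the consumption of the other group members is subtracted from the shared limit. The function name `headroom_for` and the VM names are invented for this example; how Storage QoS actually distributes IOPS among competing VMs is not modeled here.

```python
def headroom_for(vm, usage, group_limit):
    """IOPS still available to one VM under a shared group limit.

    usage maps VM names to their current IOPS consumption; the entry
    for the VM in question is ignored.
    """
    others = sum(iops for name, iops in usage.items() if name != vm)
    return max(0, group_limit - others)


# Five nearly idle VMs at 10 IOPS each, plus one busy VM, under a 1,000 IOPS limit.
usage = {f"vm{i}": 10 for i in range(1, 6)}
usage["vm6"] = 0
available = headroom_for("vm6", usage, group_limit=1000)  # 950
```

In this scenario, the sixth VM can use up to 950 IOPS, because the five idle VMs together consume only 50 of the 1,000 IOPS allowed for the group.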

Technically, Storage QoS is based on a policy manager in a scale-out file server cluster that is responsible for central monitoring of storage performance. This policy manager can be one of the cluster nodes; you do not require a separate server. Each node also runs an "I/O scheduler" that is important for communication with the Hyper-V hosts. A rate limiter also runs on each node; the limiter communicates with the I/O scheduler, receiving and implementing reservations or limitations from it (Figure 2).

Every four seconds, the rate limiter runs on the Hyper-V and storage hosts; the QoS rules are then adapted if required. The IOPS are referred to as "normalized IOPS," which means that operations are counted in units of 8KB. An operation smaller than 8KB still counts as one normalized IOPS, whereas a 32KB operation counts as four.
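The normalization rule can be expressed as a short calculation. In the following Python sketch, the function name `normalized_iops` is made up for illustration, and rounding up sizes that are not a multiple of 8KB is an assumption; the text only states the cases of smaller operations and of 32KB.

```python
import math

NORMALIZATION_SIZE = 8 * 1024  # 8KB, the unit named in the text


def normalized_iops(io_size_bytes):
    """Count one I/O operation in normalized 8KB units.

    Anything up to 8KB counts as one unit; larger operations are
    rounded up to the next full unit (assumption).
    """
    return max(1, math.ceil(io_size_bytes / NORMALIZATION_SIZE))


small = normalized_iops(4 * 1024)   # 1
exact = normalized_iops(8 * 1024)   # 1
large = normalized_iops(32 * 1024)  # 4
```

A VM that issues mostly large I/O operations therefore reaches its IOPS limit considerably sooner than the raw number of operations would suggest.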

Because monitoring takes place automatically on Windows Server 2016 when it is used as a scale-out file server, you can very quickly determine what kind of load your storage is exposed to and what resources each of your VMs requires. If you upgrade your scale-out file servers when the final version of Windows Server 2016 is released next year, this operation alone, along with the improvements in the background, will help to optimize your Hyper-V/Scale-Out File Server (SOFS) environment.

If you do not use scale-out file servers and have no plans to change, you can still benefit from the Storage QoS functionality. According to Senthil Rajaram, Microsoft Program Manager in the Hyper-V Group, this feature is being introduced for all types of Cluster Shared Volumes (CSV). This change means you will be able to configure a restriction or reservation of IOPS if you use iSCSI or FC SAN.

Organization is Everything

The currently available version of Storage Spaces offers no way to reorganize the data. Such a feature would be useful, for example, after a disk failure. If one disk fails, either a hot spare disk takes its place, or the free space within the pool is used to repair the mirror (which is clearly preferable to one or more hot spare disks). When the defective medium is replaced, there is no way to reorganize the data to bring the new disk to the same fill level as the other disks.

In Windows Server 2016, you can reorganize the data using the Optimize-StoragePool cmdlet. In this process, the data within the specified pool is analyzed and rearranged, so that each disk has a similar fill level after the procedure completes. If another disk fails, all the remaining disks, and the free storage space, are available for restoring the mirror.
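What "a similar level on each disk" means can be illustrated with a small calculation. The following Python sketch is a simplified model under the assumption that all disks have the same capacity; it does not reflect how Windows actually chooses which data to move, and the function name `rebalance_plan` and the disk names are invented for the example.

```python
def rebalance_plan(used_gb):
    """Return, per disk, how many GB to add (+) or remove (-) to level the pool.

    used_gb maps disk names to the space currently in use. Assumes
    equally sized disks, so the target is simply the average usage.
    """
    target = sum(used_gb.values()) / len(used_gb)
    return {disk: target - used for disk, used in used_gb.items()}


# Two disks hold 600GB each; the replacement disk is still empty.
plan = rebalance_plan({"disk1": 600, "disk2": 600, "disk3": 0})
```

In this example, the plan moves 200GB off each of the two full disks and places 400GB on the new disk, so that all three end up at the same level.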

Conclusions

Microsoft has extended its already highly usable storage solution to include many features that were often missed previously, especially in enterprise environments. The possibilities of storage replication were often what prompted customers to rely on third-party solutions in the past. These replication functions are now included directly in the operating system and offer high availability solutions without an additional charge.

The hyper-converged failover cluster has attracted the attention of some users who would like to operate storage and compute together. This article was an overview of the previously known information, based on Technical Preview 3. Please note that all of these features may yet change, or some features might not be adopted into the final version.