Useful tools for the automation of network devices

Easy Maintenance

The way in which admins manage the infrastructure at the data center has changed significantly in recent years: Whereas most setups not so long ago were home brewed, automation has asserted itself across the board. That said, some blind spots remain; for example, hardware is often not maintained automatically, although it is possible in principle. The network infrastructure is a prime example; after all, network admins often still painstakingly maintain the hardware of Juniper, Cisco, and other established vendors by hand.

As the setup grows in size, it is increasingly difficult to maintain with manual techniques. Clouds – and in fact all installations intended to scale well horizontally – have specific requirements; one of these is the ability to roll out huge amounts of hardware in a short time. If you then start with manual deployment, you can either look forward to all-nighters or give up straight away.

The good news is that you no longer need to maintain the network infrastructure by hand, because tools for automating data centers are common, affording you plenty of opportunities.

Here, I describe the options you can turn to without worry. The main focus is on Puppet and NetBox. Although NetBox, only recently released, might not focus primarily on automation, it does make an important contribution in terms of efficient data center organization.

The Industry Leader: Puppet

Puppet is by far the most widely used tool on Linux for automation. At least one Puppet module exists for almost every popular application, and for major league applications, several modules often vie for the user's favor. Classic Puppet modules are divided into two categories: those that come from the community, and those that are officially sanctioned by the vendor, Puppetlabs.

Puppetlabs noted years ago that automating the hardware for network tasks is an issue in genuine DevOps environments. In 2014, the vendor announced a cooperative agreement with several major network companies, including Cisco, Arista, Brocade, and Huawei. Since then, prebuilt Puppet modules have been available for devices by these vendors. Cisco itself offers the Cisco module in its GitHub repository [1]. The other vendors followed suit.

Unconventional Implementation

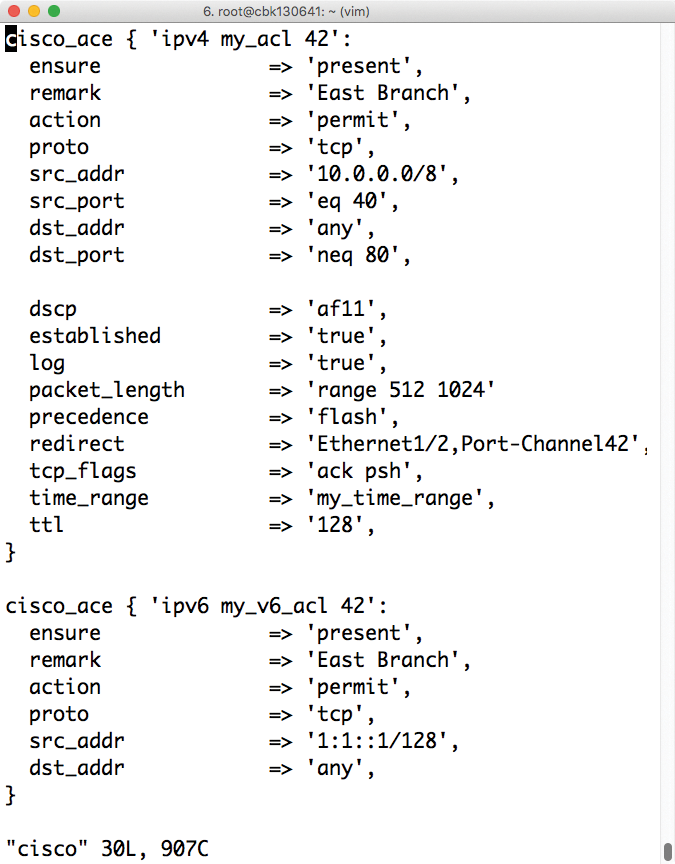

Because classical network devices are not open platforms, the way Puppet integration is implemented can seem strange at times. In Cisco's case, for example, Puppet integration is available for several of its Nexus series models. The Puppet NX-OS environment, which primarily consists of the Puppet agent, must be installed on the device. Admins of Linux environments can source packages for the Cisco devices directly from Puppet [2].

The setup must include a Puppet master server – masterless mode, which many choose for performance reasons, does not work. The Puppet module by Cisco is mandatory on each master, because it's the only way to set up meaningful configurations. The routine that follows is familiar to experienced Puppet users: The agent running on the device first registers with the master, then picks up the configuration stored there and sets up the device accordingly (Figure 1).

The feature scope of the Cisco module for Puppet is pretty impressive: It can adapt practically any important configuration option to suit your needs, including classic network parameters such as Border Gateway Protocol (BGP) configurations on routers, as well as device-level settings (e.g., the SNMP configuration) so that the switch can be queried automatically via SNMP in the next step. Of course, basic operations such as assigning individual ports to VLANs or configuring network trunks also work. All in all, Cisco integration for Puppet looks good.

Other Manufacturers on Par

The fact that Puppetlabs cooperates with vendors means that functioning Puppet integration is also available for devices by Huawei, Arista, and Brocade and differs only in details like the setup, which you still need to handle. For example, Network OS by Brocade needs a proprietary Brocade provider on the Puppet master, but no Puppet agent runs on the Brocade switch itself. The agent has to run on a separate host and then connects to the switches remotely to transfer a configuration. This is certainly not elegant, but it serves its purpose.

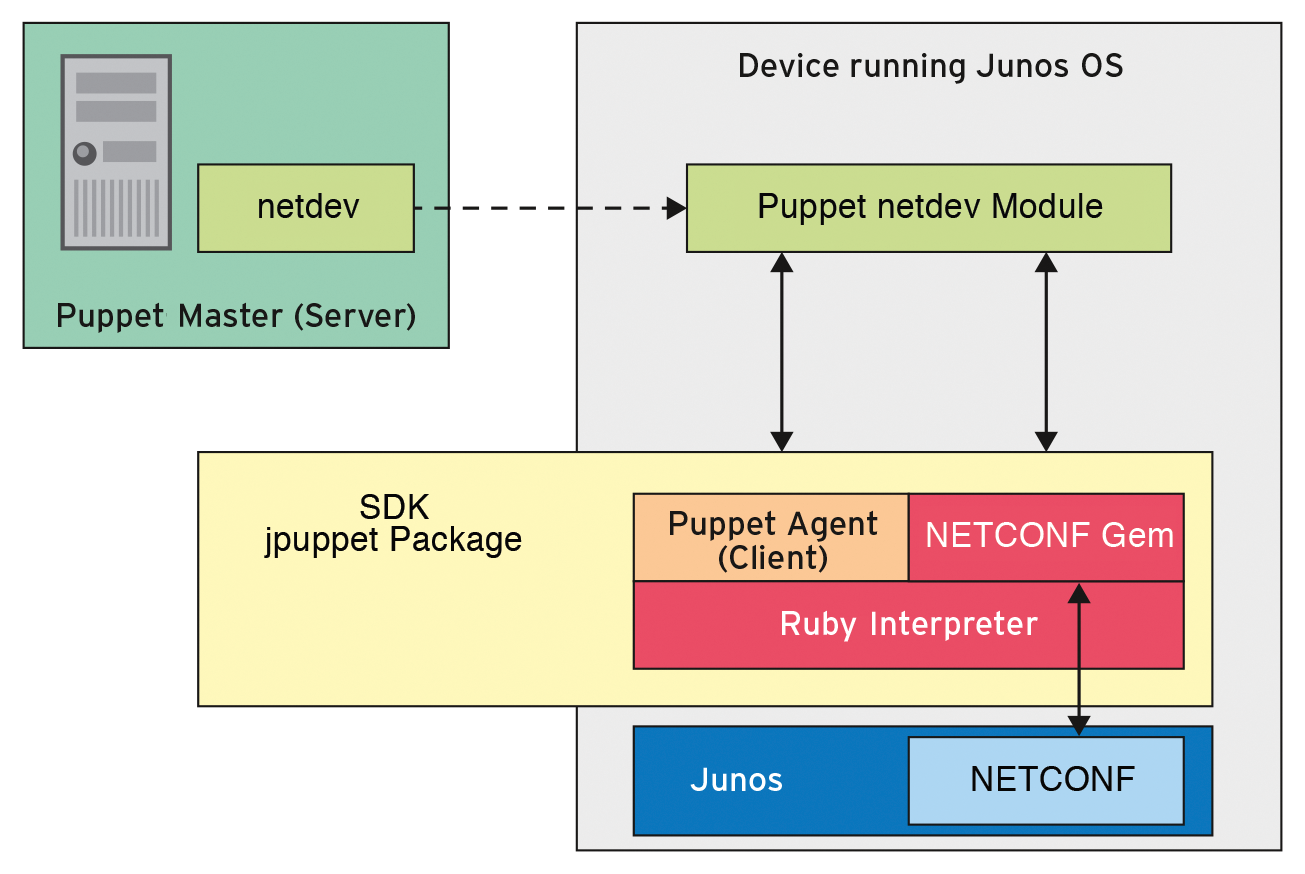

Puppetlabs and Juniper, at least so far, have not announced any kind of partnership publicly, but this does not detract from the Juniper-provided Puppet for Junos OS. The module for the Puppet master comes with a Puppet agent, jpuppet (Figure 2), which can be installed on current Junos OS releases. The rest is familiar: A corresponding configuration on the Puppet master ensures that the agent on the Junos OS device configures the device according to your specifications. More detailed information is available online [3].

Cisco with Chef and Ansible

The neatest Puppet integration is of little help to those who are already using some other kind of automation. Although it is theoretically conceivable to add Puppet to the existing solution, doing so would require several employees with expertise in both approaches. Fortunately, Puppet is not the only solution that has attracted commercial interest in network component deployment. Chef is also supported by several vendors.

More or less in parallel with the Puppet implementation for Nexus switches, Cisco also offers a matching Chef Cookbook. For this to work, the NX-OS Chef implementation containing the necessary components [4] needs to be installed on the Linux instances of the target devices.

Cisco has obviously not invested as much time in the work on the Chef Cookbook as it did on the Puppet module. In terms of functionality, the Chef tool is clearly inferior to its Puppet counterpart. However, the most important parameters on Nexus devices can be controlled with Chef, using the Cookbook offered by Cisco.

Cisco even has something to offer for Ansible: Current versions of NX-OS with the NX-API can be controlled via the nxos-ansible module, which is available on GitHub [5]. All Nexus 9000 series devices, as well as selected 3000 series devices, come with the NX-API.

Juniper Keeping Pace

Because the big two of the network scene, Cisco and Juniper, have been in a head-to-head battle for years, it comes as no surprise that Juniper is no slouch when it comes to Chef and Ansible. A separate Junos OS module for Ansible, available in the Ansible Galaxy [6], can be used to modify various configuration parameters. If you opt for Chef instead, you can install the components needed for Chef on your Junos using a matching Cookbook provided by Juniper. The manufacturer provides all of the Chef components online [7].

Because their devices are so widespread, Cisco and Juniper have taken care to ensure integration with the popular automation frameworks. The same cannot be said of smaller device manufacturers when it comes to support for Chef and Ansible; for their devices, you must turn to the vendor and the community for prebuilt solutions.

Devices by the various manufacturers are identical in one respect: The architecture is closed; that is, you face proprietary firmware provided by the device vendor in binary form only and are only allowed to enhance the firmware to the extent permitted by the vendor. For both Cisco and Juniper, you would make no progress with Puppet if the manufacturers did not offer Puppet agents for their environments. Many network architectures follow this classical approach, consequently leading to vendor lock-in.

If you are planning a new scalable setup on a greenfield, you have one distinct advantage in this regard: You can decide against the conventional approach from the outset and in favor of hardware that comes equipped by the manufacturer with software for DevOps environments.

Open Firmware Follows DevOps Principles

Earlier this year, Dell unveiled a networking operating system (OS10) based on Debian Linux that can be managed like any normal Linux server (see also the article on OS10 in this issue). Cumulus Linux [8] remains the unchallenged king of the hill for Linux switches: For years, the company has promoted a network infrastructure that can be managed automatically along with other hosts.

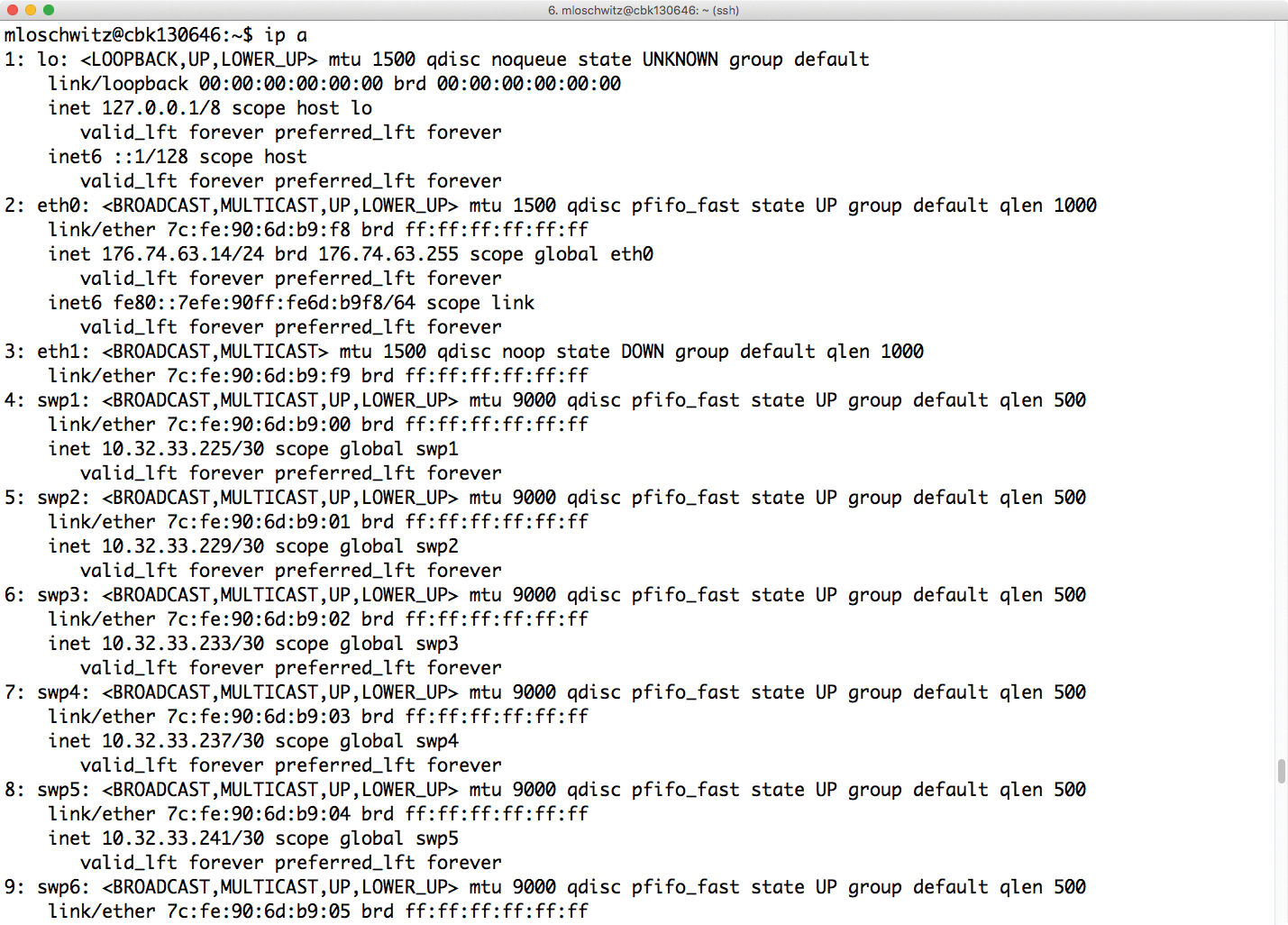

Cumulus is also based on Linux at heart and offers the usual configuration interfaces – primarily shell access via SSH. An abstraction layer in the device firmware, dubbed the switch abstraction layer, exposes a normal Netlink interface on the Linux side, and talks with the chipset installed on the switch on the other side.

Typing ip a on the switch shows at least the same number of interfaces as the switch has physical ports (Figure 3). Because Cumulus is Debian-based, virtually any Debian package can be installed on the Cumulus devices.

Freedom of Choice for Automation

Such a setup has many benefits: First, you can use the switch in a considerably more versatile way than is possible with typical proprietary solutions. Quagga or Bird routing software can be easily installed on a switch using apt-get – for example, to handle Layer 3 routing via BGP for leaf-spine architectures. Much more important, however, is that a Linux switch integrates easily into any automation solution. No longer do you need to check whether any suitable integration tools for Puppet, Chef, or another solution are provided by the company that built the switch. Instead, you can maintain the respective hardware with standard tools.

Layer 3 routing is a perfect example of this: Each port of the switch must be provided with an IP configuration, and a BGP daemon must deal with the announcement of the routes on the network. Ansible handles the task of configuring the network interfaces with its existing features. Connecting Quagga or Bird is handled through the use of Playbooks, many of which you can get on the web. Even a fully automated switch deployment should not comprise more than a few hundred lines of code. The situation is similar with Puppet or Chef, with which Linux switches can also be integrated.
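The two pieces of a Layer 3 port setup described above can be sketched in a few lines of Python. This is only an illustration of what an automation tool ends up writing to the switch, not an Ansible Playbook: the function names, port names, AS numbers, and addresses are all made up for the example, and the output assumes Debian-style interface stanzas and a Quagga-style bgpd.conf.

```python
from ipaddress import ip_interface


def render_interfaces(ports):
    """Render Debian-style interface stanzas for routed switch ports.

    `ports` maps a port name (e.g. "swp1") to an address in CIDR notation.
    """
    stanzas = []
    for name, cidr in ports.items():
        addr = ip_interface(cidr)  # raises ValueError on a malformed address
        stanzas.append(
            f"auto {name}\n"
            f"iface {name} inet static\n"
            f"    address {addr.with_prefixlen}\n"
        )
    return "\n".join(stanzas)


def render_bgpd(local_as, router_id, neighbors, networks):
    """Render a minimal Quagga-style BGP section.

    `neighbors` is a list of (peer_ip, peer_as) pairs,
    `networks` a list of prefixes to announce.
    """
    lines = [f"router bgp {local_as}", f" bgp router-id {router_id}"]
    for peer_ip, peer_as in neighbors:
        lines.append(f" neighbor {peer_ip} remote-as {peer_as}")
    for prefix in networks:
        lines.append(f" network {prefix}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical leaf switch with two uplinks to spines in AS 65000.
    print(render_interfaces({"swp1": "10.1.0.1/31", "swp2": "10.1.0.3/31"}))
    print(render_bgpd(65001, "10.0.0.1",
                      [("10.1.0.0", 65000), ("10.1.0.2", 65000)],
                      ["10.0.0.1/32"]))
```

In a real deployment, the automation tool would fill the same values into its own templates and restart the affected services; the sketch only shows how little data is needed per switch.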

The popularity of Cumulus has increased steadily in recent years, and you no longer need a magnifying glass to find compatible devices. Dell has some switches with Cumulus preinstalled, and the Mellanox Ethernet series (SN2700/SN2410) can also be purchased with Cumulus preinstalled. Several white-label switch manufacturers also rely on Cumulus.

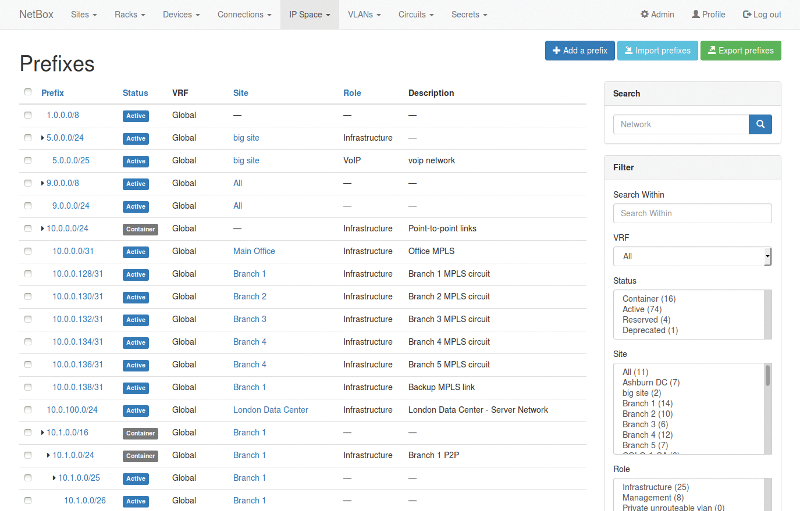

IPAM and DCIM with NetBox

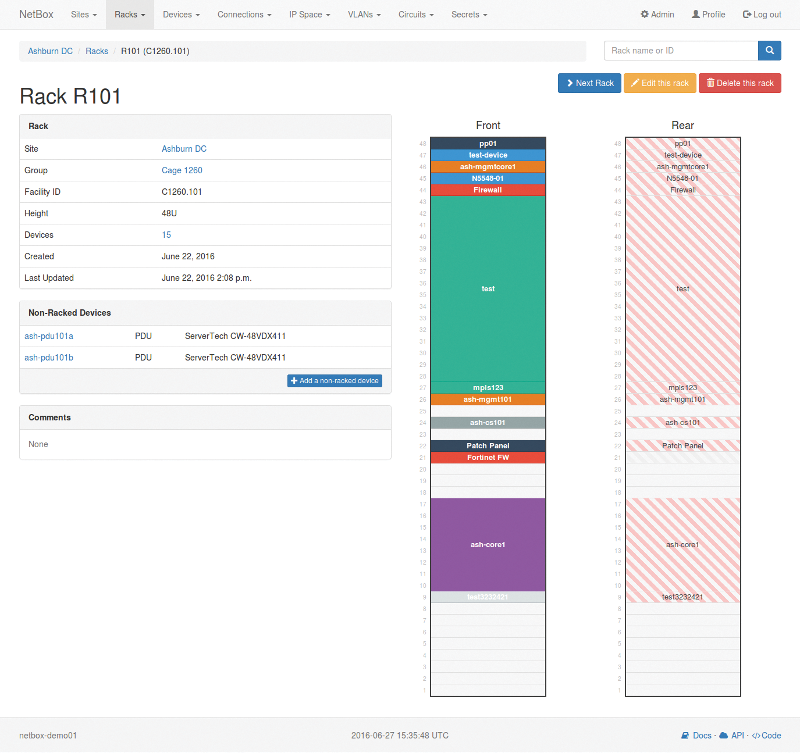

The next tool in the collection, NetBox [9], targets not only network infrastructure but also promises IP address management (IPAM; Figure 4) and data center infrastructure management (DCIM; Figure 5). Under the hood, NetBox is written in Python, and Django provides the web interface. NetBox stores its metadata in a PostgreSQL database in the background. Moreover, a web server gateway interface (WSGI)-compatible web server is necessary to operate the software (e.g., uWSGI or standard Apache with mod_wsgi enabled).

IPAM services are relatively clear-cut: IP address blocks and hosts are entered in NetBox, and the individual IP addresses are then assigned to specific hosts. On the IPAM side, you always have a list of the installed hardware with the IPs that are currently in use. Several IPs can be defined for each host – for example, if a host has an official IPv4, an official IPv6, and a management IP on a BMC interface. The IPs can be specified precisely down to the level of the interfaces in NetBox. Through the web interface, you discover which IP addresses a host currently possesses and which interface on the host belongs to which address.
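The bookkeeping described above can be illustrated with a toy model. The following sketch is not NetBox code; the class, its methods, and the host and interface names are invented for the example, and it uses only the ipaddress module from the Python standard library.

```python
from ipaddress import ip_network


class Prefix:
    """A block of addresses plus a record of who uses which one."""

    def __init__(self, cidr):
        self.network = ip_network(cidr)
        # Maps each assigned address to a (host, interface) pair.
        self.assignments = {}

    def assign_next(self, host, interface):
        """Hand out the lowest unused host address in the block."""
        for addr in self.network.hosts():
            if addr not in self.assignments:
                self.assignments[addr] = (host, interface)
                return addr
        raise RuntimeError(f"{self.network} is exhausted")

    def addresses_of(self, host):
        """Return {interface: address} for one host."""
        return {
            iface: str(addr)
            for addr, (owner, iface) in self.assignments.items()
            if owner == host
        }


if __name__ == "__main__":
    block = Prefix("192.0.2.0/29")
    block.assign_next("web01", "eth0")
    block.assign_next("web01", "bmc")
    print(block.addresses_of("web01"))
```

Because every address is stored together with its host and interface, both questions from the text (which addresses does a host have, and on which interface) can be answered from the same record.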

The tool comes from DigitalOcean, which developed NetBox behind closed doors for a while before making the program publicly available. Working for one of the first public cloud providers, the admins at DigitalOcean were confronted with a huge number of hosts to manage. NetBox is therefore aimed at operators of large infrastructure environments with hundreds of hosts. The IPAM not only fulfils organizational purposes, it also acts as a single source of truth for various services in the cluster. The deployment of new servers can be automated to a far greater extent if the IP for the new host is defined centrally and can be queried automatically.

DCIM as a RackTables Substitute

To fulfill the DCIM functionality, software needs to map dependencies between IP addresses, interfaces in hosts, and locations. The NetBox developers implemented these features and created a complete DCIM, almost as a side-effect of their in-house work. NetBox thus poaches on RackTables' territory: Although hosts including the hardware they contain can be managed in RackTables, and you can even assign IP addresses, RackTables lacks the IPAM component.

Basically, NetBox replaces two tools: RackTables [10] and the open source IPAM system NIPAP [11]. Moreover, NetBox easily maps the dependencies between hosts from DCIM and IP addresses from address management. If you delete such a host, NetBox automatically detects that the IP addresses assigned to this host are now free.
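Why a combined DCIM and IPAM store makes this possible can be shown with a small model. The sketch below is a simplification under stated assumptions, not the NetBox data model: the Inventory class and all names in it are hypothetical. The point is that free addresses are derived from the assignments, so deleting a device releases its addresses without a second bookkeeping step.

```python
from ipaddress import ip_address, ip_network


class Inventory:
    """Toy store that keeps devices and IP assignments side by side."""

    def __init__(self, cidr):
        self.network = ip_network(cidr)
        self.devices = {}  # device name -> rack label (the DCIM side)
        self.owner = {}    # address -> device name (the IPAM side)

    def add_device(self, name, rack):
        self.devices[name] = rack

    def assign(self, address, device):
        addr = ip_address(address)
        if device not in self.devices:
            raise KeyError(f"unknown device {device!r}")
        if addr not in self.network:
            raise ValueError(f"{addr} is outside {self.network}")
        if addr in self.owner:
            raise ValueError(f"{addr} is already assigned")
        self.owner[addr] = device

    def delete_device(self, name):
        del self.devices[name]
        # Drop every assignment that pointed at the deleted device.
        self.owner = {a: d for a, d in self.owner.items() if d != name}

    def free_addresses(self):
        """Addresses in the block that no existing device uses."""
        return [str(a) for a in self.network.hosts() if a not in self.owner]
```

With two separate tools, the delete step would have to be repeated by hand in the IPAM system; here it is a side effect of removing the device.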

NetBox Capabilities

The NetBox authors proudly stress that they have put much thought into how to manage data in NetBox. The outcome is an extended data model that distinguishes between objects of different types, with a type for each resource that NetBox can manage, such as data centers or sites, racks, devices (servers or switches), network interfaces, IP addresses, and VLANs, to cite a few examples. NetBox garnishes the management of IP addresses and hardware in the rack with additional features, all of which focus on infrastructure management.

Connections between two points have their own object type: circuit. The best data center in the world is of little use if it does not have a connection to the outside world, but such a connection has previously challenged DCIM and IPAM systems, especially when data sets include multiple data centers and the links between these data centers need to be shown.

NetBox also allows you to define different types of circuits, including Internet transit, out-of-band connectivity, peering, and private backhaul. NetBox thus even allows ISPs to map existing peering relationships to other providers.

Multitenant Capability

One killer feature in NetBox is its multitenant capability, which allows you to define levels and allocate them to organizations; NetBox can then map the dependencies between these organizations. In one possible scenario, a customer can rent part of a rack's capacity and in turn resell space in the rack commercially to others.

All objects in NetBox can be assigned to tenants, who can then be grouped. This capability is more of a logical division for the sake of clarity. For example, if you want to show customers and partners separately in NetBox, you can use tenancy for this purpose.

The NetBox developers specifically point out that they follow the Pareto principle, which states that 80 percent of the desired and required functionality can be achieved with 20 percent of the code. Or to put this another way: NetBox does not offer every single function that may be desirable in the IPAM and DCIM context. For example, you cannot use an already existing MySQL installation instead of PostgreSQL. In return, the NetBox codebase is small and manageable, so you do not need to do battle with a feature-bloated monster. The sleek design is also very conducive to performance.

Export Templates

With DCIM and IPAM playing an increasingly important role for providers, it will be necessary to exchange data with other services sooner or later. NetBox offers several options in this respect, the most important of which is based on export templates. For each object type, you create templates that define a specific output format. NetBox relies on the Django template language in this context, which closely resembles Jinja2 (i.e., the template language used by Ansible). Using predetermined variables, you can then access the objects from within these templates.

All values stored for an object can be used in the NetBox export templates. The export itself is ultimately initiated via the appropriate pages in the web interface. For example, it is possible to create configuration files for Nagios automatically in NetBox.
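The idea behind such an export can be sketched as follows. Note the substitution: this example uses string.Template from the Python standard library as a stand-in, not the Django template language that NetBox actually relies on, and the field names (name, address) and the generic-host template name are assumptions made for the illustration.

```python
from string import Template

# One Nagios host definition per device; $name and $address are
# placeholders that are filled in from each device record.
HOST_TEMPLATE = Template(
    "define host {\n"
    "    use        generic-host\n"
    "    host_name  $name\n"
    "    address    $address\n"
    "}\n"
)


def export_nagios(devices):
    """Render one host definition per device dictionary."""
    return "\n".join(HOST_TEMPLATE.substitute(device) for device in devices)


if __name__ == "__main__":
    # Hypothetical device records as they might come out of an inventory.
    print(export_nagios([
        {"name": "leaf01", "address": "192.0.2.11"},
        {"name": "leaf02", "address": "192.0.2.12"},
    ]))
```

The template stays the same no matter how many devices are exported, which is what makes the approach attractive for generating monitoring configuration from inventory data.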

If you like things colorful, you will find useful add-ons. External links let you integrate graphs into the NetBox interface – RRD data from an existing monitoring system can be displayed in a graphical interface in close proximity to the switches to which the data belongs.

The topology maps that NetBox generates are equally practical: Using a search box, you enter the devices you want to display on such a map. NetBox then automatically generates an attractive image that clearly shows how the individual devices are connected to each other – even down to the level of individual interfaces.

Of Course an API

NetBox reflects its cloud heritage in its RESTful API, which can be used to query values from the NetBox database via a standardized interface. In this way, any application that can talk to a RESTful API can integrate content from NetBox.

At the time this issue went to press, however, the API only supported read access. According to the developers, that will change soon with the introduction of API support for write access. If you want to try out read access right now, you will find a client written in Go that can communicate with NetBox [12]. As NetBox becomes more widespread, clients in other languages are likely to follow soon, with Python leading the way.
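A read-only query against such an API needs little more than an HTTP GET and a JSON parser. The following Python sketch uses only the standard library. The endpoint path, the device filter, and the shape of the response are assumptions that vary between NetBox versions, so check them against the API documentation of your installation; the hostname is a placeholder.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_url(base_url, device):
    """Build the query URL (path and filter name are assumptions)."""
    query = urlencode({"device": device})
    return f"{base_url.rstrip('/')}/api/ipam/ip-addresses/?{query}"


def parse_addresses(payload):
    """Extract the address strings from a JSON response body.

    Accepts either a bare list of objects or a dictionary that wraps
    the list in a "results" key (both shapes are assumed here).
    """
    data = json.loads(payload)
    items = data["results"] if isinstance(data, dict) else data
    return [item["address"] for item in items]


def fetch_addresses(base_url, device, timeout=10):
    """Query the API over HTTP and return the addresses of one device."""
    request = Request(build_url(base_url, device),
                      headers={"Accept": "application/json"})
    with urlopen(request, timeout=timeout) as response:
        return parse_addresses(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholder hostname; replace with your own NetBox instance.
    print(fetch_addresses("https://netbox.example.com", "leaf01"))
```

Keeping URL construction and response parsing separate from the network call makes it easy to adapt the sketch once the actual API layout of a given release is known.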