NFS and CIFS shares for VMs with OpenStack Manila

Separate Silos

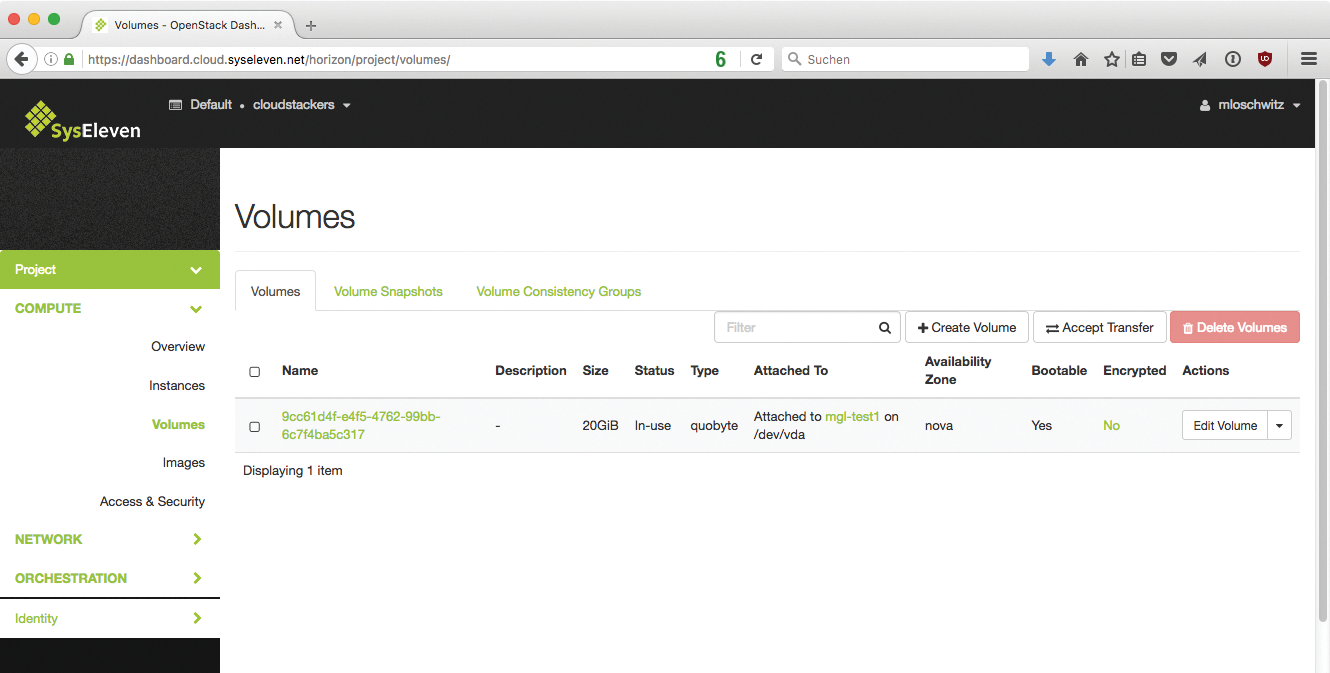

Those involved with OpenStack quickly get the impression that the subject of cloud storage has finally been solved, because at a key point in the setup, OpenStack Cinder makes sure virtual machines (VMs) are provided with storage in the form of persistent block devices (Figure 1). However, you will soon notice that Cinder falls short when persistent, shared storage, rather than just persistent storage, is required. In classic computing environments, NFS and CIFS provide this kind of shared storage, but Cinder has no solution whatsoever for this use case, leaving you out in the cold.

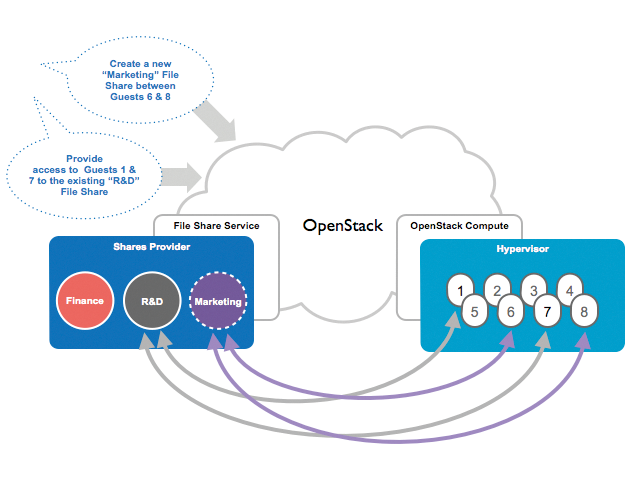

Yet, just as in classic setups, a cloud environment needs shared storage: Cloud customers should be able to access shared storage from several VMs, and even beyond the boundaries of their own project. One of OpenStack's biggest strengths is its modularity, so it is no wonder that a component has been created to address shared storage: OpenStack Manila [1]. The aim of this module is to let VMs in OpenStack use NFS and CIFS shares (Figure 2). In this article, I explain the project in detail, highlight the weaknesses in its implementation, and show which setups can be implemented in OpenStack with Manila.

Usage Scenarios

Why are shared filesystems important in clouds? After all, there are other ways to make files available to several VMs at the same time (e.g., Amazon's S3 protocol, with which applications store static user data in an object store). A classic example is web shop assets such as product images: They rarely change but are required by multiple clients at the same time.

Images are well suited to object storage because they are self-contained binary objects: Each image is stored in S3 and referenced from within the application by its full S3 path. The customer's web browser downloads the image directly from S3, and in the end, the website looks just as the provider intended.

Unfortunately, there is a snag when it comes to legacy applications. Web shops, for example, often assume that their assets reside on a central shared filesystem that the links on the website reference. This is usually NFS, and anyone who wants to move such an application to the cloud has several options.

One option is to modify the web shop software so that it loads its static data from S3-compatible storage. This may be the textbook answer, but it often fails in practice because web shops are frequently proprietary software, and the web shop operator doesn't even have access to the source code.

Even if the source code is available, adding S3 support isn't exactly easy: S3 modules might be available for common web scripting languages (e.g., PHP), but weeks or months go by before a large environment such as a web shop is completely converted and tested.

From the website operator's perspective, there are strong reasons not to change too much in the web application itself and instead to provide the services it expects, such as a shared filesystem, from outside.

Clash of Cultures: NFS and OpenStack

In principle, OpenStack and NFS can be operated together without any additional components. The simplest scenario is to start the VMs of a setup on a private network and run an NFS server on one of them.

However, what sounds good in theory is only really practical to a limited extent: Such a setup doesn't offer the option of also making the contents of the NFS server available to VMs that are not on the same private network. Specifically, this can mean the provider needs to keep these (identical) images in several locations on the cloud. Given the usual method of invoicing for clouds (pay per use), this isn't a very attractive option.

The problem illustrates the fundamental conflict between OpenStack and shared filesystems that Manila attempts to resolve: OpenStack offers no way to make filesystems available to clients across the boundaries of private networks. This is exactly what Manila aims to achieve; along the way, it also turns NFS and CIFS shares into qualified resources.

Qualified Resources

The second major difference from a DIY solution is hidden in the term "qualified resources": OpenStack Manila lets you manage NFS and CIFS shares directly at the OpenStack level. You can create and delete shares through the cloud APIs and configure access to them at the API level as well. Manila therefore takes care of operating the NFS server, so users no longer need to worry about it. In the best case, a single mouse click in the OpenStack dashboard is enough to create an NFS share that can then be connected to the individual VMs.
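
To make "qualified resources" concrete, the following Python sketch builds the JSON request bodies a client would send to the Manila REST API to create an NFS share and open it to a subnet. It only constructs payloads and sends nothing. The field names follow the Manila v2 API as I understand it (share_proto, size, and the allow_access action, which replaced os-allow_access in microversion 2.7); treat them as assumptions to verify against the API reference for your release. The helper functions are hypothetical.

```python
import json

# Assumption: Manila v2 API with microversion 2.7 or later, where the share
# action is called "allow_access" (earlier microversions used "os-allow_access").
MANILA_HEADERS = {"X-OpenStack-Manila-API-Version": "2.7"}


def create_share_body(name: str, proto: str, size_gb: int) -> dict:
    """Hypothetical helper: body for POST <manila-endpoint>/shares."""
    if proto not in ("NFS", "CIFS"):
        raise ValueError(f"unsupported protocol: {proto}")
    if size_gb < 1:
        raise ValueError("share size must be at least 1 GB")
    return {"share": {"name": name, "share_proto": proto, "size": size_gb}}


def allow_ip_access_body(cidr: str, level: str = "rw") -> dict:
    """Hypothetical helper: body for POST <manila-endpoint>/shares/<id>/action."""
    return {"allow_access": {"access_type": "ip", "access_to": cidr, "access_level": level}}


if __name__ == "__main__":
    print(json.dumps(create_share_body("shop-assets", "NFS", 10)))
    print(json.dumps(allow_ip_access_body("10.0.0.0/24", "ro")))
```

On the command line, the manila client from python-manilaclient exposes the same two operations as manila create and manila access-allow.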

Another advantage is that OpenStack resources can, in principle, be used directly by the various automation and orchestration solutions. For example, users can create an NFS share automatically in a template for Heat, the OpenStack orchestration service, which also starts many VMs at the same time and creates the matching networks. If NFS ran on a manually installed VM instead, this kind of control would be impossible.
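
A share can be declared in a Heat template right next to the VMs and networks. The sketch below generates a minimal HOT template as a Python dict and prints it as JSON, which, being a subset of YAML, Heat should accept as template input. It assumes Heat's OS::Manila::Share resource type with its share_protocol, size, and access_rules properties; the resource name web_assets and the subnet are placeholders, and depending on the back end, further properties such as a share network may be required.

```python
import json


def manila_share_template(resource_name: str, proto: str, size_gb: int, client_cidr: str) -> dict:
    """Build a minimal HOT template (as a dict) containing one Manila share."""
    return {
        "heat_template_version": "2015-10-15",
        "resources": {
            resource_name: {
                # Assumption: Heat's Manila share resource and its property names.
                "type": "OS::Manila::Share",
                "properties": {
                    "share_protocol": proto,
                    "size": size_gb,
                    "access_rules": [
                        {"access_to": client_cidr, "access_type": "ip", "access_level": "rw"}
                    ],
                },
            }
        },
    }


if __name__ == "__main__":
    template = manila_share_template("web_assets", "NFS", 10, "10.0.0.0/24")
    print(json.dumps(template, indent=2))
```

In a real stack, the same template would also contain the servers and networks, so the share is created and deleted together with the rest of the setup.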

Clutter of Concepts

Anyone starting out with OpenStack Manila will be faced with a tide of terms and names that have their own meanings in a Manila context. First and foremost is "the share": The share is the OpenStack resource you use to work at the OpenStack API level. Users create shares or delete them, take snapshots of shares, or restrict the access permissions for their own shares. Shares support various protocols, including CIFS and NFS.

The back end is closely tied to the share: In Manila, a back end is an instance of the Manila share service that communicates with an external storage solution. Depending on the driver, it works through share servers (i.e., Manila-specific servers that connect a back end to a share). Back ends may be any devices or services that provide storage on demand.

The share servers act as a bridge: They are usually actual VMs that the respective back end starts automatically based on user requests. Because a back end that manages its own share servers starts them itself, it can launch them in the corresponding virtual network from the outset, so that the target VMs can reach them.

Not all back ends can even deal with share servers. Many back ends for special storage devices, such as NetApp or EMC, instead focus on connecting shares to the target VMs without using a separate VM as an intermediate station. These back-end drivers are marked accordingly, and Manila automatically makes sure that other network settings are used when connecting VMs with these shares.
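
The relationship between shares, back ends, and share servers can be sketched as a small data model. Manila's real configuration option for this distinction is driver_handles_share_servers; everything else here (the class names and the provision function) is a hypothetical simplification, not Manila's internal code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Backend:
    name: str
    # Mirrors Manila's driver_handles_share_servers (DHSS) setting.
    handles_share_servers: bool


@dataclass(frozen=True)
class ShareServer:
    backend: str
    tenant_network: str


@dataclass(frozen=True)
class Share:
    name: str
    proto: str
    backend: Backend
    server: Optional[ShareServer] = None


def provision(name: str, proto: str, backend: Backend, tenant_network: str) -> Share:
    """Hypothetical: attach a share server only if the back end manages them."""
    if backend.handles_share_servers:
        # e.g., the generic back end: a service VM placed in the tenant network
        server = ShareServer(backend=backend.name, tenant_network=tenant_network)
        return Share(name, proto, backend, server)
    # e.g., an appliance driver: the storage system exports the share itself
    return Share(name, proto, backend)
```

For a back end with share servers, the share carries a reference to the server in the customer's network; for an appliance-style driver, server stays None and Manila has to arrange connectivity differently.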

A Fork of Cinder

Manila's development began as a fork of OpenStack Cinder, the block storage component. This is not surprising: Although Manila's objective is to manage shared filesystems, the two types of storage have fundamental similarities, such as providing storage to specific VMs. Both Cinder and Manila need to connect storage to VMs by fixed assignment: to one VM in Cinder's case or, with Manila, to several.

Manila and Cinder also handle storage in the background in a similar way. Neither service provides storage itself, so neither has its own disk space. Instead, both assume that the admin provides configurable storage, which they split into smaller pieces as required and pass on to individual VMs.

Unsurprisingly, Manila's design is modeled on Cinder's. Like Cinder, Manila has an API (the typical OpenStack solution) through which users send commands to the service. A separate component, the scheduler, selects one of the available storage back ends and hands the request to the manila-share service, which configures a filesystem share that is then available to VMs. However, Manila is integrated much more closely with the VMs in OpenStack than Cinder users would know or expect.
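
The scheduler step can be illustrated with a toy version of the filter-and-weigh approach Manila inherited from Cinder: First discard back ends that cannot serve the request, then rank the rest. The back-end names, the single capacity weigher, and the data structure are assumptions for illustration; Manila's actual scheduler uses configurable filter and weigher classes and evaluates many more capabilities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackendStats:
    name: str
    protocols: frozenset
    free_gb: int


def schedule(backends, proto: str, size_gb: int) -> BackendStats:
    """Toy filter-and-weigh scheduler (hypothetical, greatly simplified)."""
    # Filter: keep back ends that speak the protocol and have enough free space.
    candidates = [b for b in backends if proto in b.protocols and b.free_gb >= size_gb]
    if not candidates:
        raise LookupError(f"no back end can serve {size_gb} GB via {proto}")
    # Weigh: prefer the back end with the most free capacity.
    return max(candidates, key=lambda b: b.free_gb)


if __name__ == "__main__":
    pool = [
        BackendStats("generic", frozenset({"NFS", "CIFS"}), 200),
        BackendStats("appliance", frozenset({"NFS"}), 500),
    ]
    print(schedule(pool, "NFS", 50).name)   # appliance
    print(schedule(pool, "CIFS", 50).name)  # generic
```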

The Delicate Issue of Network

These differences once again stem from the delicate issue of software-defined networking (SDN). OpenStack has its own component, Neutron, that takes care of all aspects of SDN. Typical OpenStack clouds strictly separate the private networks of individual customers, on the assumption that one customer's network traffic never comes into contact with another customer's traffic.

This is not a problem for Cinder: Cinder communicates directly with Nova, the OpenStack virtualization component, and attaches new block devices to VMs via the hypervisor's hot-plug function (KVM in most cases). It is therefore enough if Nova and Cinder can communicate with each other over the cloud's management network.

Manila, on the other hand, relies on the VMs being able to reach an NFS share over a private network, and depending on the setup, the share might sit in a different private network. OpenStack offers several ways to solve this problem. Option one is to create a shared network that all connected VMs can access. Option two is to allow access via an OpenStack router.

Option three builds on the approach of attaching a share directly to a private network so that only VMs within the network can access the share. This option may be the safest because it prevents unauthorized external access; however, it also makes it impossible to use shares in several projects at the same time.

Which network options are available depends on the back end in use. Drivers that support share servers are inherently flexible: A separate VM is launched and connected to the existing virtual networks. Anyone who uses one of the manufacturer-specific drivers instead must do without this luxury. The storage network and the private customer networks are often on different layers of the network stack (layer 2 vs. layer 3), so Manila has to build a bridge, such as via an OpenStack router or a previously specified shared network.
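
The decision described above can be summarized as a small function. It is a hypothetical sketch of the reasoning, not Manila's code: The flag mirrors driver_handles_share_servers, the shared-network and router parameters stand for whatever the admin has configured in advance, and the order in which the two fallbacks are tried is an arbitrary choice.

```python
from enum import Enum
from typing import Optional


class Path(Enum):
    SHARE_SERVER = "share server with a port in the tenant network"
    SHARED_NETWORK = "pre-defined shared network"
    ROUTER = "OpenStack router between storage and tenant network"


def choose_path(handles_share_servers: bool,
                shared_network: Optional[str] = None,
                router: Optional[str] = None) -> Path:
    """Hypothetical decision logic for how VMs reach a share."""
    if handles_share_servers:
        # The driver can place its own VM directly in the customer's network.
        return Path.SHARE_SERVER
    # Vendor drivers export from the storage system, so a bridge is needed.
    if shared_network:
        return Path.SHARED_NETWORK
    if router:
        return Path.ROUTER
    raise ValueError("no share server, shared network, or router configured")
```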

Generic Back End

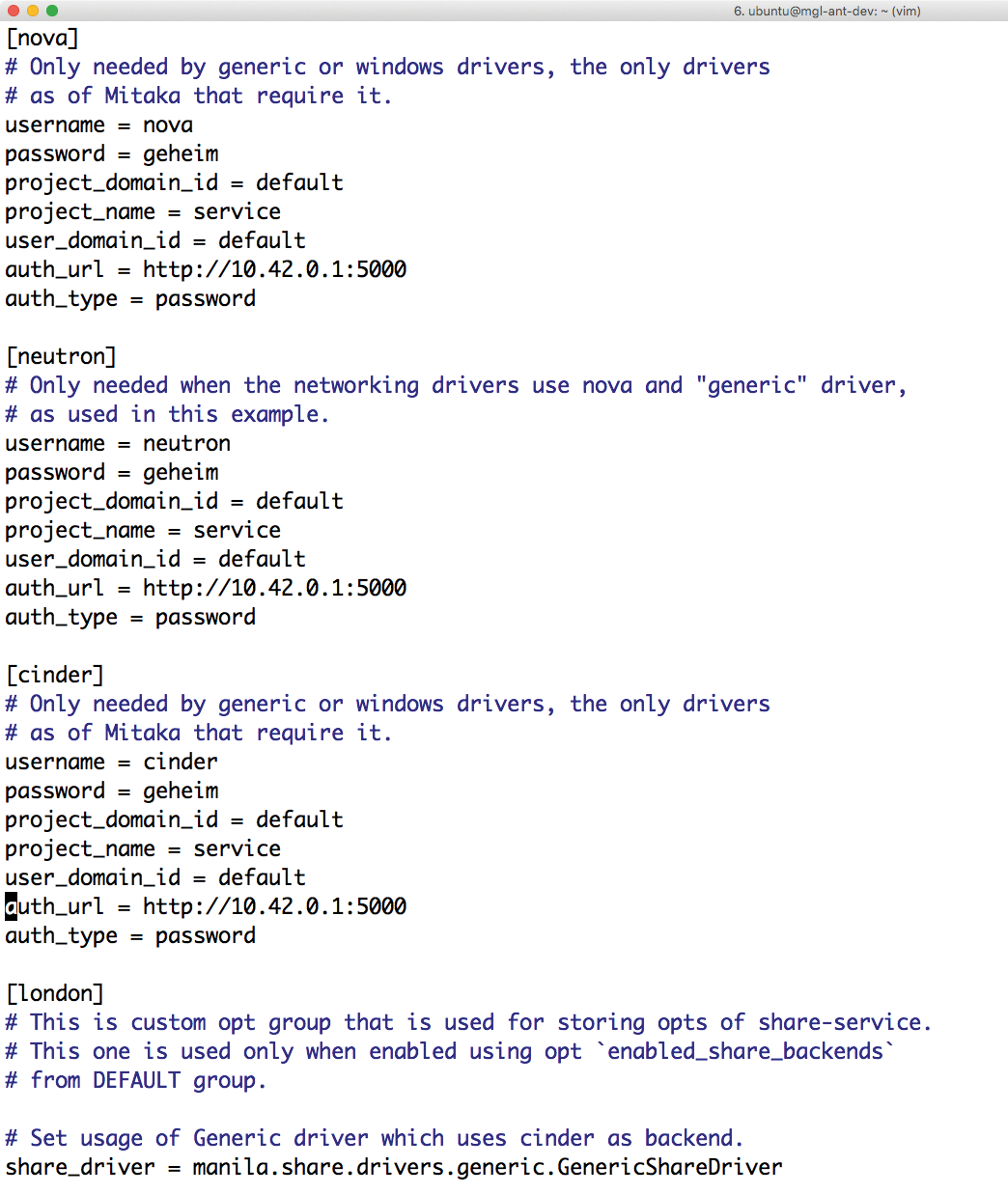

Detractors like to accuse the OpenStack developers of reinventing the wheel at every opportunity, but the Manila developers prove them wrong: Anyone who wants to use Manila without special storage hardware can use the generic back end, which builds directly on Cinder when configured correctly (Figure 3). This back end creates a volume in Cinder according to the configuration the cloud admin has set for Manila. Nova then launches a VM that uses this volume.

In this scenario, Nova relieves Manila of all the network work: The freshly launched VM simply receives its own port in the virtual customer network. The back end then configures a service for the desired protocol (usually NFS or CIFS) on that VM and finally returns the path for accessing the created share. All that remains is the obligatory mount command on the VMs that want to use the shared filesystem.
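
The generic back end's workflow can be traced with a simple simulation that records each step and returns an export location. This is a hypothetical model, not the driver's code: It calls no real Cinder or Nova API, and the /shares/<id> export path layout is an assumption chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GenericBackendSim:
    """Hypothetical simulation of the generic back end's provisioning steps."""
    steps: List[str] = field(default_factory=list)

    def create_share(self, share_id: str, proto: str, size_gb: int,
                     tenant_net: str, service_ip: str) -> str:
        if proto not in ("NFS", "CIFS"):
            raise ValueError(f"unsupported protocol: {proto}")
        self.steps.append(f"cinder: create {size_gb} GB volume for {share_id}")
        self.steps.append(f"nova: boot service VM with a port in {tenant_net}")
        self.steps.append("nova: attach the volume to the service VM")
        self.steps.append(f"service VM: create filesystem, export via {proto}")
        # Return an export location in the usual notation for each protocol.
        if proto == "NFS":
            return f"{service_ip}:/shares/{share_id}"
        return f"\\\\{service_ip}\\{share_id}"
```

On a client VM, an NFS location such as 10.0.0.5:/shares/s1 would then be mounted with mount -t nfs 10.0.0.5:/shares/s1 /mnt/assets.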

Manufacturer-Specific Back Ends

The generic back end naturally creates a large amount of overhead in the process; the service VM alone eats up resources and, depending on the invoicing model, costs hard cash. Many storage manufacturers therefore have a vested interest in providing Manila users with alternative drivers for their products. For example, the NetApp driver for Manila integrates the shares of an ONTAP instance directly into Manila and makes them available to VMs. EMC and Hewlett Packard Enterprise follow similar approaches for their storage solutions (Isilon and 3PAR, respectively).

IBM's General Parallel File System (GPFS) has a back-end driver, as do GlusterFS and the POSIX-compatible Quobyte data center filesystem. Manila thus covers practically every OpenStack setup: If your storage solution has its own driver, you use that driver; otherwise, the Cinder-based generic driver is an option.

Using Shares Efficiently

Creating a share is one thing, but managing it efficiently is another: In everyday operations, you might need to enlarge or shrink an existing share. Admins might also want to create snapshots of a share for backup purposes so they can restore from them later.

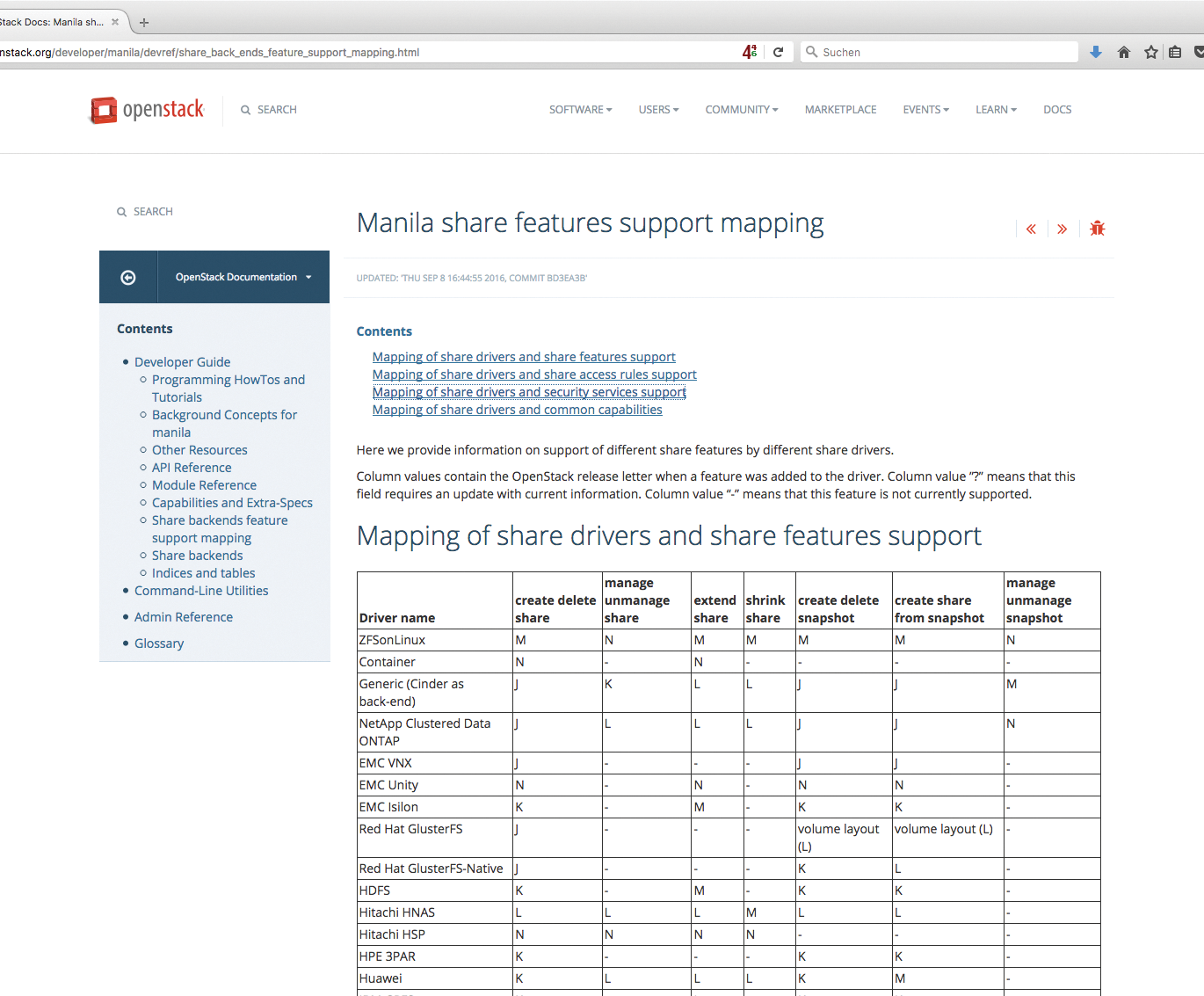

The good news is that the Manila code already provides these features. The bad news is that which features are actually available depends heavily on the driver for the back-end storage in use. Technically, this could hardly be otherwise: How is Manila supposed to create snapshots on an ONTAP device from NetApp without using NetApp's own tools?

In practical terms, however, this just means that the quality of the Manila driver for the respective back-end storage is crucial. It is a good tradition that companies that want to connect to Manila with their storage solution maintain their Manila drivers themselves. Anyone who wants to use the corresponding features effectively is best off taking a look at the mapping of share drivers and features [2] in the Manila documentation when planning their setup to make sure the storage driver really fits the requirements (Figure 4).
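
Checking a candidate driver against your requirements during planning amounts to a set difference. The driver names and capability sets below are hypothetical placeholders, not real driver data; the actual values have to come from the share-driver feature mapping in the Manila documentation.

```python
from typing import Dict, Iterable, Set

# Hypothetical capability data for illustration only; take real values from
# the share-driver feature mapping in the Manila documentation.
DRIVER_FEATURES: Dict[str, Set[str]] = {
    "vendor_driver_a": {"create_delete_share", "extend", "shrink", "snapshot"},
    "vendor_driver_b": {"create_delete_share", "extend"},
}


def missing_features(driver: str, required: Iterable[str]) -> Set[str]:
    """Return the required features the given driver does not offer."""
    return set(required) - DRIVER_FEATURES.get(driver, set())


if __name__ == "__main__":
    needs = ["extend", "shrink", "snapshot"]
    for name in DRIVER_FEATURES:
        gaps = missing_features(name, needs)
        print(name, "OK" if not gaps else f"missing: {sorted(gaps)}")
```

An unknown driver is treated as offering nothing, so every requirement shows up as missing rather than being silently assumed.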

Matters of Security

The concept of access via a shared network raises issues in the setups where the back end does not manage its own share servers, because these drivers require either shared networks or gateway functionality – especially in those setups that are actually designed for strict separation of networks.

That raises an important question: How can unauthorized access be prevented? As long as purely virtual private networks are used, the SDN solution takes care of it; however, a shared network bypasses precisely this mechanism. The Manila developers' answer is an IP-based ACL system: For each share, you can define the IP address range from which access is allowed. At the extreme, a user can allow access from a single IP address only.
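
The matching semantics of such IP-based rules can be shown with Python's standard ipaddress module. In Manila, the back end enforces the rules on the export itself; this sketch, with its hypothetical is_allowed function, only illustrates how a client address is checked against CIDR ranges and single addresses.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, rules: Iterable[str]) -> bool:
    """True if client_ip falls inside any rule (CIDR range or single address)."""
    addr = ipaddress.ip_address(client_ip)
    # strict=False tolerates host bits in a rule such as "10.0.0.1/24";
    # a bare address like "192.168.5.17" becomes a /32 network.
    return any(addr in ipaddress.ip_network(rule, strict=False) for rule in rules)


if __name__ == "__main__":
    rules = ["10.0.0.0/24", "192.168.5.17"]
    print(is_allowed("10.0.0.42", rules))     # True
    print(is_allowed("10.0.1.42", rules))     # False
    print(is_allowed("192.168.5.17", rules))  # True
```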

Security Plus

In theory, even if a client can reach a Manila share, that doesn't mean it can actually use it, because Manila implements an additional access system based on login and password, with which potential clients first need to register. Again, the quality of the implementation depends heavily on the capabilities of the respective storage driver. The framework in Manila itself is as flexible as possible: LDAP, Kerberos, and Active Directory are available. Using these protocols, admins can define a security service that the respective storage driver needs for communication.

So much for the framework; support for these security services among the available drivers is another matter. Only NetApp's ONTAP driver supports all three. The driver designed for CIFS can at least be addressed via Active Directory. The generic driver, which supports none of the three mechanisms, comes off badly. In such cases, the admin ultimately has no choice but to switch off security service control; this might make the setup easier, but it is a setback in terms of security.
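
The support situation described above can be expressed as a lookup that tells an admin which of the site's directory services a given driver can use. The three type names correspond to Manila's security service types; the driver keys are illustrative labels, and the support sets simply encode what this article states, so they may be outdated for current releases.

```python
from typing import Dict, Iterable, List, Set

# Security service types Manila knows about.
SECURITY_SERVICE_TYPES = {"ldap", "kerberos", "active_directory"}

# Driver support as described in this article; keys are illustrative labels.
DRIVER_SUPPORT: Dict[str, Set[str]] = {
    "netapp_ontap": {"ldap", "kerberos", "active_directory"},
    "cifs_driver": {"active_directory"},
    "generic": set(),
}


def usable_security_services(driver: str, site_services: Iterable[str]) -> List[str]:
    """Security services present at the site that the driver can actually use."""
    services = set(site_services)  # materialize once; the input may be an iterator
    unknown = services - SECURITY_SERVICE_TYPES
    if unknown:
        raise ValueError(f"unknown security service type(s): {sorted(unknown)}")
    return sorted(DRIVER_SUPPORT.get(driver, set()) & services)
```

An empty result means the admin is in exactly the situation described above: The share can only be run with security service control switched off.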

On the Dashboard

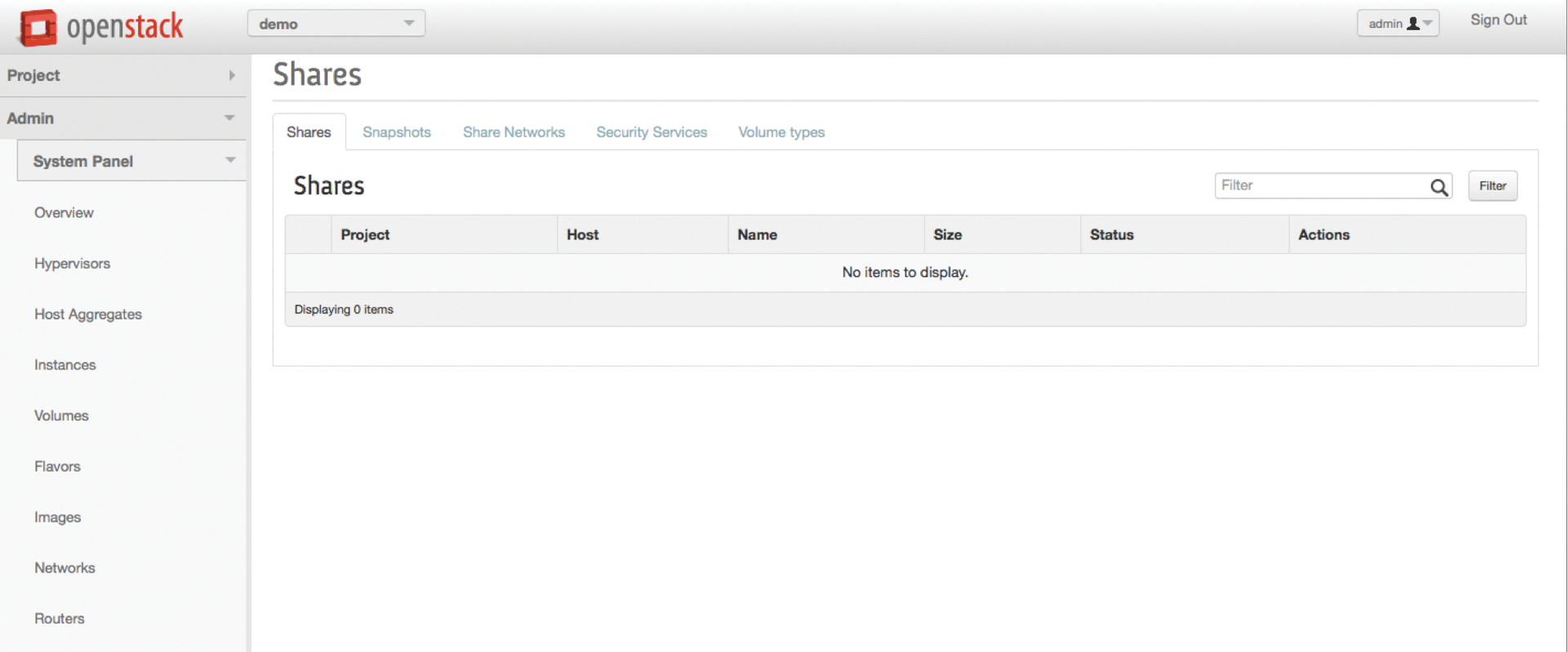

Even though everything in the brave new world of the cloud can be controlled through the APIs of the OpenStack services, many admins feel more comfortable with graphical access to unfamiliar technology, at least in the beginning. This is where the OpenStack dashboard, Horizon, comes in. However, anyone who installs the vanilla version of the dashboard will have to do without Manila, because the Manila UI plugin still hasn't made it into the official Horizon source code.

The problem can be solved, but the solution involves installing the Manila UI module in Horizon manually. Admins who have completely automated their cloud might need to build a package of the Horizon plugin for their own system. Anyone with experience in building packages will not encounter insurmountable problems, but it cannot be denied that this leaves a bitter taste (Figure 5).

Conclusions

Manila is already an almost archetypal OpenStack solution: Its core design is sound, and Manila is in a position to provide OpenStack users with real added value, because sooner or later, many cloud users will need a shared filesystem.

Manila is far from perfect from an admin's perspective, though. As is typical of OpenStack, the documentation is rather incomplete and difficult to understand. Various pitfalls include incomplete drivers for storage by large manufacturers, complex configuration work, and the missing UI module for Horizon.

On the positive side, NetApp deserves special mention. Many of Manila's developers work for NetApp, and with its driver for the company's own ONTAP devices, NetApp has presented almost a blueprint for a perfect back-end driver. Drivers for other solutions are more appearance than substance.

Nevertheless, users can hope for improvement – Manila is now following the official OpenStack release cycles. Furthermore, the solution is continuing to attract new developers, meaning that more development power is available. However, it might still take a while before Manila is level with the established standard components of OpenStack, such as Neutron, Nova, and Cinder.