Safeguard and scale containers

Herding Containers

Since the release of Docker [1] three years ago, containers have not only been a perennial favorite in the Linux universe, but native ports for Windows and OS X also garner great interest. Where developers were initially only interested in testing their applications in containers as microservices [2], market players now have initial production experience with the use of containers in large setups – beyond Google and other major portals.

In this article, I look at how containers behave in large herds, what advantages arise from this, and what you need to watch out for.

Herd Animals

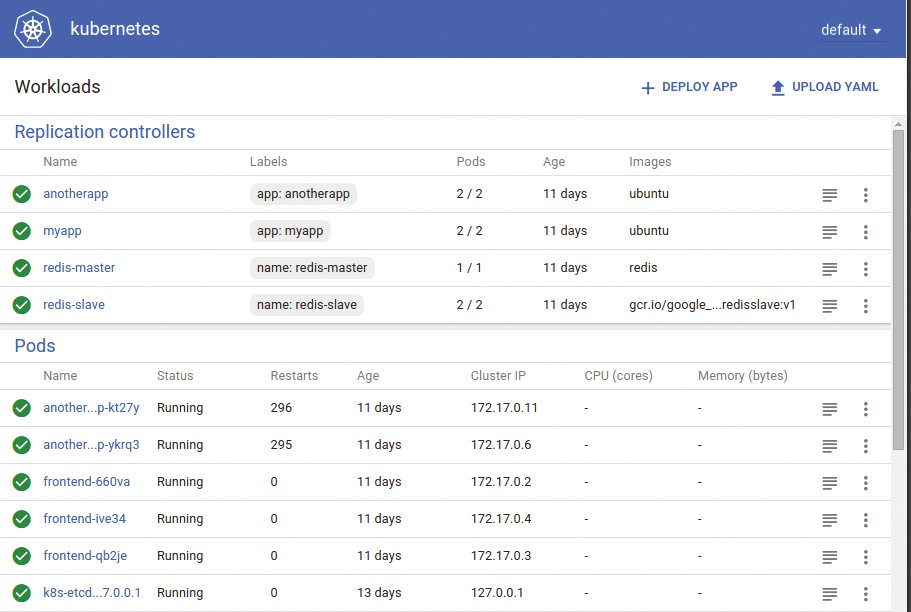

Admins clearly need to orchestrate the operation of Docker containers in bulk, and Kubernetes [3] (Figure 1) is a two-year-old system that does just that. As part of Google Infrastructure for Everyone Else (GIFEE), Kubernetes is written in Go and available under the Apache 2.0 license; the stable version when this issue was written was 1.3.

The source code is available on GitHub [4]; git clone delivers the current master branch. It is advisable to use git checkout v1.3.0 to retrieve the latest stable release (replace v1.3.0 with the latest stable version). If you have the experience or enjoy a challenge, you can try a beta or alpha version.

Typing make quick-release builds a quick version, assuming that both Docker and Go are running on the host. I was able to install Kubernetes within a few minutes with Go 1.6 and Docker 1.11 in the lab. However, since version 1.2.4, you have to resolve a minor niggle by deleting the $CDPATH environment variable using unset CDPATH to avoid seeing error messages.

What is more serious from an open source perspective is that parts of the build depend on external containers. Although you can assume that all downloaded containers come from secure sources – if you count Google's registry to be such – the sheer number of containers leaves you with mixed feelings, especially in high-security environments.

A build without preinstalled containers shows that it is possible to install all the components without a network connection, but the Make process fails when packaging the components for kube-apiserver [5] and kubelet [6]. For a secure environment, you might prefer to go for a release that uses only an auditable Dockerfile-based repository. (See also the "Runtimes and Images" box.)

Cluster To Go

After the install, you can set up a test environment in the blink of an eye: (1) select a Kubernetes provider and (2) fire up the cluster:

    export KUBERNETES_PROVIDER=libvirt-coreos
    cluster/kube-up.sh

After a few minutes, you should have a running cluster consisting of a master and three worker nodes. Alternatively, the vagrant provider supports an installation under VirtualBox on Macs [7].

All Aboard

Kubernetes's plan is to contain all the components required to create your own PaaS infrastructure out of the box. It automatically sets up containers, scales them, self-heals, and manages automatic rollouts and even rollbacks.

To orchestrate the storage and network, Kubernetes uses storage, network, and firewall providers, so you first need to set these up for your home-built cloud. If you want to build deployment pipelines, Kubernetes helps with a management interface for configurations and passwords and supports DevOps in secure environments with compliance – and without a password if you have more than one configuration in a repository.

Kubernetes promises – and it is by this that it must be judged – that you will no longer need to worry about the infrastructure, only about the applications. There is even talk of ZeroOps, an advancement on DevOps. Of course, that will still take a long time. Ultimately, it is just like any other technology: For things to look easy and simple, someone needs to invest time and money.

Pods and More

Pods [11] are the smallest unit of deployment: groups of containers that share a single fate. Kubernetes always creates the containers of a pod on the same node. They share an IP address, can share access to the filesystem, and die together when a container in the pod breathes its last breath. A YAML file describes a pod; Listing 1 defines one for a simple Nginx server.

Listing 1: nginx_pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80

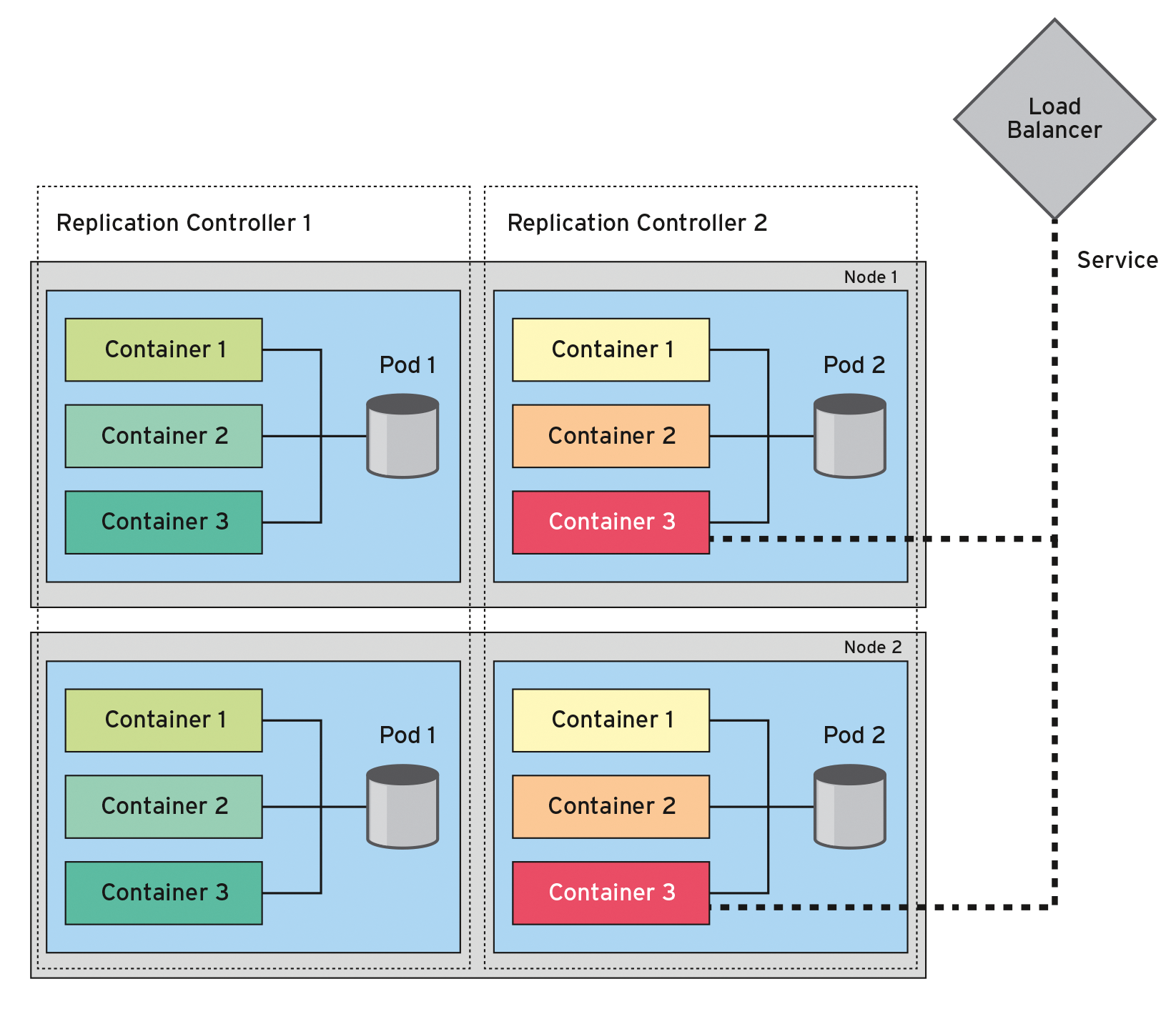

A replication controller [12], however, guarantees that a certain number of pods are always running. Listing 2 shows what this looks like in YAML format. Typing kubectl scale lets you change the number of active instances retroactively; you can use kubectl autoscale to define an autoscaler as a function of the system load. The autoscaler boots up instances at set intervals as the load on the system increases, and if it drops, it sends the instances back to sleep.

Listing 2: nginx_repl.yaml

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nginx
    spec:
      replicas: 3
      selector:
        app: nginx
      template:
        metadata:
          name: nginx
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
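The autoscaler that kubectl autoscale defines can also be written down as a manifest. The following is a minimal sketch, assuming the autoscaling/v1 API is available on the cluster and that it targets the replication controller named nginx from Listing 2; the replica bounds and the CPU target are illustrative values, not recommendations.

```yaml
# Hypothetical autoscaler for the replication controller from Listing 2.
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
spec:
  scaleTargetRef:
    apiVersion: v1
    kind: ReplicationController
    name: nginx
  minReplicas: 2                       # assumed lower bound
  maxReplicas: 10                      # assumed upper bound
  targetCPUUtilizationPercentage: 80   # assumed CPU target
```

CPU-based scaling of this kind generally relies on the containers declaring CPU requests. A one-off manual change remains possible with kubectl scale rc nginx --replicas=5.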

Services (Figure 2) [13] (e.g., an Nginx service [14]) create a unit out of a logical set of pods that offer the same function. They decouple the running pods from the services by spanning a transparent network across all the hosts. Each node can reach the services, even if no matching pod is running on that node.
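As a rough illustration of how a service groups pods, the sketch below selects every pod that carries the app: nginx label used in Listing 2. The service name and the port numbers are assumptions chosen to match that listing.

```yaml
# Minimal service sketch; selects pods by the label from Listing 2.
apiVersion: v1
kind: Service
metadata:
  name: nginx          # assumed service name
spec:
  selector:
    app: nginx         # must match the pod labels
  ports:
  - port: 80           # port the service exposes inside the cluster
    targetPort: 80     # containerPort of the Nginx pods
```

Because the selector works on labels rather than on pod names, pods can come and go without the service definition changing.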

Pets vs. Cattle

To populate the Kubernetes architecture meaningfully, you need to bear some fundamental things in mind, including distinguishing between cattle and pets [15], which has played a role in the DevOps area for quite a long time. While you lovingly tend your pets, you eat the cattle for dinner. The cattle are all stateless processes, whereas the pets are the data in the databases.

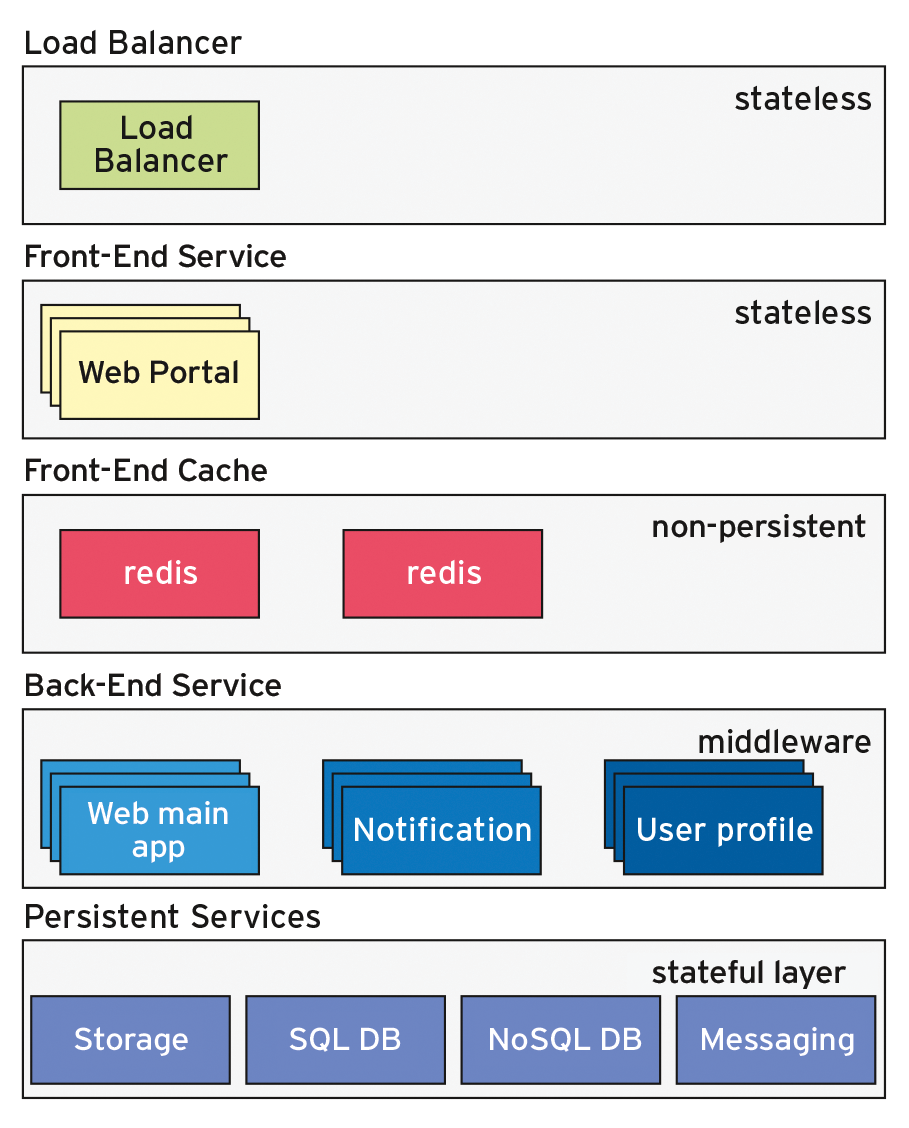

The pod approach only helps you deal with cattle. Without any further action, containers do not persist; thus, you will want to design pods as stateless processes, whereas databases and all other persistent data need a storage back end (Figure 3) to store the data so that they persist beyond the lifespan of the pod.

Persistent Data

Therefore, how should you best handle the data? The simplest solution might be to rely on the cloud provider; that is, use the storage or database services provided. However, this does involve the risk of provider lock-in, which you need to weigh against the expense you save.

You can install databases on existing storage with a ReplicationController and replicas: 1. In this case, the data resides on the storage back end. If the container restarts on a different host, it can still access the existing data. This requires a cross-host storage provider. In the simplest case, NFS is used; GlusterFS and Ceph are also suitable. Kubernetes supports a variety of providers.
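A single-replica database on NFS might look roughly like the following sketch. The image name, the NFS server, the export path, and the mount path are all hypothetical placeholders; substitute the values of your own database and storage back end.

```yaml
# Sketch: one database pod whose data lives on an NFS export.
apiVersion: v1
kind: ReplicationController
metadata:
  name: db
spec:
  replicas: 1                        # exactly one database instance
  selector:
    app: db
  template:
    metadata:
      labels:
        app: db
    spec:
      containers:
      - name: db
        image: example/database:1.0  # hypothetical image
        volumeMounts:
        - name: data
          mountPath: /data           # assumed data directory
      volumes:
      - name: data
        nfs:
          server: nfs.example.com    # hypothetical NFS server
          path: /exports/db          # hypothetical export
```

If the pod is rescheduled to another node, the replacement mounts the same export and finds the existing data.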

If you want to work with your own hardware, you can even set up local disks. With the help of labels, you can uniquely assign pods to nodes. The drawback of this approach is the replication of the databases: The existing SQL and NoSQL databases, as well as messaging systems, each require a separate solution.
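Assigning a pod to a node with a local disk can be sketched with a nodeSelector and a hostPath volume. The label disk: local-ssd, the image, and both paths are assumptions for illustration; the label would first have to be attached to the node, for example with kubectl label nodes.

```yaml
# Sketch: pin a pod to a labeled node and use a directory on its local disk.
apiVersion: v1
kind: Pod
metadata:
  name: db-local
spec:
  nodeSelector:
    disk: local-ssd                # assumed node label
  containers:
  - name: db
    image: example/database:1.0    # hypothetical image
    volumeMounts:
    - name: data
      mountPath: /data             # assumed data directory
  volumes:
  - name: data
    hostPath:
      path: /srv/db-data           # hypothetical directory on the node
```

The assignment is only unique if exactly one node carries the label.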

Resources and Network

Docker supports almost all types of resource management under Linux. In practice, Kubernetes does not pass all of these controls through [16]; version 1.3 only exposes the CPU and memory resources. If you want to use this to tame your Hadoop distributions, for example, you will be disappointed because of a lack of control over network and block I/O performance.
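The CPU and memory controls are set per container in the pod specification. The values in this sketch are arbitrary examples, not tuned recommendations.

```yaml
# Sketch: CPU and memory requests and limits for a single container.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-limited
spec:
  containers:
  - name: nginx
    image: nginx
    resources:
      requests:
        cpu: 250m        # a quarter of a CPU core, used for scheduling
        memory: 64Mi
      limits:
        cpu: 500m        # hard ceiling of half a core
        memory: 128Mi    # exceeding this gets the container killed
```

There is no comparable field here for network or block I/O bandwidth.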

Various network models are available. In your own data center, it certainly makes sense to separate the management network from the network to which the Kubernetes containers connect. If you start a cluster with the libvirt-coreos provider on the virtual network, you can isolate it quite easily.

A plugin that supports the container network interface (CNI) [17] helps you switch networks on and off by network provider. A number of network providers that you can integrate into your networks are available in the form of Flannel [18], Calico [19], Canal [20], and Open vSwitch [21].

You can read the details of the services from the API server with the kubectl get service command and connect the services to a load balancer [22]. The Kubernetes Ingress resource also provides a proxy with which you can configure the common paths for the web user view directly in Kubernetes [23].
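An Ingress rule that routes a path to a service might be sketched as follows. This assumes the extensions/v1beta1 API group in which Ingress lived around the 1.3 release; later Kubernetes releases moved the resource to networking.k8s.io and changed the layout of the backend fields. The host name and the service name are hypothetical, and an Ingress controller must be running in the cluster for the rule to have any effect.

```yaml
# Sketch for clusters of the 1.3 era; newer clusters use a different API group.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web
spec:
  rules:
  - host: www.example.com        # hypothetical host name
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx     # assumed existing service
          servicePort: 80
```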

Safeguarding

Beyond these test examples, Kubernetes secures all communication processes using server and client certificates, including processes between kubectl or kubelet and the API server, and between the etcd instances, as well as registries such as the Docker Hub.

For an application to run cleanly as a microservice, it must meet some conditions for Kubernetes to recognize that it's still alive. Logging to stdout is required for kubectl logs to display the data correctly.
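One way to let Kubernetes check that an application is still alive is a liveness probe. The sketch below assumes an HTTP service that answers on / at port 80; the delay and timeout are illustrative values.

```yaml
# Sketch: restart the container if an HTTP check on port 80 stops succeeding.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-probed
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /                  # assumed health endpoint
        port: 80
      initialDelaySeconds: 5     # grace period after container start
      timeoutSeconds: 1          # a slower answer counts as a failure
```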

If you want to design your own microservice, take another look at the architecture of Kubernetes [24]. From the load balancer, through the web layer and possibly a cache, up to the business logic, all applications are stateless.

Admins can standardize architecture thanks to the container design pattern by Brendan Burns [25], Kubernetes' lead developer. For example, if you operate a legacy application, you can let logging, monitoring, and even messaging ride along as a sidecar in a second container of the pod, which allows communication with the outside world.
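A logging sidecar of this kind can be sketched as a pod with two containers that share a volume. The application image and the log file name are hypothetical; the sketch assumes the legacy application writes its log to a file in the shared directory.

```yaml
# Sketch: legacy app writes a log file, the sidecar streams it to stdout.
apiVersion: v1
kind: Pod
metadata:
  name: legacy-with-sidecar
spec:
  containers:
  - name: app
    image: example/legacy-app:1.0   # hypothetical legacy application
    volumeMounts:
    - name: logs
      mountPath: /var/log/app       # assumed log directory of the app
  - name: log-forwarder
    image: busybox
    # tail is the only process in this container and runs in the foreground
    command: ["sh", "-c", "tail -n+1 -F /logs/app.log"]
    volumeMounts:
    - name: logs
      mountPath: /logs
  volumes:
  - name: logs
    emptyDir: {}                    # lives exactly as long as the pod
```

Here tail is the sidecar's own foreground process, so the application container still runs exactly one process of its own.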

Also, only one process should run in any container of a pod. A common mistake is to move logging to the background or to run tail -f against stdout. Either will keep Kubernetes from identifying the process as dead. As a result, it cannot clear away and replace the container and thus the pod.

All cattle applications must be genuinely stateless; databases must replicate in their own way and automatically resynchronize after interruptions. You need to test failure scenarios that respond to the failure of a node and the network, including recovery solutions, just as you test applications. This is a challenge, especially for classic SQL databases, to which NoSQL databases such as MongoDB are a response.

Language Skills

Image sizes also affect the deployment process. There are very lean programming languages such as Go, and there are languages that require a 150MB image for a minimal version. Cleaning up the container caches gives you an easy way to reduce the sizes significantly. The installation of containers FROM scratch is also recommended for size and safety considerations. Container operators will find a statically linked Go web server with an image size of just 6.7MB.

If you need to compile Ruby Gems or Python Eggs, it is advisable to dump the compiler and all the unused files into a black hole when done. For Ruby, Traveling Ruby [26] presents an attractive alternative. It links all Gems and the Ruby executables statically into a single file, letting you set up minimal containers with Ruby.

For Java, rather than J2EE, use Spring Boot [27], which lets you configure applications at the command line and start a web server from within the application. Java is unfortunately very difficult to streamline with its own package system and branched properties.

Otherwise, it makes sense to create debug-enabled or trimmed-down and hardened containers for each programming language, as well as for any development, test, and production environment. Additionally, you will want to limit your own applications to just one distribution, so that as many containers as possible can reuse the corresponding base image.

Deployment Pipelines

Kubernetes is extremely well suited to deployment pipelines. In addition to the containers, Dockerfiles can easily be audited and transported. On the basis of the audited Dockerfiles, you can build containers for testing and production from the files in safety-critical areas without the influence of the developers. A Git repository makes the audit more easily traceable; the cluster operator can then fully automate the entire process. It is basically also possible to let experienced developers roll out images to simplify the infrastructure in DevOps teams.

CoreOS

If you follow the container paradigm, the only role of an operating system is to start a container safely and reliably and to guarantee its life cycle. This makes the few programs of even the leaner Linux distributions redundant. CoreOS [28] takes this idea and reverts to the core of an operating system. The /usr partition weighs in at just 1GB, of which CoreOS is less than 700MB. Kubernetes itself requires more than double the amount of disk space at 1.5GB.

The small size of CoreOS makes it possible to keep a second partition as the target for an update. The distribution comes with two /usr partitions (USR-A and USR-B). During updates, they take turns and are mounted as read-only. If something goes wrong, you can restore the old system as a fallback at any time. Such immutable operating systems were previously only known from little-used embedded Linux distributions, which are used in the security field. They eliminate the need for package management – and actually also for configuration management – at the same time.

To meet the mission statement of CoreOS, you can enable a Trusted Platform Module [29] for the hardware and use rkt [30] as a container runtime alternative to Docker that only executes signed containers.

New Build

One gap has yet to be closed: Most images (depending on the counting method, two thirds or even five sixths of the images on Docker Hub) contain vulnerabilities, one third of which are considered critical. In secured environments, you should therefore only install self-made images built FROM scratch and served from your own registries [31]. It also helps to renew containers regularly – that is, to use them for a maximum of one week or even rebuild them every day on an isolated build system.

Configurations can be grouped in ConfigMaps [32] or accommodated in environment variables. Passwords can be stored in secrets [33], which appear to the containers to be RAM disks and are not stored on the node's disk. An automatic build pipeline can handle the required steps; Jenkins can be easily extended with appropriate plugins for this purpose.
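How a pod consumes both kinds of objects can be sketched as follows. All names, the configuration key, the image, and the example password are hypothetical; the secret value is simply the base64 encoding of the string changeme.

```yaml
# Sketch: configuration via environment variable, password via mounted secret.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  log-level: info
---
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
data:
  password: Y2hhbmdlbWU=            # base64 of "changeme", example only
---
apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  containers:
  - name: app
    image: example/app:1.0          # hypothetical image
    env:
    - name: LOG_LEVEL
      valueFrom:
        configMapKeyRef:
          name: app-config
          key: log-level
    volumeMounts:
    - name: credentials
      mountPath: /etc/credentials   # password appears as a file here
      readOnly: true
  volumes:
  - name: credentials
    secret:
      secretName: db-credentials
```

Keeping the secret in a separate object means that the pod definition can be stored in a repository without the password.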

If you prefer to avoid this overhead, you can use Clair [34], a vulnerability scanner for container images developed by CoreOS. It scans the images for known common vulnerabilities and exposures (CVEs) and raises the alarm if it finds an insecure library in a container. As a hosted alternative, there is also the Quay.io registry, which is part of the CoreOS project.

By the way, you should remember to dimension the registry and a repository server for the legacy packages on a big enough scale. The reason is that patching a low-level library usually triggers a full roll-out of all images. The lean technology of rolling out only deltas cannot be used in such a case.