Hyper-V containers with Windows Server 2016

High Demand

Gone are the days when Hyper-V was trying to catch up with the basic functionality of VMware. After the release of Windows Server 2012 R2, Microsoft's hypervisor drew level with vSphere for most applications. Therefore, the new version of Hyper-V in Windows Server 2016 mainly offers improvements for demanding environments, such as snapshots and improved security in the cloud.

Initially, Microsoft intended to release Windows Server 2016 simultaneously with Windows 10, but they dropped this plan in the beta phase and moved Server 2016 to an unspecified date in the second half of 2016. The tests for this article were conducted before the final release and are based on Technical Preview 4.

New File Format for VMs

A few things quickly catch the eye when you look at the new Hyper-V: The role of the hypervisor in Windows Server 2016 can only be activated if the server's processors support second-level address translation (SLAT). Before, this function was only required for the client Hyper-V; however, now, it is also required for the server. Older machines might have to be retired.

When you create new virtual machines (VMs) in Hyper-V, the new file formats become apparent. Thus far, Hyper-V has used files in XML format to configure the VMs, but now the system relies on binary files. This eliminates a convenient way of quickly reviewing the configuration of a VM in the XML source code. The official message from Microsoft here is that the new format is much faster to process than the XML files, which need to be parsed in a computationally expensive way in large environments. Rumor has it that support reasons were also decisive: All too often, customers directly manipulated the XML files, creating problems that were difficult for Microsoft's support teams to fix. With binary files, this problem is a thing of the past.

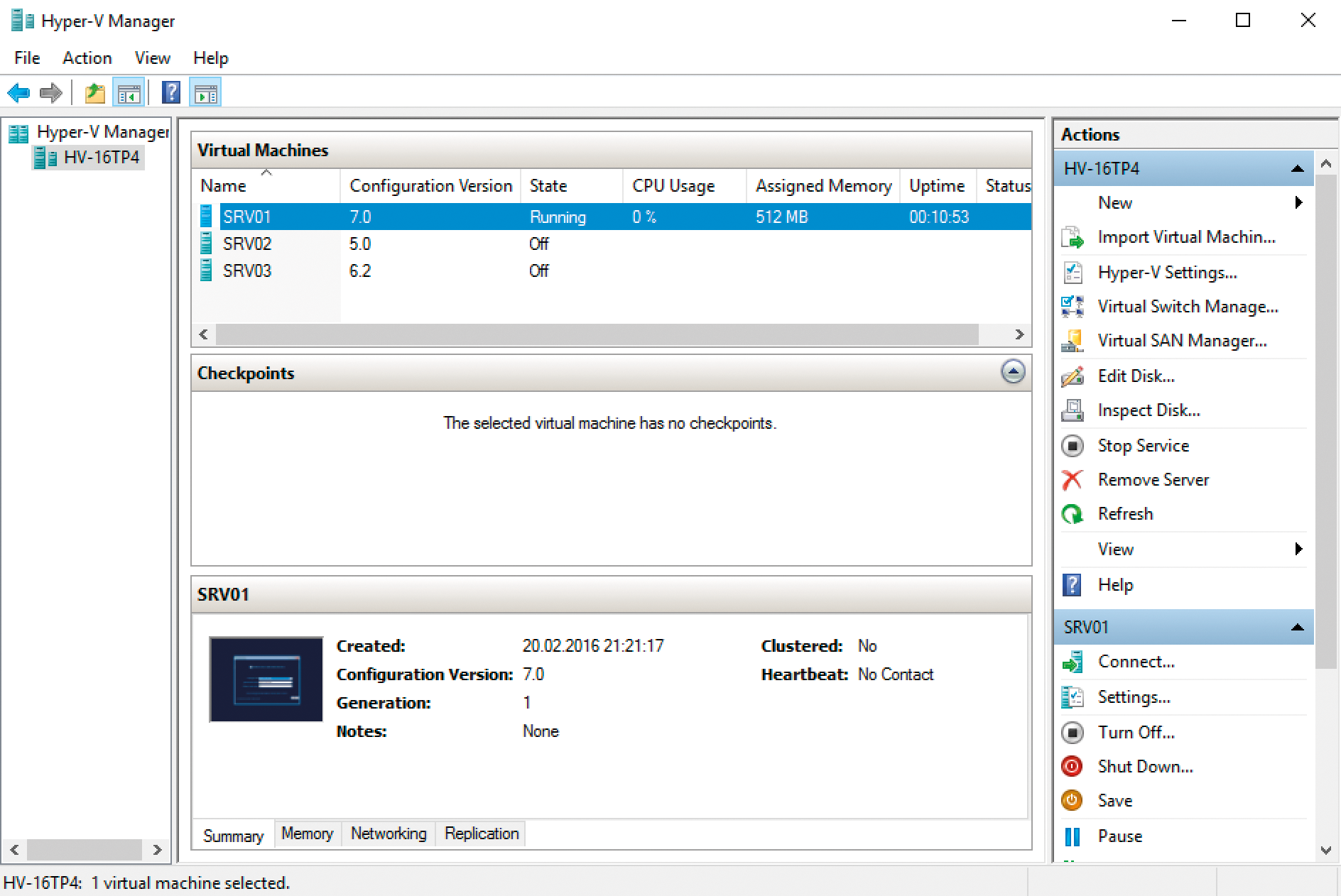

If administrators move existing VMs from a Hyper-V host with Windows Server 2012 R2 to a Windows Server 2016 host, the VMs initially remain unchanged. Until now, an implicit conversion always took place in such a scenario: If you moved a VM with Export and Import or by live migration from a Windows Server 2012 host to 2012 R2, Hyper-V quietly updated the configuration to the newer version, thus cutting off the way back to the older version.

The new Hyper-V version performs such adjustments only if the administrator explicitly requests it, allowing for "rolling upgrades" in cluster environments in particular: If you run a Hyper-V cluster with Windows Server 2012 R2, you can add new hosts directly with Windows Server 2016 to the cluster. The cluster then works in mixed mode, which allows all the VMs to be migrated back and forth between the cluster nodes (Figure 1). IT managers can thus plan an upgrade of their cluster environments in peace, without causing disruption to the users. Once all the legacy hosts have been replaced (or reinstalled), the cluster can be switched to native mode and the VMs updated to the new format. Although Microsoft indicates in some places on the web that the interim mode is not intended to be operational for more than four weeks, this is not a hard technical limit.
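The order of steps in such a rolling upgrade can be modeled in a few lines. The following Python sketch is purely illustrative: The `Cluster` class, its methods, and the version labels are hypothetical and not part of any Hyper-V API. It only captures two rules from the text: The cluster stays in mixed mode as long as a legacy node is a member, and VM configurations are upgraded only on explicit request.

```python
from dataclasses import dataclass, field

LEGACY = "2012R2"   # illustrative labels, not real version identifiers
CURRENT = "2016"


@dataclass
class Cluster:
    """Toy model of a cluster during a rolling upgrade (hypothetical)."""
    nodes: dict = field(default_factory=dict)   # node name -> OS release
    vms: dict = field(default_factory=dict)     # VM name -> config format
    native_mode: bool = False

    def in_mixed_mode(self) -> bool:
        # Mixed mode: at least one legacy node is still a member.
        return any(release == LEGACY for release in self.nodes.values())

    def switch_to_native(self) -> None:
        # Only allowed once every legacy host is replaced or reinstalled.
        if self.in_mixed_mode():
            raise RuntimeError("legacy nodes still present")
        self.native_mode = True

    def upgrade_vm(self, name: str) -> None:
        # Explicit step requested by the administrator; nothing is
        # converted implicitly when a VM merely moves between hosts.
        if not self.native_mode:
            raise RuntimeError("cluster is not in native mode yet")
        self.vms[name] = CURRENT


cluster = Cluster(nodes={"hv1": LEGACY, "hv2": LEGACY}, vms={"web01": LEGACY})
cluster.nodes["hv3"] = CURRENT   # add a new host: cluster runs in mixed mode
cluster.nodes["hv1"] = CURRENT   # reinstall the legacy hosts one by one
cluster.nodes["hv2"] = CURRENT
cluster.switch_to_native()
cluster.upgrade_vm("web01")
```

Calling `switch_to_native()` while a legacy node is still present raises an error in this model, which mirrors the idea that the switch to native mode is the last step of the upgrade.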

Flexible Virtual Hardware

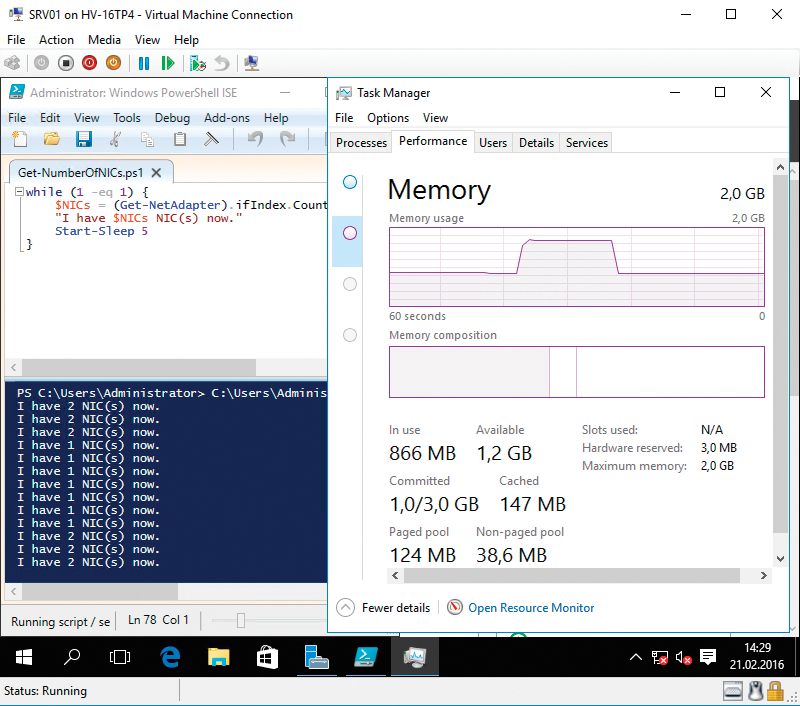

Hyper-V in Windows Server 2016 brings some interesting innovations for the VM hardware. For example, it will be possible in the future to add virtual network adapters or memory to a virtual machine during operation and to remove these resources without interruption (Figure 2). Previously, Hyper-V could take memory away from an active VM only when the Dynamic Memory feature was enabled. The new release also makes this possible for VMs with static memory.

Caution is advisable, however: An application can crash if it suddenly has less memory. Hyper-V does not simply take away RAM that holds actively used data, but an application can still react badly if its developer has not considered a scenario in which less RAM is suddenly available. Conversely, a program might not benefit from suddenly having more RAM available. For example, Exchange checks how much memory is available only when its services start and ignores any later changes.

A new and still experimental feature is actually quite old, from a technology point of view: Microsoft offers Discrete Device Assignment, through which certain hardware devices of the host are passed to a VM and can then be managed by the VM. Although the basics of this technology existed in the first Hyper-V release from 2008, it will only become directly usable with Windows Server 2016. However, you should not overestimate its abilities: Discrete Device Assignment does not bind just any old host hardware to a VM; instead, it is used to communicate directly with certain Peripheral Component Interconnect Express (PCIe) devices. For this to work, many components need to cooperate, including not just the drivers, but also the hardware itself.

SR-IOV network cards, which Hyper-V has supported since Windows Server 2012, make up the first device class to use this technique. These special adapters can be split up into multiple virtual Network Interface Cards (NICs) at the hardware level, and each virtual NIC can then be assigned exclusively to an individual VM. The novel Non-Volatile Memory Express (NVMe) storage drives, a new class of SSDs for enterprise use, are the second use case for which Microsoft is making the feature more widely available. High-end graphics cards will also become eligible in the future. In a blog post, the developers also write of (partly successful, partly failed) experiments with other devices [1].

New Options in Virtual Networks

At the network level, the future Hyper-V comes with some detail improvements. For example, vNIC Consistent Naming is more of a convenience feature. A virtual network adapter can be given a (meaningful) name at the host level, and it then shows up under that name in the VM operating system. This makes mapping virtual networks far easier in complex environments.

Additions to the extensible virtual switch are more complex. Since the previous generation, this virtual switch has provided an interface for modules that can expand the switch's functionality. One new feature is primarily designed for the container technology (more on that later), but it is also useful in many other situations: A virtual switch can now serve as a network address translation (NAT) device. The new NAT module extends the virtual switch from pure Layer 2 management to partial Layer 3 integration. With the new feature, the switch can not only forward network packets to the appropriate VM, but also handle IP address translation operations that are otherwise handled by routers or firewalls.

Through the integration of NAT, you can hide a VM's real IP address from the outside world. This also offers a simple option for achieving a degree of network isolation (e.g., for laboratory and test networks) without cutting off external traffic. Desktop virtualizers such as VMware Workstation and Oracle VirtualBox have long offered such functions. Seeing that Hyper-V is also included in Windows 10 as a client, the new feature will come in handy there.
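To make the translation step concrete, here is a minimal sketch of the bookkeeping that a NAT device performs. It is a conceptual model in Python and says nothing about how the Hyper-V switch implements NAT internally; the `NatTable` class, the addresses, and the starting port are assumptions chosen for illustration.

```python
import itertools


class NatTable:
    """Toy port-address-translation table (illustrative only)."""

    def __init__(self, external_ip: str, first_port: int = 50000):
        self.external_ip = external_ip
        self._ports = itertools.count(first_port)
        self._out = {}   # (internal ip, internal port) -> external port
        self._in = {}    # external port -> (internal ip, internal port)

    def outbound(self, src_ip: str, src_port: int) -> tuple:
        """Rewrite the source of a packet leaving the internal network."""
        key = (src_ip, src_port)
        if key not in self._out:
            port = next(self._ports)
            self._out[key] = port
            self._in[port] = key
        return self.external_ip, self._out[key]

    def inbound(self, dst_port: int):
        """Map a reply back to the internal endpoint, or None if unknown."""
        return self._in.get(dst_port)


nat = NatTable("203.0.113.10")
public = nat.outbound("192.168.100.5", 44321)
print(public)                   # ('203.0.113.10', 50000)
print(nat.inbound(public[1]))   # ('192.168.100.5', 44321)
```

From the outside, only the external address and the mapped port are visible. Packets for ports without a table entry have no internal destination, which is what provides the degree of isolation mentioned above.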

Another network innovation can be found at the other end of the virtual switch: its connection to the host hardware. Switch-Embedded Teaming (SET) lets you team multiple network cards directly at the level of the virtual switch. In contrast to earlier versions, you do not need to create the team on the host operating system first, making it far easier to automate such setups.

SET offers a direct advantage for environments with high demands on network throughput, because a SET team can also contain remote direct memory access (RDMA) network cards. These adapters allow a very fast exchange of large amounts of data and cannot be added to a legacy team. There are, however, disadvantages compared with the older technique; for example, the network cards in a SET team must be identical, which is not necessary for host-based teaming.

More Consistency in Snapshots

Particular emphasis is placed on the hypervisor's reliability functions. Again, the next Hyper-V has something new up its sleeve. The most important feature is production checkpoints, which turn the hypervisor's popular snapshot technique into a fully fledged data backup tool (Figure 3). Thus far, VM snapshots have always suffered from a point-in-time problem: This kind of snapshot – Microsoft has referred to them as "checkpoints" for some time – freezes the state of a VM at a given point in time and stores it on disk. The snapshot contains not only the content of the virtual hard disks in the VM, but also the configuration details and the content of the system memory. If the administrator resets a VM to one of these snapshots, it again has precisely the state it had at the time of the freeze; even the mouse pointer is positioned in the same place.

The VM's applications are unaware of this process. Although it is handy in some situations, it can be tricky, too, because complex distributed applications are often unable to deal with one of the participating servers suddenly having a historic state. Other mechanisms, such as automatic changing of computer passwords in Active Directory, can also cause errors with snapshots.

With the help of production checkpoints, Microsoft is looking to free the snapshot technology from these risks. A snapshot of this kind includes far less data than its conventional counterpart: Only the contents of the hard drive and the VM configuration are backed up; the memory is left out. Production checkpoints also work with full Volume Shadow Copy Service (VSS) integration; that is to say, the VM and its applications are aware of the operation and prepare their data accordingly. If the VM is reset to a production checkpoint, it boots as it would after a failure, and distributed applications can resume communication properly, just as after a classic recovery.

Microsoft has also revised the backup process used when working with the built-in Windows Server backup or the System Center Data Protection Manager. Third-party software tools often address these interfaces as well. In previous versions, the backup functions had several difficulties and disadvantages. For example, integration with professional storage systems was often a problem, because backups through the VSS service with hardware VSS providers too often did not work properly. Providers of modern backup programs also criticized the backup process because Hyper-V, unlike its competitor vSphere, did not offer "changed block tracking," a technique that lets a backup store only the changes since the last backup.
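The idea behind changed block tracking can be sketched as follows. This is a conceptual Python model, not Hyper-V's or vSphere's implementation: The `TrackedDisk` class and the tiny block count are assumptions made for illustration. The disk remembers which blocks were written, so an incremental backup copies only those blocks instead of scanning the whole disk.

```python
class TrackedDisk:
    """Toy virtual disk that records which blocks changed (illustrative)."""

    def __init__(self, block_count: int):
        self.blocks = [b""] * block_count
        # Before the first backup, every block counts as changed.
        self.changed = set(range(block_count))

    def write(self, index: int, data: bytes) -> None:
        self.blocks[index] = data
        self.changed.add(index)

    def backup(self) -> dict:
        """Return only the blocks changed since the previous backup."""
        delta = {i: self.blocks[i] for i in sorted(self.changed)}
        self.changed.clear()
        return delta


disk = TrackedDisk(block_count=4)
full = disk.backup()                  # initial backup: all four blocks
disk.write(2, b"new data")
incremental = disk.backup()           # only block 2
print(len(full), list(incremental))   # 4 [2]
```

Without such tracking, a backup tool has to read and compare every block to find the changes, which is what makes incremental backups of large virtual disks slow.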

In addition to solutions for these two main problems, Microsoft has made some more changes to the backup features, which together lead to higher performance. Now it is possible to back up shared VHDX files on the host, which are virtual cluster disks that a VM cluster can use for data storage. Thus far, agents were needed to back up such data on the VMs, but now you can do this from the host.

Security in the Cloud

The upcoming version of Hyper-V offers many striking features for security in hosting situations; thus, the manufacturer has responded to the concerns often expressed by customers about the cloud. The new security features address the issue from two sides.

The perspective of the hosting service provider that runs VMs for its customers is addressed by host resource protection, which ensures that individual VMs in a hosted environment do not overtax the available resources. With constant monitoring and custom rules, the function ensures that a VM that threatens to slow down other VMs is limited in its consumption. This technique has its origins in Microsoft Azure; it works with access patterns and thus tries to identify abnormal behaviors of the VM itself that indicate a denial of service attack.

The other point of view, that of the customer whose VMs are operated by a cloud service provider, is addressed by Shielded VMs with what Microsoft calls Hardware-Trusted Attestation. Simply put, the customer's VMs are now always encrypted, so that the service provider's cloud administrators cannot see the data or applications. Of course, this requires a lot of infrastructure and cost. For production operation, the service provider's host servers need Trusted Platform Module (TPM) version 2.0 chips, which previously did not exist in servers. They work with a virtual TPM, which ensures BitLocker encryption of virtual disks on the customer's VMs. A Hardware Security Module (HSM) with protected certificate management is also required so that customers can store the certificates for encryption securely.

Because the VMs also need to be encrypted during operations and, for example, during live migrations, the hosts communicate with a separate Host Guardian Service, which runs on a Windows Server in a separate Active Directory environment. This server has details of all the TPM modules on the hosts involved and is thus in a position to allow secure execution of the encrypted VMs on a host.

For laboratory purposes or for companies that only want to use shielded VMs internally, a second, less secure mode exists for this security function that does not require special hardware but works on the basis of Active Directory security groups. The encryption uses the same functions as in the above-outlined Hardware-Trusted Attestation. However, Admin-Trusted Attestation means that the keys and certificates involved are not protected against the administrator of the host environment. This mode is thus only suitable for internal usage, and not for hosting scenarios.

In addition to these two major extensions of the security infrastructure, Microsoft has also added some security function enhancements. For example, secure boot can be enabled for Linux VMs on Windows Server 2016 to ensure the integrity of the operating system.

Storage Connectivity Reliability

Major new features are also included for operational reliability through failover clustering in Windows Server 2016. Two technologies increase the tolerance of the cluster in case of short-term interruptions: Storage Resiliency temporarily suspends a VM if its storage on the storage area network (SAN) is not accessible. Previously, a VM crashed after 60 seconds at the latest if it could not access its virtual disks. In the new release, the administrator can extend the pause period as required or define a timeout after which a failover to a different host occurs.

Compute Resiliency works in a similar way, but at the level of whole cluster hosts rather than individual VMs. A host in the cluster that is not accessible to the other cluster servers does not immediately trigger a failover for the VMs running there. If the function is active, the cluster does not intervene for four minutes by default. If the failed host comes back within this time, its VMs simply continue to run. In environments with unstable network connections, this can make VMs significantly more accessible to users. If it turns out that the same host repeatedly causes problems, the cluster can quarantine it and migrate all the VMs to other hosts.
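The decision logic described here can be summarized as a small state machine. The sketch below is a hedged Python model, not the cluster service's actual algorithm: The `HostMonitor` class and its state names are hypothetical, and the quarantine threshold of three failures is an assumption, because the text only says "repeatedly." The four-minute grace period is the default named above.

```python
from dataclasses import dataclass
from typing import Optional

RESILIENCY_PERIOD = 240    # seconds; the four-minute default named in the text
QUARANTINE_THRESHOLD = 3   # assumption: the text only says "repeatedly"


@dataclass
class HostMonitor:
    """Toy model of the cluster's view of a single host (hypothetical)."""
    state: str = "up"
    failures: int = 0
    lost_at: Optional[float] = None

    def heartbeat_lost(self, now: float) -> None:
        if self.state == "up":
            self.state = "isolated"
            self.lost_at = now
            self.failures += 1

    def heartbeat_ok(self) -> None:
        # Host came back inside the grace period; its VMs kept running.
        if self.state == "isolated":
            self.state = "up"
            self.lost_at = None

    def evaluate(self, now: float) -> str:
        """Return the action the cluster takes for this host."""
        if self.state != "isolated":
            return "none"
        if self.failures >= QUARANTINE_THRESHOLD:
            self.state = "quarantined"
            return "quarantine"
        if now - self.lost_at >= RESILIENCY_PERIOD:
            self.state = "down"
            return "failover"
        return "wait"


host = HostMonitor()
host.heartbeat_lost(now=0)
first = host.evaluate(now=60)     # still inside the grace period
host.heartbeat_ok()               # host returned in time
host.heartbeat_lost(now=600)
second = host.evaluate(now=900)   # grace period exceeded
print(first, second)              # wait failover
```

In this model, a host that drops out for a third time is quarantined immediately instead of being granted another grace period.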

Microsoft has invested heavily in storage connectivity for clusters. The already long-announced Storage Replica feature allows a Windows server to replicate its data synchronously or asynchronously to a different server, enabling Stretched Clusters that are distributed across remote data centers.
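The difference between the two replication modes comes down to when a write counts as complete. The following Python sketch illustrates this under simplifying assumptions; the `ReplicatedVolume` class is hypothetical and does not reflect how Storage Replica is implemented. In synchronous mode, the write returns only after the remote copy exists; in asynchronous mode, it returns at once and the data is shipped later.

```python
from collections import deque


class ReplicatedVolume:
    """Toy primary volume with one replica (illustrative only)."""

    def __init__(self, synchronous: bool):
        self.synchronous = synchronous
        self.primary = {}
        self.replica = {}
        self.pending = deque()   # writes not yet shipped (async mode only)

    def write(self, block: int, data: bytes) -> None:
        """Return once the write counts as acknowledged."""
        self.primary[block] = data
        if self.synchronous:
            self.replica[block] = data           # wait for the remote copy
        else:
            self.pending.append((block, data))   # ship later

    def flush(self) -> None:
        """Ship queued writes to the replica in their original order."""
        while self.pending:
            block, data = self.pending.popleft()
            self.replica[block] = data


sync_vol = ReplicatedVolume(synchronous=True)
sync_vol.write(0, b"a")
async_vol = ReplicatedVolume(synchronous=False)
async_vol.write(0, b"a")
print(sync_vol.replica == sync_vol.primary)     # True
print(async_vol.replica == async_vol.primary)   # False until flush() runs
```

The trade-off is visible even in this toy: Synchronous replication never loses an acknowledged write but makes every write wait for the remote site, whereas asynchronous replication is faster but can lose the queued writes if the primary fails.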

In recent versions of the preview, Microsoft has extended this to include Storage Spaces Direct (S2D), where data storage is no longer handled by dedicated storage servers but by the Hyper-V hosts themselves. The hosts are fitted with local solid state and conventional hard drives, which are combined through caching and tiering for a flexible, high-availability approach. Synchronous replication transfers the data of one host to one or more additional hosts. Hyper-V can thus address the entire storage of all participating hosts as a virtual SAN, which is very resilient, thanks to replication.

The principle is equivalent to the "hyperconverged system," as launched by Nutanix or SimpliVity. Competitor VMware has developed a similar concept with vSAN, but this solution is regarded as complex and expensive. Windows may liven up the market here with S2D.

Nested VMs

In the slipstream of container technology, which becomes available to the Windows world with Server 2016, Microsoft has introduced a feature that many admins have wanted for a long time: nested virtualization. Nested virtualization means that you can run a Hyper-V host, which is itself a VM, in Hyper-V. Now, you might be wondering why the IT world needs something like this, and this question is precisely what has kept Microsoft from implementing it in Hyper-V thus far: There are hardly any practical scenarios for it. People who use this technology do so mostly for lab or training purposes, because you can simulate a whole virtualization cluster with just one computer. This scenario worked with VMware and others, but not with Hyper-V.

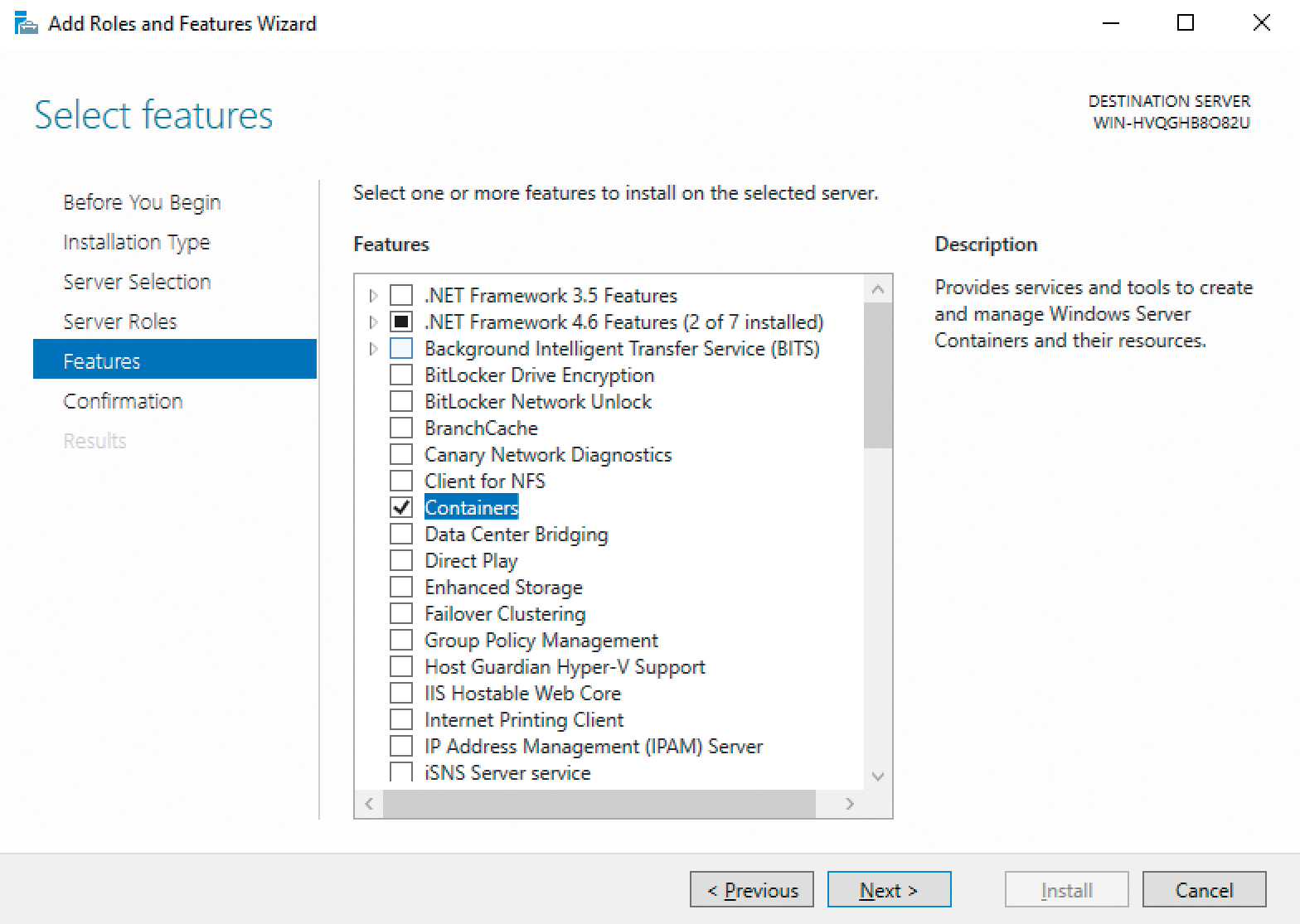

Meanwhile, Microsoft has developed a requirement for nested virtualization – the Hyper-V container (Figure 4). As a special feature, Windows Server 2016 now not only offers container technology, but also an advanced container security mode. Lifting the lid on these "containers" reveals virtualization that does not simulate a complete machine but that runs multiple virtual servers on the same operating system base. Each of these "containers" has its own data space, network configuration, and process list – but the base is the same for all of them. For certain purposes, this is significantly more efficient than a full-blown VM. The principle is widely used in the Linux world for web and cloud applications, and Hyper-V containers are a variant.

In contrast to the (likewise new) Windows containers, Hyper-V containers do not share the operating system; rather, a rudimentary Hyper-V VM is built for each container, which requires more resources than a normal container but fewer than a full VM. For this technology to work when the container host itself is a VM, Microsoft needed to develop nested virtualization, which is thus now available for other scenarios, too.

Conclusions

Windows Server 2016, and thus the next Hyper-V version, was finally released on October 1. On the basis of the Technical Preview used for this review, the complex container technology with its Docker integration and the ambitious storage features, which need to be mature when they come on the market, might have been the reasons for the delay. However, the many innovations revealed in Hyper-V were likely worth the wait for many admins.