An introduction to a special tool for transforming data formats

Data Lego

The process of preparing data is called "extract, transform, and load" (ETL), and specialty tools like Jaspersoft ETL help you carry out these tasks. In this article, I introduce the community version 6.0.1 of Jaspersoft ETL, which is available as a free download [1].

Data Gold

Panning for gold is tedious. Nuggets of precious metal don't simply sit around on a riverbed. Instead, the prospector has to sift through multiple pans of sand and stones, often retrieving just a few flakes for all of the trouble expended. Data is the equivalent of gold in today's world. Given this reality, you must tediously filter huge volumes of data to extract tiny particles of information that have real value.

When looking for gold, a prospector first has to get a fix on the location of a deposit and then get access to it. The same can be said for data: The process of locating a promising source involves procedures like aligning fonts, converting values and data formats, and importing results into databases. These preparatory steps need to be performed before you can effectively pan for gold in the form of data.

Converting Files

One of the easiest exercises for learning the ETL tool involves converting an input file to a different format. A simple example of a text-based book list should suffice for presenting the first practical steps (Listing 1). Columns separated by semicolons have a particular width. The lines are not in a particular order.

Listing 1: Book List (Excerpt)

Year;Number;Author ;Title ;Publisher ;Pp ;Price 2015;06; Stepanov, Rose ;From Mathematics to Generic Programming ;Edison Wesley ;0320;28 2015;09; Whithurst, Jim ;The Open Organization ;Harvard Business Review ;0227;16 2015;03; Kofler, Kühnast, Scherbeck ;Raspberry Pi: Das umfassende Handbuch ;Galileo ;1064;40 2016;04; Heinle, Stefan ;Heimautomatisierung MIT KNX, DALI, 1-Wire und Co. ;Rheinwerk ;1260;50 2016;09; Blasband, Darius ;The rise and fall of software recipes ;Reality Bites Publishing ;0360;25 2016;11; Luckhaus, Stefan ;Produktivitäts- und Leistungsmessung ;Pass Consulting ;0125;20 2016;01; Troche, Christoph ;FRITZ!Box ;Mitp ;0240;20 2015;02; Will, Torsten T. ;Einführung in C++ ;Galileo ;0520;25 2015;10; Keller, Müller ;Pentaho und Jedox ;Hanser ;0435;60 2015;03; Ristic, Ivan ;Bulletproof SSL and TLS ;Feisty Duck ;0507;46 ...

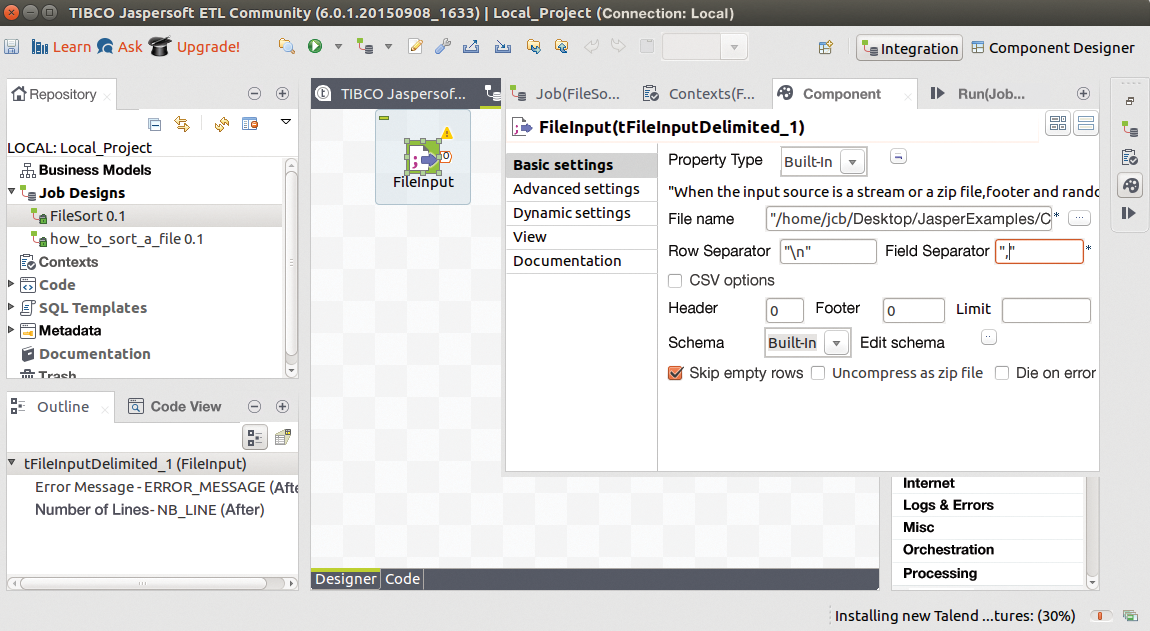

To convert to a different format, you must first set up a "job," which is accomplished by starting the TIBCO Jaspersoft ETL tool and selecting the Create Job option from the context menu for Job Designs in the left pane. After entering a name for the job in the dialog box, a four-pane window opens (Figure 1):

- Top left is a file structure for the jobs and their components.

- The center section has an area with a gridded background in which to assemble jobs from components.

- Top right is a menu of components.

- The bottom window has several tabs used to configure the components and start the jobs.

Now it is time to acquire the data to be processed. In this example, the data source is the text file in Listing 1. You need to use a component of type tFileInputDelimited, which opens the comma-separated values (CSV) file, reads it in line by line, separates the fields according to a defined separator and schema, and passes on the fields to the next component for further processing.

Drag the tFileInputDelimited input component from the menu on the right to the center of the gridded assembly area. Double-clicking on the component opens a new dialog box in which you should define the properties of the component (Figure 2). The definitions include the path to the input file and the separators. With a single click on the Edit schema button, you will come to a window in which you can set up the data type for each field of the CSV file.

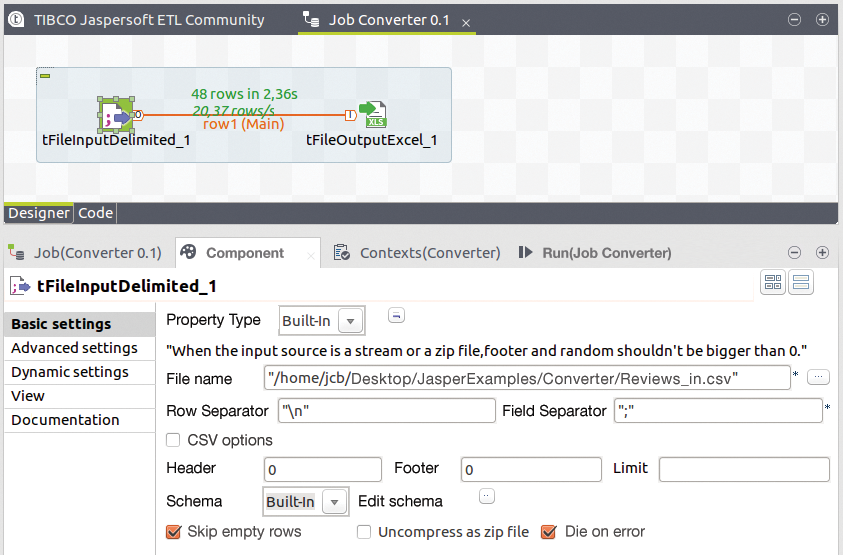

From the right-hand column, select the component to be used to present the result and drag it onto the assembly area. This example uses a component of type tFileOutputExcel. After double-clicking on this component, you can define the name and path of the output file as above before connecting the components from left to right by clicking on the handle of one icon and pulling a line to the next icon (Figure 3). Now the only thing left to do is start the job, and voilà, an Excel spreadsheet containing the comma-separated data appears as specified.

Sorting Files

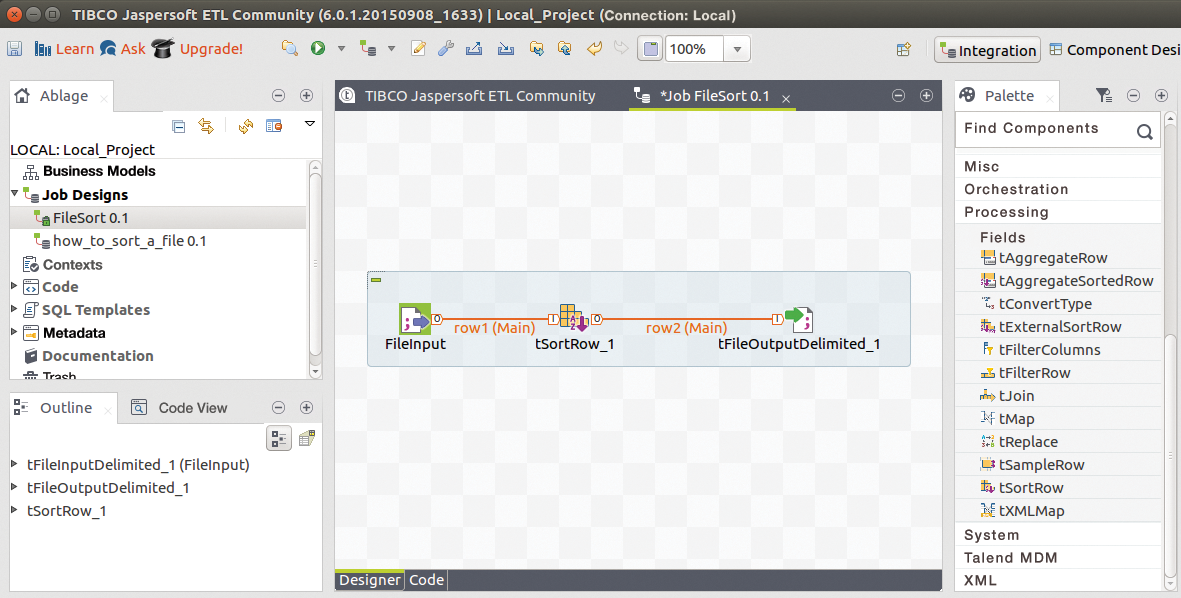

The next level of complexity for a job like this is to insert between the input and output components a component that will process the input data before it is output. For example, sorting would be a very simple method to insert here.

In the example at hand, try sorting the unordered list of books according to year and issue number. To do this, you should place the tSortRow component between the input and output modules. The component is found in the right-hand menu under the Processing option.

Afterward, you should connect the components as before (Figure 4). This sets up the path for the data from the input CSV file to the sorter and from there to the output file. Now you can double-click to configure the sorting criteria in the columns of the schema.

Once the sorting criteria have been defined, you can start the job. After a few seconds, you will see a short notification in the assembly area about the number of processed lines and the elapsed processing time.

Databases

The described procedure should work for all jobs: Components are selected from the right, dragged onto the assembly area, and connected according to the data flow. Double-clicking the icon lets you configure each component. In the final step, run the entire assembly.

The input and output need not be only text files, Excel spreadsheets, or JSON files; they can also be a wide variety of databases, including MySQL, Oracle, MS SQL Server, PostgreSQL, SQLite, Sybase, Ingres, Informix, and others.

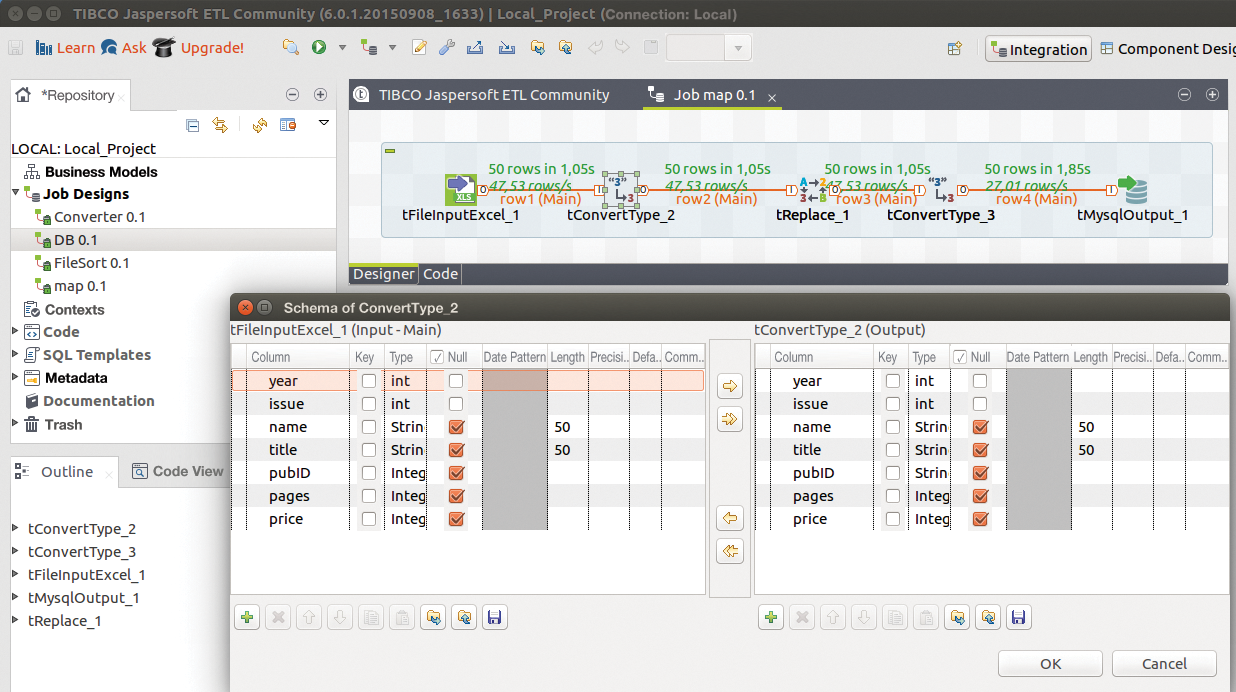

Creating a database is as easy as changing the converter from the Excel component to a database component. The Excel spreadsheet that has already been used now serves as the data source. The example in Figure 5 outputs the data to a MySQL database. If the database table does not exist, it can be generated on the fly.

pubID) is replaced if it meets a certain criterion. A data type is converted temporarily for this to work.In principle, it would suffice to use tFileInputExcel and tMySQLOutput components one after the other for the database export. When making the connections, you should try to avoid storing the complete name of the publisher in every line and instead place notations in a separate table, so that only the publisher ID (a number assigned before importing to the database) appears in the review table.

Another issue to consider in the example used here is that books were published by both Galileo Publishing and Rheinwerk Publishing House under different IDs, even though they are the same publisher that has changed its name. Consequently, the administrator will want to change all of the IDs with the value 6 (Galileo) into IDs of value 15 (Rheinwerk), which indicates the current name of the publishing house. The tReplace component performs this task.

However, this component only works with string values. This means that another component, tConvert, needs to be used to first convert all of the integer values in the pubID column of the review table into strings. After the Galileo IDs have been replaced, the tConvert component converts them back to an integer value, which is then loaded into the database.

Similarly, it is possible to use database tables as a data source. The next example illustrates how to use database tables as a source. The example generates a report that shows the number of books from each publisher. The output should not include any publisher IDs, just the name in plain text, which is accomplished in the database by a join operation:

SELECT publisher.name AS publisher, COUNT (reviews.pubID) AS number FROM publishers, reviews WHERE publishers.id = reviews.pubID GROUP BY reviews.pubID, publishers.name;

It is possible to create this kind of join operation directly in the SQL SELECT statement or in Jaspersoft ETL with the tJoin components.

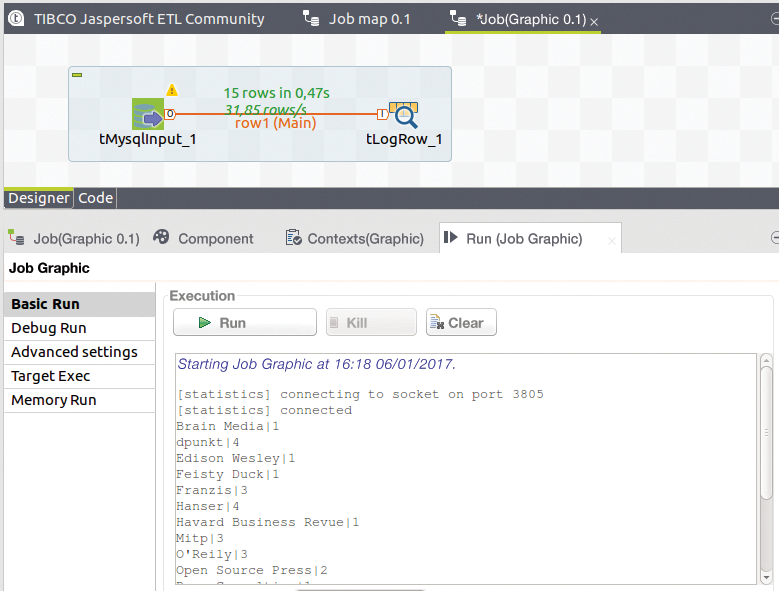

If you want to compute the operation with a somewhat complicated SQL statement and insert the result in a longer chain of components, it is advisable to test the SQL command beforehand. The ETL framework allows you to do this directly. The tlogRow component provides support and displays arbitrary intermediate results (Figure 6).

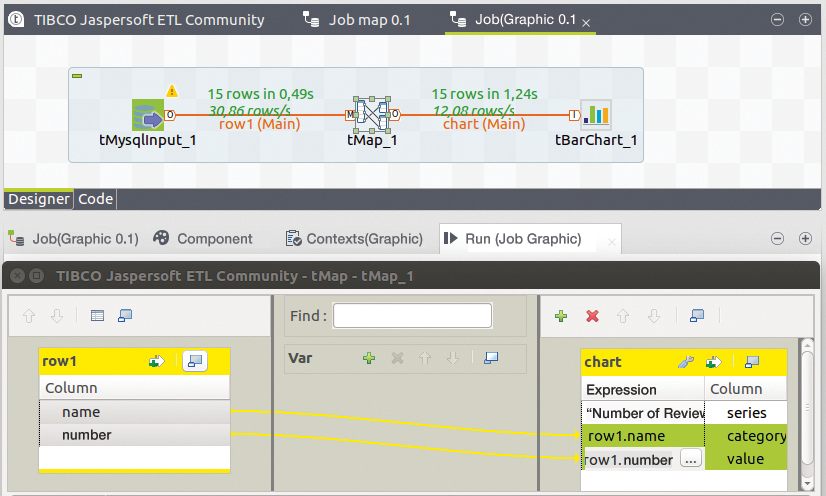

tlogRow component logs arbitrary intermediate results.The ETL tool can even generate a chart from the data with the tBarChart component, which, however, cannot be connected directly to the tMysqlInput component because it expects three specific column names: series, category, and value. The fix for discrepancies between the component and the input is to use the versatile tMap, which helps map the existing column names of the review table to the column names expected by the chart generator (Figure 7) and which also mapped publisher names to publisher IDs in an earlier process not described here.

Conclusion

The examples shown here have purposefully been kept simple, because the goal is to understand the working principles. Once you know the principles, assembling relatively simple components to create complex processing procedures is straightforward.

Many of the filtering and sorting tasks can be performed by other means, as well, including Perl and Python scripts, database commands, or even Unix tools like grep and sort. The appeal of the Jaspersoft tool is that it offers a unified framework for a large class of tasks, most of which can be programmed visually, while integrating a large number of databases and business applications.

It is easy to lose sight of the whole picture with the multitude of ETL components, including interfaces for customer relationship management (CRM) systems like Sugar CRM, Vtiger, and Microsoft CRM; content management system (CMS) applications and document administration like Alfresco; business process management (BPM) tools like Bonita; business intelligence software like the in-house Jasper suite or SAP; enterprise resource planning (ERP) software like Sage X3 and Openbravo; statistical software like SPSS; and dozens of database types.

It is possible to integrate cloud storage, like Amazon's S3, Dropbox, and Google Drive. Components can be active over the Internet to send email, make HTTP requests, invoke web services, use the SOAP protocol, or create interfaces for standards that apply to data in business transactions like EDIFACT and HL7 for health care data.

Additionally, numerous generic components are available for input and output to and from files and streams with any number of formats, as well as components for converting, filtering, aggregating, searching, and replacing entries. Yet other components let you debug jobs and log messages and results. In the event that the above-mentioned resources are not sufficient for what you have in mind, you can even program your own components in Java.