Getting started with OpenStack

In the Stacks

OpenStack has marginalized its rivals with an impressive pervasiveness. Although Eucalyptus and CloudStack were technically on par with OpenStack, today they play only a minor role. The technical body of OpenStack hardly justified the attention it got in the early days, but the solution has now matured significantly, especially in the last two years: Many problems that used to make life difficult for admins have been eliminated, and the biannual OpenStack conferences show that some major corporations now rely on OpenStack.

The greatest practical challenge is OpenStack's complexity. When you build a cloud environment with several hundred or several thousand nodes, most concepts that are fine for conventional data centers no longer apply, both when planning the physical environment (e.g., structural conditions, racks, servers, hardware, network, and power) and when choosing the software that provides the core functionality.

The complexity of the construct means that getting started with OpenStack requires much education and practice, and the path to the first OpenStack installation running on virtual machines (VMs) as a test setup can be long and fraught with pitfalls.

The first part of this series introduces the most important OpenStack components and the methods that are the current defaults for deploying OpenStack. Part two takes a hands-on approach and describes the OpenStack installation on VMs based on Metal as a Service (MaaS) and Canonical's own automation solution, Juju. Finally, the third part deals with transferring what you have learned into practice: What can be observed in large OpenStack installations in terms of daily life at the data center? How can high availability be achieved? What options are available for software-defined networking (SDN) and software-defined storage (SDS)?

An Entire Cosmos of Components

When talk turns to OpenStack, you might intuitively think of just one big lump of software. However, OpenStack is a collection of many programs (Figure 1) that share communication channels in the background and have one important element in common: The entire OpenStack source code is written in Python.

![Although OpenStack comprises many more services than those shown here, six components are enough for a basic cloud: Keystone (Identity), Glance (Image), Nova (Compute), Neutron (Network), Cinder (Block Storage), and Horizon (Dashboard) [1].](images/openstack-workshop-1_1.png)

What exactly is a part of OpenStack and what is not is no longer clear: In the early days of the project, the term was reserved as a trademark for the services officially referred to as the core services. A single component only became part of the core after spending a while in incubator status, because it needed to be backed by a large community. The OpenStack Technical Committee officially decides when to include a component in the core services.

The Big Tent initiative put an end to that setup; now, a component only needs to promise to adhere to some basic rules to be considered a part of OpenStack. The original idea behind the Big Tent was to increase innovation within the project, and the developers have achieved this goal, with more than 30 components now. Fears that the Big Tent would dilute the OpenStack brand with immature services have not materialized – or at least not yet.

However, distinctions are still made between the collection of core services and ornamental accessories. To provide useful services with an OpenStack cloud, you need six components: Keystone, Glance, Nova, Neutron, Cinder, and Horizon. The six names are code names; each OpenStack service also has an official name that is hardly ever used, even though it would make the function of the service easier to guess. Glance, for example, is officially the Image service. Note also that individual OpenStack components are typically not single programs but are in turn divided into several services (e.g., Glance has an API and a registry service).

Support Services

Before I look into the OpenStack components, I'll look at two services that can be found in practically every OpenStack production cloud: the message broker RabbitMQ and MySQL. The OpenStack components use MySQL wherever they need persistent metadata (i.e., run-time data caused by user interaction or administrator intervention, such as the created users or the active VMs).

The need for RabbitMQ is slightly more complicated. Why does cloud software need a remote procedure call (RPC) service in the background? According to OpenStack developers, the individual service components need to communicate with one another across node boundaries. A good example of this is Nova, the virtualization service in OpenStack: A command to create a new VM reaches its API component and creates a new VM on an arbitrary hypervisor node. The communication between Nova's individual services is handled via the Advanced Message Queuing Protocol (AMQP) offered by RabbitMQ.
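The request-and-reply pattern described above can be illustrated with a minimal sketch. It does not use RabbitMQ or any OpenStack library: An in-process `queue.Queue` stands in for the broker, and the function names, the message layout, and the host name `node-17` are assumptions chosen purely for illustration.

```python
import queue
import threading
import uuid

# Stand-in for a broker queue; in a real cloud this would live in RabbitMQ.
compute_queue = queue.Queue()


def api_create_vm(name):
    """Hypothetical API side: publish a request, then wait for the reply."""
    reply_queue = queue.Queue()  # private reply channel for this one call
    message = {
        "id": str(uuid.uuid4()),
        "method": "create_vm",
        "args": {"name": name},
        "reply_to": reply_queue,
    }
    compute_queue.put(message)
    return reply_queue.get(timeout=5)


def compute_worker(host):
    """Hypothetical compute side: consume one request and answer it."""
    message = compute_queue.get()
    result = {
        "id": message["id"],
        "status": "ACTIVE",
        "host": host,
        "name": message["args"]["name"],
    }
    message["reply_to"].put(result)


# The worker plays the role of a service on another node.
worker = threading.Thread(target=compute_worker, args=("node-17",), daemon=True)
worker.start()
reply = api_create_vm("web-01")
worker.join()
print(reply["name"], "runs on", reply["host"])
```

The point of the sketch is the decoupling: The API side never calls the compute side directly; it only knows the queue, which is why the two can live on different nodes once a real broker sits in between.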

Alternatives to RabbitMQ and MySQL are possible. Because access to RPC relies on an internal Python library that all services in OpenStack use and that supports the Apache messaging service Qpid, RabbitMQ could be replaced with Qpid without any problems. Similarly, MySQL could be replaced by PostgreSQL without difficulty. In practice, though, MySQL and RabbitMQ dominate the scene.

Keystone: The Bouncer

The Keystone service is responsible in OpenStack for everything that has to do with user authentication and authorization. Keystone joined OpenStack far later than Nova or Glance, but it is essential. Keystone is only a single application: The service is designed as a RESTful API, which all OpenStack services use, that can be addressed using HTTP commands. APIs are OpenStack's standard approach for responding to requests from users or other components.

Keystone's main task is to issue tokens to clients: A token is a temporarily valid string that a user can use to authenticate against other services. When a user asks Keystone for a token with a valid combination of username and password, they can then embed the token as a header in HTTP requests to other services. Keystone can do even more. In the background, the service can connect to LDAP, and it is also used for authentication by the OpenStack components themselves: If a service wants to send a request to another service, it needs a valid token, like any other client (Figure 2).

![Keystone governs authentication and authorization in OpenStack using tokens [2].](images/openstack-workshop-1_2.png)
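The token exchange can be sketched in a few lines of Python. The sketch assumes version 3 of the Identity API, in which a token is requested with a POST to `/v3/auth/tokens` and returned in the `X-Subject-Token` response header; the helper names, the `Default` domain, and the Keystone URL passed to `fetch_token` are assumptions for illustration. In the request body, `project` is the API's term for what this article calls a client.

```python
import json
import urllib.request


def build_token_request(username, password, project, domain="Default"):
    """Build the JSON body for a password-based, project-scoped token request."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            "scope": {
                "project": {"name": project, "domain": {"name": domain}}
            },
        }
    }


def fetch_token(keystone_url, body):
    """POST the body to Keystone and return the token from the response header."""
    request = urllib.request.Request(
        keystone_url.rstrip("/") + "/v3/auth/tokens",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.headers["X-Subject-Token"]


def auth_headers(token):
    """Headers that carry the token in requests to another OpenStack API."""
    return {"X-Auth-Token": token}
```

Against a running Keystone, `fetch_token("http://controller:5000", build_token_request("alice", "secret", "demo"))` would return the token string, where the host name and credentials are placeholders. The result of `auth_headers` is then added to every request sent to Nova, Glance, and the other services.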

Keystone is internally organized along the lines of users, clients (called tenants or projects in OpenStack terminology), and roles. The users belong to a specific client and have a specific role within this client. At their sole discretion, admins determine the roles, whose permissions are set at the level of each individual service.
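The relationship between users, clients, and roles can be modeled as a simple lookup. This is a sketch of the idea only, not Keystone's data model or policy engine; the assignments, the action names, and the `is_allowed` helper are hypothetical.

```python
# Hypothetical role assignments: (user, client) -> role
ROLE_ASSIGNMENTS = {
    ("alice", "acme"): "admin",
    ("bob", "acme"): "member",
}

# Hypothetical policy of one service: action -> roles allowed to perform it
COMPUTE_POLICY = {
    "server:create": {"admin", "member"},
    "server:force_delete": {"admin"},
}


def is_allowed(user, client, action, policy):
    """Return True if the user's role within the client permits the action."""
    role = ROLE_ASSIGNMENTS.get((user, client))
    return role is not None and role in policy.get(action, set())


print(is_allowed("bob", "acme", "server:create", COMPUTE_POLICY))
print(is_allowed("bob", "acme", "server:force_delete", COMPUTE_POLICY))
```

Because each service carries its own policy table, the same role can mean something different in the compute service than in the storage service, which mirrors the per-service permissions mentioned above.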

Glance: Inconspicuous

The Glance Image service handles a very important task in OpenStack, although it is quite inconspicuous. Because you can hardly expect users in clouds to install an operating system from scratch for each new VM, Glance provides prebuilt, preconfigured hard disk images. Users download these images to their virtual disks for new VMs and thus have an installed and running system within a few seconds. The cloud-init service can then be used to pass various configuration parameters to the VM.

As mentioned earlier, Glance comprises the API and registry. The service can handle multiple formats for virtual hard disks and connect directly to different storage systems, such as Ceph or GlusterFS, which then help Glance distribute its images.

Glance connects seamlessly with the Nova component. When a user configures and starts a new VM, they thus only need to select the appropriate operating system from a list – Nova and Glance then negotiate the details of commissioning in the background.

Nova: The Veteran

OpenStack Nova is the oldest OpenStack service and, in a way, the primordial soup from which OpenStack emerged in its present form. OpenStack was initially a joint venture between the Rackspace hosting service and NASA. Although NASA has discontinued its commitment to the cloud environment, it is still responsible for the first versions of Nova.

Simply put, Nova in OpenStack takes care of everything that has to do with the management of VMs: creating new VMs, deleting old VMs, and starting and stopping them, including responses to requests by users or admins. Nova consists of a wide range of services, with the API, the Scheduler, and the Compute component being the most important services.

The API fields all commands from users and components and processes them. If an incoming command requires that a new VM be created, the API first sends the command to the Scheduler. The Scheduler keeps track of the available compute nodes and the available resources and chooses a suitable host. On the host, Nova's Compute component then launches and starts up the new VM locally.
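The Scheduler's job of choosing a suitable host can be sketched as a filter step followed by a ranking step. The `Host` fields, the ranking by free RAM, and the sample numbers are assumptions for illustration; the real Nova Scheduler is configurable and considers far more criteria.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_vcpus: int
    free_ram_mb: int


def pick_host(hosts, vcpus, ram_mb):
    """Filter out hosts that are too small, then prefer the most free RAM."""
    candidates = [
        h for h in hosts if h.free_vcpus >= vcpus and h.free_ram_mb >= ram_mb
    ]
    if not candidates:
        raise RuntimeError("no valid host found")
    return max(candidates, key=lambda h: h.free_ram_mb)


hosts = [
    Host("node-1", free_vcpus=2, free_ram_mb=4096),
    Host("node-2", free_vcpus=8, free_ram_mb=16384),
    Host("node-3", free_vcpus=16, free_ram_mb=8192),
]
chosen = pick_host(hosts, vcpus=4, ram_mb=8192)
print(chosen.name)
```

Here `node-1` is filtered out because it has too few vCPUs, and `node-2` wins over `node-3` because it has more free RAM. The Compute component on the chosen host would then receive the request to start the VM.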

The OpenStack developers deserve praise for not reinventing the wheel in the Compute component, but relying on existing technology. For example, if you use KVM as the hypervisor, Nova Compute relies on libvirt to manage the VMs. Nova supports more than just KVM: Xen and various container technologies are also included, along with more commercial approaches like VMware ESX or Microsoft Hyper-V.

Neutron: Connected

Most VMs need access to a network. In contrast to conventional virtualization environments, simply using a bridge to loop through one of the host's networks in a cloud is not sufficient. In clouds of all types, and especially large-scale public clouds, the basic assumption is that VMs from several customers can run on the same host without interfering with one another – anything else would simply not scale.

SDN is therefore mandatory in a cloud. Various implementations of SDN are available on the market: Open vSwitch, along with several commercial solutions based on it (e.g., MidoNet, PLUMgrid), and alternatives such as OpenContrail.

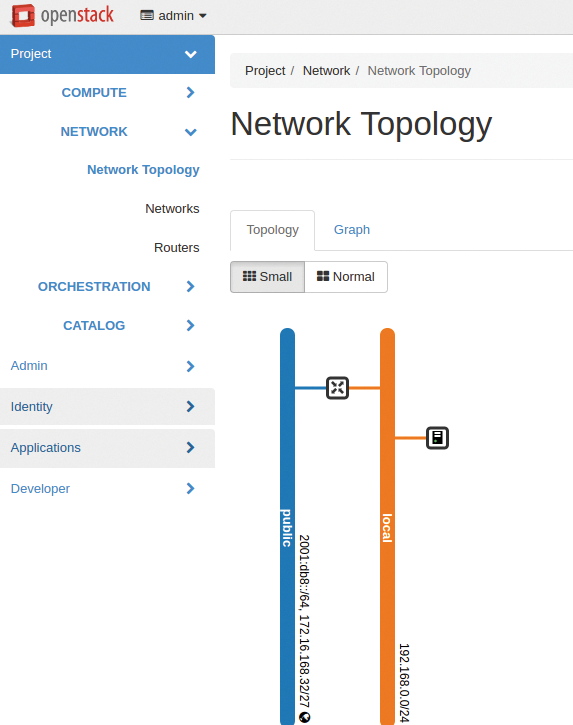

OpenStack Neutron (Figure 3) bridges the gap between OpenStack and the various SDN flavors. Basically, Neutron is not much more than an API that has a plugin interface. A plugin for a specific SDN style can then be downloaded via the interface. Neutron itself has a few SDN implementations on board (e.g., Open vSwitch). The hosts in the setup then run various additional services (aka agents) associated with Neutron that store the appropriate SDN configuration locally.
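The idea of an API with a plugin interface can be sketched as follows. The class names, the registry, and the returned dictionaries are hypothetical and do not reproduce Neutron's actual plugin classes; the sketch only shows how one API can hand the same call to interchangeable SDN back ends.

```python
from abc import ABC, abstractmethod


class NetworkPlugin(ABC):
    """Hypothetical contract that every SDN back end has to fulfill."""

    @abstractmethod
    def create_network(self, name):
        """Create a virtual network and return a description of it."""


class OpenVSwitchPlugin(NetworkPlugin):
    def create_network(self, name):
        return {"name": name, "backend": "openvswitch"}


class VendorPlugin(NetworkPlugin):
    def create_network(self, name):
        return {"name": name, "backend": "vendor-sdn"}


# Registry that maps a configuration value to a plugin class
PLUGINS = {"openvswitch": OpenVSwitchPlugin, "vendor": VendorPlugin}


class NetworkAPI:
    """The API itself stays the same; only the loaded plugin changes."""

    def __init__(self, plugin_name):
        self._plugin = PLUGINS[plugin_name]()

    def create_network(self, name):
        return self._plugin.create_network(name)


api = NetworkAPI("openvswitch")
network = api.create_network("tenant-net")
print(network)
```

Switching the SDN technology then comes down to passing a different plugin name, while clients of the API keep issuing the same calls.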

Neutron is regarded as one of the most complex OpenStack services. Initially, in particular, the interrelations between the agents and services are difficult to comprehend. However, Neutron is now essential for complex setups, with practically no alternative. Development of the legacy Nova Network component, which took care of SDN and was part of Nova, stopped some time ago, and Nova Network will soon be disappearing from Nova.

Cinder: Make Way!

Like SDN, SDS is a very important issue in clouds. That said, the type of storage used does not play a particularly important role: Whether the data ultimately ends up on a Ceph cluster or on a storage area network (SAN) connected via Fibre Channel or a network filesystem (NFS) is irrelevant, although it is important for the VMs running on the cluster to be able to use the existing storage.

In an example with KVM, this means that a storage segment is provisioned on request by a user and is then pushed out to the VM via the KVM hotplug functionality. OpenStack Cinder's realm starts precisely here: The service manages existing storage and sets it up so that Nova can connect it to active VMs.

Cinder also comprises several services: an API, a scheduler, and a storage component that manages storage in the background. The scheduler decides which back-end storage to use to meet user requests. Drivers can connect various types of storage: The supported solutions range from the aforementioned Ceph, through other SDS solutions such as GlusterFS, to almost all SAN systems by NetApp, Dell/EMC, or Hewlett-Packard.

Horizon: Visualize

When it comes to the last component on the list, whether Horizon is needed for an OpenStack installation or not is a moot point. After all, each OpenStack service comes with its own API and a private command-line client for each API; OpenStack thus can be controlled fully through a command-line interface. There's just one catch: Inexperienced users are quickly overwhelmed by the command line. A cloud provider cannot assume that only command-line gurus will be using their service.

The solution is a GUI in the style of a self-service portal that lets users click together VMs, networks, and storage on demand. This option is exactly what Horizon, officially dubbed the OpenStack Dashboard, provides. Horizon is based on Django (i.e., web-optimized Python) and is one of the OpenStack components that has been around from the outset.

Horizon's functionality is quite remarkable: Depending on the assigned authorizations, you can manage user access, VMs, storage, and virtual networks and take advantage of additional features.

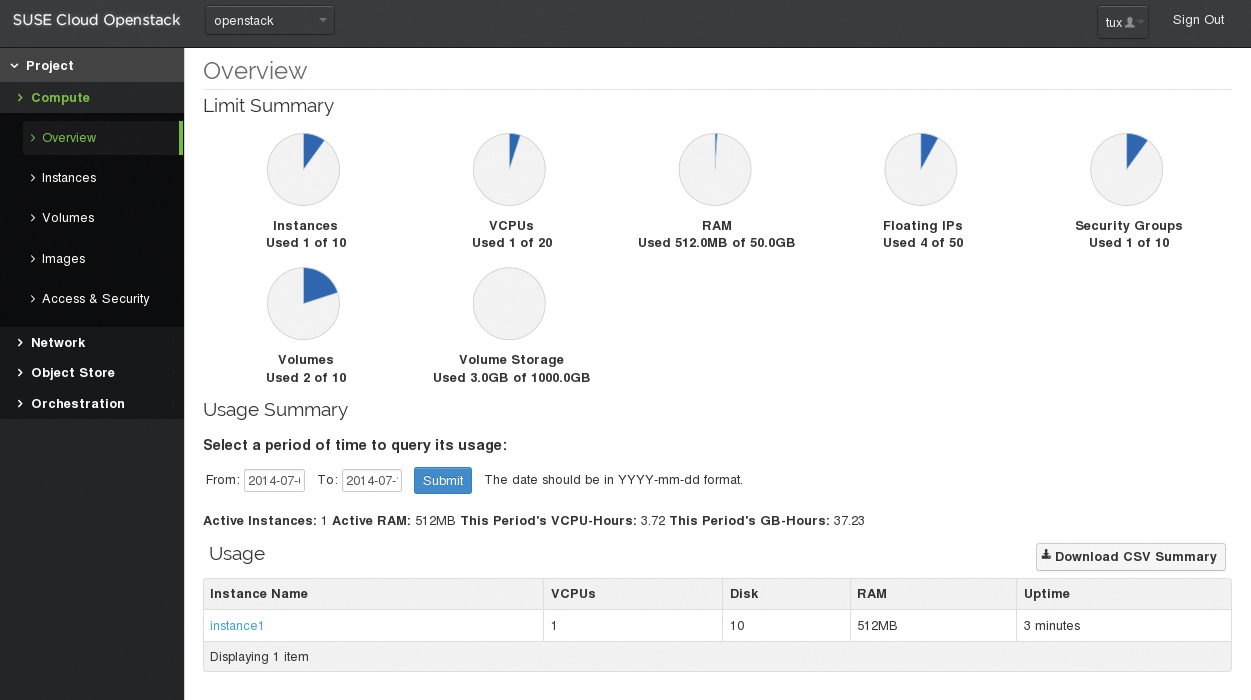

The built-in theme interface is especially exciting for providers: Using an appropriate theme, you can modify Horizon's look to reflect a company's corporate identity, thus creating a homogeneous solution. Manufacturers of ready-made platforms based on OpenStack (e.g., SUSE, Canonical, and Red Hat) demonstrate what this looks like, with dashboards resplendent in green (Figure 4), bright orange, or red.

What Else?

The OpenStack components featured here together form the base, but in practice, you will want to use a number of other services in an OpenStack cloud. Examples include Heat for orchestration or Ceilometer, which provides billing data and connects to existing billing systems. Other services, such as Trove for Database as a Service (DBaaS), Magnum for Container as a Service (CaaS), or Designate for DNS as a Service (DNSaaS), regularly attract the interest of admins.

If you are just getting started with OpenStack, you will want to restrict the services to what is absolutely necessary, not least because a working platform design will let you retrofit such services without much difficulty later on.

Crucial Question: Deployment

OpenStack newcomers are confronted with a plethora of information relating to the individual components and will often feel like the proverbial duck in a thunderstorm. The predominant question is always the same: How can I roll out and operate a monster like OpenStack meaningfully? It quickly becomes clear that manual configuration, and manually installing the packages needed for OpenStack on each host, is not an option. After all, large OpenStack clouds will include hundreds of nodes, so messing around with them individually is ruled out.

The major manufacturers are aware of this problem and have come up with various solutions designed to make life easier for admins. SUSE, Red Hat, and Canonical stand out from the crowd with tools for automatic OpenStack installation.

SUSE: Early Adopter

SUSE was one of the first companies to jump on the OpenStack bandwagon and for years has actively provided its SUSE OpenStack Cloud product; unsurprisingly, SUSE Cloud is based on the SUSE Linux Enterprise Server (SLES). For its cloud, SUSE not only delivers packages for all relevant OpenStack services for SLES, but a deployment solution based on Crowbar, as well. Crowbar in turn relies on the Chef automation solution and supports bare-metal deployment.

New hosts in the setup rely on PXE to boot a basic system and for inventorying. Using the GUI, the admin then assigns roles that are tied to certain services from the OpenStack collection to a number of hosts. Finally, the admin defines the details of the setup and triggers the OpenStack rollout. SUSE Cloud offers a convenient starting point – if you are already used to working with SUSE.

Red Hat Deferred

Unlike SUSE, Red Hat took a very long time to commit fully to OpenStack, but then the Raleigh-based company started to pick up speed, hiring OpenStack developers en masse; today, it is a driving force behind OpenStack development.

Dubbed Red Hat OpenStack Platform (RHOSP), this unwieldy-sounding product is based on Red Hat Enterprise Linux (RHEL) and comprises a variety of OpenStack-centered tools and utilities that facilitate the installation and the administration of the cloud solution for the user. The bundle includes a separate GUI along with Packstack, a component that rolls out OpenStack with the use of the Puppet automation solution.

In terms of core functionality, SUSE Cloud and RHOSP differ only by a few details. The decision in favor of one solution or the other will ultimately depend on whether you already have experience dealing with SUSE or Red Hat.

Closely Interlinked

The third member of the group, Canonical, has maintained a very close relationship with the developers of OpenStack since the beginning. It was Canonical lead Mark Shuttleworth himself who some time ago replaced Eucalyptus with OpenStack in Ubuntu and thus partly triggered the OpenStack hype. It is not a coincidence that OpenStack releases usually happen just a few days before the Ubuntu releases in April and October.

Technically, the Ubuntu cloud is solid: On the basis of MaaS and Juju, it provides the same functionality as that offered by SUSE and Red Hat. Moreover, admins of Ubuntu systems can install a normal Ubuntu and put together their own OpenStack deployment using official Ubuntu packages without paying any license fees. Although users then are without support, this combination is very popular: Many OpenStack setups rely on Ubuntu to keep this freedom.

Mirantis: The Underdog

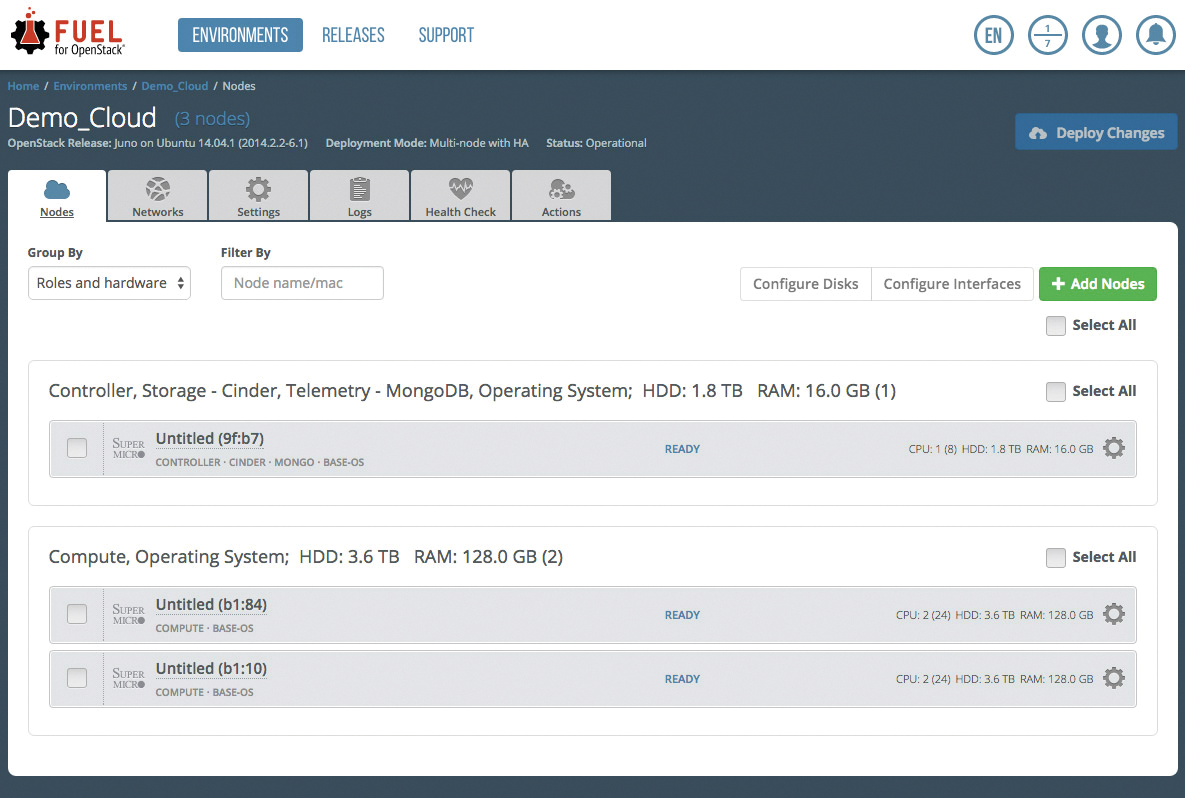

Mirantis takes its slogan "Pure Play OpenStack" seriously and even offers its own deployment tool, called Fuel (Figure 5). Fuel gives admins a simple approach to installing Mirantis' own OpenStack packages on both Ubuntu and Red Hat systems.

However, Fuel is currently in a state of flux: About four months ago, Mirantis announced that it wanted to rewrite the tool completely on the basis of Kubernetes and brought Google and Intel on board for the purpose. Until further notice, it does not seem to be a good idea to establish a new setup on Fuel.

Genuine Pure Play Does Not Exist

Incidentally, when Mirantis refers to "Pure Play OpenStack," it primarily means that OpenStack users with Mirantis are not automatically dependent on one of the major distributors. Pure Play OpenStack is not currently offered by any of the major providers: SUSE, Red Hat, Canonical, and Mirantis each enrich their OpenStack packages with various patches, which means, on the one hand, backported fixes from later versions of OpenStack and, on the other, new functions.

However, all manufacturers set great store by unimpeded compatibility with the official OpenStack versions of the services – probably because the OpenStack project can put the thumbscrews on the manufacturers at this point: With the help of RefStack [3], the APIs of a running OpenStack installation can be tested for compatibility with the requirements of the OpenStack project. The higher the official compatibility, the better things look for the cloud operator.

Future

In the next installment, I will introduce practical experience with OpenStack. On the basis of multiple VMs, the goal will be to install MaaS and Juju on Ubuntu to create a real OpenStack cloud on VMs. Although Fuel does this best, its upcoming rewrite encouraged me to opt for Ubuntu.