Highly available Hyper-V in Windows Server 2016

Safety Net

Many of the new features in Windows Server 2016 relate to Hyper-V. Microsoft has introduced numerous changes to make the product even more interesting for companies that have not used virtualization thus far or are running an older version of Hyper-V. To achieve high availability of virtual machines (VMs) on the Hyper-V platform, Microsoft has relied from the outset on the failover cluster services built into Windows Server. With each version of Windows, Microsoft has continued to develop these functions to optimize the operation of VMs and keep them highly available.

The following changes to the failover cluster services were integrated into Windows Server 2016:

- Virtual Machine Compute Resiliency with cluster quarantine and isolation.

- Cluster OS Rolling Upgrade.

- Workgroup and Multi-Domain clusters, including Cloud Witness with Azure Storage account.

- Host Guardian Service to isolate VMs for different tenants.

- Site-aware clusters with failover affinity, storage affinity, and cross-site heartbeating.

- An improved cluster log with TimeZone, DiagnosticVerbose event channel, and Active Memory Dump.

Two New Cluster Modes

Nodes in a failover cluster regularly communicate with each other and exchange status and availability information through a heartbeat. If a cluster node is no longer working reliably, cluster failover policies decide what to do with the resources (roles) hosted on that cluster node. Normally, a failover of cluster resources to another cluster node takes place.

In Windows Server 2016, Microsoft has introduced two new cluster modes. The first, cluster quarantine mode, targets cluster nodes that are unstable. If a cluster node repeatedly shows irregularities in communication and leaves the cluster (by default, three times within an hour), all of its virtual machines are moved to other cluster nodes by live migration, and the node is kept out of the cluster for two hours. A maximum of 20 percent of the cluster nodes can be in quarantine at the same time.

In the second mode, cluster isolation, a node that loses contact with the rest of the cluster is no longer treated as an active cluster member. Machines running on the cluster node continue to run, but no cluster resources can be moved to the isolated node by any other cluster node. By default, the node can remain in this state for up to four minutes; if it has not rejoined the cluster by then, its VMs fail over to other nodes. The cluster administrator can use PowerShell cmdlets to configure both modes.
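The settings behind both modes are exposed as cluster common properties. The following is a minimal sketch, assuming the FailoverClusters PowerShell module on a Windows Server 2016 cluster node; the node name `HV-NODE1` is hypothetical, and the values shown are what I understand to be the defaults.

```powershell
# Show the current compute resiliency and quarantine settings
Get-Cluster | Format-List ResiliencyLevel, ResiliencyDefaultPeriod, QuarantineThreshold, QuarantineDuration

# Seconds an isolated node may keep running its VMs before they fail over
(Get-Cluster).ResiliencyDefaultPeriod = 240

# Number of ungraceful exits within an hour before a node is quarantined
(Get-Cluster).QuarantineThreshold = 3

# Quarantine duration in seconds (7200 = two hours)
(Get-Cluster).QuarantineDuration = 7200

# Bring a quarantined node back before the quarantine expires
# (HV-NODE1 is a hypothetical node name)
Start-ClusterNode -Name "HV-NODE1" -ClearQuarantine
```

Test changed values in a lab first: a very short resiliency period effectively restores the pre-2016 behavior of failing over immediately.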

Witnesses in the Cloud

The witness is one of the basic functions of a Windows failover cluster. When the cluster has an even number of nodes, the witness casts the tie-breaking vote that decides which nodes keep the cluster resources running. The classic witness in a cluster is the disk witness, in which one logical unit (LUN) handles the witness's functionality. With Exchange Server 2007, Microsoft introduced the file share witness.

However, both witness configurations share a problem: They physically need to reside in a data center that is running cluster nodes. An environment with multiple data centers and stretch clusters can have a single point of failure (SPoF) if a data center fails or is not reachable by the other data center. To prevent this, you can configure Windows Server 2016 to use a Cloud Witness in Microsoft Azure (Figure 1). To do so, you will need to set up an active Microsoft Azure subscription and an Azure storage account. The configuration of the Cloud Witness can be handled in PowerShell or in the Failover Cluster Manager.
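In PowerShell, the Cloud Witness is configured with `Set-ClusterQuorum`. This is a minimal sketch that assumes the Azure storage account already exists; the account name and access key are placeholders, not real values.

```powershell
# Placeholders: replace with your own storage account name and its access key
$accountName = "mystorageaccount"
$accessKey   = "<primary-access-key>"

# Configure the cluster to use a Cloud Witness in Azure
Set-ClusterQuorum -CloudWitness -AccountName $accountName -AccessKey $accessKey

# Check which witness the cluster is now using
Get-ClusterQuorum
```

The cluster nodes need outbound HTTPS access to the Azure storage endpoint for the witness to work.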

Rolling Cluster Update

To offer a smooth transition for customers with existing Windows Server 2012 R2 failover cluster deployments, Microsoft supports mixed-mode clusters that contain both Windows Server 2012 R2 and Windows Server 2016 nodes. You can add new cluster nodes with Windows Server 2016 to an existing 2012 R2 failover cluster and move VMs to the new cluster nodes using live migration. The older cluster nodes can then be removed from the cluster, upgraded to Windows Server 2016, and added back to the cluster.

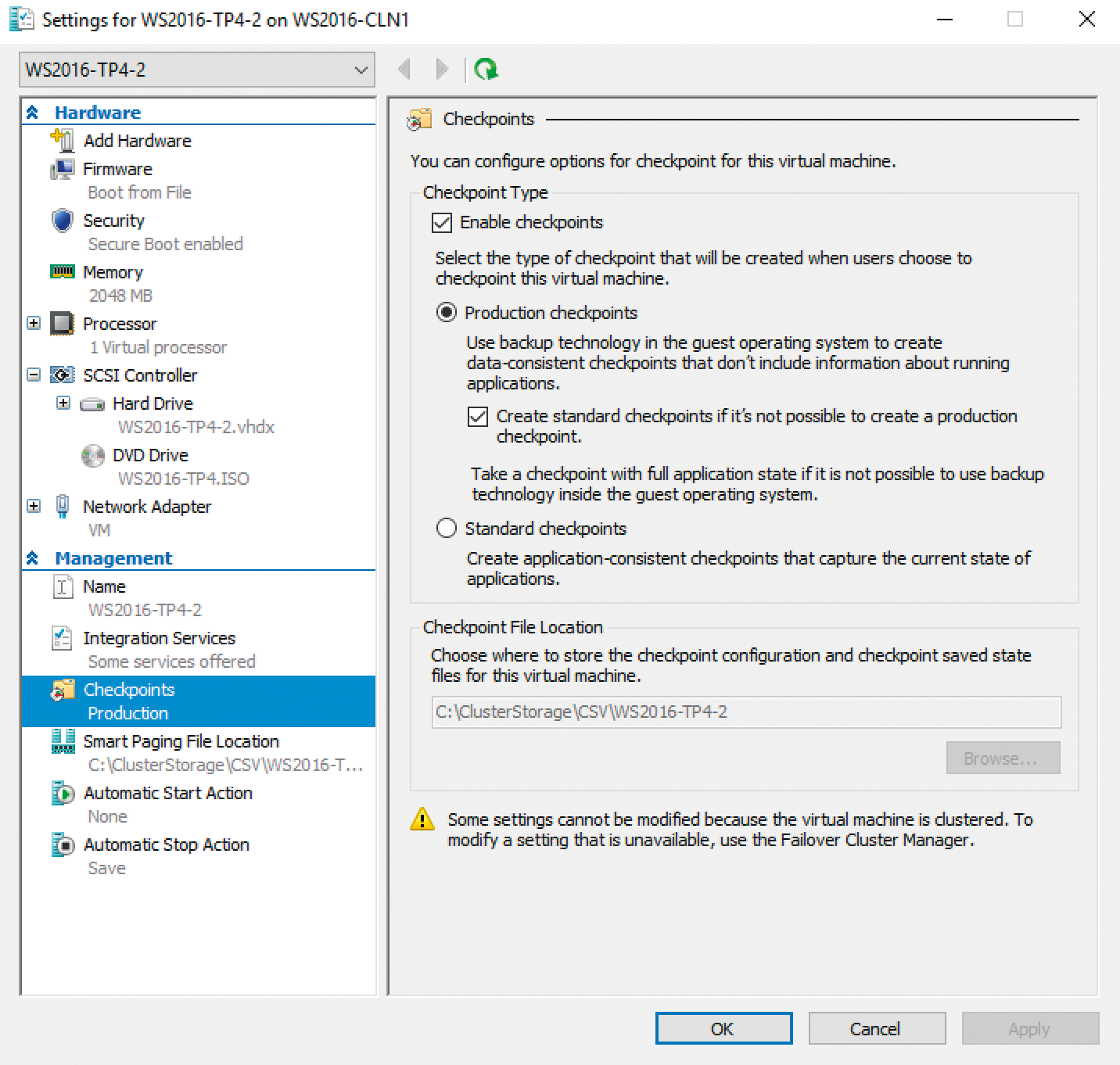

As soon as all the cluster nodes are running Windows Server 2016, you can raise the functional level of the cluster with the help of PowerShell. From this point on, you can no longer add cluster nodes running Windows Server 2012 R2. You can then raise the VM configuration version with the Update-VMVersion PowerShell cmdlet (called Update-VmConfigurationVersion in the preview releases) to take advantage of new features like production checkpoints (Figure 2) and the modified VM configuration file functions.
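The final steps of the rolling upgrade might look like the following sketch. It assumes that every node already runs Windows Server 2016; the VM name `VM01` is hypothetical. Both upgrades are one-way operations, so check the state of the cluster first.

```powershell
# Show the current functional level
# (8 = Windows Server 2012 R2, 9 = Windows Server 2016)
Get-Cluster | Select-Object Name, ClusterFunctionalLevel

# Preview the change, then raise the cluster functional level
Update-ClusterFunctionalLevel -WhatIf
Update-ClusterFunctionalLevel

# List the configuration version of each VM on this host
Get-VM | Select-Object Name, State, Version

# A VM must be shut down before its configuration version can be raised
# (VM01 is a hypothetical VM name)
Stop-VM -Name "VM01"
Update-VMVersion -Name "VM01"
Start-VM -Name "VM01"
```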

Hyper-V Failover Cluster Setup

Installing a Windows Server 2016 Hyper-V failover cluster is not rocket science. Microsoft has simplified the steps with each version of Windows so that even inexperienced administrators can install a failover cluster. Because the VMs in a failover cluster must be reachable by all cluster nodes, shared storage is necessary: It can be deployed, for example, via Fibre Channel, iSCSI, or SMBv3. For the example in this article, I will be using the iSCSI protocol to provide the LUNs for the cluster shared volume (CSV) and the quorum (witness).

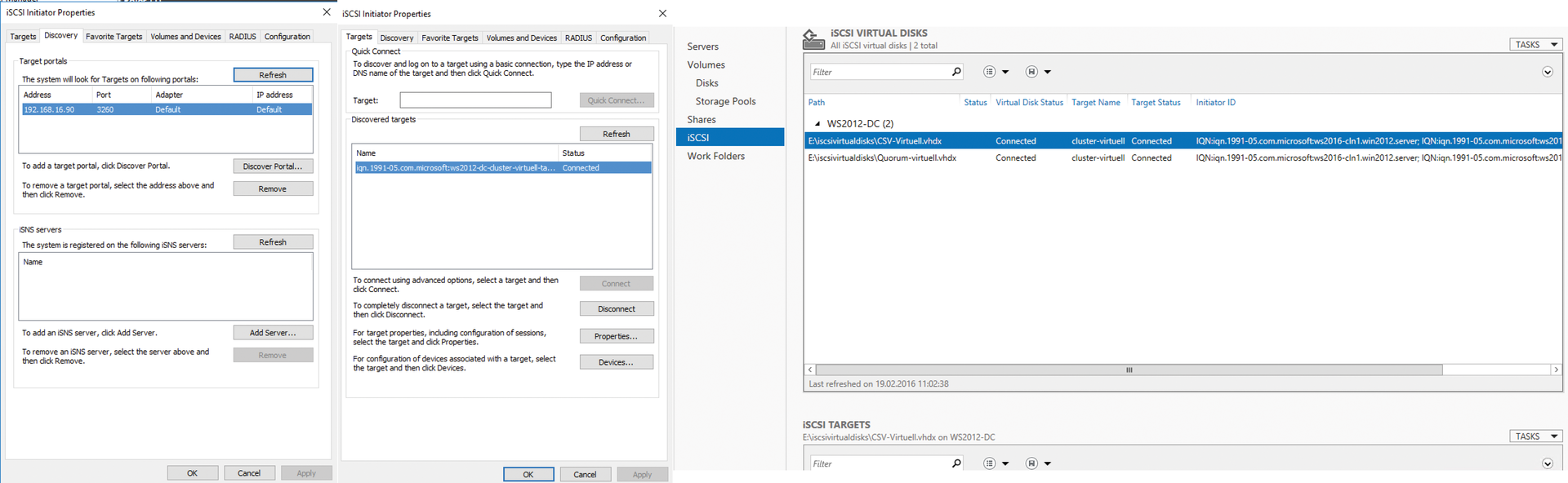

iSCSI storage can be provided by a dedicated storage device or by a server running Windows Storage Server or Windows Server 2012. On a Windows server, you need to install the iSCSI Target Server role service with the help of Server Manager or PowerShell. In Server Manager, you navigate to File and Storage Services | iSCSI, click the Tasks drop-down, select New iSCSI Virtual Disk, and define the path to the virtual disk.

When you create the iSCSI virtual disk, you need to define its name and size. You have the choice between fixed-size and dynamically expanding disks; select fixed size as the deployment type. In the next step, click the New iSCSI Target radio button, enter a name for the target, and add the future cluster nodes as initiators, either by querying the initiator computer or by entering its MAC address, DNS name, or IQN.
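The same target setup can be scripted. This is a sketch only, assuming a target server running Windows Server 2012 R2 or newer (where `-UseFixed` and the VHDX format are available); the paths, sizes, target name, and initiator IQNs are hypothetical examples.

```powershell
# Install the iSCSI Target Server role service
Install-WindowsFeature -Name FS-iSCSITarget-Server -IncludeManagementTools

# Create fixed-size virtual disks for the quorum and the CSV
# (paths and sizes are examples)
New-IscsiVirtualDisk -Path "D:\iSCSI\Quorum.vhdx" -SizeBytes 1GB -UseFixed
New-IscsiVirtualDisk -Path "D:\iSCSI\CSV1.vhdx" -SizeBytes 500GB -UseFixed

# Create the target and allow the future cluster nodes to connect by IQN
# (the IQNs are hypothetical)
New-IscsiServerTarget -TargetName "HVCluster" -InitiatorIds @(
    "IQN:iqn.1991-05.com.microsoft:hv-node1.contoso.local",
    "IQN:iqn.1991-05.com.microsoft:hv-node2.contoso.local"
)

# Map both virtual disks to the target
Add-IscsiVirtualDiskTargetMapping -TargetName "HVCluster" -Path "D:\iSCSI\Quorum.vhdx"
Add-IscsiVirtualDiskTargetMapping -TargetName "HVCluster" -Path "D:\iSCSI\CSV1.vhdx"
```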

The storage area network (SAN) is now available on the cluster nodes, so you can start the iSCSI initiator there. On first launch, you are asked whether the iSCSI initiator service should start automatically from now on. Confirm this prompt, then click on the Discovery tab, select Discover Portal, and specify the IP address or DNS name of the iSCSI target server (Figure 3). The iSCSI targets should now appear in the Targets tab. Click Connect and open a persistent connection to the iSCSI target. If the target can be reached by multiple paths, also enable the multipath I/O (MPIO) functionality. Install the MPIO software from the storage manufacturer in advance if Microsoft's own MPIO software is not sufficient.
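On each cluster node, the initiator side can also be configured from PowerShell. A minimal sketch, assuming the target portal address `192.168.10.10` (a hypothetical value) and Microsoft's in-box MPIO module:

```powershell
# Start the iSCSI initiator service now and on every boot
Set-Service -Name MSiSCSI -StartupType Automatic
Start-Service -Name MSiSCSI

# Register the target portal (the address is an example)
New-IscsiTargetPortal -TargetPortalAddress "192.168.10.10"

# Connect to all discovered targets and make the connections persistent
Get-IscsiTarget | Connect-IscsiTarget -IsPersistent $true

# Optional: in-box MPIO for targets reachable over several paths.
# The feature may require a restart before the MPIO cmdlets work; when
# using MPIO, connect with -IsMultipathEnabled $true instead.
Install-WindowsFeature -Name Multipath-IO
Enable-MSDSMAutomaticClaim -BusType iSCSI
```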

Now bring the new disks online on just one cluster node in Disk Management, initialize them, and format them. You will want to initialize the CSV disk with a GUID partition table (GPT); the quorum disk can be initialized with a master boot record (MBR). Format the quorum disk with NTFS and the default allocation unit size. The CSV disk needs to be formatted with the Resilient File System (ReFS) and an allocation unit size of 64KB.
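The disk preparation can be sketched with the Storage module cmdlets. The disk numbers 1 and 2 and the volume labels are assumptions; check the output of `Get-Disk` before running anything destructive.

```powershell
# Identify the new iSCSI disks (disk numbers below are assumptions)
Get-Disk | Where-Object BusType -eq "iSCSI"

# Quorum disk: MBR, NTFS, default allocation unit size
Set-Disk -Number 1 -IsOffline $false
Initialize-Disk -Number 1 -PartitionStyle MBR
New-Partition -DiskNumber 1 -UseMaximumSize -AssignDriveLetter |
    Format-Volume -FileSystem NTFS -NewFileSystemLabel "Quorum"

# CSV disk: GPT, ReFS, 64KB allocation unit size
Set-Disk -Number 2 -IsOffline $false
Initialize-Disk -Number 2 -PartitionStyle GPT
New-Partition -DiskNumber 2 -UseMaximumSize -AssignDriveLetter |
    Format-Volume -FileSystem ReFS -AllocationUnitSize 65536 -NewFileSystemLabel "CSV1"
```

If a disk comes online read-only, `Set-Disk -Number <n> -IsReadOnly $false` clears that flag before initialization.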

Connecting in the Network

For trouble-free operation and performance in a Hyper-V failover cluster, you need to pay particular attention to configuring network cards. In a cluster, you need network cards for:

- the cluster heartbeat

- management functions

- live migration

- the iSCSI network

- a Hyper-V virtual switch

For increased performance and resilience, you can and should use additional network adapters, which you combine into teams with the help of the load balancing and failover (LBFO) feature in Windows Server 2012 or newer.

Each network card should have a throughput of at least 1Gbps; 10Gbps or higher is preferable. If you use network adapters with a throughput of 10Gbps or higher, you can add them to an LBFO team and then split the team into several virtual network adapters on a Hyper-V virtual switch. This reduces the cabling overhead and gives you additional options, such as bandwidth limitation. For further information on configuring network cards in Windows Server, see the checklist on TechNet [1].
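A converged setup of this kind could be sketched as follows. All names (`NIC1`, `NIC2`, `Team1`, `ConvergedSwitch`, and the virtual adapter names) as well as the weights and the bandwidth cap are hypothetical; adjust them to your environment.

```powershell
# Combine two physical adapters into an LBFO team (adapter names are examples)
New-NetLbfoTeam -Name "Team1" -TeamMembers "NIC1", "NIC2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic

# Create a virtual switch on top of the team with weight-based bandwidth management
New-VMSwitch -Name "ConvergedSwitch" -NetAdapterName "Team1" `
    -MinimumBandwidthMode Weight -AllowManagementOS $false

# Add virtual adapters for the host's own traffic types
Add-VMNetworkAdapter -ManagementOS -Name "Management" -SwitchName "ConvergedSwitch"
Add-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -SwitchName "ConvergedSwitch"
Add-VMNetworkAdapter -ManagementOS -Name "Heartbeat" -SwitchName "ConvergedSwitch"

# Guarantee each traffic type a share of the bandwidth (weights are examples)
Set-VMNetworkAdapter -ManagementOS -Name "Management" -MinimumBandwidthWeight 10
Set-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -MinimumBandwidthWeight 30
Set-VMNetworkAdapter -ManagementOS -Name "Heartbeat" -MinimumBandwidthWeight 10

# Optionally cap live migration traffic (value in bits per second, here 4Gbps)
Set-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -MaximumBandwidth 4000000000
```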

After you have configured the network identically on all future cluster nodes, you can install the failover cluster feature on both nodes and then launch cluster validation on one cluster node. Validation tells you whether the hardware and software configuration of the cluster nodes is suitable for clustering. Microsoft only provides support for clusters that have passed validation.
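Feature installation and validation can also be run from PowerShell. A minimal sketch, assuming two nodes with the hypothetical names `HV-NODE1` and `HV-NODE2` and PowerShell remoting enabled:

```powershell
# Hypothetical node names
$nodes = "HV-NODE1", "HV-NODE2"

# Install the failover clustering feature and its management tools on both nodes
Invoke-Command -ComputerName $nodes -ScriptBlock {
    Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools
}

# Run the full set of validation tests; the cmdlet writes a report file
Test-Cluster -Node $nodes
```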

Validating the Configuration

Select both cluster nodes and click the Run all tests (recommended) radio button. If cluster validation is successful, you can then create the cluster. The cluster needs to have its own NetBIOS/DNS name and an IP address from the LAN. Set these and continue with the configuration. After a short time, the cluster should have been created. Now check whether a computer object was created in Active Directory and the appropriate DNS A record was added in the DNS Manager of the DNS server.
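As a sketch, creating the cluster and checking the results from PowerShell could look like this. The cluster name, node names, and IP address are hypothetical, and `Get-ADComputer` assumes that the Active Directory module from the Remote Server Administration Tools is installed.

```powershell
# Create the cluster with its own name and IP address (all values are examples)
New-Cluster -Name "HVCLUSTER" -Node "HV-NODE1", "HV-NODE2" -StaticAddress "192.168.1.50"

# Check that the DNS A record for the cluster name exists
Resolve-DnsName -Name "HVCLUSTER"

# Check that the computer object was created in Active Directory
Get-ADComputer -Identity "HVCLUSTER"
```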

Now, some granular adjustments to the cluster configuration are required to identify the individual components uniquely (e.g., network adapters and, later, LUNs). First, check whether the correct LUN has been configured as the quorum by clicking on Storage | Disks below the cluster object in the Failover Cluster Manager. Now the quorum LUN should be listed under Disk Witness in Quorum. Click on the properties of the quorum LUN and change the Name to Quorum in the General tab.
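The same check and rename can be done in PowerShell. This sketch assumes that the quorum disk still carries the default resource name `Cluster Disk 1`, which may differ in your cluster.

```powershell
# Show which resource currently acts as the witness
Get-ClusterQuorum

# List the physical disk resources to find the quorum LUN
Get-ClusterResource | Where-Object { $_.ResourceType.Name -eq "Physical Disk" }

# Rename the quorum disk resource ("Cluster Disk 1" is an assumed default name)
(Get-ClusterResource -Name "Cluster Disk 1").Name = "Quorum"
```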

Make sure each network has a unique name and a clearly defined function. Click on Networks in the Failover Cluster Manager, right-click a network, choose Properties, and assign a unique name. Now check whether the intended use of the network is entered correctly. The Live Migration and Heartbeat networks should have only Allow cluster network communication on this network enabled, and the management network should additionally have Allow clients to connect through this network enabled.

To enable the network for live migration, right-click the Networks node, select Live Migration Settings, and move the Live Migration network to the top of the list. If necessary, remove the other networks from the setting.
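Network names, roles, and the live migration network can also be set in PowerShell. The sketch below assumes the default names `Cluster Network 1` to `Cluster Network 3` and maps them to hypothetical new names; the `Role` values are 0 (no cluster communication), 1 (cluster communication only), and 3 (cluster and client communication).

```powershell
# Show the current networks with their roles and subnets
Get-ClusterNetwork | Format-Table Name, Role, Address

# Assign unique names (the mapping of default names is an assumption)
(Get-ClusterNetwork -Name "Cluster Network 1").Name = "Management"
(Get-ClusterNetwork -Name "Cluster Network 2").Name = "Heartbeat"
(Get-ClusterNetwork -Name "Cluster Network 3").Name = "LiveMigration"

# Set the intended use of each network
(Get-ClusterNetwork -Name "Management").Role = 3
(Get-ClusterNetwork -Name "Heartbeat").Role = 1
(Get-ClusterNetwork -Name "LiveMigration").Role = 1

# Exclude every network except LiveMigration from live migration traffic
$exclude = (Get-ClusterNetwork | Where-Object Name -ne "LiveMigration").Id -join ";"
Get-ClusterResourceType -Name "Virtual Machine" |
    Set-ClusterParameter -Name MigrationExcludeNetworks -Value $exclude
```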

In the next step, you will be assigning the CSV LUN to the cluster shared volumes in the Failover Cluster Manager by selecting the CSV LUN node (Storage | Disks) and, under Actions, clicking Add to Cluster Shared Volumes. Then, launch the File Explorer on a cluster node, navigate to C:\ClusterStorage, and change the LUN name from Volume1 to a unique name before you install the first VMs.
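In PowerShell, the same step might look like this sketch; the disk resource name `Cluster Disk 2` and the new folder name `CSV1` are assumptions.

```powershell
# Add the CSV LUN to the cluster shared volumes
# ("Cluster Disk 2" is an assumed resource name)
Add-ClusterSharedVolume -Name "Cluster Disk 2"

# Confirm that the volume is online as a CSV
Get-ClusterSharedVolume

# Give the mount point a unique name before the first VMs are stored there
Rename-Item -Path "C:\ClusterStorage\Volume1" -NewName "CSV1"
```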

More Performance for the LUN

To increase the performance of a CSV LUN, it is advisable to configure the CSV cache using PowerShell. The CSV cache improves read performance by reserving RAM as a write-through cache for read-only unbuffered I/O. A Microsoft blog [2] has more information on the CSV cache.
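On Windows Server 2012 R2 and newer, the cache size is controlled by the `BlockCacheSize` cluster property, given in megabytes. The value of 512MB in this sketch is only an example; the right size depends on how much RAM the hosts can spare.

```powershell
# Show the current CSV cache size in MB
(Get-Cluster).BlockCacheSize

# Reserve 512MB of RAM on each node for the CSV cache (example value)
(Get-Cluster).BlockCacheSize = 512
```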

Now create a VM in the failover cluster and assign an IP address before testing to see whether the failover cluster really is redundant, including:

1. Testing network card failures and LBFO functionality.

2. Testing whether another network card assumes this role in case the heartbeat connection fails.

3. Testing failure of the storage host bus adapter and continuous availability of the storage LUN.

4. Testing failure of individual cluster nodes by deliberately powering them down and checking whether the resources come online correctly on another cluster node.

5. Testing the redundancy of the network switch function.

6. Testing for failure of a storage unit in the case of mirrored storage.

Continue the configuration only if all tests complete with positive results. After you have completed the typical failover and failure safety tests, you will next want to check the live migration features. In the Failover Cluster Manager, click on the Roles node, select the VM you want to move and start the live migration from the context menu (Figure 4). If the names of the Hyper-V virtual switches are identical, live migration should complete without any trouble.
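A live migration can also be triggered from PowerShell, which is handy for repeating the test. The VM name `VM01` and the target node `HV-NODE2` in this sketch are hypothetical.

```powershell
# List the clustered VM roles and their current owner nodes
Get-ClusterGroup | Where-Object GroupType -eq "VirtualMachine"

# Live migrate the VM to another node (names are examples)
Move-ClusterVirtualMachineRole -Name "VM01" -Node "HV-NODE2" -MigrationType Live

# Confirm the new owner node
Get-ClusterGroup -Name "VM01"
```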

You can determine whether the correct network is used for live migration by starting the Task Manager, selecting the Live Migration NIC in the Performance tab, and then initiating a migration. The related traffic should be routed via this network adapter.

Manager for VMs

If you are looking to implement a larger Hyper-V environment and need a tool for central administration of all Hyper-V hosts and VMs, check out the Microsoft System Center 2016 Virtual Machine Manager (VMM), which supports the following activities:

- Management of a virtualized data center with all Hyper-V hosts and failover clusters.

- Ability to administer VMware servers that are managed with vCenter.

- Fabric and storage management for configuring storage devices and SMB shares on file servers.

- VM management.

- Network management of all virtual and logical switches.

- Update and compliance management via WSUS.

- Library management for provisioning ISO files, VM templates, guest operating system profiles, and more.

- Integration with System Center Operations Manager.

- Resource optimization (dynamic optimization and power optimization).

- Bare metal provisioning of Hyper-V hosts and Scale-Out File Servers (SOFS).

Conclusions

In Windows Server 2016, Microsoft has completely revamped Hyper-V's feature set, adding many new features that facilitate the deployment of VMs locally and in the cloud, providing greater security and performance, and making the integration of Hyper-V even more useful in corporate environments. For failover clusters, Microsoft has brought Hyper-V new possibilities that help administrators achieve even greater reliability and better performance.