Software-defined networking with Windows Server 2016

Virtual Cables

The Network Controller is meant to provide the same kind of functionality for physical and virtual networks that the IP address management (IPAM) role service provides for managing IP addresses in the network. The difference is that the Network Controller can also centrally manage devices, software, and network appliances from third-party manufacturers. Microsoft has integrated and further enhanced Azure features in Windows Server 2016 for this purpose. In an optimally configured environment, the Network Controller can even provide a kind of software firewall for data centers, including various policies, rules, and controls.

In addition to the Network Controller, Microsoft also has expanded the Hyper-V network virtualization (HNV) features significantly. The new switch-embedded teaming (SET) function in Hyper-V on Windows Server 2016 can be used to assign multiple physical network adapters to a virtual switch without having to create a team beforehand. HNV and SET can, of course, also be monitored and managed using the Network Controller, together with System Center Virtual Machine Manager (SCVMM).

Particularly in larger environments, Hyper-V networks comprise numerous VMs, many virtual switches, and various network adapters that connect the hosts to the network. The VMs use virtual network adapters to communicate with the virtual Hyper-V switch, which in turn uses network teams that ultimately communicate with the physical adapters on the host. The physical adapters are connected to physical switches, routers, and firewalls. Special features include quality of service (QoS), HNV, and others.

Clearly, managing virtual networks is becoming more and more extensive, which is exactly where the Network Controller comes into play. Its purpose is to bring order to chaos and to manage and monitor optimally all the components involved. QoS for the various networks obviously plays a role in this area; the individual physical network adapters can be operated in different VLANs, which requires efficient management. On top of that, many more examples show how complicated networks can be today, especially when working with virtualization; then, if companies set up a private or hybrid cloud, everything becomes even more complex.

Network Controller and System Center

Connecting cloud services such as Microsoft Azure to the Network Controller and managing them centrally with local networks is also possible. In addition to hardware devices such as routers, switches, VPN servers, load balancers, and firewalls, you can use the Network Controller to manage software-based networking services – and not just those based on Windows Server 2016, but also on Server 2012 R2. Therefore, virtual switches, appliances, and other areas of virtual networks can be monitored and controlled centrally. The Network Controller works closely together with SCVMM for this purpose, and in turn, the service is monitored using System Center 2016 Operations Manager (SCOM). Microsoft also includes network function virtualization here.

The Network Controller only reveals all its capabilities when used in conjunction with SCVMM and Hyper-V in Windows Server 2016. The Network Controller can be added as a network service in SCVMM 2016 to this end by selecting Microsoft Network Controller in the corresponding wizard (Figures 1 and 2). Microsoft provides configuration files [1] on GitHub for this purpose with a detailed step-by-step guide in TechNet [2] that describes how to use the Network Controller with Hyper-V in Windows Server 2016.

The Network Controller works particularly closely with Hyper-V hosts and VMs that are managed with SCVMM. The 2016 version of SCVMM improves the configuration of virtual network adapters, which also works together with the Network Controller service. You can now, for example, provide multiple virtual network adapters when deploying virtual servers. Administrators can name the network adapters in the templates for virtual servers, which works much like consistent device naming (CDN) for physical network adapters. However, the virtual server needs to be created as a generation 2 VM and be installed with Windows Server 2016. You can use logical networks, MAC address pools, VM networks, and IP address pools in SCVMM 2016 to create and centrally manage network configurations for VMs. The Network Controller also plays an important role because it can take care of management and monitoring.

Managing Virtual and Physical Networks

With the Network Controller, you therefore have the option to manage, jointly operate, and monitor both physical network components and virtual networks centrally. The particular focus is on configuration automation, which includes, among other things, access to individual devices via PowerShell. For this to work, however, the respective hardware manufacturer has to support it. The software components in Windows Server 2016 that work directly with the Network Controller already support PowerShell 5.0.

The Network Controller provides two different APIs: One API communicates with the end devices and the other with administrators and their management applications; therefore, all devices in the network are managed through a single interface. In the "fabric network management" area, the Network Controller also enables the configuration and management of IP subnetworks, VLANs, Layer 2 and Layer 3 switches, and the management of network adapters in hosts. Therefore, it is possible to manage, control, and monitor software-defined networks efficiently using the Windows Server 2016 Network Controller together with IPAM.

You can also create firewall rules for VMs of connected Hyper-V hosts via the Network Controller. The controller also has access to the associated virtual switches. The Network Controller therefore makes it possible to control and monitor firewall rules that affect a specific VM or a workload on a VM and, above all, to distribute them in the network if the rules are required on various appliances. In addition to central control, the Network Controller also manages the logfiles that record which data traffic the rules allowed or denied.

Furthermore, the Network Controller also assumes control over all virtual switches for all Hyper-V hosts in the network and, in this way, also creates new virtual switches or manages existing ones, allowing even virtual network cards to be controlled in the individual VMs. In Windows Server 2016, you can add and remove the network adapters in ongoing operations in Hyper-V in the VMs, which means you no longer need to shut down the VMs to do so. Hyper-V and the Network Controller work together particularly efficiently in this way, because flexible network control is also possible during operation.
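Adding and removing adapters during operation can be sketched with the Hyper-V PowerShell module as follows. The VM, switch, and adapter names are hypothetical placeholders, and the sketch assumes a generation 2 VM on a Windows Server 2016 Hyper-V host; it runs directly against the host rather than through the Network Controller.

```powershell
# Hypothetical names: adjust VM, switch, and adapter names to your environment.
$vmName     = 'web01'
$switchName = 'SETswitch'
$nicName    = 'Backup'

# Add a further virtual network adapter while the VM keeps running.
Add-VMNetworkAdapter -VMName $vmName -SwitchName $switchName -Name $nicName

# List the adapters that are now attached to the VM.
Get-VMNetworkAdapter -VMName $vmName |
    Select-Object Name, SwitchName, MacAddress, Status

# Remove the adapter again, also without shutting down the VM.
Remove-VMNetworkAdapter -VMName $vmName -Name $nicName
```

Giving the adapter an explicit name makes it easy to remove exactly that adapter later without touching the VM's primary connection.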

You also have the option to work using network guidelines. The Network Controller supports Network Virtualization Generic Routing Encapsulation (NVGRE) and Virtual Extensible Local Area Network (VXLAN) in this area. As well as the physical network devices and adapters, you can also deploy and monitor Hyper-V network virtualization using the Network Controller.

Virtualized infrastructures in particular benefit from complex new technologies, such as network virtualization, which is supported by numerous new storage features and new functionality in Hyper-V. The virtual network adapters for each virtual server can also be deployed, managed, and monitored using the Network Controller. Guidelines can be centrally deployed, saved, monitored, adjusted, and automatically distributed on VMs, even if VMs move between hosts or clusters.

Managing Distributed VMs

The Network Controller can therefore control and configure both VMs and physical servers that are part of a Windows Server Gateway cluster. In this way, it is possible to link data centers and disconnect or connect the networks of different clients in hosted environments.

You can even deploy VMs in the network, which are part of a Windows Server gateway cluster, called the Routing and Remote Access Service (RRAS) Multitenant Gateway, which makes it possible to incorporate virtual servers in Azure. To this end, the Network Controller supports different VPNs, IPsec, and simpler VPNs with generic routing encapsulation. In addition to deploying such VMs, you can also control, monitor, and create VPNs and IPsec connections between the networks. Individual computers can also be connected to the data center via the Internet this way (e.g., for management by external administrators).

Border Gateway Protocol (BGP) routing allows the network traffic from hosted virtual machines to be controlled for the company's network with both Windows Server 2016 and Server 2012 R2 [3]-[5]. However, it is important that all functions – particularly the Network Controller – be used only if you completely switch the server over to Windows Server 2016.

Supporting Clusters

In Windows Server 2016, you can add cluster nodes to clusters with Windows Server 2012 R2 without affecting the operation, making migration and collaboration with the Network Controller easier. As with the VMs, it is also the case here that the new functions in Hyper-V and for the Network Controller are only available if you have upgraded all cluster nodes to Windows Server 2016. To do this, you need to update the cluster configuration using the Update-ClusterFunctionalLevel command. However, this process is a one-way street, so there's no going back.
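Because the update cannot be reversed, it can be worth checking the state of the cluster first. The following is a minimal sketch that assumes it is run on a cluster node with the FailoverClusters module available; the -WhatIf switch only simulates the update.

```powershell
Import-Module FailoverClusters

# Confirm that every node is up (and already running Windows Server 2016).
Get-ClusterNode | Select-Object Name, State

# Show the current functional level; a cluster still in mixed mode
# typically reports 8 (Windows Server 2012 R2), an updated cluster 9.
Get-Cluster | Select-Object Name, ClusterFunctionalLevel

# Simulate the update without changing anything.
Update-ClusterFunctionalLevel -WhatIf

# Only when the simulation looks right, run the real (irreversible) update:
# Update-ClusterFunctionalLevel
```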

If you are running nodes in a cluster with Windows Server 2016 and Windows Server 2012 R2, you can easily move VMs between the nodes. In this case, however, you should only manage the cluster from servers with Windows Server 2016. You can also only upgrade the VMs in the cluster to the new configuration version with

Update-VMVersion <VM name>

(the cmdlet was still called Update-VmConfigurationVersion in the preview builds) once you have updated the cluster to the new version. Only then will the cluster work optimally with the Network Controller.
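A short sketch of how the configuration version of VMs could be checked and upgraded follows. It assumes the release-build cmdlet name Update-VMVersion and a hypothetical VM called web01; like the cluster functional level, this upgrade cannot be undone, and the VM has to be shut down first.

```powershell
# Show the configuration version of every VM on this host.
Get-VM | Select-Object Name, State, Version

# Show which configuration versions this host supports.
Get-VMHostSupportedVersion

# Hypothetical VM name; the upgrade only works while the VM is off.
$vm = Get-VM -Name 'web01'
if ($vm.State -eq 'Off') {
    Update-VMVersion -VM $vm -Confirm:$false
}
else {
    Write-Warning "Shut down $($vm.Name) before upgrading its configuration version."
}
```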

With Cloud Witness, you can also use Microsoft Azure as a witness for clusters based on Windows Server 2016; the witness is held in an Azure storage account, so no additional witness server is needed. This is an important point, especially for cross-data-center clusters. The VMs in the Azure cloud and the responsible networks can also be managed and monitored using the Network Controller. Thanks to cluster compute resiliency and cluster quarantine, cluster resources are no longer unnecessarily moved between nodes if a cluster node has problems. Nodes are moved to isolation if Windows detects that the node is no longer working stably. All resources are moved from the node, and the administrators are informed. The Network Controller also detects faulty physical and virtual networks in this context and intervenes accordingly. The VMs are moved via live migration, including the transfer of memory by Remote Direct Memory Access (RDMA) via different network adapters that are also pooled and jointly monitored by the Network Controller.

Important Communication Interfaces

The southbound API – the interface between the Network Controller and network devices – can automatically detect and connect network devices and their configuration in the network. This API also transfers its configuration changes to the devices. The API handles communication between the Network Controller, administrators, and, ultimately, devices and can also involve Hyper-V hosts.

In turn, the northbound API is the interface between administrators and the Network Controller. The Network Controller adopts your configuration settings via this API and displays the monitoring data. Moreover, the interface is used for troubleshooting network devices, connecting new devices, and other tasks. The northbound API is a REST API with a connection that works via a GUI, with PowerShell, and (of course) system management tools like System Center. The new 2016 version of System Center can be seamlessly connected to the Windows Server 2016 Network Controller in this area – mainly the SCVMM. Monitoring is also performed using System Center 2016 Operations Manager.
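To illustrate the northbound interface, the following sketch queries the virtual networks known to the Network Controller in two ways: with a cmdlet from the NetworkController module and with a plain REST call. The REST name nc.contoso.com is a hypothetical placeholder, and the sketch assumes Kerberos client authentication and that the calling account belongs to the security group allowed to use the REST API.

```powershell
# Hypothetical REST name of the Network Controller.
$uri = 'https://nc.contoso.com'

# Option 1: cmdlet from the NetworkController module.
Get-NetworkControllerVirtualNetwork -ConnectionUri $uri |
    Select-Object ResourceId

# Option 2: raw REST call against the same northbound API.
$response = Invoke-RestMethod -Uri "$uri/networking/v1/virtualNetworks" `
                              -Method Get -UseDefaultCredentials

# The resources are expected in the "value" array of the JSON response.
$response.value | ForEach-Object { $_.resourceId }
```

Both variants talk to the same REST endpoint; the cmdlets are simply a more convenient wrapper for interactive work and scripts.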

Automated Network Monitoring

Microsoft places great emphasis on network monitoring with the Network Controller. You therefore have a tool available for troubleshooting in the network. The Network Controller not only detects problems related to latency and packet loss, it also keeps you informed about where these losses come from and which devices in the network are causing problems. This means you can automatically start troubleshooting measures, such as live migrations or scripts, as desired.

The Network Controller can also collect SNMP data in this context and identify the status of connections, restarts, and individual devices. This means you are in a position to group devices such as switches by data center, server rack, or other logical groupings and therefore quickly identify whether a switch failure will affect certain VMs.

Another feature of monitoring is detecting network overloads through certain services, servers, or VMs. If a specific server rack, for example, loses the connection to the network or can only communicate to a limited extent, the Network Controller marks all VMs that are on Hyper-V hosts in this rack as faulty, as well as the connected virtual switches. The controller therefore also detects connections in the network and can provide information about problems in a timely manner or even implement measures itself.

Actively Managing Network Traffic

The Network Controller is also generally able to control and redirect network traffic actively. If certain VM appliances are used in the network for security purposes (e.g., antivirus, firewall, or intrusion detection VMs), you can create rules using the Network Controller that automatically redirect network traffic to the appropriate appliances.

This capability not only plays a role in terms of security, it is also important for collaboration with load balancers. The Network Controller detects servers with identical workloads and their load balancer. The server role can also actively intervene here and direct network traffic to the right places, increasing availability and scalability in the company without sacrificing a clear overview.

Servers that work as Network Controllers can be virtualized and pooled in a cluster. Microsoft recommends making the role highly available in production environments. The best way to do this is to create a cluster or a highly available Hyper-V environment. Because the role can be fully virtualized, deploying it in a cluster is quite feasible.

Cmdlets for Network Controller

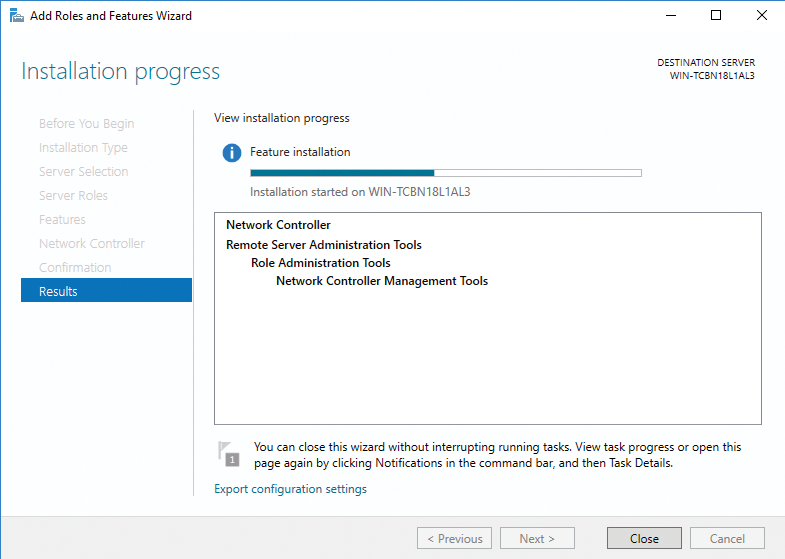

PowerShell 5.0 is integrated in Windows Server 2016 and is also used to control the Network Controller (Figure 3). It is possible to install the Network Controller's role service (in the cluster, as well) and to perform the setup and configuration in PowerShell. The installation is performed with:

Install-WindowsFeature -Name NetworkController -IncludeManagementTools
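In a script, the installation can be wrapped in a simple check, as in the following minimal sketch. It assumes it is run in an elevated PowerShell session on the server that is to host the role.

```powershell
# Check whether the role service is already installed.
Get-WindowsFeature -Name NetworkController |
    Select-Object Name, InstallState

# Install the role service together with its management tools.
$result = Install-WindowsFeature -Name NetworkController -IncludeManagementTools

# Report whether the server has to be restarted before the role can be configured.
if ($result.RestartNeeded -eq 'Yes') {
    Write-Warning 'Restart the server before configuring the Network Controller.'
}
```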

To create a cluster, you first need to create a "node object" in PowerShell:

New-NetworkControllerNodeObject -Name <name of the server> -Server <FQDN of the server> -FaultDomain <fault domain of the node, e.g., fd:/rack1/host1> -RestInterface <network adapter that accepts REST requests> [-NodeCertificate <certificate for computer communication>]

With PowerShell, you first set up the cluster for the Network Controller and then create the Network Controller itself (Listing 1). You will also find detailed options and control possibilities through scripts and PowerShell online [6], including the various cmdlets for controlling the Network Controller [7]. Installation of the Network Controller can also be automated using PowerShell, as shown in Listing 2.

Listing 2: Automated Installation of the Network Controller

$a = New-NetworkControllerNodeObject -Name Node1 -Server NCNode1.contoso.com -FaultDomain fd:/rack1/host1 -RestInterface Internal
$b = New-NetworkControllerNodeObject -Name Node2 -Server NCNode2.contoso.com -FaultDomain fd:/rack1/host2 -RestInterface Internal
$c = New-NetworkControllerNodeObject -Name Node3 -Server NCNode3.contoso.com -FaultDomain fd:/rack1/host3 -RestInterface Internal
$cert = Get-Item Cert:\LocalMachine\My | Get-ChildItem | Where-Object { $_.Subject -imatch "networkController.contoso.com" }
Install-NetworkControllerCluster -Node @($a,$b,$c) -ClusterAuthentication Kerberos -DiagnosticLogLocation \\share\Diagnostics -ManagementSecurityGroup Contoso\NCManagementAdmins -CredentialEncryptionCertificate $cert
Install-NetworkController -Node @($a,$b,$c) -ClientAuthentication Kerberos -ClientSecurityGroup Contoso\NCRESTClients -ServerCertificate $cert -RestIpAddress 10.0.0.1/24
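After an automated installation like this, the result could be checked with the Get- counterparts of the installation cmdlets. The following sketch assumes it is run on one of the Network Controller nodes by an account from the management security group.

```powershell
# Show the cluster-level settings (authentication, diagnostic log location).
Get-NetworkControllerCluster

# Show the application-level settings (client authentication, REST address).
Get-NetworkController

# Show the state of the individual nodes.
Get-NetworkControllerNode | Select-Object Name, Server, FaultDomain, Status
```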

Listing 1: Creating the Network Controller

Install-NetworkControllerCluster -Node <NetworkControllerNode[]> -ClusterAuthentication <ClusterAuthentication> [-ManagementSecurityGroup <group in AD>] [-DiagnosticLogLocation <string>] [-LogLocationCredential <PSCredential>] [-CredentialEncryptionCertificate <X509Certificate2>] [-Credential <PSCredential>] [-CertificateThumbprint <string>] [-UseSSL] [-ComputerName <Name>]

Install-NetworkController -Node <NetworkControllerNode[]> -ClientAuthentication <ClientAuthentication> [-ClientCertificateThumbprint <string[]>] [-ClientSecurityGroup <string>] -ServerCertificate <X509Certificate2> [-RESTIPAddress <string>] [-RESTName <string>] [-Credential <PSCredential>] [-CertificateThumbprint <string>] [-UseSSL]

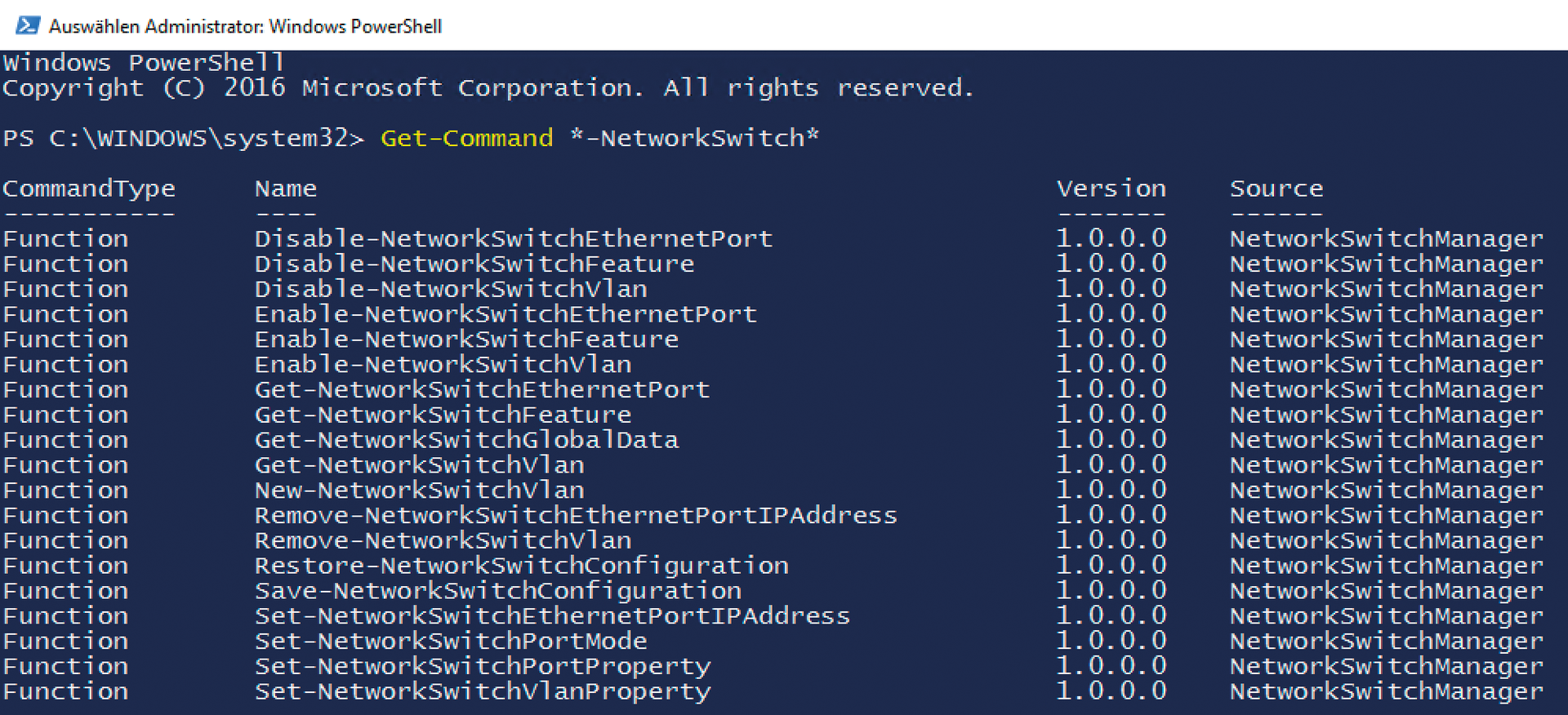

The datacenter abstraction layer (DAL) is the interface to the Network Controller in PowerShell that enables remote management of compatible network components via PowerShell and PowerShell-compatible tools that have a graphical interface for scripts. However, the network components need to be certified by Microsoft. Cisco and Huawei are among the certified manufacturers, although other service providers likely will be added in the future. The Network Controller in Windows Server 2016 is also accessible this way, in parallel with the cmdlets that are already available for the service.

If you use compatible devices, they can be managed via PowerShell – either with or without the Network Controller (Figure 4). Microsoft goes into more detail about the functions and options of compatible devices [8], and you can find examples of script management online [9].

Separating Virtual from Physical

In Windows Server 2016, HNV can be used to separate individual virtual networks from the physical network. The virtual servers in these networks behave as though they were placed on a separate network, which was generally already possible in Windows Server 2012 R2 but is expanded in Windows Server 2016. HNV can also be connected to the Network Controller.

HNV plays a particularly important role in large data centers, but even smaller companies can benefit from this function if they need to better separate networks. Put simply, HNV expands the functions of virtual servers to include network configuration. Multiple virtual networks can be used in parallel in a physical network. They can use the same or a different IP address space. The exchange of data between the networks can be set up using HNV gateways and monitored using the Network Controller. Many Cisco hardware switches already work with this configuration. In this way, it is possible to combine multiple virtual networks so that servers can communicate with each other on this network, yet contact with other networks is filtered.

Since Windows Server 2012/2012 R2, it has been possible to control bandwidth in the network and integrate drivers from third-party manufacturers with virtual switches using Hyper-V Extensible Switches. In Windows Server 2016, Microsoft also wants to give third-party products the option to access network virtualization and connect to the Network Controller. Extensions are available, for example, to integrate virtual solutions against DDoS attacks or customized virus protection.

From Server 2012 R2, HNV also supports dynamic IP addresses used for IP address failover configuration in large data centers. In Windows Server 2016 and System Center 2016, Microsoft has expanded these functions and made the configuration more flexible. If you work with HNV, multiple IP addresses are assigned to virtual network adapters in the network. The customer address (CA) and the provider address (PA) work together. The CA allows virtual servers on the network to exchange data, just like a normal IP address. The PA is used for exchanging data between the VM and Hyper-V host, as well as the physical network. This can be controlled using the Network Controller and SCVMM 2016 or IP address management (IPAM).

HNV is not an upstream Network Driver Interface Specification (NDIS) filter; instead, it is integrated directly into the virtual switch. Both third-party products and the Network Controller can access the CA directly using this technique and can communicate on the PA, allowing virtual switches and NVGRE to work together better. Data traffic in the virtual switches under Windows Server 2016 also runs with network virtualization and integrated third-party products. HNV is not an interface between network cards and extensible switches; rather, it is an integral part of virtual switches themselves, which is why network interface controller (NIC) teams work very well with network virtualization, particularly the new SET switches in Windows Server 2016.

These functions allow large companies and cloud providers to use the Access Control Lists (ACLs) of the virtual switches and control firewall settings, permissions, and network protection for the data center – all using the Network Controller. To this end, Windows Server 2016 provides the option to also integrate the port in the firewall rules, not just the IP and MAC addresses for the source and destination. This function works together with network virtualization in Hyper-V and can also be controlled using the Network Controller. Network virtualization is especially useful in conjunction with System Center 2016 Virtual Machine Manager because it is possible to create and configure new VM networks through the wizards and then connect to the Network Controller.
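A port-based rule on the virtual switch port of a VM can be sketched with the extended port ACL cmdlets of the Hyper-V module. This is only an illustration on a single host with a hypothetical VM name; in an environment managed by the Network Controller, such rules would be defined centrally through the controller instead.

```powershell
# Hypothetical VM name.
$vmName = 'web01'

# Allow inbound HTTPS traffic to the VM; rules with a higher weight win.
Add-VMNetworkAdapterExtendedAcl -VMName $vmName -Action Allow `
    -Direction Inbound -LocalPort 443 -Protocol TCP -Weight 10

# Deny all other inbound traffic with a lower weight.
Add-VMNetworkAdapterExtendedAcl -VMName $vmName -Action Deny `
    -Direction Inbound -Weight 1

# Review the rules that now apply to the VM's network adapters.
Get-VMNetworkAdapterExtendedAcl -VMName $vmName
```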

Faster Live Migration

Normally, the processor controls how data is handled on a server. Each time a server service such as Hyper-V sends data via the network (e.g., during live migration), the processor is burdened, which takes up computing capacity and time. Above all, users can lose access to the services hosted on the virtual servers while the processor creates and calculates data packets for the network. To do so, it in turn needs access to the server's memory. Once a packet is completely calculated, the processor directs it to a cache on the network card. The packet waits there for transmission and is then sent from the network card to the destination server or the client. The same process takes place in reverse when data packets arrive at the server: When a packet reaches the network card, it is directed to the processor for further processing. These operations are very time consuming and require considerable processing power for the large amounts of data that occur, for example, when transferring virtual servers during live migration.

The solution to these problems is called Direct Memory Access (DMA). Simply put, the various system components (e.g., network cards) can access memory directly to store data and perform calculations. This relieves pressure on the processor and significantly shortens queues and operations, which in turn increases the speed of the operating system and of server services such as Hyper-V.

Remote DMA (RDMA) is an extension of this technology to include network functions. The technology allows the memory content to be transmitted to another server on the network and to access the memory of another server directly. Microsoft had already integrated RDMA in Windows Server 2012, but they improved it for Windows Server 2012 R2 and integrated it directly into Hyper-V.

The technology is again expanded in Windows Server 2016; above all, performance is improved. The new Storage Spaces Direct can also use RDMA and can even require such network cards (see the "SDN for Storage Spaces Direct" box). Additionally, SET supports RDMA when teaming network adapters in Hyper-V. Windows Server 2012/2012 R2 can use the technology automatically when two such servers communicate on the network. RDMA significantly increases data throughput on the network, reduces latency when transmitting data, and plays an important role in live migration.

Also interesting is data center bridging, which controls data traffic in very large networks. If the network adapters used have the Converged Network Adapter (CNA) function, data can be better used on the basis of iSCSI disks or RDMA techniques – even between different data centers. Moreover, you can limit the bandwidth that this technology uses.
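Limiting the bandwidth with data center bridging could look like the following sketch for SMB Direct traffic. The adapter names net01 and net02, the priority value 3, and the 50 percent share are assumptions for illustration; the physical switches have to be configured to match.

```powershell
# Install the data center bridging feature.
Install-WindowsFeature -Name Data-Center-Bridging

# Tag SMB Direct traffic (port 445) with 802.1p priority 3.
New-NetQosPolicy -Name 'SMB' -NetDirectPortMatchCondition 445 -PriorityValue8021Action 3

# Enable priority flow control for that priority only.
Enable-NetQosFlowControl -Priority 3
Disable-NetQosFlowControl -Priority 0,1,2,4,5,6,7

# Reserve a share of the bandwidth for the tagged traffic class.
New-NetQosTrafficClass -Name 'SMB' -Priority 3 -BandwidthPercentage 50 -Algorithm ETS

# Apply the QoS settings on the physical adapters (hypothetical names).
Enable-NetAdapterQos -Name 'net01','net02'
```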

For quick communication between servers based on Windows Server 2016 – especially on cluster nodes – the network cards must support RDMA on the servers, which is particularly worth using for very large amounts of data. In this context, Windows Server 2016 also improves cooperation between different physical network adapters. Already in Server 2012 R2, the adapters can be grouped into teams via the Server Manager, although this is no longer necessary in Windows Server 2016 because – as briefly mentioned earlier – SET allows you to assign multiple network adapters as early as when creating virtual switches. You need the SCVMM or PowerShell to do this. In this way, it is possible to combine up to eight uplinks in a virtual switch, as well as dual-port adapters.

In Server 2016, you use RDMA-capable network adapters that are directly grouped together in a Hyper-V switch on the basis of SET. The virtual network adapters of the individual hosts access virtual switches directly, but the VMs can also access the virtual switch directly and take advantage of the power of the virtual Hyper-V switch with SET (Figure 5).

SET in Practice

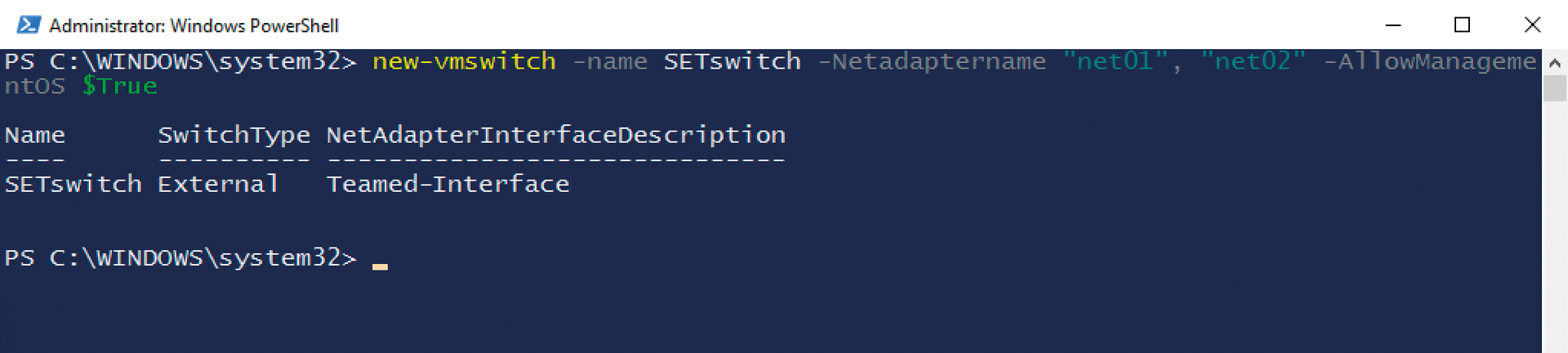

The PowerShell command used to create a SET switch is pretty simple:

New-VMSwitch -Name SETswitch -NetAdapterName "net01","net02" -EnableEmbeddedTeaming $true -AllowManagementOS $true

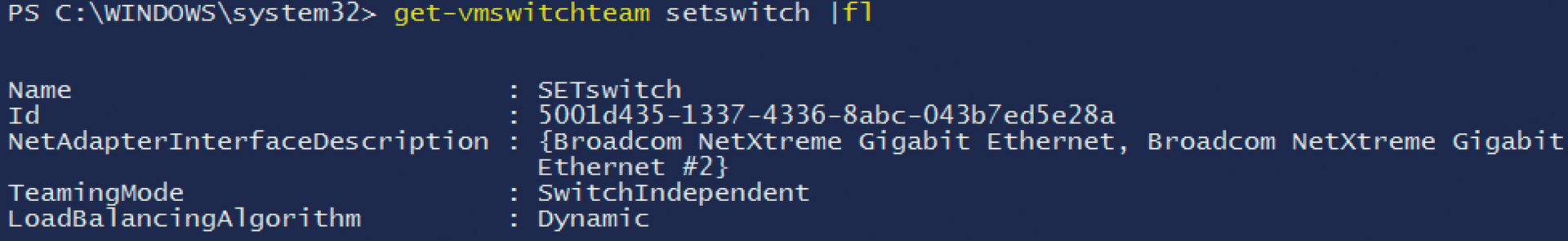

If you don't want the management operating system to share the switch, use the -AllowManagementOS $False option. Once you have created the virtual SET switch, you can retrieve its information with the Get-VMSwitch command, which also shows the different adapters that are part of the virtual switch. More detailed information can be found with the

Get-VMSwitchTeam <Name>

command, which displays the team settings of the virtual switch (Figure 6). If you want to delete the switch again, use Remove-VMSwitch in PowerShell. Microsoft provides a guide [10] you can use to test the technique in detail.

Because SET supports RDMA, the technology is also cluster-capable and significantly increases the performance of Hyper-V on the network. The new switches support RDMA, as well as Server Message Block (SMB) Multichannel and SMB Direct, which are activated automatically between servers with Windows Server 2016. The built-in network adapters, as well as the teams and virtual switches, need to support the RDMA function for this technology to be used between Hyper-V hosts. The network needs to be extremely fast for these operations to work optimally. Adapters of the iWARP, InfiniBand, and RDMA over Converged Ethernet (RoCE) types are best suited.
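How RDMA could be used on top of a SET switch is sketched below. The sketch assumes a SET switch named SETswitch on RDMA-capable physical adapters; the host adapter names SMB1 and SMB2 are hypothetical.

```powershell
# Create two virtual network adapters for SMB traffic in the management OS.
Add-VMNetworkAdapter -ManagementOS -SwitchName 'SETswitch' -Name 'SMB1'
Add-VMNetworkAdapter -ManagementOS -SwitchName 'SETswitch' -Name 'SMB2'

# Enable RDMA on the resulting host adapters.
Enable-NetAdapterRdma -Name 'vEthernet (SMB1)','vEthernet (SMB2)'

# Check which adapters have RDMA enabled.
Get-NetAdapterRdma | Select-Object Name, Enabled

# Check what the SMB client sees and whether SMB Multichannel is in use.
Get-SmbClientNetworkInterface | Select-Object FriendlyName, RdmaCapable, RssCapable
Get-SmbMultichannelConnection
```

Get-SmbMultichannelConnection only returns entries while SMB traffic is flowing (e.g., during a live migration or a file copy to another host).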

Hyper-V can access the SMB protocol even better in Windows Server 2016 and can, in this way, outsource data from the virtual servers on the network. The purpose of the technology is that companies don't store the virtual disks directly on the Hyper-V host or use storage media from third-party manufacturers but instead use a network share of a server with Windows Server 2016, possibly also with Storage Spaces Direct. Hyper-V can then very quickly access this share with SMB Multichannel, SMB Direct, and Hyper-V over SMB. High-availability solutions such as live migration also use this technology. The shared cluster disk no longer needs to be in an expensive SAN. Instead, a server – or better, a cluster – with Windows Server 2016 and sufficient disk space is enough. The configuration files from the virtual servers and any existing snapshots can be stored on the server or cluster.

Conclusions

Microsoft now provides a tool for virtualizing network functions, in addition to servers, storage, and clients. The standard Network Controller in Windows Server 2016 allows numerous configurations that would otherwise require paid third-party tools and promises to become a powerful service for the centralized management of networks. However, this service is only useful if your setup is completely based on Windows Server 2016, including Hyper-V, in the network. Setting up the service and connecting the devices certainly won't be simple, but companies that meet this requirement will benefit from the new service, which includes the parallel use of IPAM, which also can be connected to the Network Controller. Together with Hyper-V network virtualization and SET, networks can be designed much more efficiently and clearly in Hyper-V. Additionally, the performance of virtual servers increases, as does the speed of transfers during live migration.

The employment of System Center 2016 is necessary so that SCVMM and Operations Manager can make full use of and monitor functions. However, this doesn't make network management easier, because Network Controllers and the System Center also need to be mastered and controlled.