Harden your OpenStack configuration

Fortress in the Clouds

One of the biggest concerns about virtualization is that an attacker could succeed in breaking out of the virtual machine (VM) and thus gain access to the resources of the physical host. The security of virtual systems thus hinges on the ability to isolate resources of the various VMs on the same server.

A simple thought experiment shows how important it is that the boundaries of VM and host are not blurred. Assume you have a server that hosts multiple VMs that all belong to the same customer. In this scenario, a problem occurs if a user manages to break out from a VM and gain direct access to the server: In the worst case, the attacker now has full access to the VMs on the host and can access sensitive data at will, or even set up booby traps to fish for even more information.

To gain unauthorized access, attackers need to negotiate multiple obstacles: First, they must gain access to the VM itself. If all VMs belong to the same customer and the same admins regularly maintain them, this risk is minimized, but it cannot be ruled out. In the second step, an attacker needs to negotiate the barrier between the VM and the host. Technologies such as SELinux can help to minimize the risks of an attacker crossing the VM barrier.

Aggravated in the Cloud

The risk potential is far greater when the environment does not operate under a single, uniform management system, as is the case when individual customers operate within a public cloud.

In a public cloud setting, the VMs maintained by each customer must run alongside one another on a common host. Customers cannot choose which host a VM runs on or who else has machines on the same host. They have no control over the software that runs on the other VMs or over how well the other VMs are maintained. Instead, they need to trust the provider of the platform.

This article describes some potential attack vectors for VMs running in the OpenStack environment – and how admins can best minimize the dangers.

Identifying Attack Vectors

The most obvious point of entry for attackers is the individual VM. An attacker who gains access to a VM in the cloud can also gain access to the server if the host and the VMs are not properly isolated.

A number of other factors provide a potential gateway into the cloud. Practically any cloud will rely on network virtualization. Most will use Open vSwitch, which relies on a VXLAN construct or GRE tunnels to implement virtual networks, bridges, and virtual ports on the hypervisor. An attacker who somehow gains access to the hypervisor machine can sniff the network traffic produced by VMs and thus access sensitive data.

The plan of attack does not need to be complex: In many cases, attackers in a cloud computing environment are confronted with a software museum that ignores even the elementary principles of IT security, such as timely updates and secure practices for configuring network services.

One complicating factor in the OpenStack environment is that critical data is easy to reach once the login credentials for an account fall into an attacker's hands: Usually, one such set of credentials is sufficient to read or modify existing VMs and volumes at will.

What can a provider do to avoid these attack scenarios? A large part of the work is creating an environment in which basic security policies can actually be enforced.

What Services?

Hardening an OpenStack environment starts with careful planning and is based on a simple question: What services need to be accessible from the outside? OpenStack, like most other clouds, consistently relies on APIs. The API components must be accessible to the outside world, and that's all. Users from outside do not need access to MySQL or RabbitMQ, which are necessary for the operation of OpenStack.

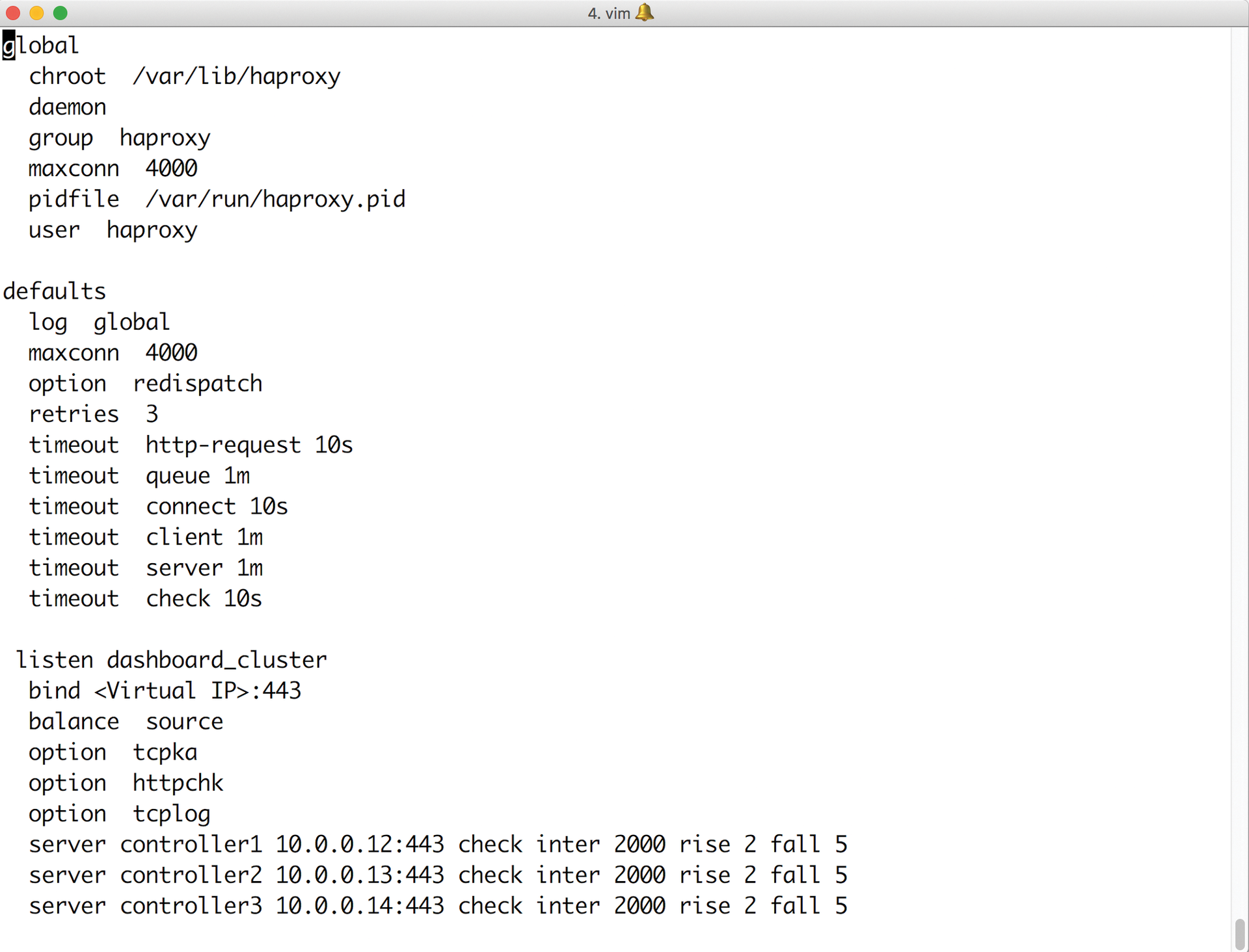

Typically, a cloud does not even expose the APIs directly, because doing so would make high availability impossible. In this case, load balancers serve as the first line of defense against attacks (Figure 1), allowing incoming connections only on specific ports.

A first important step in securing OpenStack is the design of the network layout to make external access to resources impossible whenever it is unnecessary. Admins would do well to be as radical as possible: If neither the OpenStack controllers nor the hypervisors need to be accessible from the outside, they do not need a public IP. Furthermore, it is not absolutely necessary to open connections to the outside world from any of these hosts. Completely isolating systems from the Internet effectively prevents attackers from loading malicious software if they actually have gained access in some way.

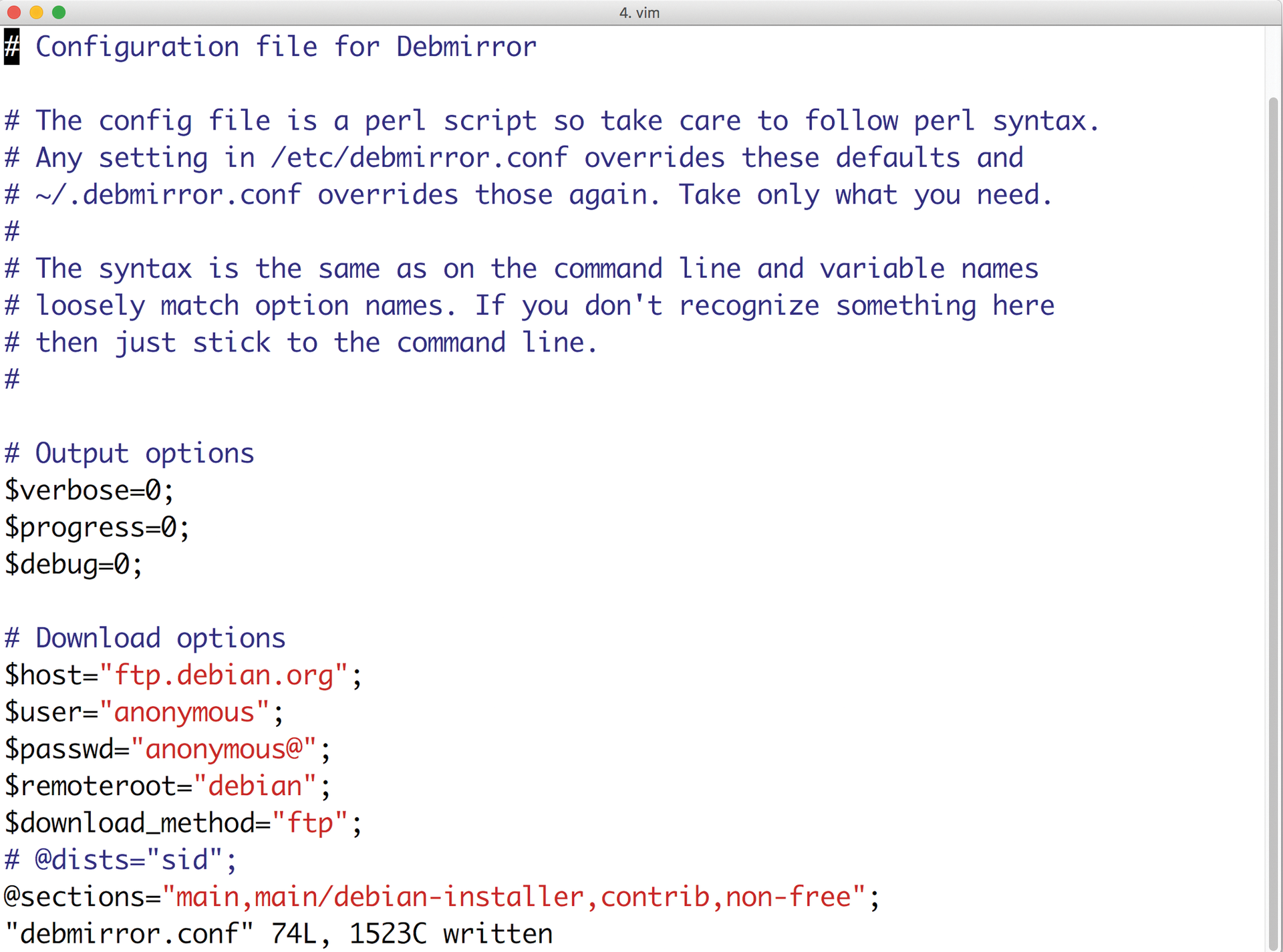

Of course, this approach means additional work. Distribution updates or additional software packages somehow need to find their way onto the affected servers. But this challenge can be managed by installing a local mirror server (Figure 2) and a local package repository.

To understand the principle, you need to think about network segments: A network segment in this case includes systems that only communicate locally with other servers. A second segment serves as a kind of DMZ, supporting servers with one leg on the private network and the other on the Internet. Load balancers, mirror servers, and the OpenStack nodes are typical examples.

Each network segment should include servers that act as jump hosts, that is, they provide access to individual systems on the private network. Actions such as VPN access and login should be handled via SSH keys or – even better – certificates. Packet filters are a good idea in principle, but they only offer protection until someone with admin rights gains access to a server. After that, they are virtually ineffective, because they can be overridden by the attacker at any time.
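The rule that only the load-balanced API endpoints should be reachable from outside can be checked mechanically. The following is a minimal sketch, not a complete audit tool: it assumes you have already collected a list of listening sockets per host, and the `PUBLIC_PORTS` allowlist and the `find_exposed` helper are hypothetical names chosen for illustration.

```python
import ipaddress

# Hypothetical allowlist: only the load-balanced API port may face the Internet.
PUBLIC_PORTS = {443}


def find_exposed(listeners, public_ports=PUBLIC_PORTS):
    """Return listeners reachable from outside that are not on the allowlist.

    `listeners` is an iterable of (service, bind_address, port) tuples.
    """
    exposed = []
    for service, address, port in listeners:
        ip = ipaddress.ip_address(address)
        # Loopback and private-range addresses are treated as internal here;
        # a wildcard bind (0.0.0.0 or ::) is treated as externally reachable.
        internal = ip.is_loopback or (ip.is_private and not ip.is_unspecified)
        if not internal and port not in public_ports:
            exposed.append((service, address, port))
    return exposed


listeners = [
    ("haproxy", "0.0.0.0", 443),
    ("mysql", "0.0.0.0", 3306),
    ("rabbitmq", "10.0.0.5", 5672),
]
print(find_exposed(listeners))
```

In this example, only the MySQL listener bound to the wildcard address is reported; the load balancer on port 443 is allowed, and RabbitMQ is bound to a private address.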

Updates

A second important building block for hardening OpenStack is consistently installing updates for all components. This need for updates also applies to servers that are not directly accessible from the outside: A vulnerability in KVM that makes a breakout from the VM possible could undermine any network-based isolation.

The point about installing updates may be a truism, but based on my experience, it is essential to remind cloud users that updates are still important. You can see the panic in the eyes of many OpenStack cloud admins at the thought of updates. Because OpenStack is complex and difficult to set up, the prospect of updating is often quite daunting.

Manually installed OpenStack environments are more similar to modern art than to easily maintainable software platforms, but you can avoid some of the biggest pains if you pay attention to a couple of important principles:

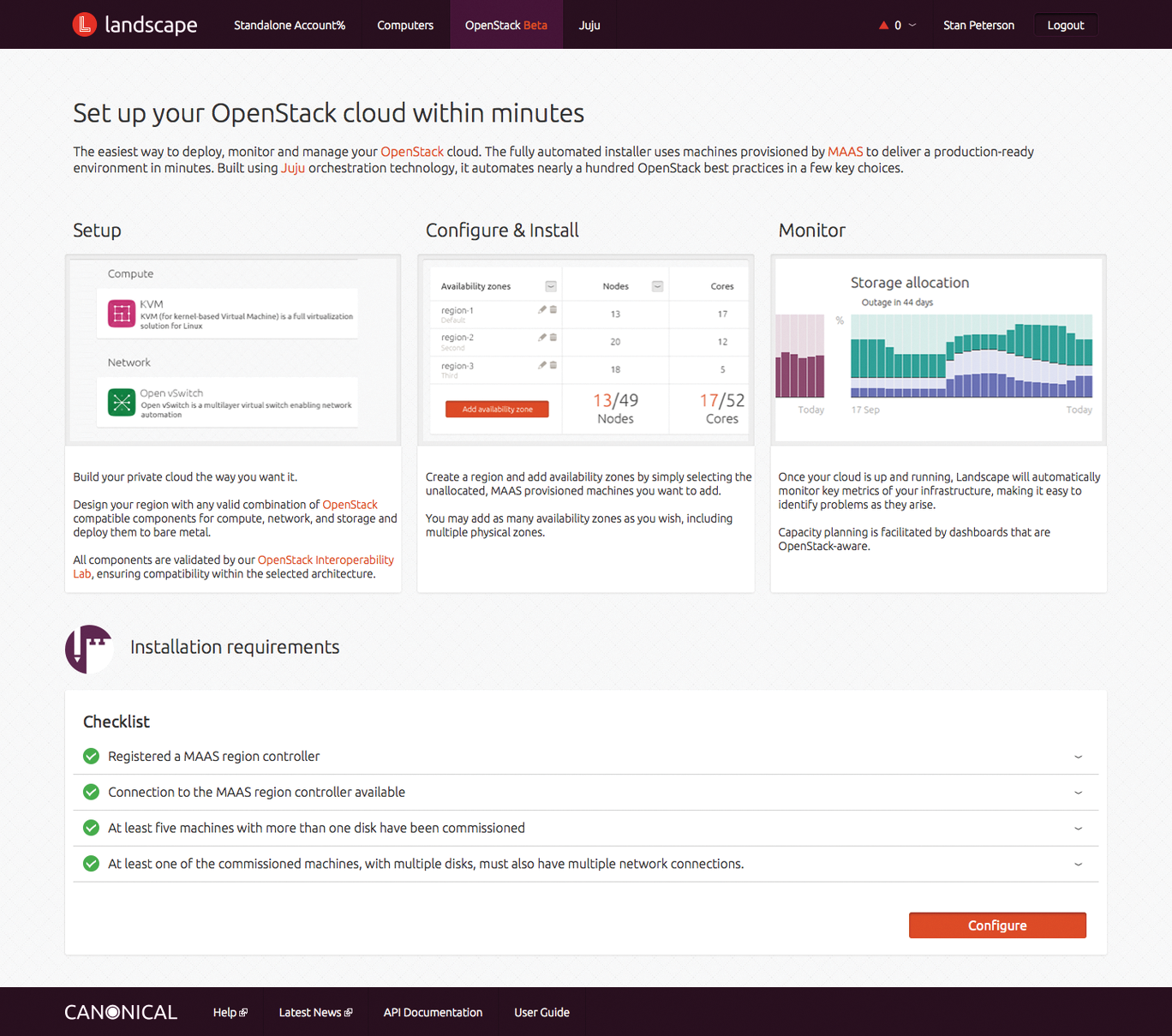

- Only automated deployment – No manually installed servers should exist in a production environment. It must be possible at any time to remove any node from the setup and to replace it with an automated reinstall that puts the latest software on the disk. Manual changes to the nodes in the cloud are therefore prohibited, because they are not easily reproducible (Figure 3).

- Replaceable systems – To make it easier to remove and reinstall a computer for updates, the VMs on each node must support migration to another cloud node at any time during operation – without downtime for customers.

In classic clouds, storage is the problem most of the time: If you do not put your OpenStack VMs on distributed storage, but on virtual disks directly attached to the hypervisor, you'll end up with a maintenance nightmare, because the data of the VM exists precisely once, on that hypervisor.

Distributed storage solutions such as Ceph make more sense: If a VM resides on Ceph, the RBD drive associated with it can be connected to the VM on a different host at any time. The combination of KVM/QEMU and libvirt including RBD drivers can handle this form of live migration with ease. Other software-defined storage solutions available on the market also support this functionality; there is thus nothing to prevent a successful live migration.

By ensuring that any VM on a hypervisor can move to another hypervisor at any time, you make it easier to install updates. In some scenarios, installing updates actually becomes unnecessary: If a computer has outdated software, you simply reinstall it with the latest packages.
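Whether a node can really be emptied at any time depends on spare capacity elsewhere in the cloud. The sketch below illustrates that check under a strong simplification: capacity and load are counted in abstract "VM slots," and the `hosts` structure and both function names are hypothetical, not part of any OpenStack API.

```python
def can_drain(host, hosts):
    """Check whether the VMs on `host` fit on the remaining hosts.

    `hosts` maps a host name to {"capacity": int, "vms": int}; both values
    are counted in VM slots, which ignores CPU, RAM, and placement rules.
    """
    free_elsewhere = sum(
        h["capacity"] - h["vms"] for name, h in hosts.items() if name != host
    )
    return hosts[host]["vms"] <= free_elsewhere


def undrainable_hosts(hosts):
    """List hosts that could not be emptied for a reinstall right now."""
    return [name for name in hosts if not can_drain(name, hosts)]


cloud = {
    "node1": {"capacity": 10, "vms": 8},
    "node2": {"capacity": 10, "vms": 9},
    "node3": {"capacity": 10, "vms": 2},
}
print(undrainable_hosts(cloud))
```

An empty result means every node could be evacuated and reinstalled one at a time; any name in the list points to a node whose VMs would have nowhere to go.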

Fast and easy replacement offers another advantage: If there is even the slightest suspicion that a computer may have been compromised, it can be quickly removed from the cloud for forensic investigations. Once you are finished, automatic reinstallation ensures that the server is available again.

Sore Point: Keystone

The OpenStack Keystone identity service, officially dubbed Identity, is the root of all functionality in OpenStack. All clients that want to do something in OpenStack first need to pick up a token from Keystone with a combination of username and password and then present that token to authenticate their subsequent requests. A token is a temporarily valid password, based on which Keystone checks the legitimacy of a request.
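To make the token exchange concrete, the following sketch builds the request body that a client sends to Keystone's v3 API (`POST /v3/auth/tokens`) and the header it uses afterward. It performs no network access; the function names and the `Default` domain are assumptions for illustration.

```python
def build_token_request(username, password, project, domain="Default"):
    """Build the JSON body for a password-based, project-scoped token request."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            # Scoping the token to one project limits where it is valid.
            "scope": {
                "project": {"name": project, "domain": {"name": domain}}
            },
        }
    }


def auth_headers(token):
    """Headers for follow-up API calls.

    Keystone returns the token in the X-Subject-Token response header;
    clients send it back to the services as X-Auth-Token.
    """
    return {"X-Auth-Token": token, "Accept": "application/json"}
```

Anyone who captures either the password or a still-valid token can replay it in exactly this way, which is why both deserve the same care.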

Keystone is accordingly a sensitive spot in OpenStack clouds: Attackers who manage to get hold of a valid set of credentials can do whatever they want, at least within the projects in which the affected credentials play a role. Furthermore, access to the service endpoints is usually unrestricted. Handling this sensitive information is thus a critical task.

The Unspeakable Admin Token

The admin token is a relic left over from the early days of Keystone that can exist in plaintext in keystone.conf. The token is practically a master key for the entire cloud: You can create and modify any other account with it. The admin token was previously used to add the first set of user data to Keystone at the start of an OpenStack installation. There is now a bootstrap command in keystone-manage for this purpose that allows the initial setup without relying on the admin token. OpenStack admins need to ensure that the admin_token entry in the keystone.conf file is commented out and does not contain a value.
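A check like this is easy to automate as part of deployment. The sketch below uses Python's standard `configparser` module to test whether a keystone.conf still carries an active `admin_token` value in its `[DEFAULT]` section; the function name is hypothetical, and the snippet works on the file content as a string so that it can be tried without a real installation.

```python
import configparser


def admin_token_is_set(conf_text):
    """Return True if the keystone.conf text has an active admin_token value."""
    # interpolation=None keeps '%' characters in values from raising errors;
    # strict=False tolerates duplicate options in hand-edited files.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    # configparser skips commented-out lines, so only an uncommented entry
    # with a non-empty value is reported.
    value = parser.defaults().get("admin_token", "")
    return bool(value.strip())


sample = "[DEFAULT]\n#admin_token = ADMIN\n"
print(admin_token_is_set(sample))
```

For a real host, you would read the file content first, for example with `open("/etc/keystone/keystone.conf").read()`, assuming the default path applies to your installation.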

Any OpenStack service that wants to talk to Keystone needs to know the credentials for service access. Virtually all components expect this information in the form of a separate entry in their own configuration. The relevant data is typically available in plain text.

From an admin perspective, it is desirable to restrict read access to the configuration files in an appropriate manner. Although attackers should not even make it onto the affected hosts, if they do manage to log in, they should not be able to read the service configuration files with a normal user account.
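A short script can flag configuration files that any local user could read. This is a minimal sketch using only the standard library; the function name is hypothetical, and the paths in the usage comment are examples that may differ on your distribution.

```python
import os
import stat


def world_readable(paths):
    """Return the paths whose mode grants read access to 'other' users."""
    flagged = []
    for path in paths:
        mode = os.stat(path).st_mode
        # S_IROTH is the read bit for users who are neither the owner
        # nor members of the file's group.
        if mode & stat.S_IROTH:
            flagged.append(path)
    return flagged


# Example call on a cloud node (paths are assumptions):
# world_readable(["/etc/nova/nova.conf", "/etc/neutron/neutron.conf"])
```

Files that show up in the result are candidates for tighter permissions, such as mode 0640 with the group set to the respective service account.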

At service level you can defuse the Keystone situation by configuring an appropriate user role schema. Keystone is not particularly granular by default – it only has two relevant roles from the user's point of view: the admin and the user role. Many admins inadvertently assign the admin role to users in projects because they assume that they are assigning special rights within the project to the user by doing so. However, if you have the admin role for one project, you can gain access to other projects in a roundabout way. It is thus a bad idea to assign the admin role to users.

The risks involved with the user role can also be defused. The service identifies users by their login data, which consists of the username, the password, and the project for which the user requests a token. Keystone also stores the mappings defining the roles a user has in a specific project.

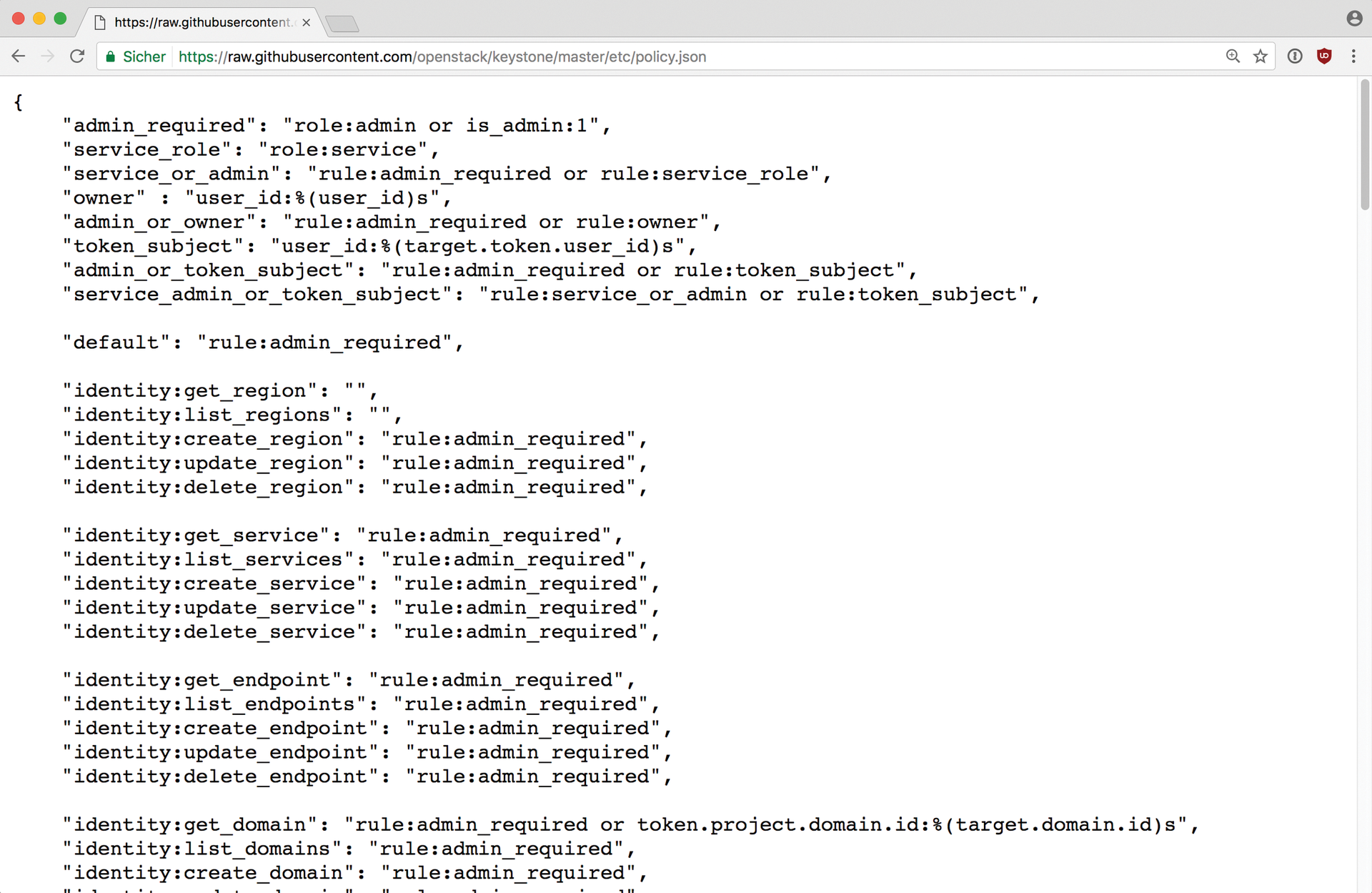

Keystone itself, however, does not decide what a user with a specific role can do in a project; this is decided by the individual components: Each of the OpenStack services has a file named policy.json (Figure 4), in which the role needed by the service is stated for all API operations known to the service.

By adapting the policy.json files of all services, you can define a more granular scheme than the single user role. You might create an operator role for write API operations (say, creating a new VM or a virtual network) and a viewer role for read operations. Then the principle of least privilege applies: If you perform only read operations, you are assigned only the viewer role. If you need write access, you are additionally assigned the operator role.

This approach follows the principle of risk mitigation: The less frequently an account with write access is used, the lower the risk that such an account will fall into the wrong hands. For the sake of completeness, I should mention that OpenStack does not make it easy for the admin to create policy.json files to match the desired setup: Many API operations are poorly documented or not documented at all, and the total number of API operations is huge, well above 1,000. If you want to polish your policy.json files, you need to schedule a long lead time.
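Given the number of operations involved, generating the policy entries from lists is more practical than editing them by hand. The following sketch shows the idea for the viewer/operator split; the operation names are placeholders, not real policy targets, and must be replaced with the names documented by each service. The `role:<name>` rule form is the role check used in OpenStack policy files.

```python
import json

# Placeholder operation names for illustration only; take the real policy
# targets from each service's own policy documentation.
READ_OPS = ["example:servers:index", "example:servers:show"]
WRITE_OPS = ["example:servers:create", "example:servers:delete"]


def build_policy(read_ops, write_ops):
    """Require the viewer role for reads and the operator role for writes."""
    policy = {op: "role:viewer" for op in read_ops}
    policy.update({op: "role:operator" for op in write_ops})
    return policy


print(json.dumps(build_policy(READ_OPS, WRITE_OPS), indent=4))
```

Because users with write access hold the operator role in addition to the viewer role, a read rule that names only `role:viewer` still covers them.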

Secure VMs

The measures described so far are intended to minimize the attack surface of an OpenStack cloud and to mitigate the potential damage the attacker can cause if an attack succeeds. Virtualization in OpenStack also offers a starting point for boosting security.

The available tools depend on the kind of hypervisor you use. The security manual for OpenStack [1] states that KVM is the hypervisor that supports the largest number of external security mechanisms. This list includes sVirt, which is the SELinux virtualization solution, as well as AppArmor, Intel's Trusted Execution Technology (TXT), cgroups, and Mandatory Access Controls (MAC) at the Linux level.

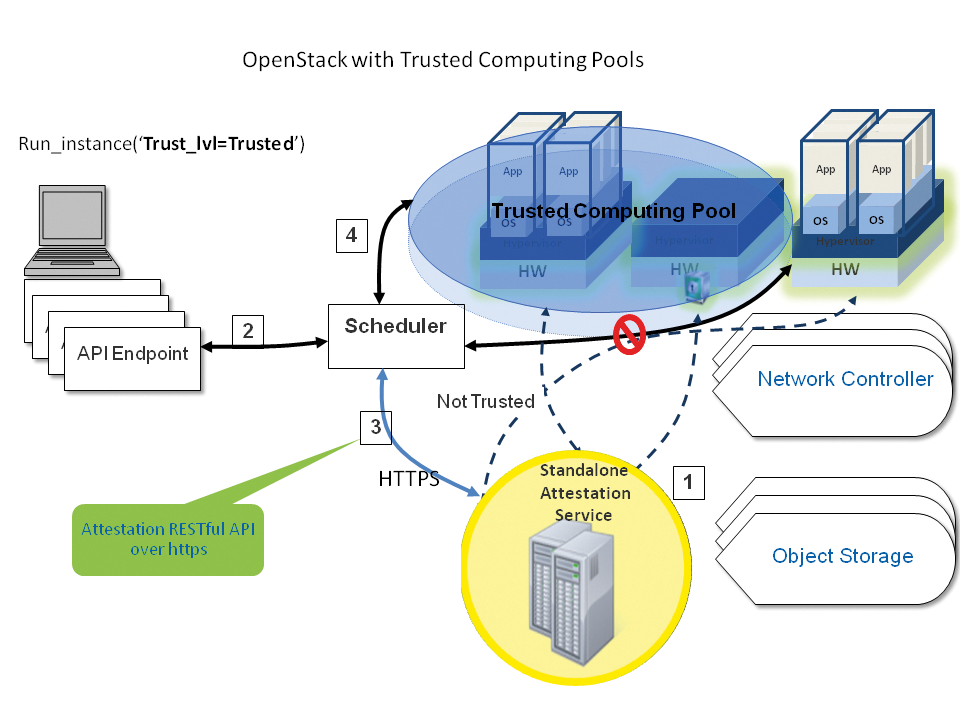

Intel's TXT feature plays a special role, allowing only certain code to run on servers. OpenStack adopts this feature in the form of trusted compute pools.

You can use these compute pools to group servers that support TXT. If customers then choose a trusted compute pool (Figure 5) for their VMs, they can be sure that their VMs will only run on servers with TXT functionality. This setup is documented in the Admin Guide for OpenStack [2]. Even if you do not rely on TXT, you can still achieve good basic security using sVirt or AppArmor; the latter is enabled out of the box on Ubuntu.

Sadly, many clouds run on Ubuntu systems on which AppArmor is explicitly disabled, because it does not harmonize well with the implemented storage solution in the default configuration and because the overhead involved with finding the appropriate configuration appears to be too troublesome. This approach is not useful, of course.

Something's Not Right

The last thing to consider is security monitoring. Even in conventional network settings, it is a challenge for admins to detect something going wrong. The problem is significantly greater in OpenStack: Customers can define access to the cloud and then do whatever they want in their VMs. Usually, the provider will adopt a policy of benign neglect.

But what happens if a project in the cloud affects the overall performance of the installation or interferes with other customers? The provider has to be proactive: It is important to monitor the cloud such that irregularities can be detected in a timely manner and then to take countermeasures.

Classic Nagios-style incident monitoring is not enough. A provider will want to detect pronounced changes in the volume of inbound or outbound traffic that occur without any apparent reason. Graphing systems and time-series databases, such as OpenTSDB or Prometheus, offer a useful option for admins. These utilities plot various performance data on a timeline and also let you define thresholds. If the values for inbound and outbound traffic move outside these limits, the system alerts the admin to the potential problem.
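The logic behind such an alert is simple enough to sketch in a few lines. The example below does not use OpenTSDB or Prometheus; it is a standalone illustration that derives its limits from a rolling baseline rather than from fixed numbers, which is an assumption on my part. The function name and all parameters are hypothetical.

```python
from statistics import mean, stdev


def traffic_anomalies(samples, window=12, tolerance=3.0, min_sigma=1.0):
    """Return the indexes of readings that stray from the recent baseline.

    `samples` is a list of traffic readings (for example, bytes per minute).
    A reading is flagged when it differs from the mean of the preceding
    `window` readings by more than `tolerance` standard deviations.
    `window` must be at least 2 for the standard deviation to be defined.
    """
    flagged = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        # min_sigma is a floor so that a perfectly flat baseline does not
        # turn every tiny change into an alert.
        sigma = max(stdev(baseline), min_sigma)
        if abs(samples[i] - mu) > tolerance * sigma:
            flagged.append(i)
    return flagged


readings = [90, 110] * 6 + [105, 5000, 100]
print(traffic_anomalies(readings))
```

Here, the sudden jump to 5000 is reported, while the ordinary fluctuation around 100 is not. A production setup would express the same rule in the alerting layer of the monitoring system in use.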

Conclusions

When it comes to OpenStack, it is not the exciting, specialized technologies that help admins get a better night's sleep. Instead, ensuring good standards through system deployment and administration has a more positive effect.