Avoiding KVM configuration errors

Active Separation

Whether a virtualization environment comprises only a few hosts or a complex cloud landscape, most admins who plan to base their hypervisors on Linux today favor KVM. All common Linux distributions already include the necessary software packages, which often makes both the decision for KVM and the virtualization project itself easier.

The architects of such setups all too rarely pay attention to the security of their design. The widespread use of container technologies illustrates how little protection many users are willing to accept. However, you cannot assume that Linux hypervisors such as KVM are automatically secure simply because they are based on Linux and because virtualization inherently isolates its guests better than, for example, Docker containers do. In this article, I describe hardening strategies and look at common configuration errors.

The most far-reaching danger for a virtualization server is a malicious virtual machine (VM) that manages to break out and gain access to the host and other guests. This danger becomes real whenever the virtualization components contain exploitable bugs. Antidote number one is rapid deployment of all security patches, but the admin can also take other measures to reduce the likelihood of a guest breaking out.

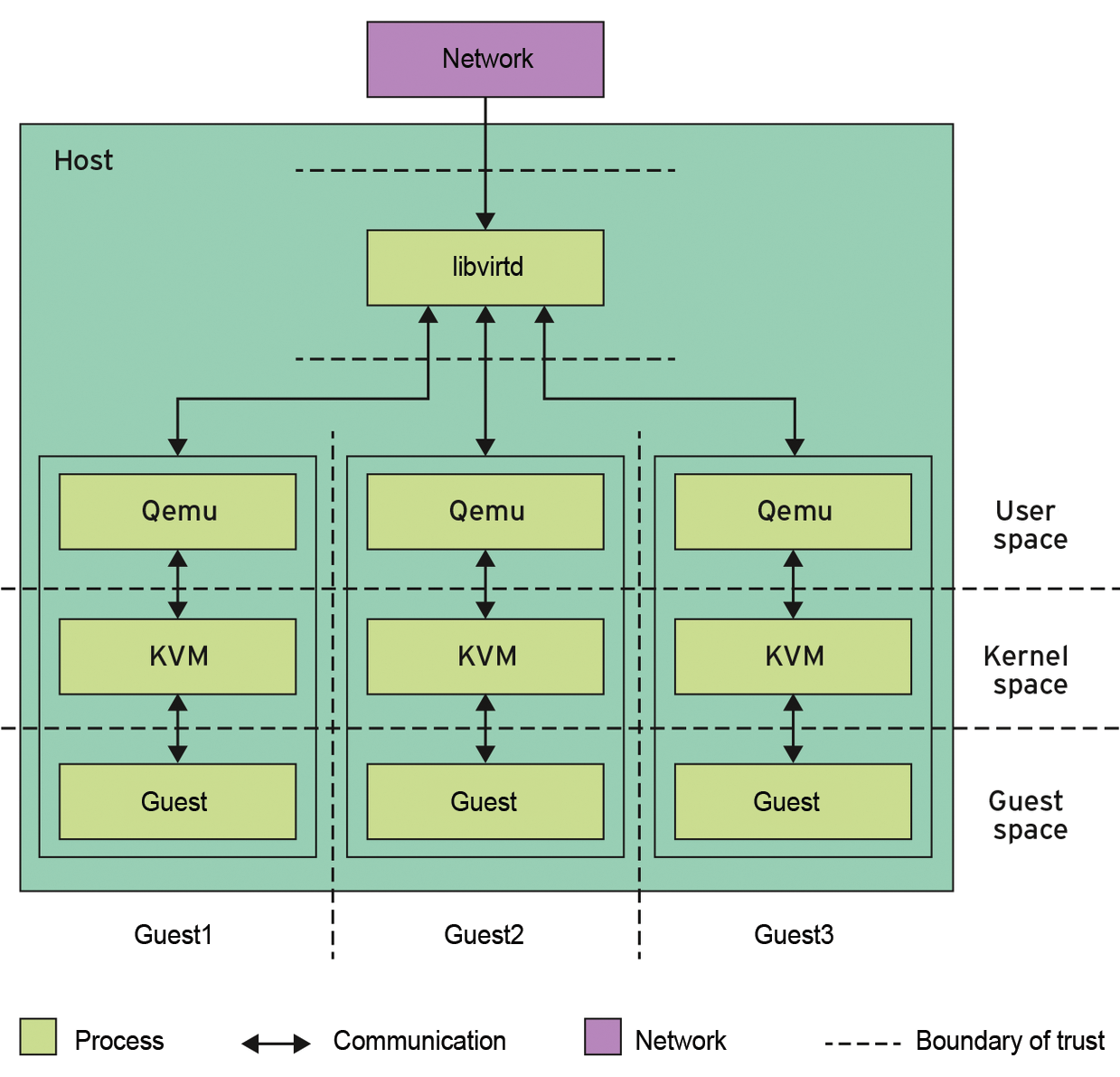

To begin, you need to be very familiar with the main software components of your KVM ecosystem and how they interact. KVM [1], short for kernel-based virtual machine, is at the heart of the Linux virtualization universe. The KVM component of the Linux kernel provides only the basic functions for running VMs, so that the application using these functions does not have to deal with all the details of the hardware.

KVM and Qemu

Any Linux application can use the KVM functions provided by the kernel, but in practice, only one is relevant: The Quick Emulator (Qemu) [2] uses KVM to run a guest system largely natively on the physical hardware [3]. However, Qemu itself handles security-critical operations on behalf of the guest, in particular access to the (virtual) hardware. Such an access interrupts native execution, and KVM takes control; KVM then typically hands the hardware access over to Qemu. In other words, Qemu only comes into play when the guest needs more than CPU and main memory, for example, hard disks, the network, or the graphics adapter.

Even KVM and Qemu together cannot cover the entire field of modern virtualization; for that, you need a third core component, libvirt [4]. On the one hand, libvirt lets you run multiple Qemu processes and, thus, several guests on one host. On the other hand, it provides an interface for starting and stopping guests or taking snapshots of VMs.

The virsh and virt-manager tools rely on the libvirt interface to help admins manage the whole enchilada, and programmers can use the libvirt services too, in particular for complex cloud solutions (e.g., OpenStack). Figure 1 outlines the interaction between KVM, Qemu, and libvirt.

Hardening Systems

The lion's share of the code in the overall solution does not come from the KVM kernel component, but from Qemu, in the form of various platform emulators, and more code means a larger attack surface. Therefore, you should restrict the privileges of the Qemu user space process as far as possible. In practice, this can be done quite simply and effectively with SELinux or AppArmor.

Both access control systems have a reputation for being difficult to handle, and indeed, maintaining the rules manually (!) rarely repays the effort invested. However, distributions that offer one of the two as standard also include a working configuration for their packages, including libvirt.

The task of hardening Qemu therefore essentially comes down to choosing a Linux distro that supports SELinux or AppArmor out of the box. In this case, libvirt's sVirt feature automatically generates suitable rules that limit the privileges of each Qemu process. In particular, this prevents a Qemu process from accessing locally stored disk images that belong to other guests.

Libvirt also uses other hardening features, such as cgroups, to secure the Qemu process effectively, provided the operating system supports them; this is another reason to look closely at the Linux distro you use. Debian, for example, was not recommended at the time of the study because it did not enable SELinux or AppArmor out of the box. Ubuntu (with AppArmor), as well as Red Hat Enterprise Linux (RHEL) 7 and CentOS 7 (with SELinux), are recommended.
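To check whether libvirt on a given host actually confines Qemu with SELinux or AppArmor, you can look at the security models that `virsh capabilities` lists in its `<secmodel>` elements. The following POSIX shell sketch shows one way to do this; the function names `list_secmodels` and `has_mac_confinement` are hypothetical helpers for this example, and the parsing assumes the one-element-per-line XML layout that virsh normally prints.

```shell
# Print the security models listed in <secmodel> blocks of
# "virsh capabilities" output read from stdin. <model> elements
# elsewhere in the XML (e.g., the host CPU model) are skipped.
list_secmodels() {
  awk '
    /<secmodel>/ { insec = 1 }
    insec && /<model>/ {
      line = $0
      sub(/.*<model>/, "", line)
      sub(/<\/model>.*/, "", line)
      print line
    }
    /<\/secmodel>/ { insec = 0 }
  '
}

# Succeed if at least one mandatory access control model is listed;
# "dac" alone (plain user/group separation) does not count.
has_mac_confinement() {
  list_secmodels | grep -Eqx 'selinux|apparmor'
}
```

On a host, `virsh capabilities | has_mac_confinement && echo confined` should print `confined` when sVirt is active. On SELinux systems, `ps -eZ | grep qemu` additionally shows the per-guest labels, which should contain `svirt_t`.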

Kernel Samepage Merging

RHEL and its clone CentOS fall short elsewhere, however: When you install Qemu, Kernel Samepage Merging (KSM) [5] is activated automatically. This well-meant technique deduplicates memory pages with identical content and promises efficiency on a virtualization host, because guests running the same operating system have many memory pages with identical content. Thanks to KSM, the host only needs to keep each of these pages in RAM once.

Apart from the fact that KSM often saves substantially less memory in practice than expected, it comes with significant security risks. The problem is that two guests sharing the same physical memory page can observe each other's accesses to that page. The first access loads the page into the CPU cache, so subsequent reads return the data measurably faster. With KSM, a second guest can exploit this timing difference to determine whether the first guest has read data identical to its own.

If you think that this is only significant under laboratory conditions, think again: Irazoqui et al. [6] have shown how a guest in a real cloud could discover the keys of an AES implementation of OpenSSL.

Any admin of virtualization environments in production use should therefore make sure that KSM is disabled; on RHEL 7, CentOS 7, and some other distributions, you have to do this manually. Listing 1 shows how to check whether KSM is active, along with the output on a host where it is disabled. If the output on your system differs, KSM is very probably active. In this case, Listing 2 shows how to switch off KSM on a CentOS 7 system.

Listing 1: Checking the Status of KSM

    # cat /sys/kernel/mm/ksm/run
    0
    # cat /sys/kernel/mm/ksm/pages_shared
    0
    # pgrep ksmtuned
    #

Listing 2: Disabling KSM

    # systemctl stop ksmtuned
    # systemctl stop ksm
    # systemctl disable ksm
    # systemctl disable ksmtuned
    # echo 2 > /sys/kernel/mm/ksm/run
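The checks from Listing 1 can be bundled into a small function that you run after the steps in Listing 2 or from a monitoring job. This POSIX shell sketch is hypothetical (the name `ksm_check` and the `KSM_DIR` override exist only for this example). It reads the standard sysfs files and interprets `run` as the kernel documents it: 0 stops `ksmd` but keeps pages that are already merged, 1 runs `ksmd`, and 2 stops it and unmerges all merged pages, which is why Listing 2 ends with `echo 2`.

```shell
# Summarize the KSM state from sysfs. Returns 0 if KSM cannot be
# sharing pages right now, 1 otherwise. KSM_DIR is a hypothetical
# override for testing; it defaults to the real sysfs directory.
ksm_check() {
  local dir="${KSM_DIR:-/sys/kernel/mm/ksm}"
  local run shared status=0

  if [ ! -r "$dir/run" ]; then
    echo "no KSM interface in sysfs (kernel without KSM support?)"
    return 0
  fi
  run=$(cat "$dir/run")
  shared=$(cat "$dir/pages_shared")

  case "$run" in
    0) echo "ksmd stopped (run=0); pages merged earlier stay merged" ;;
    1) echo "ksmd is RUNNING (run=1)"; status=1 ;;
    2) echo "ksmd stopped and merged pages unmerged (run=2)" ;;
    *) echo "unexpected value in run: $run"; status=1 ;;
  esac

  # Pages that are still shared keep the cache side channel open
  if [ "$shared" -ne 0 ]; then
    echo "pages_shared=$shared: merged pages are still in use"
    status=1
  fi

  # ksmtuned can start ksmd again on its own
  if command -v pgrep >/dev/null 2>&1 && pgrep ksmtuned >/dev/null 2>&1; then
    echo "ksmtuned is running and may start ksmd again"
    status=1
  fi
  return "$status"
}
```

A non-zero exit status from `ksm_check` means that KSM may still be sharing memory pages between guests on this host.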

The Network Connection

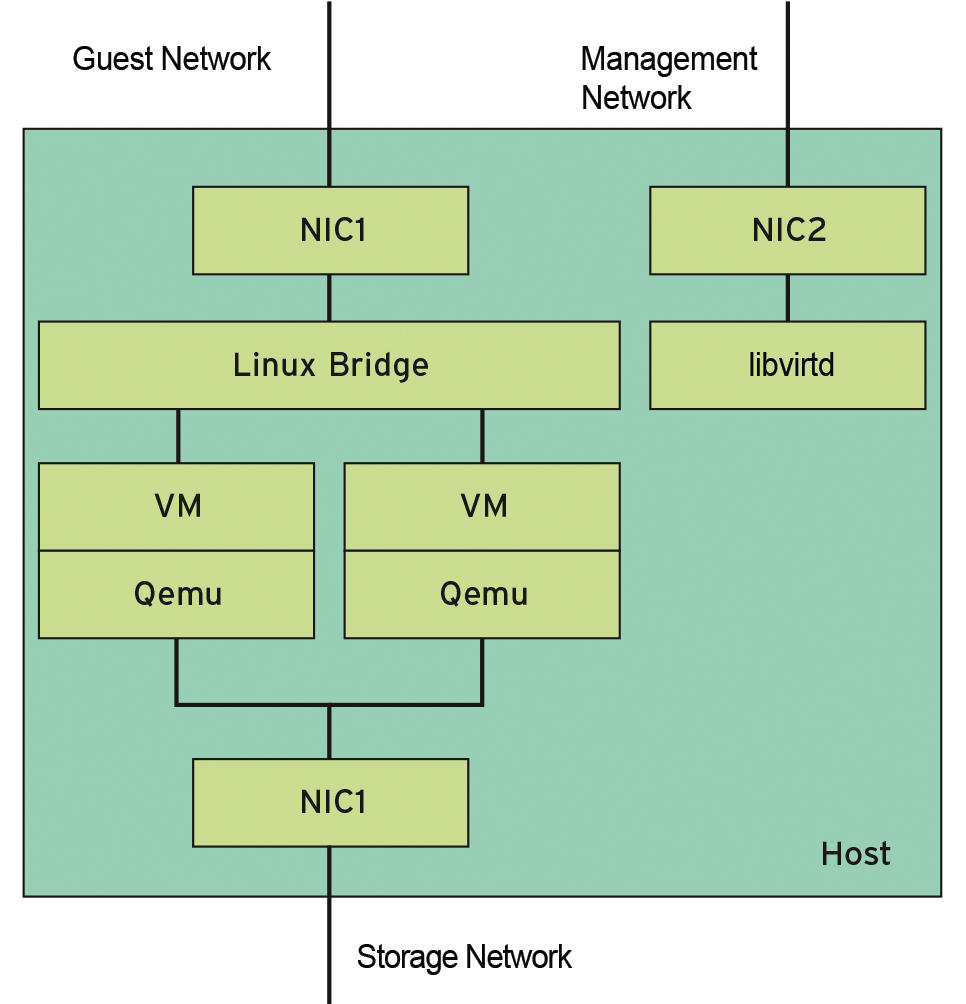

Another challenge for each virtualization host is ensuring a secure network connection for the guests. Essentially, three different types of network communication occur: (1) guests communicate with other guests or the outside world, (2) administrative traffic manages and controls the guests, and (3) access to the hard disk images generates traffic to the storage back end. Admins typically separate these flows by using multiple physical networks. Figure 2 outlines a simple host structure with three network interfaces.

The problem is that the Linux kernel loves to communicate: If the host is configured incorrectly, a guest might be able to reach the other networks through the host, rather than just the guest network. In an unfavorable case, this can mean, for example, that the guest gains access to disk images belonging to other guests.

An Attack Scenario

The following example assumes that the host implements the connection as a Linux bridge. (Similar dangers, however, also exist with other connectivity methods, such as MacVTap [7].) The host then uses Layer 2 packet forwarding; in the simplest case, it acts as a switch, forwarding frames between the guests themselves or between the guests and the host's external network interface on the guest network. IP routing is usually handled by an external device, and the guest obtains its address via DHCP.

But what if the guest uses the host as its router? How does the host react if it receives a packet from the guest with a destination IP address belonging to a computer on the storage back end? Technically, this boils down to whether IP forwarding is enabled on the host. You would assume it is not, because the host has no reason to route packets.

Unfortunately, libvirt enables it automatically: It switches on IP forwarding whenever it starts a NAT-based virtual network, and on many distributions, its default network of this type is set to start automatically after installation. If the administrator then makes the mistake of assigning IP addresses not only to the network interfaces for the management and storage networks, but also to the interface on the guest network, the barn door is wide open. Now the host acts as a router that generously passes packets between the networks for anyone who asks.
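Whether a host is in the dangerous state just described can be read from `/proc/sys/net/ipv4/ip_forward` and from the addresses on the guest-facing interface. The sketch below assumes a POSIX shell with iproute2's `ip` command; the function names `iface_ipv4_addrs` and `forwarding_risk`, the `PROC_ROOT` override, and the interface name `br-guest` in the usage example are hypothetical.

```shell
# Print the IPv4 addresses (ADDR/PREFIX) on interface $1, one per line;
# in "ip -o -4 addr show" output, field 4 holds the address.
iface_ipv4_addrs() {
  ip -o -4 addr show dev "$1" | awk '{ print $4 }'
}

# Report IPv4 forwarding and the addresses on guest interface $1.
# Returns 0 if forwarding is off, 1 if it is on.
# PROC_ROOT is a hypothetical override for testing (default: /proc).
forwarding_risk() {
  local iface="$1" fwd addrs
  fwd=$(cat "${PROC_ROOT:-/proc}/sys/net/ipv4/ip_forward")
  addrs=$(iface_ipv4_addrs "$iface" | tr '\n' ' ')
  echo "net.ipv4.ip_forward = $fwd"
  if [ -n "$addrs" ]; then
    echo "$iface has IPv4 address(es): $addrs"
  fi
  if [ "$fwd" != "1" ]; then
    return 0
  fi
  if [ -n "$addrs" ]; then
    echo "RISK: forwarding is on and $iface has an address; the host routes between its networks"
  else
    echo "WARNING: forwarding is on; guests may still use the host as a router"
  fi
  return 1
}
```

For example, `forwarding_risk br-guest` checks a guest bridge named `br-guest`. The function also warns when forwarding is on but the interface has no address, because forwarding alone can still be enough, as the next section explains.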

Routing Without an IP Address

Even if the guest network interface has no IP address, the guest might still manage to communicate beyond the boundary of the guest network because of the IP forwarding mechanism, which is simple in design but anything but intuitive. What does a Linux system do with a received packet that is addressed to itself at Layer 2 (MAC address) but to another system at Layer 3 (IP address)? Put simply: If IP forwarding is enabled, Linux forwards the packet to the target system; otherwise, it drops the packet. It follows that the interface on which a packet arrives does not need an IP address of its own for the kernel to forward the packet.

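Often the reply does not make it back to the guest, but even a single misrouted DHCP or DNS packet can cause a great deal of damage. The admin must therefore ensure that no IP address is assigned to the host's network interface on the guest network, that IP forwarding is disabled, and that the reverse path (RP) filter is switched on. The RP filter drops incoming packets whose source address the host would not route back out through the interface on which they arrived, so a guest cannot slip packets with spoofed addresses into the other networks.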
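One way to make these settings persistent is a drop-in file under `/etc/sysctl.d/`, which systemd-based distributions read at boot and which `sysctl --system` applies immediately. The file name below is hypothetical, as are the helper names and the `PROC_ROOT` override. The second function reflects a kernel detail worth knowing: For each interface, the kernel uses the larger of `conf/all/rp_filter` and `conf/<interface>/rp_filter`, so setting `all` to 1 switches on strict filtering for every interface whose own value is 0.

```shell
# Write the hardening settings to a sysctl drop-in file; $1 overrides
# the hypothetical default path (e.g., for testing).
write_hardening_conf() {
  local conf="${1:-/etc/sysctl.d/90-kvm-host-hardening.conf}"
  cat > "$conf" <<'EOF'
# Do not route packets between guest, management, and storage networks
net.ipv4.ip_forward = 0
# Reverse path filter: 1 = strict (RFC 3704), 2 = loose
net.ipv4.conf.all.rp_filter = 1
net.ipv4.conf.default.rp_filter = 1
EOF
}

# Print the rp_filter value the kernel actually applies to interface $1:
# the maximum of conf/all/rp_filter and conf/$1/rp_filter.
# PROC_ROOT is a hypothetical override for testing (default: /proc).
effective_rp_filter() {
  local base="${PROC_ROOT:-/proc}/sys/net/ipv4/conf" all own
  all=$(cat "$base/all/rp_filter")
  own=$(cat "$base/$1/rp_filter")
  if [ "$all" -gt "$own" ]; then echo "$all"; else echo "$own"; fi
}
```

As root, `write_hardening_conf && sysctl --system` applies the file, and `ip addr flush dev br-guest` removes any addresses from a guest bridge (the bridge name is again only an example). Keep in mind that libvirt switches forwarding back on whenever it starts a NAT or routed virtual network; if you do not need its default network, `virsh net-destroy default` and `virsh net-autostart default --disable` stop it and keep it from starting at boot.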

A Study as a Source

The security of KVM-based virtualization is a highly complex topic, which is why I have singled out only a few, albeit very central, issues. The material comes from a 2016 security analysis that OpenSource Security Ralf Spenneberg [8] performed on behalf of the German Federal Office for Information Security (BSI) [9]. The company investigated not only the security of KVM itself, but also that of its ecosystem, consisting of Qemu and libvirt, as well as network-based data storage with Ceph and GlusterFS. The study is due to be published soon.