Software-defined storage with LizardFS

Designer Store

Most experienced IT professionals equate storage with large appliances that combine software and hardware from a single vendor. In the long term, such boxes can prove inflexible, because they only scale to a predetermined size and are difficult to migrate to solutions from other vendors. Enter software-defined storage (SDS), which abstracts the storage function from the hardware.

Distributed SDS filesystems combine heterogeneous, conventional servers with hard disks or solid-state drives (SSDs) into a pool in which each system contributes free storage and, depending on the solution, takes on different tasks. Scaling is easy right from the start: You just add more equipment. Flexibility comes from independence from the hardware vendor and the ability to respond quickly to growing demand. Modern storage software has fail-safes that keep data available across server boundaries.

Massive Differences

The open source world offers solutions such as Lustre, GlusterFS, Ceph, and MooseFS. They are not functionally identical; for example, Ceph focuses on object storage. Especially sought after is SDS that provides a POSIX-compatible filesystem, so that, from the client's perspective, the distributed filesystem [1] behaves much like an ordinary local disk.

Some of these storage solutions are controlled by companies such as Xyratex (Lustre) and Red Hat (GlusterFS and Ceph). Others depend on a few developers and at times see little or no maintenance. At the MooseFS project [2] (Core Technology), for example, hardly any activity was visible in the summer of 2013, and the system, launched in mid-2008, looked like a one-man project without a long-term strategy or an active community. It was precisely this apparent abandonment of MooseFS that prompted a handful of developers to fork it and continue development under the GPLv3 license. LizardFS [3] was born.

The developers see LizardFS as a distributed, scalable filesystem with enterprise features such as fault tolerance and high availability. About 10 core developers work on the software under the umbrella of Warsaw-based Skytechnology [4]. If you want to install it, you can build LizardFS from source or pick up packages for Debian, Ubuntu, CentOS, and Red Hat from the download page [5]. Several distributions have also officially included the software in recent months.

Components and Architecture

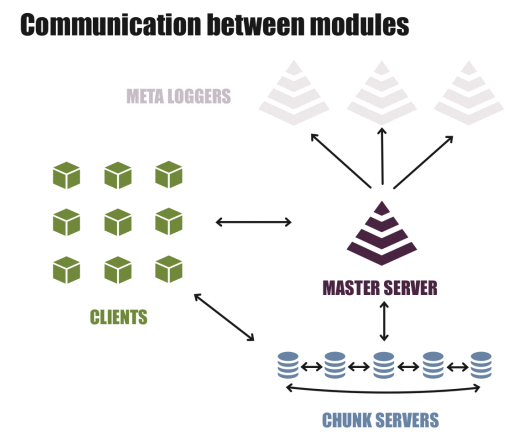

The LizardFS design separates the metadata (e.g., file names, locations, and checksums) from the data, which helps avoid inconsistencies and supports atomic operations at the filesystem level. All operations are coordinated by a master, which stores all the metadata and is the central point of contact for server components and clients.

To protect against failure of the master, a second master can run in a shadow role. To set this up, you install a master on an additional server that remains passive. The shadow master continuously picks up all changes to the metadata and thus mirrors the state of the filesystem in its own RAM. If the master fails, the system running the shadow master assumes the active role and provides all participants with information (Figure 1).

Automatic failover between the primary master and a theoretically unlimited number of secondary masters is missing from the open source version of LizardFS. Administrators must either switch over manually or build their own failover mechanism on a Pacemaker cluster. Because switching the master role only involves changing a configuration variable and reloading the master daemon, administrators with cluster experience will quickly develop their own solutions.
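The configuration half of such a manual switchover can be sketched in Python. The sketch assumes the master's configuration file contains a `PERSONALITY` setting that takes `master` or `shadow`, as in LizardFS's mfsmaster.cfg; treat the key name as an assumption. The functions only rewrite the configuration text and do not touch any running daemon.

```python
import re

# Matches a PERSONALITY line, optionally commented out, e.g. "PERSONALITY = shadow".
# The key name is taken from LizardFS's mfsmaster.cfg and is an assumption here.
_PERSONALITY_RE = re.compile(r"^[ \t]*#?[ \t]*PERSONALITY[ \t]*=.*$", re.MULTILINE)


def set_personality(config_text: str, role: str) -> str:
    """Return config_text with PERSONALITY set to role ("master" or "shadow")."""
    if role not in ("master", "shadow"):
        raise ValueError(f"unknown role: {role!r}")
    new_line = f"PERSONALITY = {role}"
    if _PERSONALITY_RE.search(config_text):
        # Rewrite only the first matching line; one setting per file is expected.
        return _PERSONALITY_RE.sub(new_line, config_text, count=1)
    # No PERSONALITY line yet: append one.
    separator = "\n" if config_text and not config_text.endswith("\n") else ""
    return f"{config_text}{separator}{new_line}\n"


def promote(config_text: str) -> str:
    """Turn a shadow configuration into a master configuration."""
    return set_personality(config_text, "master")
```

After writing the result back to the file, the daemon still has to be reloaded (for example, with `mfsmaster reload`, assuming the MooseFS-style daemon commands). A Pacemaker resource agent would wrap both steps.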

Chunk Servers for Storage

The chunk servers are responsible for managing, saving, and replicating the data. All chunk servers are interconnected and combine their local filesystems into a pool. LizardFS divides files into chunks (stripes) of a certain size, but from the clients' perspective they remain ordinary files.
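To illustrate how a file maps onto chunks, the following sketch splits a byte range into per-chunk pieces. It assumes a chunk size of 64 MiB, the figure commonly cited for MooseFS and LizardFS; treat it as an assumption rather than a guaranteed constant. The result is roughly what a client needs before asking the master where each chunk lives.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # assumed chunk size: 64 MiB


def chunk_ranges(offset: int, length: int, chunk_size: int = CHUNK_SIZE) -> list:
    """Split the byte range [offset, offset + length) into per-chunk pieces.

    Returns a list of (chunk_index, offset_in_chunk, piece_length) tuples.
    """
    if offset < 0 or length < 0:
        raise ValueError("offset and length must be non-negative")
    pieces = []
    pos, end = offset, offset + length
    while pos < end:
        index = pos // chunk_size
        in_chunk = pos % chunk_size
        # Take what is left in this chunk, or less if the range ends earlier.
        piece = min(chunk_size - in_chunk, end - pos)
        pieces.append((index, in_chunk, piece))
        pos += piece
    return pieces


def chunk_count(file_size: int, chunk_size: int = CHUNK_SIZE) -> int:
    """Number of chunks a file of file_size bytes occupies (ceiling division)."""
    return -(-file_size // chunk_size)
```

With these assumptions, a 200 MiB file occupies four chunks, and an 8 MiB read starting at 60 MiB touches the end of chunk 0 and the beginning of chunk 1.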

As with all other components, you can install chunk servers on any Linux system. Ideally, they should have fast storage media (e.g., serial-attached SCSI hard drives or SSDs) and contribute part of their filesystem to the storage pool. In a minimal setup, a chunk server runs on a virtual machine and shares a single filesystem (e.g., a 20GB ext4 volume).

The metadata backup logger continuously collects the metadata changes (much like a shadow master) and should ideally run on its own system. Unlike a master, it does not keep the metadata in memory but writes it to its local filesystem. In the unlikely event that all LizardFS masters fail completely, a backup is thus available for disaster recovery.

None of the components has strict requirements for the underlying system. Only the master server needs somewhat more memory, depending on the number of files it manages.

What the Clients Do

From the perspective of its clients, LizardFS provides a POSIX filesystem that, like NFS, is mounted into the local directory tree (e.g., under /mnt). Admins should not expect too much in terms of account management: LizardFS does not support users and groups that come from LDAP or Active Directory. Any client on the LAN can mount any share. If you want to restrict access, your only options are to make individual shares read-only or to define access control lists (ACLs) based on network segments, IP addresses, or both. You can also define one (!) password for all users.

Although the server components require Linux, clients can run on Linux, where they install the lizardfs-client package, or on Windows, where they access the network filesystem using a proprietary tool from Skytechnology. The configuration file for LizardFS exports is clearly modeled on the /etc/exports file known from NFS and adopts many of the familiar NFS parameters.
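The network-based access rules can be illustrated with a small matcher. The rule lines follow the NFS-like `ADDRESS DIRECTORY OPTIONS` layout that LizardFS inherited from MooseFS (mfsexports.cfg), but the parser is a simplified, hypothetical sketch: It only understands `*`, single IPv4 addresses, and CIDR networks, compares directories exactly, and applies the first matching rule.

```python
import ipaddress
from typing import Optional


def parse_rule(line: str):
    """Parse 'ADDRESS DIRECTORY [OPTIONS]' into (network, directory, options)."""
    parts = line.split()
    if len(parts) < 2:
        raise ValueError(f"malformed rule: {line!r}")
    address, directory = parts[0], parts[1]
    options = parts[2].split(",") if len(parts) > 2 else []
    # "*" means any IPv4 client; a single IP becomes a /32 network.
    network = ipaddress.ip_network("0.0.0.0/0" if address == "*" else address, strict=False)
    return network, directory, options


def find_export(rules_text: str, client_ip: str, directory: str) -> Optional[list]:
    """Return the options of the first rule matching client and directory, or None."""
    ip = ipaddress.ip_address(client_ip)
    for raw in rules_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        network, export_dir, options = parse_rule(line)
        if ip in network and export_dir == directory:
            return options
    return None  # no matching rule: the client may not mount this directory


# Hypothetical sample rules in the exports style described above.
SAMPLE_RULES = """
# address          directory  options
192.168.10.0/24    /          rw,alldirs
10.0.0.5           /media     ro
*                  /public    ro
"""
```

For example, `find_export(SAMPLE_RULES, "10.0.0.5", "/media")` yields `["ro"]`, whereas any other host asking for `/media` gets `None`.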

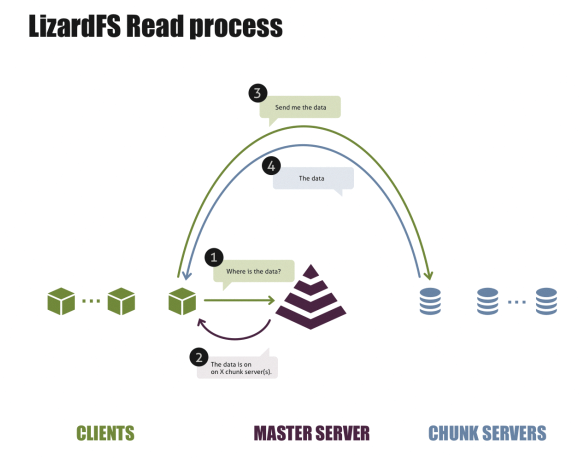

When a user or an application accesses a file in the storage pool, the LizardFS client contacts the current master, which knows the active chunk servers and the data they host. The master randomly selects a suitable chunk server and passes it to the client, which then contacts that chunk server directly and requests the data (Figure 2).
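The read path can be modeled in a few lines. This is a toy simulation, not the LizardFS protocol: The hypothetical `Master` class holds a map from chunk IDs to the chunk servers storing copies, picks one online server at random, and the client then reads directly from that server.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ChunkServer:
    name: str
    online: bool = True
    data: dict = field(default_factory=dict)  # chunk_id -> bytes


@dataclass
class Master:
    servers: dict    # server name -> ChunkServer
    locations: dict  # chunk_id -> list of server names holding a copy
    rng: random.Random = field(default_factory=random.Random)

    def locate(self, chunk_id: str) -> ChunkServer:
        """Pick one online server holding a copy of chunk_id at random."""
        candidates = [self.servers[n] for n in self.locations.get(chunk_id, [])
                      if self.servers[n].online]
        if not candidates:
            raise LookupError(f"no online copy of {chunk_id}")
        return self.rng.choice(candidates)


def client_read(master: Master, chunk_id: str) -> bytes:
    # Step 1: ask the master where the chunk is.
    server = master.locate(chunk_id)
    # Step 2: fetch the data directly from that chunk server.
    return server.data[chunk_id]
```

Because the master only hands out a location, the bulk data never passes through it, which keeps the master from becoming a bandwidth bottleneck.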

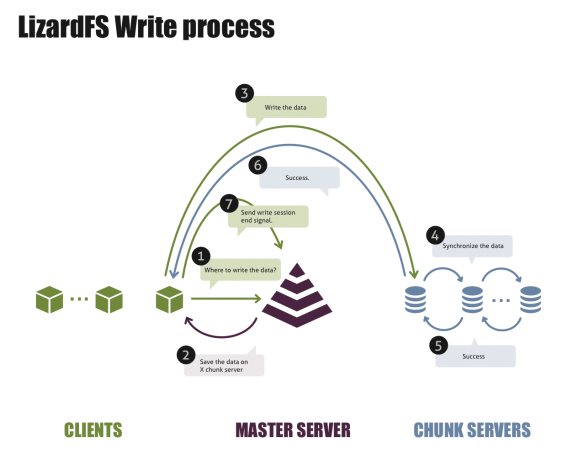

The client also talks to the master for write operations, for example, in case the LizardFS balancing mechanisms have moved the file in question or the previous chunk server has gone offline. In the default configuration, the master also randomly selects a chunk server to store the data, while making sure that all servers in the pool remain evenly loaded.
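One way to combine random choice with even load is to choose at random among the least-used servers. The sketch assumes that load is measured as the fraction of disk space in use and that servers within a hypothetical `tolerance` of the minimum count as equally loaded; the actual LizardFS selection logic may differ.

```python
import random


def pick_write_target(usage: dict, tolerance: float = 0.05, rng=random) -> str:
    """Choose a chunk server for a new chunk.

    usage maps server name -> fraction of disk space in use (0.0 to 1.0).
    Servers within `tolerance` of the least-used one are treated as equally
    good, and one of them is chosen at random, spreading writes evenly.
    """
    if not usage:
        raise ValueError("no chunk servers available")
    lowest = min(usage.values())
    candidates = sorted(name for name, used in usage.items() if used <= lowest + tolerance)
    return rng.choice(candidates)
```

The randomness keeps many clients from piling onto the same "best" server at once, while the tolerance band prevents heavily filled servers from receiving new data.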

Once the master has selected a chunk server for the write operation and passed its name to the client, the client sends the data to the target chunk server (Figure 3), which confirms the write and, if necessary, triggers replication of the newly written data. In this way, the chunk server ensures that the data reaches a replication target as quickly as possible. A response to the LizardFS client signals that the write succeeded. The client then closes the open write session by informing the master that the operation is complete.
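The write sequence just described can be written down as an ordered message trace. This is a simplified model with placeholder names, not the LizardFS wire protocol; it places the replication step before the confirmation to the client, an ordering the description does not pin down exactly.

```python
def write_sequence(client: str, master: str, target: str, replicas: list) -> list:
    """Return the ordered (sender, receiver, message) steps of one write.

    `replicas` are the additional chunk servers the target copies the data to;
    all names are hypothetical placeholders.
    """
    steps = [
        (client, master, "request write location"),
        (master, client, f"use {target}"),
        (client, target, "send data"),
    ]
    # The target chunk server, not the client, triggers replication.
    for replica in replicas:
        steps.append((target, replica, "replicate data"))
    steps += [
        (target, client, "write confirmed"),
        (client, master, "write session finished"),
    ]
    return steps
```

The key point the trace makes visible is that the client sends the data only once, regardless of how many replicas the goal requires.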

During both read and write operations, the LizardFS client takes a topology into account, if one is configured. Where it makes sense, the client prefers a nearby chunk server over those that would incur higher latency. Whether a server counts as near or far can be defined by labels (such as kansas, rack1, or ssd) or by the IP addresses of the nodes.
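Ranking chunk servers by proximity could look like the following sketch. The distance scheme (0 for the same host, 1 for the same label, such as a rack, 2 for anything else) is an assumption for illustration, not the exact metric LizardFS uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    ip: str
    label: str  # e.g. "rack1" or "kansas"


def distance(client: Node, server: Node) -> int:
    """Assumed distance: 0 same host, 1 same label, 2 anything else."""
    if client.ip == server.ip:
        return 0
    if client.label == server.label:
        return 1
    return 2


def order_by_proximity(client: Node, servers: list) -> list:
    """Sort candidate chunk servers so that the nearest come first.

    sorted() is stable, so servers at equal distance keep their original order.
    """
    return sorted(servers, key=lambda s: distance(client, s))
```

A client on 10.0.1.5 in rack1 would thus try a chunk server on its own host first, then one elsewhere in rack1, and only then one in another rack.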

Topologies and Goals

By nature, LizardFS runs on multiple servers, although functionally it makes no difference whether these are virtual or dedicated machines. Each server can take on any role, and roles can change, although specialization is often worthwhile. For example, chunk servers belong on hosts with large, fast hard disk drives, whereas the master server in particular needs more CPU and memory. The metadata backup logger, on the other hand, is happy on a small virtual machine or a backup server because of its modest requirements.

LizardFS uses predefined replication targets (goals) to copy the data across servers as often as required, which makes it both redundant and fault-tolerant. If a chunk server fails, the data therefore remains available on other servers, provided the goal calls for more than one copy. Once the defective system is repaired and added back into the cluster, LizardFS automatically redistributes the files to meet the replication targets.
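The repair logic behind goals boils down to comparing the number of valid copies of each chunk with its goal. The sketch uses hypothetical data structures: one map from chunk ID to goal and another from chunk ID to the servers holding copies.

```python
def replication_plan(goals: dict, copies: dict, online: set) -> dict:
    """Return chunk_id -> number of extra copies needed to meet the goal.

    goals:  chunk_id -> desired number of copies
    copies: chunk_id -> set of server names holding a copy
    online: names of chunk servers currently reachable
    Chunks with no surviving copy cannot be repaired and map to -1.
    """
    plan = {}
    for chunk_id, goal in goals.items():
        valid = copies.get(chunk_id, set()) & online  # ignore copies on failed servers
        if not valid:
            plan[chunk_id] = -1  # data lost: every copy was on failed servers
        elif len(valid) < goal:
            plan[chunk_id] = goal - len(valid)
    return plan
```

The example in the test shows why goal 1 is risky: When server cs2 fails, chunks whose only copy lived there cannot be recovered, whereas chunks with goal 2 merely need one new copy.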

Admins configure the goals on the server side; alternatively, clients can also set replication goals. For example, a client can store files on a mounted filesystem normally (i.e., redundantly), while temporary files are stored without replicated copies. Giving clients some room to configure file goals adds a certain degree of flexibility.
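Because goals can be set on individual files or directories, a natural way to model the effective goal is inheritance: A path without its own setting uses the goal of its nearest ancestor that has one. This inheritance rule, the fallback goal of 1, and the mount point /mnt/lizardfs are assumptions for illustration.

```python
from pathlib import PurePosixPath

DEFAULT_GOAL = 1  # assumed fallback when nothing on the path sets a goal


def effective_goal(path: str, explicit_goals: dict) -> int:
    """Return the goal for path, walking up to the nearest explicit setting.

    explicit_goals maps absolute paths (files or directories) to goals.
    """
    p = PurePosixPath(path)
    for candidate in (p, *p.parents):
        goal = explicit_goals.get(str(candidate))
        if goal is not None:
            return goal
    return DEFAULT_GOAL
```

With goal 2 on the mount point and goal 1 on a tmp directory below it, regular documents get two copies while build artifacts in tmp get just one.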

You can feed LizardFS the topology of your own data center so that it knows whether chunk servers reside in the same rack or cage. If you configure the topology intelligently, replication traffic will only flow between adjacent switches or within a colocation space. Because LizardFS also reports the topology to the clients, they benefit from this locality, too.
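Keeping replication traffic local can be sketched as choosing, for a new copy, an existing copy on the same switch as the target. The mapping of IP networks to switch IDs is modeled loosely on the MooseFS-style topology file (one network and switch number per line); the two-level cost and the "nearest source" rule are assumptions.

```python
import ipaddress


def build_topology(rules: list) -> list:
    """Turn (cidr, switch_id) pairs into (network, switch_id) pairs."""
    return [(ipaddress.ip_network(cidr), switch) for cidr, switch in rules]


def switch_of(ip: str, topology: list):
    """Return the switch ID for ip, or None if no rule covers it."""
    addr = ipaddress.ip_address(ip)
    for network, switch in topology:
        if addr in network:
            return switch
    return None


def best_source(target_ip: str, source_ips: list, topology: list) -> str:
    """Pick the existing copy to replicate from, preferring the target's switch.

    Cost 0 = same switch as the target, 1 = anywhere else (or unknown).
    min() returns the first candidate among equal costs.
    """
    target_switch = switch_of(target_ip, topology)

    def cost(ip: str) -> int:
        same = target_switch is not None and switch_of(ip, topology) == target_switch
        return 0 if same else 1

    return min(source_ips, key=cost)
```

If a new copy has to land on 10.0.2.20, the sketch copies from 10.0.2.7 on the same switch rather than pulling the data across from 10.0.1.5.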

If you want to run LizardFS across data center boundaries, you will also want to use topologies as the basis for georeplication and tell LizardFS which chunk servers are located in which data center. Clients that access the storage pool can then be made to prefer chunk servers in the local data center over remote ones.

Furthermore, users and applications themselves do not need to worry about replication. Correct georeplication is a natural consequence of properly configured topologies, and the setup automatically synchronizes the data between two or more sites.

Scale and Protect

If the free space in the storage pool is running low, you can add another chunk server. Conversely, if you remove a server, the data needs to be stored elsewhere. If a chunk server fails, LizardFS automatically rebalances and offers alternative chunk servers as data sources to clients currently accessing their data. The master servers scale much like the chunk servers. If two masters are no longer enough (e.g., because a new location is added), you simply set up two more masters at that location.

If you want data protection on top of fail-safe, fault-tolerant storage, you need to take additional measures. Although LizardFS sometimes distributes files as stripes across multiple servers, this offers few benefits in terms of security and privacy. LizardFS has no built-in encryption mechanism, which is a genuine drawback.

At the very least, you need to protect the local filesystems on the chunk servers (e.g., by encrypting the hard disks or using LUKS-encrypted filesystem containers). This approach helps if hardware is stolen, but it does not stop anyone who runs a LizardFS client on the LAN from accessing the data on the running chunk servers.

In a talk on new features upcoming in LizardFS, Skytechnology's Chief Satisfaction Officer, Szymon Haly, reported at the beginning of November 2016 that his company definitely sees the need for encryption features. A new LizardFS version was planned for the first quarter of 2017 that would enable encrypted files and folders. Haly did not reveal the type of encryption or whether the new version would also use secure communication protocols.

Free Interface, Proprietary Failover

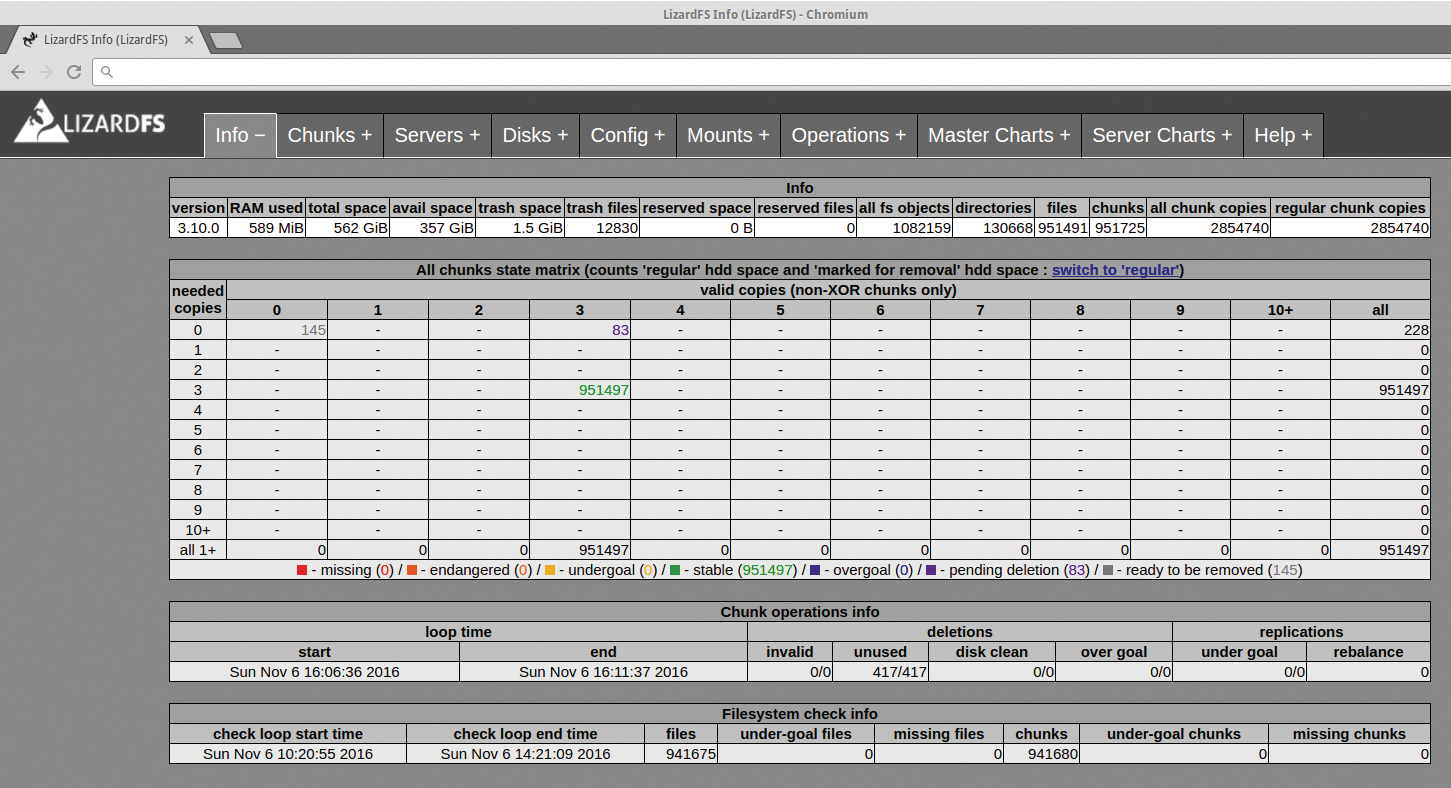

To help users and administrators keep track, LizardFS provides a simple but adequate web GUI in the form of the CGI server (Figure 4). The admin can install this component on any system and use it to get an overview of all the servers on the network, the number of files, their replication states, and other important information.

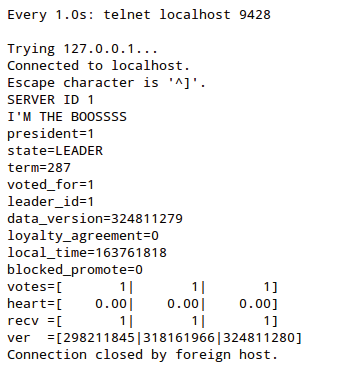

If you enter into a support agreement with Skytechnology, you can use the proprietary uRaft daemon, which is independent of LizardFS and provides automatic failover based on the Raft consensus algorithm [6]: All masters running the uRaft daemon use heartbeats to elect a primary master. For the quorum to work reliably, the number of masters must be odd. The masters also need to reside on the same network, because the primary master's floating IP address moves with the primary role.
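The reason for an odd number of masters follows from majority voting as used in Raft: A primary can only be elected if a majority of nodes agree. The generic arithmetic below (not uRaft's code) shows that a fourth master adds no fault tolerance over three.

```python
def quorum(nodes: int) -> int:
    """Smallest majority of `nodes` voters."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many nodes may fail while a majority can still be formed."""
    return nodes - quorum(nodes)


for n in range(1, 8):
    print(f"{n} masters: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Three and four masters both tolerate exactly one failure, and five tolerate two; an even count only adds a machine that must be reachable for the majority without improving resilience.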

With uRaft, simple shell scripts make sure that the master is promoted or demoted appropriately. If you run LizardFS with the uRaft daemon, you abandon the classic master and shadow roles and leave it to uRaft to manage them. uRaft even takes care of starting and stopping the master daemon, which makes the LizardFS master's init script redundant. Figure 5 shows uRaft providing information on cluster health.

Monitoring

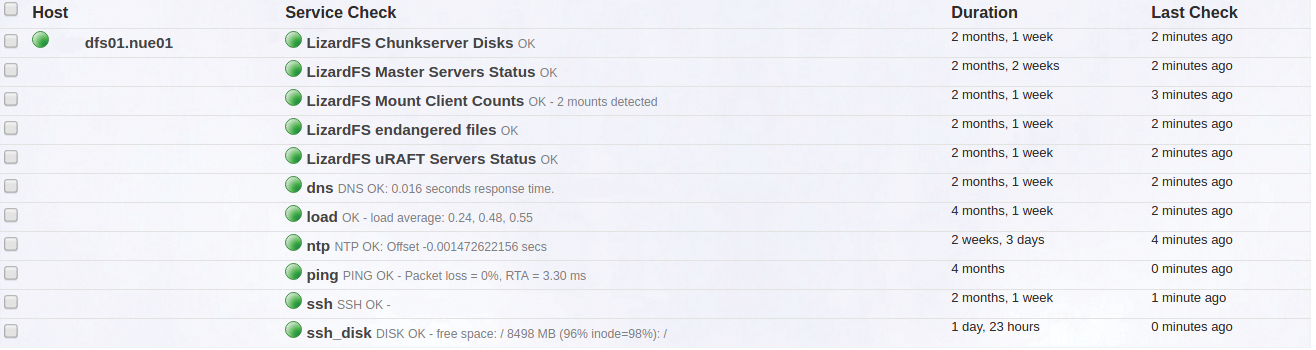

The aforementioned web interface is available for basic monitoring of a LizardFS environment, but it cannot replace classic monitoring. Almost all LizardFS administrative tools support a special output format that also lends itself to querying states, so nothing keeps you from connecting LizardFS to Nagios or some other monitoring tool.
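A custom check only needs to print a status line and exit with the standard Nagios plugin codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). The sketch assumes you have already obtained a list of chunk servers and their states from one of the administrative tools; the input format (one "name status" pair per line) and the thresholds are hypothetical.

```python
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3  # standard Nagios plugin exit codes


def check_chunkservers(listing: str, expected: int, warn_missing: int = 1,
                       crit_missing: int = 2):
    """Evaluate a hypothetical 'name status' listing; return (code, message)."""
    up = 0
    for line in listing.strip().splitlines():
        fields = line.split()
        if len(fields) != 2:
            return UNKNOWN, f"UNKNOWN - cannot parse line: {line!r}"
        if fields[1] == "up":
            up += 1
    missing = expected - up
    message = f"{up}/{expected} chunk servers up"
    if missing >= crit_missing:
        return CRITICAL, "CRITICAL - " + message
    if missing >= warn_missing:
        return WARNING, "WARNING - " + message
    return OK, "OK - " + message
```

Wrapped in a small script, the plugin would print the message and pass the code to `sys.exit()`, which is all Nagios needs to evaluate the result.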

Because LizardFS is compatible with an earlier version of MooseFS, many of the monitoring modules and plugins for the original project available on the Internet also work with LizardFS (Figure 6). Resourceful system administrators can write their own checks, as well. An even simpler solution is possible: I worked for a company that had entered into a support agreement with Skytechnology, and as part of the support services, we received Nagios checks that, apart from a few details, allowed reliable monitoring of the LizardFS components.

Where to Use It

The POSIX-compliant LizardFS can in principle be used wherever a client requires disk space. It makes the most sense when user data needs to be stored redundantly for better availability and you want to avoid classic (think "expensive and inflexible") storage appliances. Instead, LizardFS uses its own mechanisms to distribute the data across a pool of inexpensive standard machines that can be more or less large and more or less widely spread in terms of network topology.

However, this scheme can also be a disadvantage: You cannot specify that related data be stored contiguously on one chunk server. Although you can use topologies to influence the storage locations (e.g., one copy in rack 14, another in rack 15), this is no guarantee of fixed locations. Related data spread across more than one chunk server (and therefore possibly across rack or even data center boundaries) naturally experiences varying network latencies, so the response times of an application's read and write operations vary noticeably for users.

In practice, distributed storage proves detrimental for large, heavily used databases, so system architects would do well to be somewhat skeptical of LizardFS in this case. For other uses, however, it is perfectly suitable, for example, for rendering farms or media files, where all clients simultaneously access chunk servers that are nearby in terms of network topology, pushing server performance to the limit.

The ability to use LizardFS as storage for virtual machines is little known. According to its own statements, Skytechnology is working on better VMware integration.

Quotas, Garbage, Snapshots

When many systems or users access LizardFS volumes, you can enable quotas and restrict the use of disk space. You can also enable recycle bins for LizardFS shares for users who are used to having them in Samba and Windows shares. Files moved into the recycle bin remain on the chunk servers until they exceed the configured retention time. Administrators can mount shares with certain parameters, thus providing access to the virtual recycle bins. Unfortunately, you have no way to provide the users themselves access to previously deleted data.
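The retention rule for the recycle bin can be illustrated with a periodic sweep: Each deleted file records when it was deleted and its retention period, and entries whose period has expired are purged. The data layout below is hypothetical and only models the rule, not how LizardFS stores its trash.

```python
from dataclasses import dataclass


@dataclass
class TrashEntry:
    path: str
    deleted_at: float  # UNIX timestamp of the deletion
    trash_time: float  # retention period in seconds


def sweep(trash: list, now: float):
    """Split trash entries into (kept, purged) according to their retention."""
    kept, purged = [], []
    for entry in trash:
        expired = now >= entry.deleted_at + entry.trash_time
        (purged if expired else kept).append(entry)
    return kept, purged
```

Two days after deletion, a file with a one-day retention is purged, whereas one with a seven-day retention can still be restored by an administrator who mounts the trash.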

Snapshots offer another way to preserve files. Duplicating a file as a snapshot is particularly efficient, because the master server copies only the metadata. Only when the content of the original starts to diverge from the snapshot does the chunk server write the affected blocks separately.
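This is copy-on-write. A minimal model: A snapshot copies only the file's list of chunk references (the metadata), chunks are reference-counted, and a write to a shared chunk gives the writing file its own chunk. The `Store` class and its methods are hypothetical and only illustrate the principle.

```python
import itertools


class Store:
    """Toy copy-on-write store: files are lists of chunk IDs."""

    def __init__(self):
        self.chunks = {}    # chunk_id -> bytes (the "chunk servers")
        self.refcount = {}  # chunk_id -> number of file entries referencing it
        self.files = {}     # name -> list of chunk_ids (the "metadata")
        self._ids = itertools.count()

    def _new_chunk(self, data: bytes) -> int:
        cid = next(self._ids)
        self.chunks[cid] = data
        self.refcount[cid] = 1
        return cid

    def create(self, name: str, parts: list):
        self.files[name] = [self._new_chunk(p) for p in parts]

    def snapshot(self, src: str, dst: str):
        # Only metadata is copied; the chunk data is shared.
        self.files[dst] = list(self.files[src])
        for cid in self.files[dst]:
            self.refcount[cid] += 1

    def write(self, name: str, index: int, data: bytes):
        """Replace the chunk at `index` of file `name` with `data`."""
        cid = self.files[name][index]
        if self.refcount[cid] > 1:
            # Shared chunk: allocate a private chunk holding the new data;
            # the other referencing file keeps the old one untouched.
            self.refcount[cid] -= 1
            self.files[name][index] = self._new_chunk(data)
        else:
            self.chunks[cid] = data

    def read(self, name: str) -> bytes:
        return b"".join(self.chunks[c] for c in self.files[name])
```

Creating the snapshot costs no data copies at all; after one chunk of the original is modified, the store holds exactly one additional chunk.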

Thanks to replication goals and topologies, you can store files so that copies are archived on a specific chunk server or group of chunk servers. LizardFS can natively address tape drives, so you can equip a group of chunk servers with Linear Tape-Open (LTO) media, thus ensuring that your storage system always keeps certain data on tape and that clients can read it if necessary.
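Label-based goals can be sketched as a list with one label per copy, where a wildcard accepts any server. The notation, with `_` as the wildcard, is modeled on LizardFS's custom goal definitions; treat the details as an assumption. The server names and the `tape` label are hypothetical.

```python
WILDCARD = "_"  # assumed: matches a chunk server with any label


def assign(goal_labels: list, servers: dict) -> dict:
    """Map each copy in the goal to a distinct server with a matching label.

    goal_labels: e.g. ["_", "_", "tape"] for two copies anywhere plus one on tape.
    servers: server name -> label.
    Specific labels are served first so wildcards do not take their servers.
    """
    # False (specific label) sorts before True (wildcard); sorting is stable.
    ordered = sorted(range(len(goal_labels)), key=lambda i: goal_labels[i] == WILDCARD)
    free = dict(servers)
    placement = {}
    for i in ordered:
        wanted = goal_labels[i]
        match = next((s for s in sorted(free) if wanted in (WILDCARD, free[s])), None)
        if match is None:
            raise LookupError(f"no free chunk server for label {wanted!r}")
        placement[i] = match
        del free[match]
    return placement
```

With two disk servers and one LTO-equipped server, the goal `["_", "_", "tape"]` places one copy on each machine, guaranteeing that one copy always ends up on tape.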

Compared

LizardFS competes with other SDS solutions such as Ceph and GlusterFS. Ceph is primarily an object store, comparable to the Amazon S3 API, that can also provide block devices; its POSIX-compliant filesystem, CephFS, is more of an overlay on the object store than a robust filesystem. CephFS was declared production-ready at the end of April 2016, so no long-term results are available for a direct comparison, which in any case would be of little real value.

On paper, GlusterFS offers almost the same functionality as LizardFS, but it has been on the market since 2005 and therefore enjoys an established standing in the SDS community. Red Hat's distributed storage system offers many modes of operation that yield different levels of reliability and performance depending on the configuration.

GlusterFS offers configuration options at the volume level, whereas LizardFS defines the replication targets at the folder or file level. Both variants have advantages and disadvantages. In the case of GlusterFS, you need to opt for a variant when creating the volume, whereas you can change the replication modes at any time with LizardFS.

Security-conscious system administrators appreciate the ability of GlusterFS to encrypt a volume with a key. Only servers that have the correct key are then entitled to mount and decrypt the volume. Both GlusterFS and LizardFS are implemented on Linux clients as Filesystem in Userspace (FUSE) modules.

The LizardFS client writes data to just one chunk server; from there, the chunk servers replicate the data among themselves. With GlusterFS, however, the client handles replication: The write operation occurs in parallel on all involved GlusterFS servers, so the client has to make sure the replication succeeded everywhere. This results in poorer write performance, but otherwise has few consequences.
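A back-of-the-envelope calculation shows the cost of client-side replication: The client's network link carries every copy, instead of just one. The figures (1GiB file, three copies, 1Gbit/s client link) are illustrative assumptions, and protocol overhead is ignored.

```python
def client_upload_seconds(size_bytes: int, copies: int, link_bits_per_s: float,
                          client_replicates: bool) -> float:
    """Time the client's network link is busy uploading one file.

    client_replicates=True models sending every copy from the client;
    False models sending one copy and letting the servers replicate.
    """
    sent = size_bytes * (copies if client_replicates else 1)
    return sent * 8 / link_bits_per_s


GiB = 1024 ** 3
for mode in (True, False):
    t = client_upload_seconds(GiB, copies=3, link_bits_per_s=1e9, client_replicates=mode)
    print(f"client replicates={mode}: {t:.1f} s")
```

Under these assumptions, the client link is busy for about 25.8 seconds with client-side replication versus about 8.6 seconds when the servers replicate.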

Whereas a LizardFS client always depends on a single active master, GlusterFS clients can specify multiple servers when mounting a volume. If the first server in the list fails, the client automatically switches to one of the other nodes. This is a clear advantage over LizardFS, where the admin cannot guarantee a failure-safe environment without a proprietary component or a hand-crafted Pacemaker setup.
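The client-side fallback described for GlusterFS follows a simple pattern: Try the listed servers in order and use the first one that answers. The sketch is generic, not GlusterFS code; `connect` is a caller-supplied function, and the host names in the test are hypothetical.

```python
from typing import Callable, Iterable


def connect_with_fallback(servers: Iterable[str], connect: Callable[[str], object]):
    """Try servers in order and return (server, connection) for the first success."""
    errors = []
    for server in servers:
        try:
            return server, connect(server)
        except OSError as exc:  # e.g. connection refused or host unreachable
            errors.append(f"{server}: {exc}")
    raise ConnectionError("all servers failed: " + "; ".join(errors))
```

Because the list lives on the client, no extra cluster component is needed to survive the loss of the first server.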

Conclusions

LizardFS is not positioned as a competitor to the Ceph object store, but as an alternative to GlusterFS, and it is already running as a production SDS solution in setups with several hundred terabytes. LizardFS has many features that are likely to match the needs of many potential SDS users (see the "Evaluation and Experience" box). Additionally, it can keep data in sync between multiple data centers. More excitement is in store if the developers stay true to their announcement and implement an S3-compatible API.

Future

During the one-year evaluation period, I witnessed the introduction of a number of new features: For example, documentation [7] was launched that even describes the installation. Skytechnology has also hired new developers, probably because of rapid growth: "We are now collaborating on completely replacing the remaining MooseFS code, so we can implement features that were previously not possible," reported Michal Bielicki, head of the DACH region (Germany-Austria-Switzerland) at LizardFS.

These changes should translate to significant improvements in performance and support for IPv6 and InfiniBand. Chief Satisfaction Officer Szymon Haly also announced file-based encryption for the first quarter of 2017 in an interview with Linux Magazine.

Further improvements will introduce advanced ACLs, better logging behavior for Windows clients, and minimal goal setting, which the community has been requesting for some time now, because these settings could improve reliability and data security.

Rumors that Skytechnology wants to share the uRaft proprietary failover component as an open source project were neither confirmed nor denied by company representatives in the interview. However, Adam Ochmanski, the founder and CEO of Skytechnology, had stated on GitHub at the end of August that LizardFS 3.12.0 would include uRaft [8]. It is unclear whether he was referring to the disclosure of the source code or publication in binary form.

It is unfortunate that Skytechnology, the company behind LizardFS, has so far reserved the automatic failover daemon for paying customers. Admins experienced in high availability might well manage to set up a working Pacemaker cluster, even without a cookbook, scripts, or tips describing how to do so. There is room for improvement in customer-facing communication (roadmap, more documentation, community work), software versioning (small changes always cause a major release), and technical details: An SDS today should come with native encryption for both data and communication between the nodes, and it should make sure that write operations remain possible even if many chunk servers are offline. With only about 10 active developers, this work is unlikely to be quick.