Modern Fortran for today and tomorrow

Up To Date

Fortran has been one of the key languages for technical applications since almost forever. With the rise of C and C++, the popularity of Fortran waned a bit, but not before a massive library of Fortran code had been written. The rise of scripted languages such as Perl, Python, and R also dented Fortran a bit, but it is still in use today for many excellent reasons and because of a HUGE library of active Fortran code.

Fortran 90 took Fortran 77 from the dark ages by giving it new features that developers had wanted for many years and by deprecating old features – but this was only the start. Fortran 95 added new features, including High Performance Fortran (HPF), and improved its object-oriented capabilities. Fortran 2003 then extended the object-oriented features; improved C and Fortran integration, standardizing it, and making it portable; and added a new range of I/O capabilities. Features and capabilities still on the developers' wish lists led to Fortran 2008 and concurrent computing.

I still code in Fortran for many good reasons, not the least of which is performance and readability.

Origins

Fortran was originally developed as a high-level language so code developers didn't have to write in assembly. It was oriented toward "number crunching," because that was the predominant use of computers at the time (Facebook and YouTube weren't around yet). The language was fairly simple, and compilers were capable of producing highly optimized machine language. Fortran had data types that were appropriate for numerical computations, including a complex data type (not many languages have that), and easily dealt with multidimensional arrays, which are also important for number crunching. For all of these reasons, Fortran was the language of numerical computation for many years.

The advent of C caused a lot of new numerical code to be written in C or C++, and web and systems programming also moved to other languages, such as Java, Perl, and Python. However, Fortran is definitely not dead, and a lot of numerical code is still being written today using modern Fortran.

Fortran 77

Fortran began in about 1957 with the advent of the first compiler. It was used a great deal because it was much simpler than assembly language. In my experience, Fortran 77 (F77) brought forth the features that made the language easier to use to create many algorithms. As a result, a lot of older Fortran code was rewritten for F77.

In the past, Fortran was not case sensitive, so a lot of code was written in uppercase, creating a precedent followed for many years. You will find that Fortran coders of some lineage code in uppercase. However, in this article I mix upper- and lowercase in the examples.

In F77, a very useful way to share data across functions and subroutines was the use of common blocks, in which you define multiple "named" blocks that contain variables. (They could be of mixed type, including arrays.) A typical scenario was to put the common blocks in an include file and put each function or subroutine into its own file. Each file that needed access to the common block "included" the file of common blocks.

Another feature, or limitation, of Fortran is that all arrays have to be fixed in size at compile time (no dynamic memory), so if you declare an array x(100,100), you cannot change the dimensions or size after the code has been compiled. One trick was to define one very large vector and then "give" parts of it to various portions of the code. Although a little messy, you could simulate dynamic memory if the array was large enough.

Fortran also had fixed formatting. Some languages sort of have this today, such as Python, which requires indentation. In Fortran, the first column of each line could contain a C or c, indicating the beginning of a comment line, although you couldn't put comments just anywhere in the code. It could also be used for a numerical label. Column 6 was reserved for a continuation mark, so that lines that were longer than one line could be continued. The code began in column 7. Columns 1 to 5 could be used for statement labels (Listing 1; note that the first and last three lines are not code, but guides to show how code aligns in columns).

Listing 1: Sample Fortran 77 Code

11111111112222222222

12345678901234567890123456789

-----------------------------

SUM = 0.0

D0 100 I=1,10

SUM = SUM + REAL(I)

100 CONTINUE

...

Y = X1 + X2 + X3 +

1 X4 + X5 + X6

-----------------------------

11111111112222222222

12345678901234567890123456789

By default, F77 defines variables starting with (upper- or lowercase) i, j, k, l, m, and n as integers, so they are used as do loop counters. Variables that use any other letter of the alphabet are real unless the code writer defines them to be something else (they could even define them to be integers). You could turn off this implicit definition with IMPLICIT NONE just after the program, subroutine, or function name, which forces defining each and every variable (not necessarily a bad thing).

Fortran 90

The next Fortran standard, referred to as Fortran 90 (F90), was released in 1991 as an ISO standard, and in 1992 as an ANSI standard. Fortran 90 is a huge step up from F77, with a number of new features added and a number of older features deprecated. Fortran 90 was the first big step in creating modern Fortran.

The first new feature of importance was free-form source input. You were now allowed to put the code anywhere you wanted, and you could label statements, for example:

sum = 0.0 all: do i=1,10 sum = sum + real(i) enddo all

The next big feature in F90 is my personal favorite – allocatable arrays. In Listing 2, line 3 shows how to define an allocatable array. In this case, array a is a 2D array defined by (:,:). The allocation does not occur until line 7. Along with the array allocation is a "status" that returns a nonzero value if the allocation was unsuccessful or 0 if it was successful.

Listing 2: Allocatable Arrays

01 PROGRAM TEST1 02 IMPLICIT NONE 03 REAL, ALLOCATABLE :: a(:,:) 04 INTEGER :: n 05 INTEGER :: allocate_status 06 n=1000 07 ALLOCATE( a(n,n), STAT = allocate_status) 08 IF (allocate_status /= 0) STOP "Could not allocate array" 09 ! Do some computing 10 DEALLOCATE( a ) 11 END PROGRAM TEST1

Allocatable arrays use heap memory and not stack memory, so you can use a lot more memory. For almost any large arrays, I always use allocatable arrays to make sure I have enough memory.

After performing some computations, you then deallocate the array, which returns the storage to the system. Line 9 is a comment line. Fortran 90 allows you to put a comment anywhere by prefacing it with an exclamation mark.

Another feature added to F90 was array programming, which allowed you to use array notation rather than do loops. For example, to multiply two, 2D arrays together, use c = matmul(a, b), where a, b, and c are compatible arrays. You could also use portions of an array with d(1:4) = a(1:4) + b(8:11).

Unlike CPU-specific code, typically with loop unrolling to achieve slightly better performance, Fortran coders used array notation because it was so simple to write and read. The code was very portable, because compiler writers created very high performance array intrinsic functions that adapted to various CPUs. Note that this included standard intrinsic mathematical functions such as square root, cosine, and sine.

Fortran 90 also introduced derived data types (custom data types). The simple example in Listing 3 shows how easy it is to create several derived data types (lines 8-15). The derived (customer data) type has variables (e.g., i, r, and r8), a fixed-size array (array_s, which is allocated on the stack), an allocatable array (array_h, which is allocated on the heap), and another derived type, meta_data. Using derived types within derived types allows you to create some complex and useful custom data types.

Listing 3: Derived Data Type

01 program struct_test 02 type other_struct 03 real :: var1 04 real :: var2 05 integer :: int1 06 end type other_struct 07 08 type my_struct ! Declaration of a Derived Type 09 integer :: i 10 real :: r 11 real*8 :: r8 12 real, dimension(100,100) :: array_s ! Uses stack 13 real, dimension(:), allocatable :: array_h ! Uses heap 14 type(other_struct), dimension(5) :: meta_data ! Structure 15 end type my_struct 16 17 ! Use derived type for variable "a" 18 type(my_struct) :: a 19 ! ... 20 write(*,*) "i is ",a%i 21 22 ! Structures (variables) of the the derived type my_struct 23 type(my_struct) :: data 24 type(my_struct), dimension(10) :: data_array 25 26 end program struct_test

To access a specific part or member of a derived type, you simply use a percent sign (%), as shown in line 20. You can also make an allocatable derived type (lines 23-24).

A convenient feature called modules, and generically referred to as "modular programming" [1], was added to F90. This feature allows you to group procedures and data together. Code can take advantage of a module, and you can control access to it through the use of simple commands (e.g., public, private, contains, use). For all intents and purposes, it replaces the old common blocks of F77.

Modules are extremely useful. They can contain all kinds of elements, such as parameters (named constants), variables, arrays, structures, derived types, functions, and subroutines. The simple example in Listing 4 defines the constant pi and then uses the module (line 7) to make the contents available to the program.

Listing 4: Modules

01 module circle_constant 02 real, parameter :: pi = 3.14159 03 end module circle_constant 04 05 program circle_comp 06 ! make the content of module available 07 use circle_constant 08 real :: r 09 ! 10 r = 2.0 11 write(*,*) 'Area = ', pi * r**2 12 end program circle_comp

The example in Listing 5 uses contains within a module to access content outside of the module. You can go one step further and denote public components (the default) that can be used outside the module and private components that can only be used within the module, giving you a lot more control over what can be accessed by other parts of the code.

Listing 5: Accessing External Content

01 module circle_constant 02 real, parameter :: pi = 3.14159 03 04 type meta_data 05 character(len=10) :: color 06 real :: circumference 07 real :: diameter 08 real :: radius 09 real :: area 10 end type meta_data 11 12 contains 13 subroutine meta_comp(r, item) 14 type(meta_data) :: item 15 item%diameter = 2.0 * r 16 item%area = pi * r **2 17 item%circumference = 2.0 * pi * r 18 end subroutine 19 20 end module circle_constant 21 22 program circle_comp 23 ! make the content of module available 24 use circle_constant 25 real :: r 26 integer :: iloop 27 type(meta_data), dimension(10) :: circles 28 ! 29 r = 2.0 30 circles(1)%radius = 4 31 circles(1)%color = "red" 32 call meta_comp(r, circles(1)) ! Call the module function 33 34 circles(2:10)%color = "blue" ! array operation 35 r = 4.0 36 circles(2:10)%radius = r ! array operation 37 do iloop=2,10 38 call meta_comp(r,circles(iloop)) 39 end do 40 41 end program circle_comp

POINTER was a new type of variable in F90 that references data stored by another variable, called a TARGET. Pointers are typically used as an alternative to allocatable arrays or as a way to manipulate dynamic data structures, as in linked lists.

A pointer has to be defined as the same data type and rank as the target and has to be declared a pointer. The same is true for the target, which has to have the same data type as the pointer and be declared a target. In the case of array pointers, the rank, but not the shape (i.e., the bounds or extent of the array), has to be specified. The following are simple examples of a declaration

INTEGER, TARGET :: a(3), b(6), c(9)INTEGER, DIMENSION(:),POINTER :: pt2

and multidimensional arrays:

INTEGER, POINTER :: pt3(:,:) INTEGER, TARGET :: b(:,:)

You cannot use the POINTER attribute with the following attributes: ALLOCATABLE, EXTERNAL, INTRINSIC, PARAMETER, INTENT, and TARGET. The TARGET attribute is not compatible with the attributes EXTERNAL, INTRINSIC, PARAMETER, and POINTER.

Using pointers is very simple, having only two operators (Listing 6). In some code, pointers are also used with blocks of dynamic memory (lines 13-17), for which you still have to use the allocate statement (function). In this example, PTR2 points to a single real value, and PTRA points to a block of dynamic memory for 1,000 real values. After you're done using the block of memory, you can deallocate it. In this regard, the pointers behave very much like allocatable arrays. One other common use for pointers is to divide arrays into smaller sections with the pointer pointing to that subsection (Listing 7).

Listing 6: Pointers

01 PROGRAM PTR_TEST1 02 INTEGER, POINTER :: PTR1 03 INTEGER, TARGET :: X=42, Y=114 04 INTEGER :: N 05 REAL, POINTER :: PTR2:wq:w 06 REAL, POINTER :: PTRA(:) 07 ! ... 08 PTR1 => X ! PTR1 points to X 09 Y = PTR1 ! Y equals X 10 PTR1 => Y ! PTR1 points to Y 11 PTR1 = 38 ! Y equals 38 12 13 ! Dynamic memory blocks 14 N = 1000 15 ALLOCATE( PTR2, PTRA(N) ) 16 ! Do some computing 17 DEALLOCATE(PTR2, PTRA) 18 19 END PROGRAM PTR_TEST1

Listing 7: Pointers and Arrays

01 program array_test 02 implicit none 03 real, allocatable, target :: array(:,:) 04 real, pointer :: subarray(:,:) 05 real, pointer :: column(:) 06 integer :: n 07 integer :: allocate_status 08 ! 09 n = 10 10 allocate( array(n, n), stat = allocate_status ) 11 if (allocate_status /= 0) stop "Could not allocate array" 12 ! 13 subarray => array(3:7,3:7) 14 column => array(:,8) 15 ! 16 nullify(subarray) 17 nullify(column) 18 deallocate(array) 19 20 end program array_test

A linked list [2], a sequence of "nodes" that contain information and point to the next node in the sequence, is a very useful data structure. Variations allow the node to point to the previous node. Although it's not difficult to write for specific cases, generic linked lists [3] are more difficult. One instructive example online creates a linked list manager [4].

Fortran 90 created a way of specifying precision to make the code more portable across systems. The generic term used is kind. Real variables can be 4-byte (real*4), 8-byte (real*8, usually referred to as double precision), or 16-byte (real*16). In this example, I assign various kinds of precision to variables.

real*8 :: x8 ! kind=8 or doule precision real*4 :: x4 ! kind=4 or single precision real(kind=4) :: y4 ! integer*4 :: i4 ! integer single precision (kind=4) integer(kind=8) :: i8 ! Integer double precision

By using kind or the equivalent *<n> notation, you make your code much more portable across systems.

At a high level, in addition to the features mentioned, F90 also introduced in-line comments, operator overloading, unlabeled do loops (constructs such as do, if, case, where, etc. may have names.), and the case statement.

In F90, the language developers also decided to deprecate, or outright delete, some outdated F77 features [5], including the arithmetic IF, non-integer DO parameters for control variables, the PAUSE statement, and others. You can easily discover what features have been deprecated or deleted by taking some F77 code, switching the extension to .f90, and trying to compile it with a F90 compiler.

Fortran 90 was only the start. The next two iterations – Fortran 95 and 2003 – pulled Fortran into a new era of programming languages.

Fortran 95

Very quickly after F90 was released, the Fortran standards group started working on the next evolution: Fortran 95 (F95) [6]. The standard was officially published in 1997 and was oriented toward resolving some outstanding issues in F90. However, it did add a new extension for HPF [7]. Also, features that were noted as "obsolete" in F90 were removed in F95.

The HPF extension of F90 focused on parallel computing [8]. HPF uses a data parallel model to spread computations across multiple processors, allowing the use of multicore processors in a node that would otherwise be idle. This model works well for both single-instruction, multiple-data (SIMD) and multiple-instruction, multiple-data (MIMD) techniques.

A few HPF additions were the FORALL statement, compiler directives for array data distribution, a procedure interface for non-HPC parallel procedures, and additional library routines, including data scattering and gathering.

The FORALL construct allows writing array functions that look more like the original mathematical formula and makes it a bit easier to change the code for different ranges without worrying that the arrays are conformable. A quick example is the basic portion of the four-point stencil for the 2D Poisson equation problem [9] (Figure 1).

![Four-point stencil formula [10] Four-point stencil formula [10]](images/F01.png)

Assume the domain ranges from 1 to n for both i and j. Therefore the iterations are over i = 2 to n-1 and j = 2 to n-1. You can write the iteration over the domain using array notation as follows:

a(2:n-1,2:n-1) = 0.25 * (a(1:n-2,2:n) + a(3:n,2:n) + a(2:n,1:n-2) + a(2:n,3:n))

Using forall, the same can be written as:

forall (i=2:n-1, j=2:n-1) a(i,j) = 0.25*(a(i-1,j) + a(i+1,j) + a(i,j-1) + a(i,j+1))

Using forall makes the code a little easier to read. Moreover, it's very easy to change the domain without having to modify the formula itself.

Also introduced as part of HPF were compiler directives that deal with how data is distributed for processing. They are entered into the code as comments (e.g., !HPF$). Anything immediately after !HPF$ is considered an HPF directive [11]. The nice thing about HPF directives is that if the compiler is not HPF-aware, it views the directives as comments and just moves on. This is the same approach taken by OpenACC [12] and OpenMP [13].

The HPF extensions never seemed to be popular with users, and, in fact, not many compilers offered them. While the compilers were being written, a Message Passing Interface (MPI) standard for passing data between processors, even if they weren't on the same node, was becoming popular and provided a way to utilize other processors in the same system or processors on other systems.

Additionally, OpenMP was released, providing a threading model for applications. As with HPF, OpenMP allowed coders to insert directives that told the compiler how to break up the computations into threads and exchange data between threads. These directives were code comments, so compilers that were not OpenMP-capable just ignored them.

Some observed that these two developments led to the decline of HPF, although only a few F95 compilers implemented the extensions; for example, GFortran (GNU Fortran) [14] is not a true F95-compliant compiler because it does not implement HPF, although it implements almost all of the other F95 features.

Fortran 95 added some additional features outside of HPF [15], including FORALL and nested WHERE constructs to aid vectorization, user-defined PURE and ELEMENTAL procedures, and initialization of pointers to NULL(), among others.

In F95, some features or statements were deprecated (i.e., highly recommended not to be used before the next release of the standard) [15], including banning REAL and DOUBLE PRECISION index variables in DO statements and the removal of PAUSE, ASSIGN, the controversial assigned GOTO statement, and the H descriptor.

Fortran 2003

Both F90 and F95 introduced features that allowed object-oriented programming (OOP). Fortran 2003, the next standard, added even more features to enhance the object-oriented capability.

Before Fortran 2003, you could define the basics of classes in F90 [16]. Private and public functions could be used as needed, including constructors and destructors. Several resources are available online about OOP in F90 [17] and F95 [18]. One article even compares F90 and C++ [19], so you can understand the similarities between the two.

In Fortran 2003, the ability to create type-bound procedures [20] was introduced, which makes calling functions within a class much easier. Fortran 2003 also introduced type extensions and inheritance (key aspects of OOP), as well as polymorphism and dynamic type allocation. This collection of additions really completed support for abstract data types, making OOP much easier.

To round out Fortran 2003 [21], derived type enhancements (parameterized derived types, improved control of accessibility, improved structure constructors, and finalizers) and procedure pointers were added.

Classic object-oriented languages such as C++ or Python allow you to define classes that include data and methods (functions) that operate on that data. You can instantiate (create an instance) of the class that has its own data and methods or several instances of the class that each have their own unique data and methods.

In Fortran, modules are roughly equivalent to classes. Modules can contain data and methods (functions and subroutines), but it really doesn't support the concept of separate instances of a module. To get around this, you create a module to contain the methods for the specific class with a derived type that contains the data. From this combination, you can create separate "instances" of the type that pass data to the methods in the modules.

I'll look at a quick F90 example [16] for creating and using classes by creating a module that has two public functions: One computes the area of a circle given the radius, and the other prints out the area of the circle. In the main program, I can create a derived type using the module and then use methods in the module using the derived type (Listing 8).

Listing 8: Classes and Modules

01 MODULE CLASS_CIRCLE 02 IMPLICIT NONE 03 PRIVATE 04 PUBLIC :: CIRCLE, CIRCLE_AREA, CIRCLE_PRINT 05 06 REAL :: PI = 3.1415926535897931d0 ! Class-wide private constant 07 08 TYPE CIRCLE 09 REAL :: RADIUS 10 END TYPE CIRCLE 11 12 CONTAINS 13 14 FUNCTION CIRCLE_AREA(THIS) RESULT(AREA) 15 TYPE(CIRCLE), INTENT(IN) :: THIS 16 REAL :: AREA 17 AREA = PI * THIS%RADIUS**2.0 18 END FUNCTION CIRCLE_AREA 19 20 SUBROUTINE CIRCLE_PRINT(THIS) 21 TYPE(CIRCLE), INTENT(IN) :: THIS 22 REAL :: AREA 23 AREA = CIRCLE_AREA(THIS) ! Call the circle_area function 24 PRINT *, 'Circle: r = ', this%radius, ' area = ', AREA 25 END SUBROUTINE CIRCLE_PRINT 26 END MODULE CLASS_CIRCLE 27 28 PROGRAM CIRCLE_TEST 29 USE CLASS_CIRCLE 30 IMPLICIT NONE 31 32 TYPE(CIRCLE) :: C ! Declare a variable of type Circle. 33 C = CIRCLE(1.5) ! Use the implicit constructor, radius = 1.5 34 CALL CIRCLE_PRINT(C) ! Call a class subroutine 35 36 END PROGRAM CIRCLE_TEST

Notice (lines 32-24) that the derived type is used to create the variable C; then, I can "store" the radius in C by calling the "constructor" of the class (all it does in this case is store the value), and the program calls the "method" to print out the value by passing the derived type instance C.

In Fortran 2003, you can take advantage of some new features to make the same code look more object oriented. In the example in Listing 9, type-bound procedures can be used within the module.

Listing 9: Type-Bound Procedures

01 MODULE CLASS_CIRCLE 02 IMPLICIT NONE 03 PRIVATE 04 PUBLIC :: CIRCLE, CIRCLE_AREA, CIRCLE_PRINT 05 06 REAL :: PI = 3.1415926535897931d0 ! Class-wide private constant 07 08 TYPE, PUBLIC :: CIRCLE 09 REAL :: RADIUS 10 CONTAINS 11 PROCEDURE :: AREA => CIRCLE_AREA 12 PROCEDURE :: PRINT => CIRCLE_PRINT 13 END TYPE CIRCLE 14 15 CONTAINS 16 17 FUNCTION CIRCLE_AREA(THIS) RESULT(AREA) 18 CLASS(CIRCLE), INTENT(IN) :: THIS 19 REAL :: AREA 20 AREA = PI * THIS%RADIUS**2.0 21 END FUNCTION CIRCLE_AREA 22 23 SUBROUTINE CIRCLE_PRINT(THIS) 24 CLASS(CIRCLE), INTENT(IN) :: THIS 25 REAL :: AREA 26 AREA = THIS%AREA() ! Call the type_bound function 27 PRINT *, 'Circle: r = ', this%radius, ' area = ', AREA 28 END SUBROUTINE CIRCLE_PRINT 29 END MODULE CLASS_CIRCLE 30 31 PROGRAM CIRCLE_TEST 32 USE CLASS_CIRCLE 33 IMPLICIT NONE 34 35 TYPE(CIRCLE) :: C ! Declare a variable of type Circle. 36 C = CIRCLE(1.5) ! Use the implicit constructor, radius = 1.5 37 CALL C%PRINT ! Call a type-bound subroutine 38 39 END PROGRAM CIRCLE_TEST

In the module definition, I type-bound the procedures CIRCLE_AREA and CIRCLE_PRINT to the module (lines 11-12). In the main program, once I instantiate the derived type C = CIRCLE(1.5) (line 36), I can call methods (functions) as I would a derived type (CALL C%PRINT) (line 37). This looks a bit more like OOP.

In addition to object-oriented characteristics, Fortran 2003 introduced new features to enhance its ability to interact with C and C++ libraries or code. If you have tried to integrate C and Fortran in the past, you understand the fundamental differences between the two. For one, multidimensional arrays in C/C++ are in the opposite order in Fortran. Additionally, all arguments in Fortran are passed by reference and not by value, which means C must pass Fortran arguments as pointers. Finally, by default, C arrays start with 0 (zero) and Fortran arrays start with 1. Modern Fortran allows you to define arrays starting with 0 (zero) – or any integer, including negative numbers.

Integrating Fortran and C has been notoriously difficult, although possible, and linkers will blissfully create a binary for you. Moreover, it has never been easy to create portable C/Fortran integration. Sometimes, you have to test the compilers to determine exactly how they integrate.

Fortran 2003 added a standardized way to generate procedures, derived type declaration, and global variables that are interoperable with C. Because of standardization, Fortran 2003-compliant compilers make portability of C and Fortran integration much better.

The basic model for Fortran/C integration comprises three basic parts: (1) Define Fortran "kinds" that map to C types. Remember that in F90, data types started defining the precision of variables via the kind definition. (2) Use an intrinsic module to define names: USE, INTRINSIC :: ISO_C_BINDING. (3) Use a BIND(C) attribute to specify C linkage. Discussing the details is beyond the scope of this article, but look around the web if C and Fortran integration is important.

Another set of big changes in Fortran 2003 has to do with I/O. Before Fortran 2003, all I/O was record based, which worked fine for data that had been produced by other Fortran code or for reading ASCII files. However, it doesn't work well when reading data from a file that was created by an instrument or a spreadsheet or a database. Fortran I/O had to become much more general, including the ability to do "stream" I/O. With Fortran 2003, you could now do both formatted and unformatted stream I/O.

Using stream file access has several benefits. The first is that the file will likely be smaller because there are no record markers. However, this depends on how you were performing I/O before you started using streams. Second, streams allow you to read data from files you normally would not be able to read, such as databases. Third, new I/O functions allow you to move to different "positions" within the file to perform I/O.

Unformatted stream writes have no record markers. Subsequent writes just append data to the file [22]. For example, consider two WRITE() statements that write first and then second. The first write adds 5 bytes to the file, and the second write adds 6 bytes to the file. Therefore, the next write should start at byte 12. To get the current position in the file, use INQUIRE(UNIT=<fn>, POS=mypos).

If the code did not use streams, then the writes would add a record marker after each write. Therefore, the position would be something greater than 12 bytes. If you compile the code with a compiler that supports streams, the resulting position will be 12.

Using streams, you can specify a position within a file for a READ() or WRITE() function. For example, READ(11, POS=12) string reads a string at position 12 (i.e., 12 bytes into a file). The start of a file is position 0.

A couple of quick pieces of advice when using stream output: If you use it to create an output file, older Fortran code will not be able to read it (no record markers). Only code built with compilers that implement streams will be able to read the file. Conversely, if you are reading a file written by an older version of Fortran, you can't read it using streams – you have to read it as classic Fortran with record markers.

Other features added in Fortran 2003 [21] include (1) data manipulation enhancements (allocatable components, deferred type parameters, VOLATILE attribute, explicit type specification in array constructors and allocate statements, pointer enhancements, extended initialization expressions, and enhanced intrinsic procedures). (2) I/O enhancements: asynchronous transfer, stream access (previously discussed), user-specified transfer operations for derived types, user-specified control of rounding during format conversions, named constants for preconnected units, the FLUSH statement, regularization of keywords, and access to error messages. (3) Procedure pointers. (4) Support for IEEE floating-point arithmetic and floating-point exception handling. (5) Support for international usage: access to ISO 10646 4-byte characters and choice of decimal or comma in numeric-formatted I/O. (6) Enhanced integration with the host operating system: access to command-line arguments, environment variables, and processor error messages.

The Fortran developers were not done, however. Fortran 2008 incorporates some new features to improve performance, and Fortran 2015, which, although still under development, shows some serious promise.

Fortran 2008

Fortran 2003 is to Fortran 2008 much as F90 is to F95. The revision added some corrections and clarifications to Fortran 2003 while introducing new capabilities. Probably the biggest added feature was the idea of concurrent computing [23] via Coarray Fortran [24]. Concurrent computing executes several computations during overlapping periods of time, allowing users to run sections of code at the same time. In contrast, sequential computations occur one after the other and have to wait for the previous computations to finish.

As a point of a clarification, concurrent computing is related to, but different from, parallel programming, which is so prevalent in HPC. In parallel computing, various processes run at the same time. On the other hand, concurrent computing has processes that overlap in terms of duration, but their execution doesn't have to happen at the same instant. A good way to explain the differences between serial, sequential, concurrent, and parallel is shown in Table 1 [23], which assumes you have two tasks, T1 and T2.

Tabelle 1: Serial, Sequential, Concurrent

|

Order of Execution |

Model |

|---|---|

|

T1 executes and finishes before T2 |

Serial and sequential |

|

T2 executes and finishes before T1 |

Serial and sequential |

|

T1 and T2 execute alternately |

Serial and concurrent |

|

T1 and T2 execute simultaneously |

Parallel and concurrent |

"Simultaneous" means executed in the same physical instant, and "sequential" is an antonym of "concurrent" and "parallel." In this case, sequential typically means something is performed or used serially in a particular order.

Coarray Fortran (CAF) is a set of extensions for Fortran 95/2003 that was developed outside of the Fortran standard so that experimentation could take place quickly. The extensions were adopted in Fortran 2008, with the syntax varying a little bit relative to the original CAF definition. CAF is an example of a Partitioned Global Address Space (PGAS) [25], which assumes a global address space that is logically partitioned. Each process has a portion of the address space, which can have an affinity for a particular process.

CAF uses a parallel execution model, so code performance can be improved. A draft of the Fortran 2008 standard [26] stated that "A Fortran program containing coarrays is interpreted as if it were replicated a fixed number of times and all copies were executed asynchronously. Each copy has its own set of data objects and is called an image. The array syntax of Fortran is extended with additional trailing subscripts in square brackets to give a clear and straightforward representation of access to data on other images."

Data references without square brackets are local data (local to the image). If the square brackets are included, then the data might need to be communicated between images. CAF uses a single-program, multiple data (SPMD) model.

When a coarray-enabled application starts, a copy of the application is executed on each processor. However, the images (each copy of the application) are executed asynchronously. The images are distinguished from one another by an index between 1 and n, the number of images. Notice it starts with 1 and not 0, which is perhaps influenced by the Fortran roots.

In the application code, you define a coarray with a trailing []. The coarray then exists in each image, with the same name and having the same size. They can be scalar, array, static, or dynamic and of intrinsic or derived type. Synchronization statements can be used to maintain program correctness. Table 2 shows examples of the ways you can define a coarray. Notice that scalars can be coarrays, fixed-size arrays, allocatable arrays, and derived types, and you can have coarrays with different coranks. However, you cannot have a coarray that is a constant or a pointer.

Tabelle 2: Defining Coarrays

|

Coarray Type |

Definition |

|---|---|

|

Scalar coarray |

|

|

Array coarray |

|

|

Scalar coarray with corank 3 |

|

|

Array coarray with corank 3 and different cobounds |

|

|

Allocatable coarray |

|

|

Derived type scalar coarray |

|

What is a "corank"? Variables in Fortran code can have rank, bounds, extent, size, and shape, which are defined inside the parentheses of an array declaration, as specified from F90 onward. For coarrays, the corank, cobounds, and coextents are given by data in square brackets. The cosize of a coarray is always equal to the number of images specified when the application is executed. A coarray has a final coextent, a final upper cobound, and a coshape that depends on the number of images.

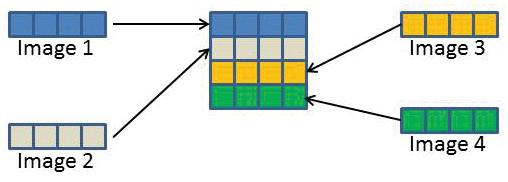

A simple example declaration is integer :: a(4)[*]. Here, each image ("process") is an integer array of 4. You can assemble a 2D (rank = 2) coarray data structure from these 1D (rank = 1) arrays. If using four images with the previous declaration, the coarray looks like the illustration in Figure 2. The arrays are stacked on top of each other because of the 1D coarray specification [*].

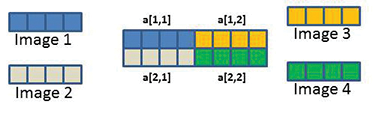

If you specify the coarray in a different manner, but still use four images, a 2D coarray can be declared as integer :: a(4)[2,*]. Each image is again a 1D integer with length (extent) 4. However, the coarray specifies that the 2D array is assembled differently (as in Figure 3). Remember that each image has it's own array that is the same as the others but can be combined using coarrays.

The use of coarrays can be thought of as opposite the way distributed arrays are used in MPI. With MPI applications, each rank or process has a local array; then, the process needs to be mapped from the local array to the global array so that local data can be mapped to the larger global array. The starting point is with global arrays and then to local arrays.

Coarrays are the opposite. You can take local arrays and combine them into a global array using a coarray. You can access the local array (local to the image) using the "usual" array notation. You can also access data on another image almost in the same way, but you have to use the coarray notation.

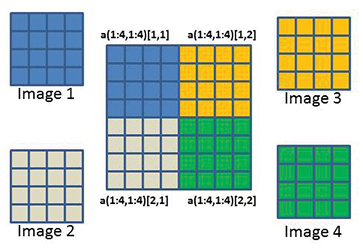

In another simple example, each image has a 2D array that is combined into a coarray to create a larger 2D array. Again, assume four images with the coarray declaration integer :: a(4,4)[2,*]. Each image has a 2D integer array a. With the coarray definition given by the square bracket notation, the "local" arrays are combined into a coarray (globally accessible) that looks like Figure 4.

If the array is local to the image, you access the array as you normally would. For example, for image 3 to access element (2,2) from the array, the statement would be something like b = a(2,2). You can always use coarray notation if needed, but in this case, you know the data is local, so you can access it using local notation. If image 1, 2, or 4 wanted to access that element (global access), then the statement would have to be b = a(2,2)[1,2].

You still have to pay attention to what image holds what data, but writing the statements to access the data is fairly easy. The key is the coarray subscripts. The declaration integer, dimension(10,4) :: a is a simple local variable with rank 2 (i.e., two indices) and has lower bounds 1 and 1. The upper bounds are 10 and 4. The shape is [10,4].

The declaration integer :: a(10,4)[3,*] would convert the array to a coarray, which adds a corank of 2 (two indices). The lower cobounds are 1 and 1. The upper cobounds are 3 and m, and the coshape is 3,m, where m = ceiling(num_images()/3).

Using coarrays, you no longer have to use MPI_SEND() and MPI_RECV() or their non-blocking equivalents for sending data from one process to another. You just access the remote data using the coarray syntax and let the underlying coarray library do the work, making multiprocess coding easier.

CAF for Fortran 2008 can be implemented in different ways. A common way is to implement it using MPI, as in GFortran [27]. Except for a small number of functions, GFortran provides coarray functionality starting in Fortran 2008 v5.1 using the coarray capability in OpenCoarrays [28]. The compiler translates the coarray syntax into library calls that then use MPI functions underneath.

OpenCoarrays

I decided to build and test the latest GFortran and OpenCoarrays on CentOS 7.2, which comes with an older version of GFortran, so my first step was to install the latest and greatest version, GCC 6.2.0. Believe it or not, building and installing a new GCC is not as difficult as it would seem. If you install as many dependencies as possible using the packages of the distribution, it's much easier. In general, I follow the directions in the GCC wiki [29] and GNU GCC installation page [30].

I installed GCC 6.2.0 into my home directory, /home/laytonjb/bin/gcc-6.2.0, and then modified the environment variables $PATH and $LD_LIBRARY_PATH so the new compilers were used instead of the older ones. The command used to build and install GCC is:

$PWD/../gcc-6.2.0/configure --prefix=$HOME/gcc-6.2.0 --enable-languages=c,c++,fortran,go --disable-multilib

The next step was to build and install an MPI library using the GCC 6.2.0 compilers. The OpenCoarray website recommended MPICH first, so I built and installed MPICH-3.2 in /home/laytonjb/bin/mpich-3.2. Again, the environment variables $PATH and $LD_LIBRARY_PATH were modified in the .bashrc file to point to MPICH binaries and libraries.

After installing the MPI library, the next step was to build and install OpenCoarray (OCA). The latest stable version as of the writing of this article was 1.7.2:

./install.sh -i /home/laytonjb/bin/opencoarray-1.7.2

The OCA build didn't take too long and was much shorter than building GCC. At this point, I'm ready to start compiling and executing some CAF code!

The first example is very simple (Listing 10). This proverbial "hello world" program for Fortran coarrays was taken from the Wikipedia page for coarrays [24].

Listing 10: Hello World with Coarrays

01 program Hello_World 02 implicit none 03 character(len=20) :: name[*] ! scalar coarray, one "name" for each image. 04 ! Note: "name" is the local variable while "name[]" accesses the 05 ! variable in a specific image; "name[this_image()]" is the same as "name". 06 07 ! Interact with the user on Image 1; execution for all others pass by. 08 if (this_image() == 1) then 09 write(*,'(a)',advance='no') 'Enter your name: ' 10 read(*,'(a)') name 11 end if 12 ! Distribute information to all images 13 call co_broadcast(name,source_image=1) 14 15 ! I/O from all images, executing in any order, but each record written is intact. 16 write(*,'(3a,i0)') 'Hello ',trim(name),' from image ', this_image() 17 end program Hello_world

Using GCC, particularly from GFortran, you have two ways to build and execute this code. The first way is to use the compile and run scripts provided by OCA. If the name of the source file is hello.f90, then you should compile and execute with:

$ caf hello.f90 -o hello $ cafrun -np 4 ./hello Enter your name: Jeff Hello Jeff from image 1 Hello Jeff from image 4 Hello Jeff from image 3 Hello Jeff from image 2

The first line compiles the code and the second line executes it. The -np 4 in the cafrun command tells the application to use four images. The second way of building and executing coarray code is to build it with the mpif90 script and execute it using the mpirun script,

mpif90 -fcoarray=lib yourcoarray.f90 -L/path/to/libcaf_mpi.a-lcaf_mpi -o yourcoarray $ mpirun -np <n> ./yourcoarray

where <n> is the number of images. The -fcoarray=lib compilation command adds in the all-important libraries.

Other, more complicated coarray Fortran code examples [31] are floating around the web, although not as many as for F90 or Fortran 2003. Because Fortran 2008 is so new, it looks like coarray examples haven't quite caught up yet.

A great place to find out what aspects of Fortran 2008 your compiler supports and does not support is the Fortran 2008 wiki [32]. In addition to CAF, Fortran 2008 has implemented submodules (additional structuring facilities for modules; supersedes ISO/IEC TR 19767:2005), Coarray Fortran, the DO CONCURRENT construct for loop iterations with no interdependencies, the CONTIGUOUS attribute to specify storage layout restrictions, the BLOCK construct (which can contain declarations of objects with construct scope), and recursive allocatable components as an alternative to recursive pointers in derived types, among other features.

Fortran 2015

The next evolution of Fortran, even though many compilers have yet to implement all of Fortran 2008, is Fortran 2015. The standard has been under discussion for several years and is still undergoing work, with the goal for a 2018 release [33].

In general, Fortran 2015 is intended to include minor revisions, some clarifications, and corrections to inconsistencies, as well as some new features. In general, Fortran 2015 has two "thrusts." The first is around improved C and Fortran integration, and the second is around enhancing coarrays.

Many of the Fortran/C interoperability standards for Fortran 2015 were aimed to help MPI 3.0. An article from "Dr. Fortran" [34] (see the "Dr. Fortran's Retirement" box) contains a discussion of the proposed Fortran/C changes and even has a couple of examples.

A second feature is new additions to coarrays. The first addition is called "teams," which are collections of images in coarray applications that work together on a particular task. An application can have several teams working on separate tasks that then communicate their results to their parent.

From teams, you can also create subteams that can be dissolved and reformed dynamically. With subteams, if one image fails, you can capture the problem, recreate a new team, and proceed with your computations. Contrast this with MPI: If a rank fails, the entire application hangs or crashes.

The definition of a "failed image" reveals a problem of much discussion (or argument). For example, an image really might not be failed but just very slow for some reason. The complication is how to define "slow" (or stalled) and how to have Fortran detect and recover from these (slow or stalled) images. Initially, the Fortran team decided to add some synchronization constructs to detect that an image has failed. Although the final determination is not yet settled, the discussion is proceeding in the correct direction.

The second part of the coarray additions is called "events." This addition is very much as it sounds; that is, events allow an image to notify another image that it has completed a task and that it can proceed.

The third part of the coarray additions is called "atomics." Created in Fortran 2008, the concept of "atomic" objects allow for invisible operations. However, they have had limited support. In Fortran 2015, the following atomic operations were added: ADD, AND, CAS (compare and swap), OR, and XOR.

The fourth and last part of the coarray additions is called "collectives," which are intrinsic procedures for performing operations across all images of the current team. The new routines that have been defined (but are subject to change) are: CO_MAX, CO_MIN, CO_SUM, CO_BROADCAST, and CO_REDUCE.

As with previous versions of Fortran, some features are targeted for deprecation in Fortran 2015, including labeled DO loops, EQUIVALENCE, COMMON, and BLOCK DATA.

The features that are finally deleted from Fortran are the arithmetic IF and the non-block DO construct, which doesn't end in a CONTINUE or END DO.

Summary

Fortran 90 catapulted Fortran from a perceived "old" language to a modern language on equal footing with any other. It retained Fortran's history of simplicity and performance, but it added features that developers had been wanting, such as free-form input, dynamic arrays, pointers, derived types, modular programming (modules), and portable precision definitions (kind).

Furthermore, a number of older features were deprecated from the Fortran standard, a refreshing process that I believe allows the language to stay simple, with a focus on numerical performance. Although taking an older Fortran 77 program and updating it to Fortran 90 involved a bit of work, the result was definitely worth the effort, and you could even find tools, including some online tools, for autoconverting code from Fortran 77 to Fortran 90.

Fortran 95 and 2003 really pushed Fortran into the modern era by dropping old and unused features and adding newer, modern, and needed features. Fortran could now handle object-oriented programming and had much better and standardized integration with C. It also incorporated some features from HPF that improved code readability. Fortran was still oriented toward numerical computations, but now it was much easier to express programming concepts and write portable code.

The 2008 revision added concurrent computing via Coarray Fortran, and Fortran 2015, although still under development, hovers just within sight, with a projected release date of 2018.