Relational databases as containers

Shippable Data

Software services packaged as containers finally reached the remotest corners of IT about three years ago. Docker [1], rkt [2], LXC [3], and the like see themselves confronted with technologies that – in part – are significantly older than they are themselves, some of them going back 15 or more years. This category includes relational databases [4], their best known representatives being Oracle [5], MySQL [6], MariaDB [7], and PostgreSQL [8].

Entry-Level Relational

Searching the web for the keyword "database" turns up a wide variety of techniques, implementations, approaches, and software variants. In this article, I focus on relational database management systems (RDBMS) and thus ignore a number of other technologies.

Candidates from the NoSQL scene [9], especially distributed storage for key-value pairs, are excluded. The same applies to databases that store their data in memory [10]. Although these younger database representatives are better suited to working with containers, relational databases remain very popular in the market [11].

Also, specialized container implementations like Shifter [12] or Singularity [13] are not covered in this article, because they play a subordinate role in the context of relational databases.

The container users' camp is already sufficiently heterogeneous and brings together both friends and challengers of Docker, rkt, and LXC. Apparently, the convergence efforts of the Open Container Initiative (OCI) [14] are not yet bearing fruit.

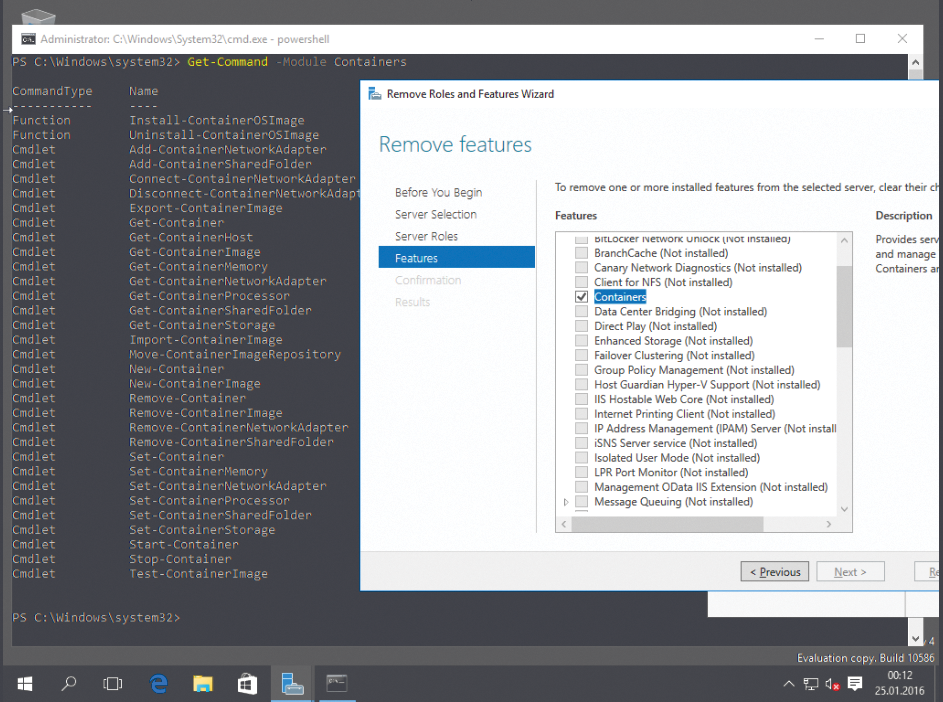

In this article, I focus on Docker, because most commercial products support this container implementation. The Internet is full of instructions on using Docker; even Microsoft relies on this container technology for its latest server version (Figure 1) [15] [16].

The Challenge

Admins supporting relational databases in Docker instances face three challenges:

- support for non-volatile storage of databases with stateless containers

- consideration of which database manufacturers provide support for commercial operations

- investigation into the compatibility of containers and databases with traditional IT environments

Anyone who has a satisfactory response to each of these requirements has a foundation for the successful use of classical database systems in container environments.

Sustainability Is Key

Traditional RDBMS implementations that forget their data every time they restart are almost worthless. Containers, on the other hand, are generally known to be stateless: They enter the same initial state after each start, although this has not quite been true for some time. Today, you have a number of methods and implementations on hand to equip Docker with memory.

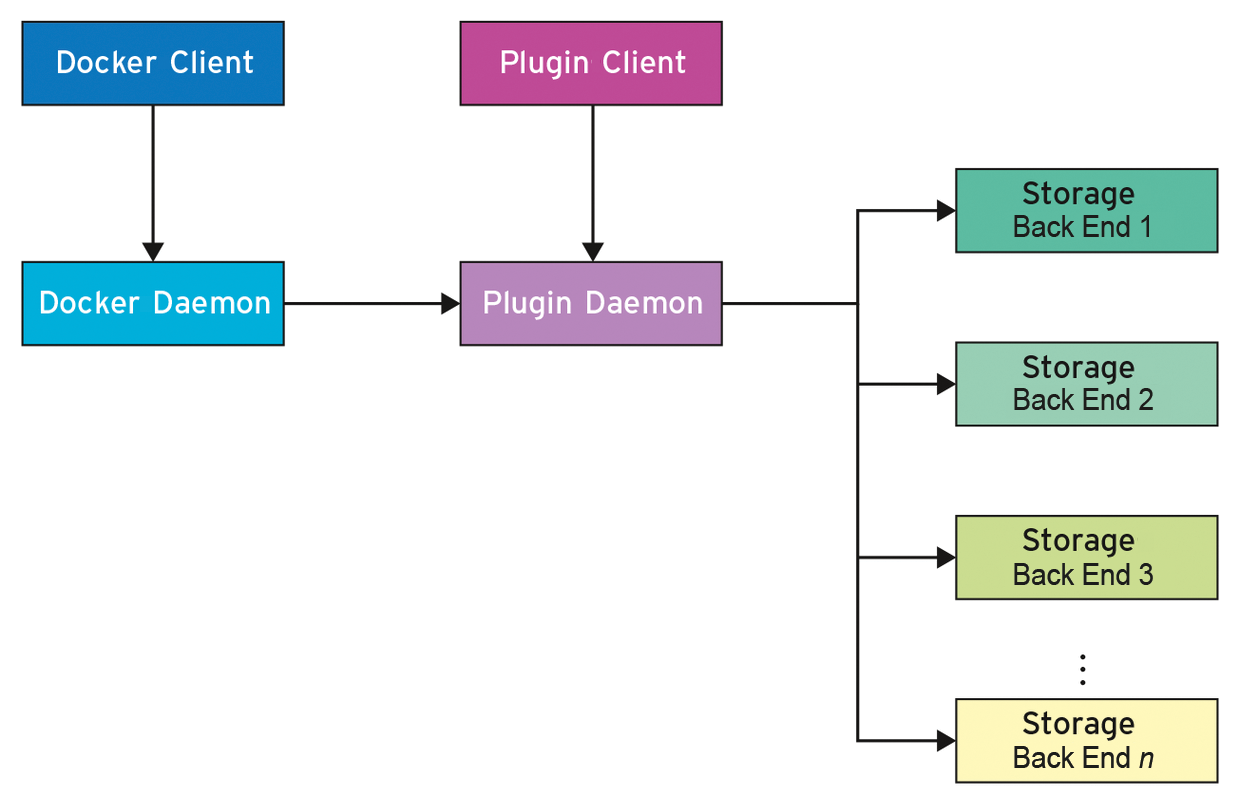

In the simplest variant, the data store is outside the container. In the early days of Docker, this was the only option for nonvolatile data storage. This approach is quite appealing but assumes that the respective applications support the mass storage protocol outside the containers. Moreover, this method is not suitable for classical database systems because they generally expect a directory (see the "Block Devices" box). The trick, then, is somehow to map a directory inside the container to one outside the container. Data volumes [17] and Docker's plugin infrastructure [18] can help (Figure 2).

In the simplest case, container admins mount a host directory into the Docker instance, which decouples the lifetime of the data stored there from the lifetime of the container. When you terminate a Docker instance, the data in that volume or directory is retained. When you start a new container, you mount the same host directory and thus have access to the latest version of the database content. However, this only works as long as all Docker instances are located on the same host. If that host fails, partial or even complete data loss is in the cards.
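The host-directory approach can be sketched as follows. This is a minimal example, assuming the official `postgres:9.6` image and the data directory `/var/lib/postgresql/data` documented for it; the host path `/srv/pgdata` and the container name `pg-demo` are made up for illustration. The script only prints the Docker commands unless you set `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: keep PostgreSQL data in a host directory so it outlives the container.
set -eu

# Assumptions for illustration only: host path and container name.
HOST_DIR="${HOST_DIR:-/srv/pgdata}"
NAME="${NAME:-pg-demo}"

# Print each command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

start_db() {
  # -v HOST:CONTAINER maps the host directory onto the image's data directory.
  # The password default is a placeholder for demos, not for production.
  run docker run -d --name "$NAME" \
    -e POSTGRES_PASSWORD="${POSTGRES_PASSWORD:-change-me}" \
    -v "$HOST_DIR:/var/lib/postgresql/data" \
    postgres:9.6
}

start_db                    # first container initializes its data in $HOST_DIR
run docker rm -f "$NAME"    # removing the container leaves $HOST_DIR untouched
start_db                    # a new container finds the existing data again
```

Because the data lives in `$HOST_DIR`, the second container starts with the database contents left behind by the first, but only on this one host.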

Distributed Storage

Distributed file storage, as provided by the Network File System (NFS) [19], GlusterFS [20], or Ceph [21], offers a remedy. In principle, classical cluster filesystems such as GFS2 [22] or OCFS2 [23] are also suitable for this purpose. However, the industry is increasingly losing interest in these two filesystems, which makes their futures questionable.

Additionally, the question arises of who manages knowledge relating to the distributed data store: the container or the host. The original implementation of the data volumes did not allow a choice. The host had to keep track of access to the distributed data store. The container instance did not see the storage used.

Since the introduction of plugins [18], Docker now directly accesses distributed data media, which makes the setup leaner on the host side and puts responsibility and administration in the hands of the container. Table 1 lists some plugins for Docker data volumes.

Table 1: Docker Plugins for Data Volumes

| Name | Manufacturer | References |
|---|---|---|
| Flocker | ClusterHQ | |
| Ceph | Red Hat | |
| REX-Ray | Dell EMC | |
| NFS | Various | |
| GlusterFS | Red Hat | |

Accessing distributed data storage through plugins almost completely decouples the (high) availability of the data from the container instance. The admin simply needs to ensure that the hosts running the containers can reach the remote data store. For further details on plugins for data volumes in Docker, see the "Pluggable Data Storage" box.
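As a rough sketch of a volume backed by remote storage, the following example uses Docker's built-in `local` volume driver with NFS mount options rather than one of the plugins from Table 1; a plugin would instead be selected by passing its name to `--driver`. The server name `nfs.example.com`, the export path, and the volume and container names are hypothetical. As before, the commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch: back a named Docker volume with an NFS export, then mount it.
set -eu

# Hypothetical values; replace them with your own NFS server and export.
NFS_SERVER="${NFS_SERVER:-nfs.example.com}"
NFS_EXPORT="${NFS_EXPORT:-/exports/pgdata}"
VOLUME="${VOLUME:-pgdata}"

# Print each command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

create_volume() {
  # The "local" driver accepts options similar to those of the mount command.
  # The volume definition is per host: create it on every host that may run
  # the container.
  run docker volume create --driver local \
    --opt type=nfs \
    --opt "o=addr=$NFS_SERVER,rw" \
    --opt "device=:$NFS_EXPORT" \
    "$VOLUME"
}

start_db() {
  # The container only sees a directory; the NFS details stay in the volume.
  run docker run -d --name pg-nfs \
    -e POSTGRES_PASSWORD="${POSTGRES_PASSWORD:-change-me}" \
    -v "$VOLUME:/var/lib/postgresql/data" \
    postgres:9.6
}

create_volume
start_db
```

Whether a given database behaves well on NFS is a separate question that this sketch does not answer; it only shows how the storage location is moved out of the container and off the host.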

Officially …

How well do Oracle, MariaDB, and PostgreSQL support Docker? The answer has two parts. Part 1 deals with the question of whether the software runs at all when the admin forces it into a container. Docker is sufficiently mature, so no problems are to be expected in everyday operation. Search for the name of your favorite RDBMS and the keyword "Docker" to find numerous installation guides.

For the database to run in Docker, both sides need to offer support, which, as of January 2017, can be assumed if you use current versions of Docker and the databases. For your first steps, the documentation for the official Docker images is recommended [35], with one exception: Oracle. Users of MariaDB, MySQL, and PostgreSQL will find good instructions there for operating the corresponding container image and deploying it with suitable applications.

However, the second part of the answer is more exciting. It is about whether the database manufacturers also officially support the hip young container technology. Numerous entries, including some Docker images [35], suggest a certain degree of operations support from database producers. However, this ranges from "on a goodwill basis, if time allows" to "24/7 with a guaranteed response time."

The whole thing is even more complicated because, in the ideal case, all the software used would require some degree of operations support, which includes, for example, the host operating system, the container technology, and the database itself. Therefore, it is advisable to limit the number of parties involved in a setup: Ideally, a single service provider supports all components.

For the open source databases, it is worthwhile asking the operating system manufacturer. For industry giants like Red Hat, SUSE, or Ubuntu, support should not be a problem. Alternatively, admins should look to the database manufacturer for help or task a service provider. In the case of PostgreSQL, for example, your only option is via third-party service providers [36] if you need enterprise-level support.

Although it is not possible to make a robust recommendation for the best operations support, clearly a sufficient number of providers are willing to help with production use of classical databases in containers.

Reluctant Oracle

Oracle offers the smallest selection of containers. Originally the software giant supported only the in-house container technology, which was based on LXC. Recently, database operators have also been able to use Docker; a corresponding support document [37] was published in mid-December 2016.

Oracle does not provide ready-made Docker images. However, you can find some very good instructions for a do-it-yourself design online [38] [39]. In the simplest case, using the buildDockerImage.sh script (Listing 1) might suffice.

Listing 1: buildDockerImage.sh

```
$ ./buildDockerImage.sh -h
Usage: buildDockerImage.sh -v [version] [-e | -s | -x] [-i]
Builds a Docker image for Oracle database.

Parameters:
   -v: version to build
       Choose one of:
   -e: creates image based on 'Enterprise Edition'
   -s: creates image based on 'Standard Edition 2'
   -x: creates image based on 'Express Edition'
   -i: ignores the MD5 checksums

* select one edition only: -e, -s, or -x

LICENSE CDDL 1.0 + GPL 2.0

Copyright (c) 2014-2016 Oracle and/or its affiliates. All rights reserved.
```
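A call to the script might be wrapped as in the following sketch, which uses only the flags shown in Listing 1. The edition shorthands `ee`, `se2`, and `xe` and the environment variables `ORACLE_VERSION` and `ORACLE_EDITION` are inventions of this example; valid version strings are whatever the script's own help output lists. The command is only printed unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch: translate an edition name into the matching buildDockerImage.sh flag.
set -eu

# Print each command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# build_image VERSION EDITION
# EDITION is a shorthand made up for this sketch: ee, se2, or xe.
build_image() {
  version="$1"
  case "$2" in
    ee)  flag=-e ;;   # Enterprise Edition
    se2) flag=-s ;;   # Standard Edition 2
    xe)  flag=-x ;;   # Express Edition
    *)   echo "unknown edition: $2 (use ee, se2, or xe)" >&2
         return 1 ;;
  esac
  # Exactly one edition flag is passed, as the help text requires.
  run ./buildDockerImage.sh -v "$version" "$flag"
}

# Do nothing unless the caller supplied a version.
if [ -n "${ORACLE_VERSION:-}" ]; then
  build_image "$ORACLE_VERSION" "${ORACLE_EDITION:-ee}"
fi
```

Mapping a name to a flag in one place keeps the "select one edition only" rule from the help text out of the calling scripts.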

Who's in Charge?

In a tough production environment, high availability (HA) often plays an important role. Classic databases use either cluster, multimaster, or master-slave concepts. The required technology either is provided by an external application or is part of the RDBMS software itself.

If you are looking to introduce containers, you need to consider HA solutions. The three HA concepts mentioned raise two questions. The first relates to who manages the active instances. The second relates to data synchronization between the components involved. One option is a data store shared by all active instances, often using Storage Area Networks (SANs) [40] or Network Attached Storage (NAS) [41]. An alternative method is data replication between the participating instances.

Earlier, I referred to a similar data storage requirement: data synchronization handled by the data volumes with distributed data storage in the background. The case of different container instances writing data at the same time, however, calls for considerably more careful thought.

Taking Up the Baton

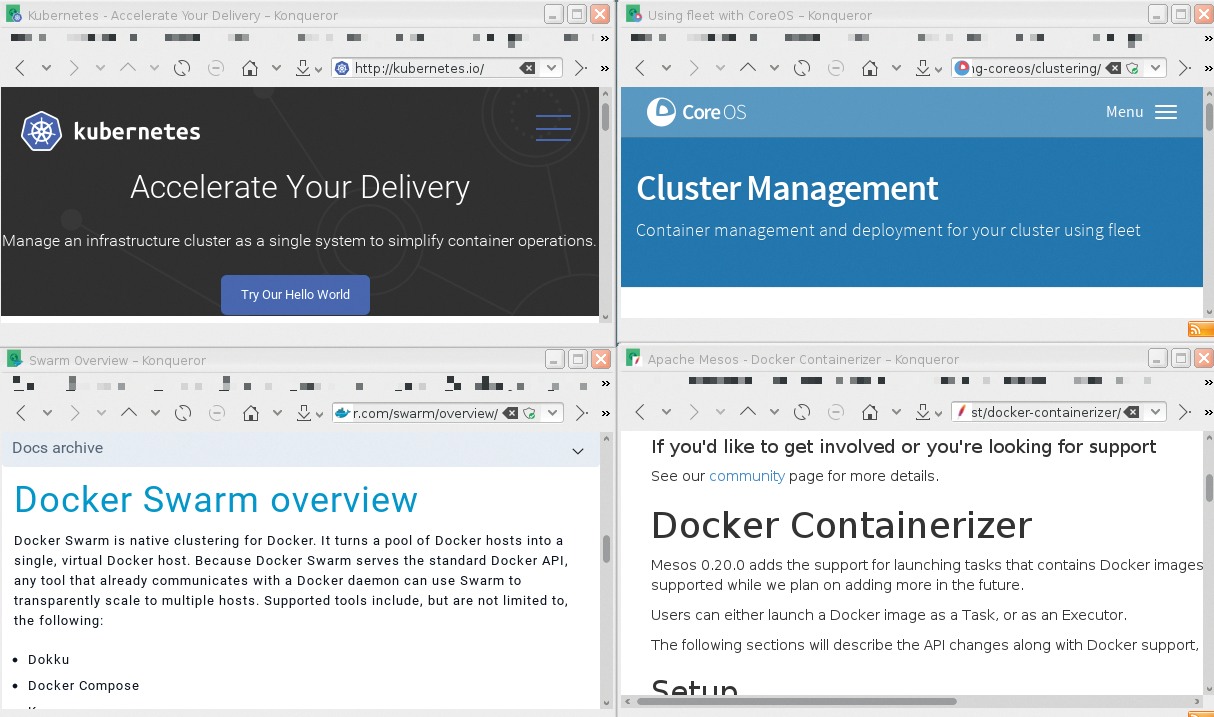

In a similar fashion, administrators simply manage the active container instances from the outside. The buzzword here is "container orchestration," and Google was among the first users. The company used Borg, the forerunner of Kubernetes, which was released in 2015 [42], above all to manage thousands of containers. Docker Swarm [33] and Apache Mesos [43] are just two of the other projects that deserve a closer look; both are courting new users at conferences (Figure 3). Originally, Fleet also belonged on this list [44], but CoreOS recently discontinued it in favor of Kubernetes.
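To give an idea of what managing instances "from the outside" looks like, here is a minimal sketch using Docker's Swarm mode (the `docker service` commands). It assumes a swarm has already been initialized and reuses the hypothetical `postgres:9.6` setup with a named volume; the service and volume names are made up. The commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch: let the orchestrator keep one database container running.
set -eu

# Hypothetical names for illustration.
SERVICE="${SERVICE:-pg}"
VOLUME="${VOLUME:-pgdata}"

# Print each command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

create_service() {
  # One replica only: several instances must not write to the same
  # PostgreSQL data directory at the same time.
  # The password default is a placeholder for demos, not for production.
  run docker service create --name "$SERVICE" \
    --replicas 1 \
    --env POSTGRES_PASSWORD="${POSTGRES_PASSWORD:-change-me}" \
    --mount "type=volume,source=$VOLUME,target=/var/lib/postgresql/data" \
    postgres:9.6
}

create_service
run docker service ps "$SERVICE"   # shows where the task was scheduled
```

If the container or its host fails, the orchestrator starts a replacement; whether that replacement finds the data again depends on the volume being reachable from the new host, as discussed in the section on distributed storage.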

Status Quo

Operating classic database systems in containers is no longer a technology problem; rather, the challenges lie in the area of processes and the employees who are responsible for setup, operation, and removal. Docker and other providers shift the focus and responsibility in the technology package upward – into the container. The underlying infrastructure, in particular the hardware, becomes secondary. Topics such as DevOps and lean management play key roles [45].

Because the demand for horizontal scalability and high distribution is growing, it is also questionable how long RDBMS will be able to lead the market: The NoSQL competitors lend themselves to containerization significantly better.